Overview

A public cloud is a pool of virtual resources—developed from hardware owned and managed by a third-party company—that is automatically provisioned and allocated among multiple clients through a self-service interface. It’s a straightforward way to scale out workloads that experience unexpected demand fluctuations.

Today’s public clouds aren’t usually deployed as a standalone infrastructure solution, but rather as part of a heterogeneous mix of environments that leads to higher security and performance; lower cost; and a wider availability of infrastructure, services, and applications.

What makes a cloud public?

- Resource allocation: Tenants outside the provider’s firewall share cloud services and virtual resources that come from the provider’s set of infrastructure, platforms, and software.

- Use agreements: Resources are distributed on an as-needed basis, but pay-as-you-go models aren’t necessary components. Some customers—like the handful of research institutions using the Massachusetts Open Cloud—use public clouds at no cost.

- Management: At a minimum, the provider maintains the hardware underneath the cloud, supports the network, and manages the virtualization software.

For example, Lotte Data Communication Company (LDCC) built a private cloud using Red Hat® OpenStack® Platform to integrate its internal systems. It worked so well that LDCC began offering the exact same cloud infrastructure to customers. The same bundle of technologies underpins both clouds, but LDCC’s customers are using a public cloud because the use, resource, and management agreements fall in line with what makes clouds public.

Who provides public clouds?

Any company can create a public cloud, and there are thousands of public cloud services all over the world. Alibaba Cloud, Amazon Web Services (AWS), Google Cloud, IBM Cloud, and Microsoft Azure are among the largest—and most popular—ones today.

Public cloud services are built on extensive data center infrastructure. Those data centers house the physical hardware and servers that deliver cloud services to users and businesses. Data centers supporting public cloud services are designed for scalability and flexibility. They dynamically allocate computing resources based on demand, allowing their users to scale computing power, data storage, and other resources as needed.
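The demand-based allocation described above can be sketched as a proportional scaling rule. This is a minimal illustration, not how any particular provider implements it: the function name, the 60% target, and the instance limits are all assumptions chosen for the example.

```python
def desired_instances(current: int, utilization_pct: int, target_pct: int = 60,
                      min_instances: int = 1, max_instances: int = 20) -> int:
    """Estimate how many instances would bring average utilization near the target.

    All names and defaults here are hypothetical, chosen only for illustration.
    """
    if current < 1:
        raise ValueError("current must be at least 1")
    if not 0 < target_pct <= 100:
        raise ValueError("target_pct must be between 1 and 100")
    # Total load in "instance-percent" units, spread over enough instances
    # that each one runs at roughly target_pct.
    total_load = current * utilization_pct
    wanted = -(-total_load // target_pct)  # integer ceiling division
    # Clamp to the allowed range so a spike cannot scale without bound.
    return max(min_instances, min(max_instances, wanted))


print(desired_instances(current=4, utilization_pct=90))  # scale out: 6
print(desired_instances(current=4, utilization_pct=30))  # scale in: 2
```

Integer percentages are used instead of floats so the ceiling step is not affected by rounding error.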

Additionally, data centers are distributed across various geographical regions. A distributed infrastructure improves performance by reducing latency, provides redundancy, and ensures data resilience in case of hardware failures or other issues. Users can access cloud resources and applications over the internet, with the underlying data center infrastructure handling the complexity behind the scenes.

How do public clouds work?

Public clouds are set up the same way as private clouds. Both types of cloud use a handful of technologies to virtualize resources into shared pools, add a layer of administrative control over everything, and create automated self-service functions. Together, those technologies create a cloud: private if it’s sourced from systems dedicated to and managed by the people using them, public if you provide it as a shared resource to multiple users. A hybrid cloud is a combination of two or more interconnected cloud environments—public or private—while a multi-cloud is a combination of two or more public cloud solutions.

All that technology not only has to integrate for the cloud to just work, it also has to integrate with any customer’s existing IT infrastructure, which is what makes public clouds work well. That connectivity relies on perhaps the most overlooked technology of all: the operating system. The virtualization, management, and automation software that creates clouds all sits on top of the operating system. And the consistency, reliability, and flexibility of the operating system directly determine how strong the connections are between the physical resources, virtual data pools, management software, automation scripts, and customers.

When that operating system is open source and designed for enterprises, the infrastructure holding up a public cloud is not only reliable enough to serve as a proper foundation, but flexible enough to scale. It's why 9 of the top 10 public clouds run on Linux, and why Red Hat Enterprise Linux is the most deployed commercial Linux subscription in public clouds such as Microsoft Azure, Amazon Web Services, Google Cloud, and IBM Cloud.

How do I use public clouds?

A public cloud is perhaps the simplest of all cloud deployments: A client needing more resources, platforms, or services simply pays a public cloud service provider by the hour or byte to have access to what’s needed when it’s needed. Infrastructure, raw processing power, storage, or cloud-based applications are virtualized from hardware owned by the vendor, pooled into shared resources, orchestrated by management and automation software, and transmitted across the internet, or through a dedicated network connection, to the client.

Cloud computing is the result of meticulously developed infrastructure, kind of like today’s electric, water, and gas utilities are the result of years of infrastructural development. Cloud computing is made available through network connections in the same way that utilities have been made available through networks of underground pipes.

Homeowners and tenants don’t necessarily own the water that comes from their pipes; don’t oversee operations at the plant generating the electricity that powers their appliances; and don’t determine how the gas that heats their home is acquired. These homeowners and tenants simply make an agreement, use the resources, and pay for what’s used within a certain amount of time.

Public cloud computing is a similarly cost-effective utility. Clients don’t own the gigabytes of storage their data uses; don’t manage operations at the server farm where the hardware lives; and don’t determine how their cloud-based platforms, applications, or services are secured or maintained. Public cloud users simply make an agreement, use the resources, and pay for what’s used.
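To make the "pay for what’s used" idea concrete, here is a small sketch of a metered bill. The rates and usage figures are invented for illustration and do not reflect any provider's actual pricing; `usage_bill` and `HYPOTHETICAL_RATES` are hypothetical names.

```python
from decimal import Decimal

# Hypothetical unit prices in dollars; real providers publish their own rates.
HYPOTHETICAL_RATES = {
    "instance_hours": Decimal("0.10"),     # per instance-hour
    "storage_gb_months": Decimal("0.02"),  # per GB stored for a month
    "egress_gb": Decimal("0.09"),          # per GB transferred out
}


def usage_bill(usage: dict[str, int], rates: dict[str, Decimal]) -> dict[str, Decimal]:
    """Multiply each metered quantity by its unit rate and add a total line."""
    lines = {item: rates[item] * quantity for item, quantity in usage.items()}
    lines["total"] = sum(lines.values(), Decimal("0"))
    return lines


bill = usage_bill(
    {"instance_hours": 300, "storage_gb_months": 500, "egress_gb": 40},
    HYPOTHETICAL_RATES,
)
print(bill["total"])  # 43.60
```

`Decimal` is used rather than `float` so that currency amounts add up exactly.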

Machine learning in the cloud

The growth of machine learning (ML) applications is driving greater consumption of public cloud computing services. ML workloads draw on the scalability, flexibility, and resources that cloud service providers offer. ML applications benefit from public cloud architecture in the following ways:

- Machine learning algorithms require significant amounts of data for training. Similarly, ML models, especially deep learning models, often demand substantial computational power for training. Public cloud services provide data storage solutions and computing resources that scale to accommodate these datasets.

- Public cloud environments are designed to scale resources up or down based on demand and adjust their pricing as resources are consumed. ML workloads can benefit from this scalability, allowing organizations to allocate more resources during intensive training phases and scale down during periods of lower demand, optimizing costs.

- Machine learning applications often need to integrate with other cloud services, such as databases, messaging queues, or analytics services. Public cloud platforms provide a wide range of services that can be seamlessly integrated into ML workflows to enhance overall functionality.
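The cost effect of scaling up for training and back down afterward can be estimated with simple arithmetic. The sketch below compares node-hours for capacity fixed at the peak against capacity that follows demand hour by hour; the demand profile is an invented example and `node_hours` is a hypothetical helper.

```python
def node_hours(hourly_demand: list[int]) -> tuple[int, int]:
    """Return (fixed, elastic) node-hours for a demand profile.

    fixed:   capacity is provisioned at the peak for every hour.
    elastic: capacity matches demand each hour.
    """
    if not hourly_demand:
        raise ValueError("hourly_demand must not be empty")
    fixed = max(hourly_demand) * len(hourly_demand)
    elastic = sum(hourly_demand)
    return fixed, elastic


# Assumed profile: 6 hours of intensive training on 8 nodes,
# then 18 hours of light inference traffic on 1 node.
demand = [8] * 6 + [1] * 18
fixed, elastic = node_hours(demand)
print(fixed, elastic)  # 192 66
```

Under these assumptions the elastic profile consumes roughly a third of the node-hours of peak provisioning. Actual savings depend on how quickly resources can be added and released and on the provider's billing granularity.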

Public clouds in hybrid environments

Enterprises are adopting fewer public- or private-only cloud distributions and more hybrid environment solutions that include bare-metal, virtualization, private, and public cloud infrastructure, as well as on-premises architecture. This allows each environment’s advantages to offset the disadvantages of another.

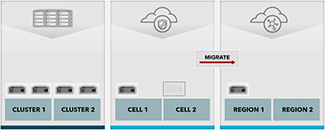

For example, imagine an enterprise running all workloads on 1 virtual cluster. That cluster would be running at full capacity, leading to poor response times and an inundation of calls or tickets to operations teams from upset application owners. This situation could be solved by rolling out another virtual cluster and automating workload balancing between the 2. This is the start of a hybrid environment.
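One simple way to picture automated balancing between 2 clusters is a greedy placement: take workloads from largest to smallest and put each on whichever cluster currently carries less load. This is only an illustrative sketch; the workload names, demand figures, and cluster labels are assumptions, and production schedulers weigh many more factors.

```python
def balance(workloads: dict[str, int], clusters: list[str]) -> dict[str, list[str]]:
    """Greedily assign workloads (name -> demand units) to the least-loaded cluster."""
    load = {cluster: 0 for cluster in clusters}
    placement = {cluster: [] for cluster in clusters}
    # Placing the largest workloads first tends to give a more even split.
    for name, demand in sorted(workloads.items(), key=lambda kv: kv[1], reverse=True):
        target = min(load, key=load.get)  # ties go to the first cluster listed
        placement[target].append(name)
        load[target] += demand
    return placement


# Hypothetical workloads with demand in arbitrary capacity units.
workloads = {"web": 40, "db": 35, "batch": 25, "cache": 20, "ci": 10}
placement = balance(workloads, ["cluster-a", "cluster-b"])
print(placement)
# {'cluster-a': ['web', 'cache', 'ci'], 'cluster-b': ['db', 'batch']}
```

With these figures, cluster-a ends up with 70 units and cluster-b with 60, instead of a single cluster carrying all 130.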

The enterprise could expand its infrastructure portfolio to include a private Infrastructure-as-a-Service (IaaS) cloud (like Red Hat® OpenStack® Platform). Workloads that don't need to run on virtual infrastructure could be migrated to the IaaS private cloud—saving money and increasing workload uptime.

To improve response times for cloud users thousands of miles away, the enterprise could place some workloads on public clouds in regions near those users. This would allow the enterprise to control costs and maintain high availability.
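Choosing a nearby region can be reduced to picking the lowest measured latency for each group of users. The sketch below assumes latency measurements are already available; the region labels, user groups, and millisecond values are all made up for illustration.

```python
def place_by_latency(latency_ms: dict[str, dict[str, float]]) -> dict[str, str]:
    """For each user group, pick the region with the lowest measured latency."""
    return {
        group: min(regions, key=regions.get)
        for group, regions in latency_ms.items()
    }


# Hypothetical round-trip latencies in milliseconds.
measurements = {
    "users-asia": {"region-us": 180, "region-eu": 240, "region-asia": 15},
    "users-europe": {"region-us": 90, "region-eu": 12, "region-asia": 230},
}
print(place_by_latency(measurements))
# {'users-asia': 'region-asia', 'users-europe': 'region-eu'}
```

In practice, latency is only one input. Cost, data residency rules, and available services in each region would also influence placement.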

Why Red Hat?

The majority of enterprises can’t afford to dedicate 100% of their business to a single environment, be it public or private cloud. But even in a hybrid environment, your developers can’t be distracted by incompatible application programming interfaces (APIs) and integration frameworks when migrating workloads. Developers need to have confidence that their apps will run the same way everywhere, which is a key outcome of an open hybrid cloud strategy.

When your hybrid cloud strategy includes a public cloud, we’re ready to help with an ecosystem of hundreds of Red Hat® Certified Cloud and Service Providers. Run the industry’s leading hybrid cloud application platform on major cloud providers with Red Hat OpenShift cloud services editions to build, deploy, and scale cloud-native applications in a public cloud. It’s this consistency that binds successful hybrid environments, allowing you to implement the cloud strategy that works for you, on your time, that meets your requirements.