Red Hat Blog

"Gather your children around the monitor." With that, Burr Sutter, Red Hat's global director of developer experience, urged Red Hat Summit attendees to get their families around the computer for the day two live demo of Red Hat technologies. Was it worth it? Yes indeed.

In less than an hour, Sutter and team delivered a demo that took us from developing a new feature, to deploying it to production in a multicloud environment, to enhancing security policy and testing failover. Note that Sutter and team did it all live and with audience participation, just as Red Hat customers do every day in production.

The demo environment emulated a large financial services company that started in North America and did "exceptionally well," expanding to London, Sydney, São Paulo, Singapore and back around to San Francisco.

The demo application was a price-guessing game running on nodes around the world. Attendees could play from their phones or desktops, with a leaderboard showing who was winning.

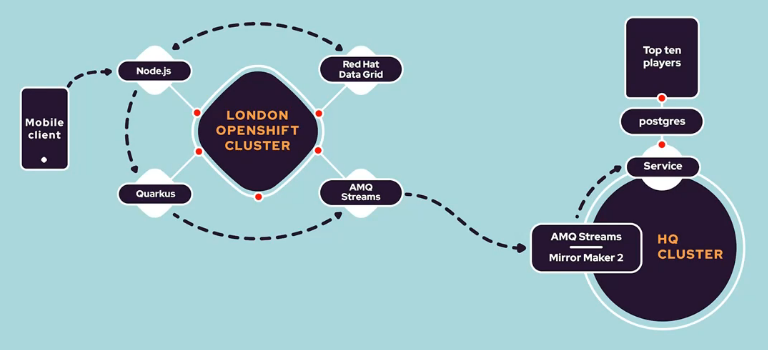

Application infrastructure

The demo application infrastructure featured a mobile client talking to a Node.js backend (part of Red Hat Runtimes), which used Red Hat Data Grid to maintain state across transactions, while the Quarkus Java runtime provided the scoring logic for the application. AMQ Streams, based on Apache Kafka, streamed data from the regional clusters to the headquarters cluster, which maintained a PostgreSQL database to track the real-time leaderboard.
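To make that data flow concrete, here is a minimal Python sketch of the headquarters side: it folds score events arriving from regional clusters into a running leaderboard. It is an illustration only, assuming a hypothetical event shape (`region`, `user`, `points`); a plain list stands in for the AMQ Streams topic and a dictionary stands in for the PostgreSQL table, so no Kafka or database client is involved.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ScoreEvent:
    """Hypothetical event a regional cluster might publish after scoring a guess."""

    region: str
    user: str
    points: int


class Leaderboard:
    """In-memory stand-in for the leaderboard table kept at headquarters."""

    def __init__(self) -> None:
        # Total points per user, summed across every region that reports in.
        self._totals: defaultdict[str, int] = defaultdict(int)

    def consume(self, events: list[ScoreEvent]) -> None:
        # In the demo this data arrived over AMQ Streams; here we just iterate a list.
        for event in events:
            self._totals[event.user] += event.points

    def top(self, n: int) -> list[tuple[str, int]]:
        # Highest total first; ties are broken by username for a stable order.
        ranked = sorted(self._totals.items(), key=lambda item: (-item[1], item[0]))
        return ranked[:n]


events = [
    ScoreEvent("london", "alice", 100),
    ScoreEvent("sydney", "bob", 150),
    ScoreEvent("sao-paulo", "alice", 75),
]
board = Leaderboard()
board.consume(events)
print(board.top(2))  # [('alice', 175), ('bob', 150)]
```

Keeping the aggregation at headquarters means each region only has to emit events; it never needs to know about players in other regions.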

Sutter then brought in Jennifer Vargas to show how the application was built using Red Hat Managed Integration and CodeReady Workspaces (based on Eclipse Che), so developers can focus on the app rather than the underlying platform, Red Hat OpenShift.

Vargas showed how part of the environment included a "legacy" virtual machine running in OpenShift as a first-class citizen alongside containerized applications. She also demoed Red Hat Managed Integration (available later this year) and the ability to drop down into code and make live changes. (Bonus demo: a missing ";" caused an error, which was fixed without needing to reload the app server. Don't try this at work, kids.)

Tools for operations

We also got a look at OpenShift Operators and where organizations can use them to enforce policy in their environments. While not as visually enticing as the game and leaderboard, this part of the demo illustrated how you can automate the deployment of updates.
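Operators are built around a control loop: compare the desired state of a resource with what is actually running, then act on the difference. The Python sketch below illustrates only that reconcile idea, using a hypothetical `WorkloadState` type; real Operators work against the Kubernetes API (for example, through the Operator SDK), which this sketch does not touch.

```python
from dataclasses import dataclass


@dataclass
class WorkloadState:
    """Hypothetical, simplified view of a deployed workload."""

    image: str
    replicas: int


def reconcile(desired: WorkloadState, actual: WorkloadState) -> list[str]:
    """Bring `actual` in line with `desired` and return the actions taken.

    Calling it again on an already-converged workload does nothing, which is
    what lets a control loop run it repeatedly and safely.
    """
    actions: list[str] = []
    if actual.image != desired.image:
        actions.append(f"update image {actual.image} -> {desired.image}")
        actual.image = desired.image
    if actual.replicas != desired.replicas:
        actions.append(f"scale {actual.replicas} -> {desired.replicas}")
        actual.replicas = desired.replicas
    return actions


desired = WorkloadState(image="game:2.0", replicas=3)
actual = WorkloadState(image="game:1.0", replicas=2)
print(reconcile(desired, actual))  # ['update image game:1.0 -> game:2.0', 'scale 2 -> 3']
print(reconcile(desired, actual))  # []
```

Because the second call returns an empty list, the loop can run on a timer or on every change event without repeating work.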

Ryan Cook showed off a tiny OpenShift cluster that literally fit in the palm of his hand: four Intel NUC systems running OpenShift. A cluster like this could serve as an edge deployment that operates independently, runs workloads closer to the user, and transmits data back to the main cluster when necessary.
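Operating independently and sending data upstream "when necessary" is commonly handled with a store-and-forward buffer. A minimal Python sketch, assuming a hypothetical `send` callable that returns `True` when the main cluster acknowledges a batch; it is not how the demo's edge cluster was actually wired up.

```python
from collections import deque
from itertools import islice
from typing import Callable


class EdgeBuffer:
    """Queue readings locally and forward them upstream when the link allows."""

    def __init__(self, send: Callable[[list[dict]], bool], batch_size: int = 100) -> None:
        if batch_size < 1:
            raise ValueError("batch_size must be at least 1")
        # `send` is a hypothetical uplink call: True means the batch was acknowledged.
        self._send = send
        self._batch_size = batch_size
        self._pending: deque[dict] = deque()

    def record(self, reading: dict) -> None:
        # Local work never waits on the network; readings are only queued.
        self._pending.append(reading)

    def flush(self) -> int:
        """Forward queued readings in batches; return how many were delivered."""
        delivered = 0
        while self._pending:
            batch = list(islice(self._pending, self._batch_size))
            if not self._send(batch):
                break  # Link is down: keep the data and retry on a later flush.
            for _ in batch:
                self._pending.popleft()
            delivered += len(batch)
        return delivered

    @property
    def pending(self) -> int:
        return len(self._pending)
```

Readings are removed from the queue only after an acknowledged send, so a dropped connection delays data rather than losing it.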

How does one manage all those edge clusters? Cook showed off a browser full of tabs tracking 17 OpenShift clusters. Not insurmountable, but not ideal, either.

Sutter then brought on Tracy Rankin, who walked us through Red Hat Advanced Cluster Management for Kubernetes and showed how it manages OpenShift clusters across multiple providers, including Microsoft Azure, Google Cloud, IBM Cloud, Amazon Web Services, OpenStack, and bare metal.

The entire open hybrid cloud is available in one central view, with handy search capabilities that allow users to drill down into resources. We also got to see a new cluster deployed live, as well as a region being disabled to test how well the app could fail over without it. Spoiler alert: The failover happened without any real interruption to users.

The price is right

After setting up the application architecture and cluster infrastructure, we moved on to running the application. Users could go to redhatkeynote.com and automatically join the game with a randomly assigned username. The challenge was to guess the prices of items like Red Hat t-shirts and other Cool Stuff, though users got to start with a $1 bill to help learn the ropes.

Operations isn't all fun and games, though, so Sutter brought out Liz Blanchard to demonstrate policy management at scale using Red Hat Advanced Cluster Management for Kubernetes. Blanchard showed how a policy could be rolled out across the environment to find systems running outdated, vulnerable images so they could be updated, and how an OpenShift Operator could be deployed to enforce the policy.

From there, Blanchard drilled down to the San Francisco cluster, where the new policy had caught several vulnerabilities. With a few clicks, the upgrade was approved and began rolling out to the cluster without any hitches. She also showed that the policy could be set up to remediate automatically rather than requiring manual intervention.
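The difference between approving a fix by hand and letting the policy act on its own can be sketched as two modes of one check. Red Hat Advanced Cluster Management policies carry a `remediationAction` of `inform` or `enforce`; the Python below only mirrors that idea with a hypothetical `Workload` record and made-up image tags, and is not the product's implementation.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical record of a workload and the container image it runs."""

    cluster: str
    name: str
    image: str


def apply_policy(workloads: list[Workload], patches: dict[str, str], mode: str = "inform") -> list[str]:
    """Report workloads running an image listed in `patches` (vulnerable -> patched).

    "inform" only reports violations, leaving a human to approve the fix.
    "enforce" also moves each violating workload to its patched image.
    """
    if mode not in ("inform", "enforce"):
        raise ValueError(f"unknown mode: {mode!r}")
    violations = [w for w in workloads if w.image in patches]
    report = [f"{w.cluster}/{w.name}" for w in violations]
    if mode == "enforce":
        for w in violations:
            w.image = patches[w.image]
    return report


fleet = [
    Workload("san-francisco", "scoring", "scoring:1.0"),
    Workload("london", "scoring", "scoring:1.1"),
]
patches = {"scoring:1.0": "scoring:1.1"}  # hypothetical vulnerable -> patched tags
print(apply_policy(fleet, patches))             # ['san-francisco/scoring']
print(apply_policy(fleet, patches, "enforce"))  # ['san-francisco/scoring']
print(apply_policy(fleet, patches))             # []
```

Running in inform mode first and switching to enforce once the fix is trusted matches the manual-then-automatic progression shown in the demo.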

After pausing the app for the policy demo, Sutter restarted it with a new wrinkle: instead of clicking numbers, users drew them on the screen, and the app used TensorFlow to recognize the handwriting.

Finally, the team pulled a cluster out of the mix to test failover, and the system handled it without a blip, shunting users away from London to Frankfurt.
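One common way to get this behavior is to give each user location an ordered list of regions and route to the first healthy one. The Python sketch below assumes a hypothetical preference table and health set; the region names come from the demo, but the ordering and the health signal are illustrative, not the demo's actual routing configuration.

```python
# Hypothetical preference lists: each location's home region first, then fallbacks.
PREFERENCES: dict[str, list[str]] = {
    "uk": ["london", "frankfurt", "san-francisco"],
    "australia": ["sydney", "singapore", "san-francisco"],
}


def route(location: str, healthy: set[str]) -> str:
    """Return the first healthy region in the preference list for `location`."""
    for region in PREFERENCES[location]:
        if region in healthy:
            return region
    raise RuntimeError(f"no healthy region for {location}")


regions = {"london", "frankfurt", "sydney", "singapore", "san-francisco"}
print(route("uk", regions))               # london
print(route("uk", regions - {"london"}))  # frankfurt
```

Removing a region from the healthy set is all it takes to shift its users to the next choice; nothing else in the table has to change.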

By the end of the demo, more than 2,600 users had connected to the application and it had processed more than 133,000 transactions.

From the cloud to the edge

What about more practical applications? The real-time price-guessing game is a great illustration of Red Hat technologies, but what about the workloads you might see in manufacturing or other industries? During the evening general sessions, we got a good look at demos that more closely mirror the workloads our customers depend on, from core datacenters to edge servers.

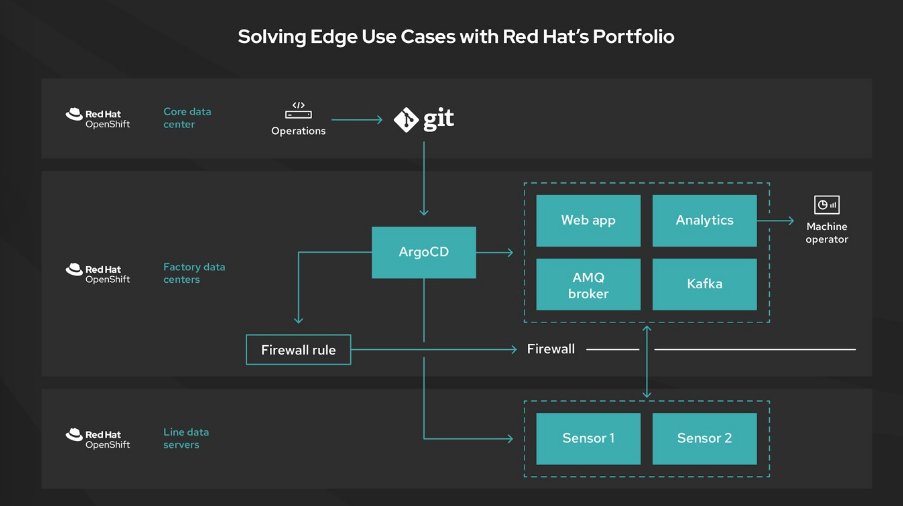

Red Hat's Sherard Griffin presented a demo spanning the core datacenter, factory datacenters, and the edge.

At the heart of each tier is Red Hat OpenShift, from the core to the edge. Applications run in factory datacenters, taking data from line datacenters. This setup lets developers push updates from the core out to factory and edge systems while maintaining centralized control, and machine operators can monitor data on the floor, make changes to sensors, and more through GitOps.

The demo used Open Data Hub, Jupyter notebooks, Tekton pipelines, Argo CD, and much more to show how machine learning models can be created at the core and pushed out to the edge.

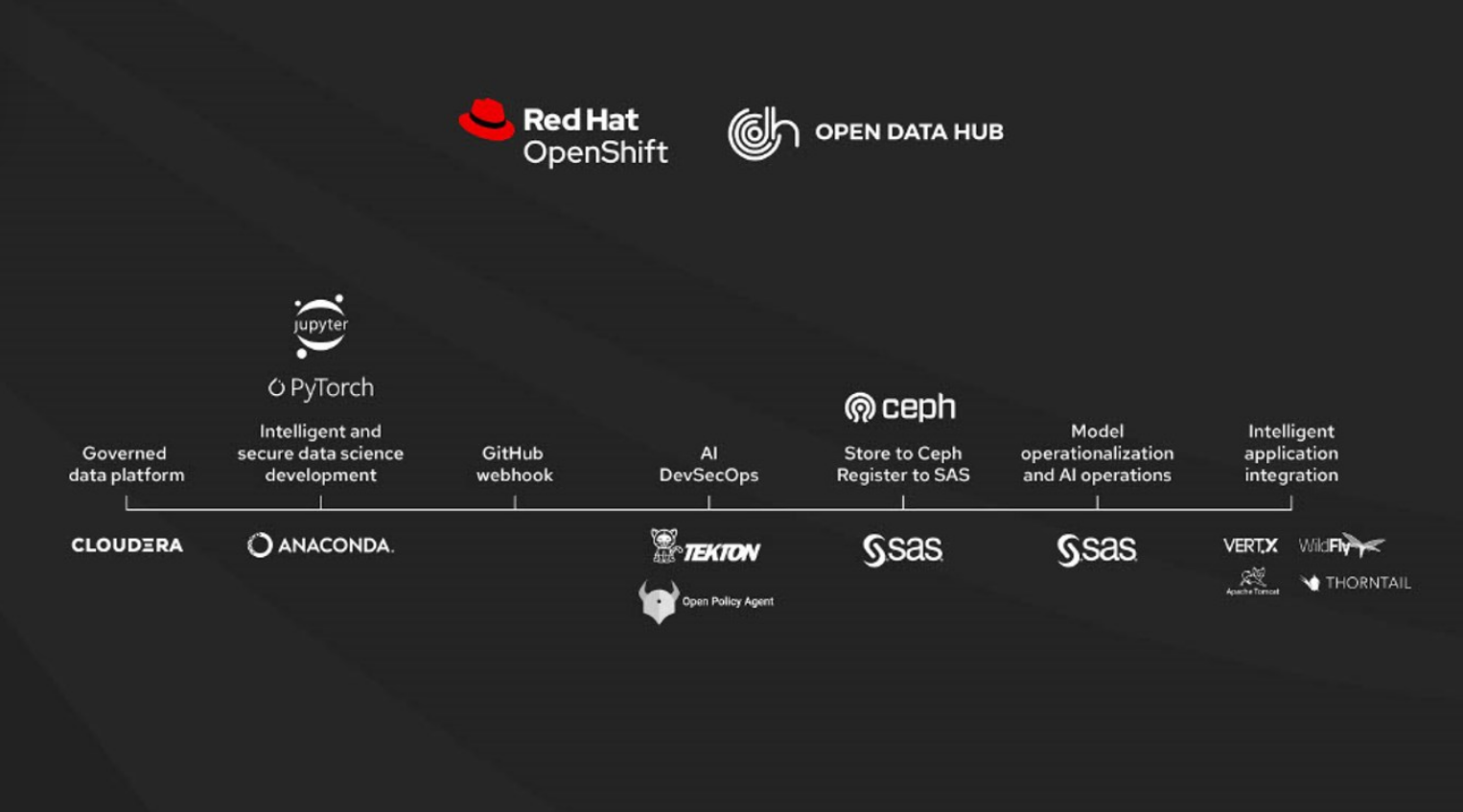

Sutter returned to walk through an end-to-end DevSecOps pipeline for AI and machine learning applications, showing how organizations can operationalize AI more reliably.

This demonstration combined products from Red Hat's portfolio, upstream open source components, and products from Red Hat partners to take AI/ML from experimentation by data scientists to production.

This demo looked at computer vision and object detection, a common workload across many industries. Sophie Watson from Red Hat's AI Center of Excellence noted that this workload requires frequent updates to react to changes in the environment and to incorporate new learning and improvements. We got a look at what goes into an image recognition system and the components it requires.

If you've never seen what it takes to create an application that recognizes real-world images from training data, it's well worth watching to see how it all comes together.

See for yourself

Sometimes words just don't suffice. If you didn't get to see our demos in real time, be sure to head over to the Red Hat Summit Virtual Experience and hit the On Demand replay.

About the author

Red Hat is the world’s leading provider of enterprise open source software solutions, using a community-powered approach to deliver reliable and high-performing Linux, hybrid cloud, container, and Kubernetes technologies.