Red Hat Blog

Time for more cgroups fun! In this post we're going to take a look at control groups (cgroups) v2, which are supported in Red Hat Enterprise Linux 8.

We may have launched a new version of RHEL. This happens sometimes.

On May 7, 2019, Red Hat officially launched Red Hat Enterprise Linux 8. Huzzah! Out of the box, RHEL 8 is not a huge change from the RHEL 7 user experience, at least not at first. For instance, we’re still using things like systemd, GNU Bash, NetworkManager and yum. (Although yum now has a whole new shiny engine under the hood.)

From a control groups perspective, things seem to be the same as always. However, I want to point out two very distinct pieces of news.

- User sessions, which are a special type of temporary slice known as a “transient slice,” now honor drop-in files again. This behavior had stopped working in RHEL 7.4, based on upstream changes to how the system handled transient slices. You can also change their settings on the fly with systemctl set-property commands. The location for drop-in files has changed when systemctl set-property is used: the drop-ins are now stored in /etc/systemd/system.control.

- RHEL 8 supports cgroups v2. This is a big one, and is the primary focus of my rambling today.

Control groups v2 - the big picture

One of the great things about open source development is that features can be designed and implemented organically and grow and change as needed. However, a drawback is that this methodology can sometimes lead to a hot mess and uncomfortable technical debt.

In the case of cgroups v1, as the maintainer Tejun Heo admits, "design followed implementation," "different decisions were taken for different controllers," and "sometimes too much flexibility causes a hindrance."

In short, not all of the controllers behave in the same manner and it is also completely possible to get yourself into very strange situations if you don’t carefully engineer your group hierarchy. Therefore, cgroups v2 was developed to simplify and standardize some of this.

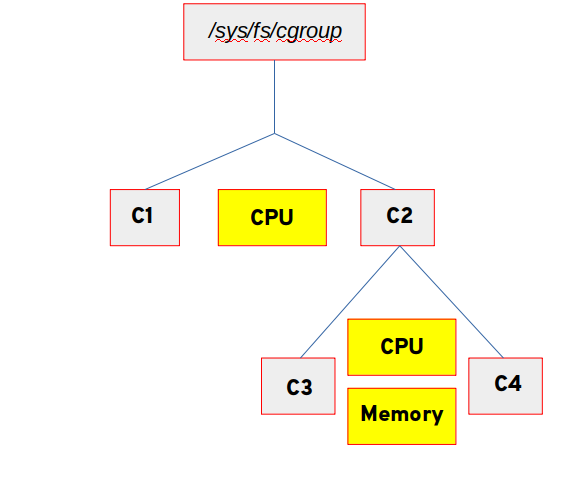

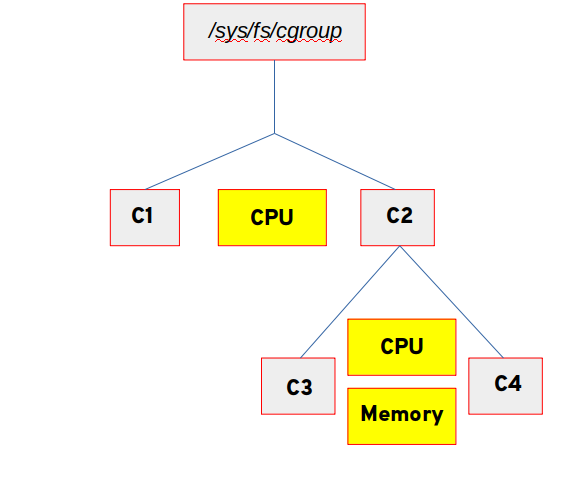

Let’s take a look at how the two versions are different. I’m going to show two different diagrams - controllers are in yellow blocks and cgroup directories have a grey background.

The diagram below shows that in the case of cgroups v1, each controller can have one or more cgroups under it, as well as nested groups within. A process can live in any or all of these cgroups (well, one per controller) and it’s up to the user to make sure that the combination of cgroups is sane.

In the case of cgroups v2, there is a single hierarchy of cgroups. Each cgroup uses the control file cgroup.subtree_control to determine what controllers are active for the children in that particular cgroup. As our online documentation puts it, “Essentially, CGroups v1 has cgroups associated with controllers whereas CGroups v2 has controllers associated with cgroups.”
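To make the role of cgroup.subtree_control a bit more concrete, here is a minimal sketch of enabling controllers for the children of a cgroup. The function name enable_controllers, the CGROUP_ROOT variable and the cgroup name demo are assumptions made for this example; on a live v2 system the root is normally /sys/fs/cgroup and writing there requires root.

```shell
# Sketch only: enable controllers for the children of a v2 cgroup.
# CGROUP_ROOT is a hypothetical knob for this example so the function can be
# pointed at a scratch directory; on a real v2 system it is /sys/fs/cgroup.
CGROUP_ROOT="${CGROUP_ROOT:-/sys/fs/cgroup}"

# enable_controllers <cgroup-path-relative-to-root> <controller>...
# Builds a request such as "+cpu +memory" and writes it to the cgroup's
# cgroup.subtree_control file.
enable_controllers() {
    cgroup_dir="$CGROUP_ROOT/$1"
    shift
    request=""
    for controller in "$@"; do
        request="${request:+$request }+$controller"
    done
    printf '%s\n' "$request" > "$cgroup_dir/cgroup.subtree_control"
}

# Example (as root, on a v2 system, with a hypothetical cgroup named "demo"):
#   enable_controllers demo cpu memory
#   cat /sys/fs/cgroup/demo/cgroup.subtree_control
```

On a real system the kernel only accepts controllers that the parent has made available to that cgroup, so the write can fail even when the syntax is right.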

The next image shows the directory structure of the cgroups that have been created. Controllers active for those groups have been placed in line on the chart. So for instance, C1 and C2 both have the CPU controller active. C3 and C4 also have the CPU controller from their parent and have the memory controller active as well.

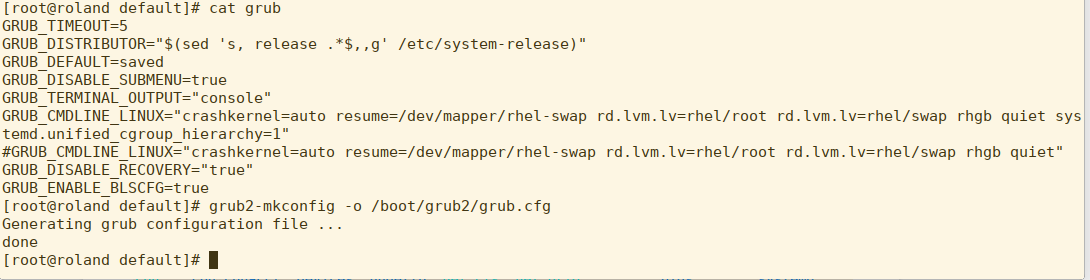

There is a document on the Customer Portal here that tracks the current state of cgroups v2. If you are interested in how cgroups will change over the lifecycle of RHEL, it’s a good article to follow. This same document gives guidance on how to activate cgroups v2. In essence, the only fully supported method is to add systemd.unified_cgroup_hierarchy=1 to the kernel boot line in grub.cfg.

The Nitty and the Gritty

Let’s take a look at the technical differences in some depth, as well as try out some manipulation and see how things work under v2.

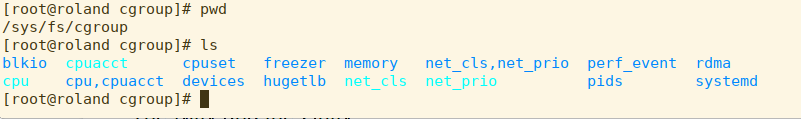

I’ve logged into roland, a RHEL 8 virtual machine. He’s currently running cgroups v1, so let’s see what that looks like.

Nothing strange there. Now, we’re going to change the kernel boot line in /etc/default/grub and rebuild grub.cfg.

As you can see, I’ve commented out the original GRUB_CMDLINE_LINUX and added a version that includes systemd.unified_cgroup_hierarchy=1. I then rebuilt the grub.cfg file using grub2-mkconfig. Remember kids, don’t edit grub.cfg by hand in RHEL 7 or RHEL 8.
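For readers who prefer to script this step, the following is a rough sketch rather than the exact procedure used above. The helper add_kernel_arg is a made-up name, and it appends the parameter to the existing GRUB_CMDLINE_LINUX line instead of commenting out the original and adding a new one. The grub2-mkconfig output path in the comments assumes a BIOS system; UEFI systems use a different location.

```shell
# Sketch only: add a kernel argument to GRUB_CMDLINE_LINUX in a grub
# defaults file. add_kernel_arg is a hypothetical helper for this example.

# add_kernel_arg <grub-defaults-file> <argument>
# Appends <argument> inside the closing quote of the GRUB_CMDLINE_LINUX line
# unless it is already there. A copy of the original is kept as <file>.bak.
add_kernel_arg() {
    file="$1"
    arg="$2"
    if grep -q "^GRUB_CMDLINE_LINUX=.*$arg" "$file"; then
        return 0
    fi
    sed -i.bak "s|^\(GRUB_CMDLINE_LINUX=\".*\)\"\$|\1 $arg\"|" "$file"
}

# Example (as root):
#   add_kernel_arg /etc/default/grub systemd.unified_cgroup_hierarchy=1
#   grub2-mkconfig -o /boot/grub2/grub.cfg   # BIOS path; assumption for this sketch
#   reboot
```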

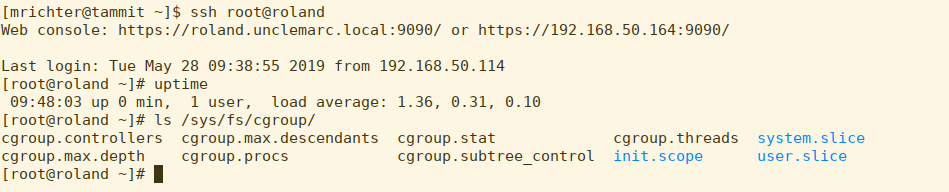

Let’s reboot roland and see what his filesystem looks like after the fact.

That’s a bit of a different look. We no longer see controllers -- in fact, we now see the default active slices at the top level (system.slice and user.slice).
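If you want a quick check that doesn't rely on eyeballing a directory listing, the filesystem type mounted at /sys/fs/cgroup is a reasonable indicator. This is a small sketch; the function name classify_cgroup_fs and its output strings are inventions for this example.

```shell
# Sketch only: map the filesystem type of /sys/fs/cgroup to a cgroup version.
# With the unified hierarchy, stat reports "cgroup2fs"; with v1, the mount
# point is a tmpfs that holds one directory per controller.

# classify_cgroup_fs <filesystem-type>
classify_cgroup_fs() {
    case "$1" in
        cgroup2fs) echo "v2 (unified hierarchy)" ;;
        tmpfs)     echo "v1 (per-controller hierarchies)" ;;
        *)         echo "unknown ($1)" ;;
    esac
}

# Example:
#   classify_cgroup_fs "$(stat -fc %T /sys/fs/cgroup)"
#   cat /sys/fs/cgroup/cgroup.controllers   # v2 only: controllers available at the root
```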

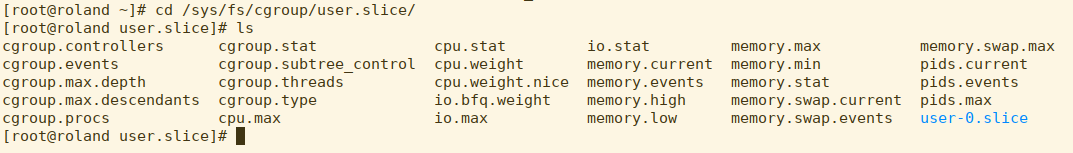

Looking at the user slice, we can see a subdirectory called user-0.slice. If you remember from previous blogs, every logged-in user gets a slice under the top level user slice. These are not identified by names, but by the UID. user-0.slice, therefore, is everyone’s favorite superuser, root.

Let’s see what happens when I log in as myself in another terminal.

A new subdirectory has been created for user-1000.slice, which matches my current UID.
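The naming rule is simple enough to sketch in a couple of lines. The helper user_slice_for_uid is a hypothetical name used only here; the path in the example assumes the v2 layout described in this post.

```shell
# Sketch only: derive the per-user slice name from a numeric UID.

# user_slice_for_uid <uid>
user_slice_for_uid() {
    printf 'user-%s.slice\n' "$1"
}

# Example: find your own slice directory on a v2 system
#   ls -d "/sys/fs/cgroup/user.slice/$(user_slice_for_uid "$(id -u)")"
```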

So far, none of this is unreasonable or in violation of any of the things we’ve learned already.

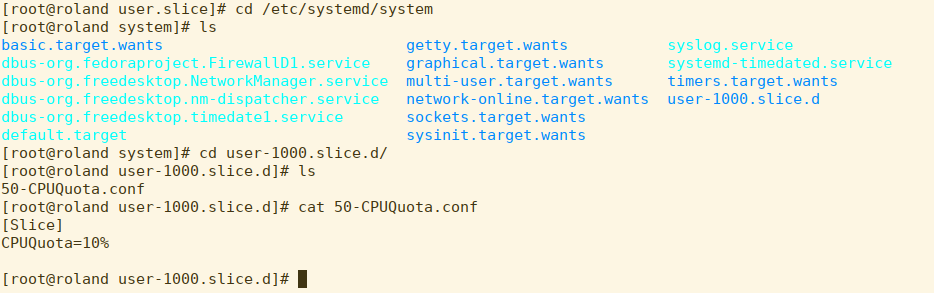

Now because I have a history of using roland for very processor intensive work, the owner of the system has decreed that I be given a rather harsh quota. If we look, we can see that there is a drop-in file for this user slice that has been created by that mean system administrator.

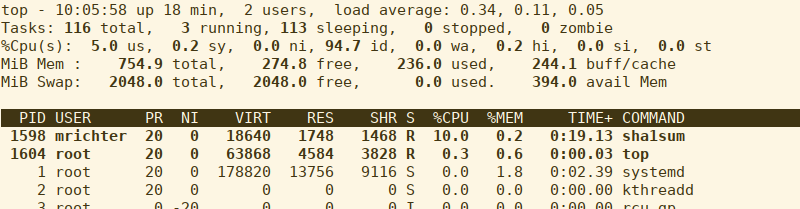

This restricts UID 1000 to 10% of one CPU. Let’s see if it’s active. I start up foo.exe, which will gulp as much CPU as it can get and then check top:
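For reference, a drop-in of this kind might look like the output of the sketch below. The helper name write_cpu_quota_dropin, the file name 50-CPUQuota.conf and the target directory are assumptions for illustration; the actual file on roland may be named or located differently.

```shell
# Sketch only: write a CPUQuota drop-in for a slice.
# The file name 50-CPUQuota.conf is an assumption for this example.

# write_cpu_quota_dropin <unit-directory> <slice-name> <percent>
# Creates <unit-directory>/<slice-name>.d/50-CPUQuota.conf containing a
# [Slice] section with the requested CPUQuota.
write_cpu_quota_dropin() {
    dropin_dir="$1/$2.d"
    mkdir -p "$dropin_dir"
    printf '[Slice]\nCPUQuota=%s%%\n' "$3" > "$dropin_dir/50-CPUQuota.conf"
}

# Example (as root; the directory is an assumption):
#   write_cpu_quota_dropin /etc/systemd/system user-1000.slice 10
#   systemctl daemon-reload
```

A quota set with systemctl set-property, rather than by hand, ends up under /etc/systemd/system.control instead.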

Well, that’s working. What a sysadmin can do now is change this value to update the quota immediately, without a user losing any running processes.

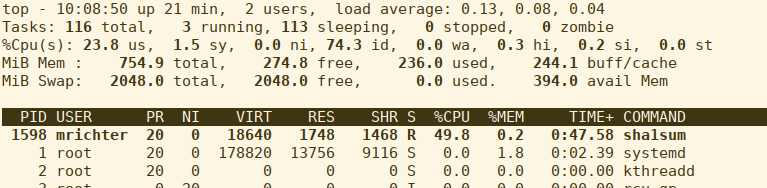

Our admin changes the quota to 50%:

We check usage:

Let’s look at the internal changes going on in the virtual filesystem:

In this shot, we see that under user.slice, the default cpu.max (which is what CPUQuota actually sets) has no limit over a period of 100000 microseconds. Once the quota is set, the child slice user-1000.slice has a cpu.max of 50000 microseconds per 100000-microsecond period - in other words, 50% of one CPU’s time is the maximum allowed usage.
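The arithmetic is easy to check by hand, but here is a small sketch that turns the contents of a cpu.max file into a percentage. The function name cpu_max_to_percent is made up for this example, and it assumes the two-field "quota period" format described above.

```shell
# Sketch only: convert a cpu.max value ("<quota> <period>") to a percentage
# of one CPU. A quota of "max" means no limit.

# cpu_max_to_percent "<quota> <period>"
cpu_max_to_percent() {
    quota="${1%% *}"    # text before the first space
    period="${1##* }"   # text after the last space
    if [ "$quota" = "max" ]; then
        echo "unlimited"
    else
        echo "$(( $quota * 100 / $period ))%"
    fi
}

# Example:
#   cpu_max_to_percent "$(cat /sys/fs/cgroup/user.slice/user-1000.slice/cpu.max)"
```

Integer division is used, so fractional percentages are truncated.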

Finally, let’s talk about determining what cgroup a process might be living in, as well as a new “rule” for v2 and attaching processes.

There’s quite a bit to unpack in this screen cap, so let’s take it slowly.

As you can see, the user-1000.slice actually has two items under it. We’re going to ignore the user@1000.service for now and look at session-3.scope.

A scope lives under a slice and inherits the settings -- it’s a very temporary part of the hierarchy. With cgroups v1, a process could be attached at almost any part of the hierarchy. With cgroups v2, a process MUST live on a “leaf” - in other words, part of the hierarchy that has no children. In the case of our current user here, that would be the session-3.scope. The PIDs of the processes in a cgroup are listed in cgroup.procs - as we can see, there were 5 PIDs in the scope when I ran the cat command (cat being one of them).
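Another way to find out where a process lives is to ask the process itself: /proc/<pid>/cgroup lists its cgroup membership, and under v2 the entry for the unified hierarchy starts with "0::". The sketch below pulls out that path; the function name v2_cgroup_path is an assumption for this example.

```shell
# Sketch only: extract the v2 cgroup path from /proc/<pid>/cgroup content.
# The unified-hierarchy entry has the form "0::<path>".

# v2_cgroup_path
# Reads /proc/<pid>/cgroup content on stdin and prints the v2 path, if any.
v2_cgroup_path() {
    sed -n 's/^0:://p'
}

# Example: which cgroup is my current shell in?
#   v2_cgroup_path < /proc/self/cgroup
#   cat "/sys/fs/cgroup$(v2_cgroup_path < /proc/self/cgroup)/cgroup.procs"
```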

To put a process into a cgroup by hand, you can echo the PID into the appropriate cgroup.procs file. If you try to do this for a member of the hierarchy that is not a leaf, it will fail. Let’s look at our v2 hierarchy again.

It is legal to move a process into C1, C3 or C4. Attempting to put a process into C2 will fail, as it has child cgroups.
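As a sketch of that rule, the helpers below check whether a cgroup directory is a leaf before writing a PID into its cgroup.procs. The names is_leaf and move_pid are hypothetical, and the check simply mirrors the leaf rule as described in this post; on a real system the kernel makes the final decision and the write needs appropriate privileges.

```shell
# Sketch only: refuse to attach a PID to a cgroup that has child cgroups.

# is_leaf <cgroup-directory>
# Succeeds if the directory contains no subdirectories.
is_leaf() {
    for entry in "$1"/*/; do
        [ -d "$entry" ] && return 1
    done
    return 0
}

# move_pid <cgroup-directory> <pid>
# Writes the PID to cgroup.procs only when the target is a leaf.
move_pid() {
    if ! is_leaf "$1"; then
        echo "refusing: $1 has child cgroups" >&2
        return 1
    fi
    echo "$2" > "$1/cgroup.procs"
}

# Example (as root, using the C1..C4 layout from the diagram):
#   move_pid /sys/fs/cgroup/C2/C3 "$$"   # allowed, C3 is a leaf
#   move_pid /sys/fs/cgroup/C2 "$$"      # refused, C2 has children
```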

Most of the time, you probably won’t be too concerned about manually moving a process into a cgroup, but it’s always a possibility for more complex use cases.

Should I be using cgroups v2?

That’s a great question. Glad you asked it.

RHEL 8 ships with cgroups v1 as the default. There’s some good reasoning behind this. Probably the most important is that the following packages, as of RHEL 8.0, require version 1:

- libvirt
- runc
- Kubernetes

So if your RHEL 8 system is hosting containers or KVM virtual machines right now, it’s advised to stick with cgroups v1. Now, there are Bugzillas currently open to fully support all of these use cases in RHEL 8, but they were not able to be completed by the launch date. Also, the intent is for v1 to remain the default “out of the box” experience. Version 1 will continue to be available as part of RHEL 8.

If your systems are running workloads that don’t require those features, cgroups v2 may offer a more straightforward way to manage your resources. If you are developing services and applications, this is a great opportunity to see how they interact with v2. I believe that we will see all use cases supported under v2 under RHEL 8 eventually, so it may be worth investing the learning time now so that you can hit the ground running. You can always consider cgroups v2 a technical preview, if you will, even though the feature is actually production ready and fully supported.

If this is the first time you’re reading my blog series on Control Groups (cgroups) then allow me to offer you the links to the prior entries in the series. It’s a great review of where we’ve been.

“World Domination With Cgroups” series:

Part 1 - Cgroup basics

Part 2 - Turning knobs

Part 3 - Thanks for the memories

Part 4 - All the I/Os

Part 5 - Hand rolling your own cgroup

Part 6 - cpuset

As always, thanks for spending some time with us today and enjoy your quest towards World Domination!

Note: information about drop-in files has been edited as of April 24, 2020.

About the author

Marc Richter (RHCE) is a Principal Technical Account Manager (TAM) in the US Northeast region. Prior to coming to Red Hat in 2015, Richter spent 10 years as a Linux administrator and engineer at Merck. He has been a Linux user since the late 1990s and a computer nerd since his first encounter with the Apple 2 in 1978. His focus at Red Hat is RHEL Platform, especially around performance and systems management.