Red Hat blog

In part 4 of a series on Ceph performance, we take a look at RGW bucket sharding strategies and performance impacts.

Ceph RGW maintains an index per bucket, which holds the metadata of all the objects that the bucket contains. RGW needs the index to provide this metadata when it's requested. For example, listing bucket contents pulls up the stored metadata. The index is also used to maintain a journal for object versioning, bucket quota metadata, multi-zone synchronization metadata, and so on. In a nutshell, the bucket index stores some useful pieces of information. The bucket index does not affect read operations on objects, but it does add extra operations when writing and modifying RGW objects.

Writing and modifying bucket indices at scale has some implications. First, there is a limit to the amount of data that can be stored in a single bucket index object, because the underlying RADOS object key-value interface used for the bucket index is not unlimited, and by default only a single RADOS object is used per bucket. Second, large index objects can lead to performance bottlenecks, because all writes to a heavily populated bucket end up modifying the single RADOS object backing the bucket index.

To tackle the problems associated with very large bucket index objects, a bucket index sharding feature was introduced in RHCS 2.0. With this feature, every bucket index can be spread across multiple RADOS objects, so the number of objects that a bucket can hold scales with the number of index objects (shards).
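To make the idea of spreading one index across several shards concrete, here is a minimal sketch. It assumes index entries are placed by hashing the object key modulo the shard count; `zlib.crc32` is only a stand-in for illustration and is not the hash RGW uses, and the key names are hypothetical.

```python
import zlib
from collections import Counter


def shard_for_key(object_key: str, num_shards: int) -> int:
    """Map an object key to an index shard (illustrative hash, not RGW's own)."""
    if num_shards < 1:
        raise ValueError("num_shards must be at least 1")
    return zlib.crc32(object_key.encode("utf-8")) % num_shards


def shard_histogram(keys, num_shards: int) -> Counter:
    """Count how many index entries would land on each shard."""
    return Counter(shard_for_key(key, num_shards) for key in keys)


# Hypothetical object names, spread over 16 index shards.
keys = [f"photos/img-{i:06d}.jpg" for i in range(100_000)]
hist = shard_histogram(keys, 16)
print(f"shards used: {len(hist)}, "
      f"min entries: {min(hist.values())}, max entries: {max(hist.values())}")
```

With more shards, each write touches a smaller index object, which is why the number of objects a bucket can hold grows with the shard count.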

However, this feature was limited to newly created buckets and required planning ahead for the future object population of each bucket. To alleviate this, a bucket resharding administrator command was added, which modifies the number of bucket index shards for existing buckets. With this manual approach, however, resharding was typically done only after symptoms of degraded performance were seen in the cluster. Manual resharding also required quiescing writes to the bucket during the resharding process.

The significance of dynamic bucket resharding

RHCS 3.0 introduced a dynamic bucket resharding capability. With this feature, bucket indices now reshard automatically as the number of objects in the bucket grows. You do not need to stop reading or writing objects to the bucket while resharding is happening. Dynamic resharding is a native RGW feature: RGW automatically identifies a bucket that needs to be resharded when the number of objects in that bucket exceeds 100K per index shard, and schedules resharding for that bucket by spawning a special thread that is responsible for processing the scheduled reshard operation. Dynamic resharding is now enabled by default, and no action is needed by the administrator to activate it.
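The threshold check described above can be sketched in a few lines. This is a simplified model, not RGW's implementation: it assumes the 100K limit applies per index shard (which matches the default of the `rgw_max_objs_per_shard` option), and the function name is hypothetical.

```python
MAX_OBJS_PER_SHARD = 100_000  # assumed per-shard threshold (the 100K figure above)


def needs_reshard(num_objects: int, num_shards: int,
                  max_objs_per_shard: int = MAX_OBJS_PER_SHARD) -> bool:
    """Return True when the average shard holds more objects than the threshold."""
    if num_shards < 1:
        raise ValueError("num_shards must be at least 1")
    return num_objects > num_shards * max_objs_per_shard


# A bucket with 16 index shards stays under the threshold at 800K objects...
print(needs_reshard(800_000, 16))    # False
# ...but crosses it once it holds more than 1.6 million objects.
print(needs_reshard(1_700_000, 16))  # True
```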

In this post, we will drill down into the performance impact associated with the dynamic resharding capability and understand how some of it can be minimized using pre-sharded buckets.

Test Methodology

To study the performance implications of storing a large number of objects in a single bucket, as well as of dynamic bucket resharding, we intentionally used a single bucket for each test type. The buckets were created using default RHCS 3.3 tunings. The tests consist of two types:

- Dynamic bucket resharding test, where a single bucket stored up to 30 million objects
- Pre-sharded bucket test, where the bucket was populated with approximately 200 million objects

For each type of test, the COSBench test was divided into 50 rounds, where each round wrote for 1 hour, followed by 15 minutes of read and RWLD (70% Read, 20% Write, 5% List, 5% Delete) operations, respectively. As such, during the entire test cycle, we wrote over 245 million objects across two buckets.

Dynamic Bucket Resharding: Performance Insight

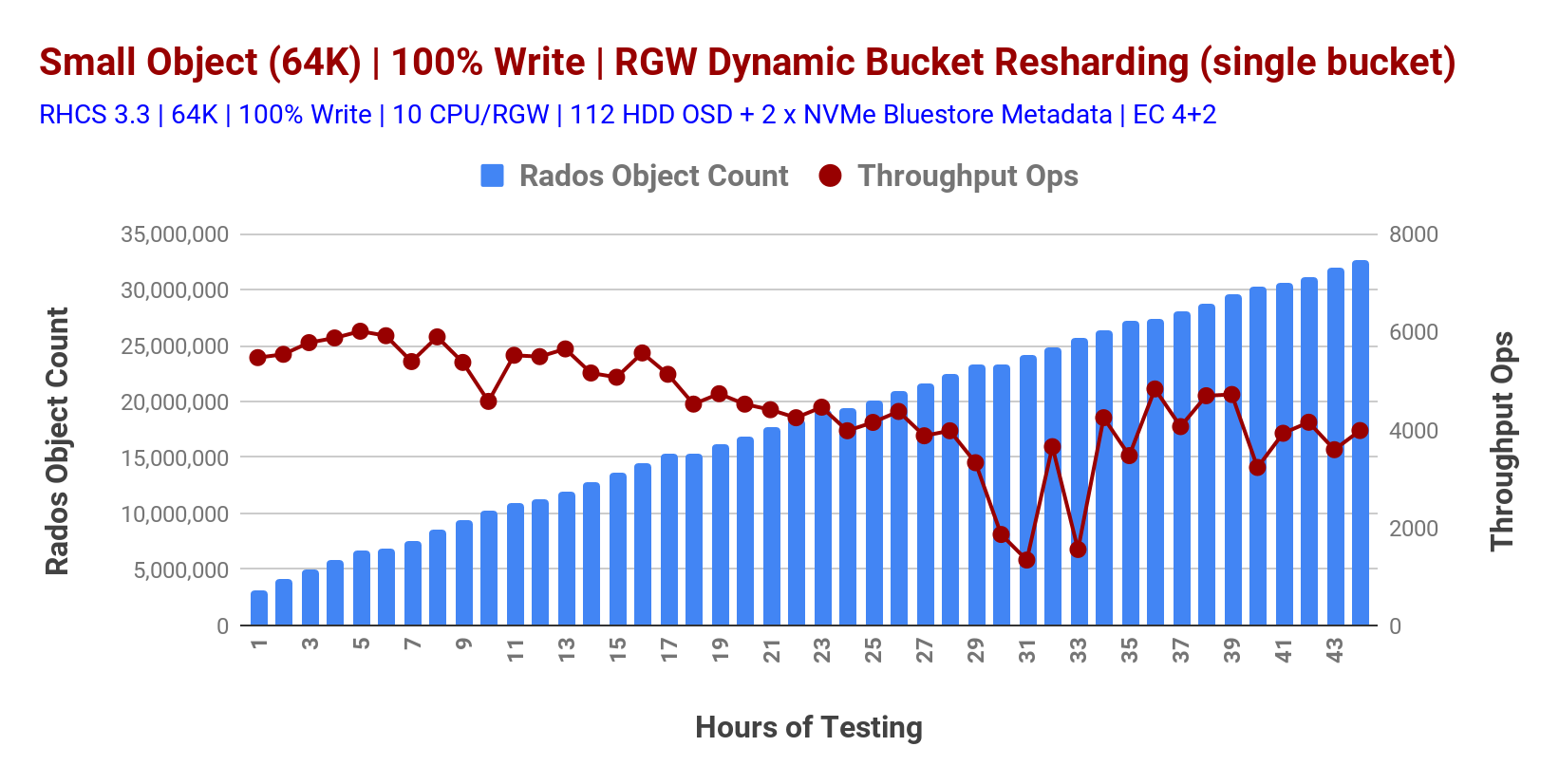

As explained above, dynamic bucket resharding is a default feature in RHCS, which kicks in when the number of stored objects in the bucket crosses a certain threshold. Chart 1 shows the change in performance while continuously filling up the bucket with objects. The first round of testing delivered ~5.4K Ops while storing ~800K objects in the bucket under test.

As the test rounds progressed, we kept filling the bucket with objects. Test round 44 delivered ~3.9K Ops, by which point the bucket object count had reached ~30 million. In line with the growth in object count, the bucket shard count also increased from 16 (the default) at round 1 to 512 at the end of round 44. The sudden plunge in throughput (Ops) shown in Chart 1 is most likely attributable to RGW dynamic resharding activity on the bucket.
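To put the round 1 and round 44 figures in perspective, the short calculation below derives the relative throughput change and the average number of objects per index shard. It uses only the approximate numbers quoted above, so the results are rough estimates.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent."""
    return (after - before) / before * 100.0


# Approximate figures quoted in the text.
round1_ops, round44_ops = 5_400, 3_900
round1_objects, round1_shards = 800_000, 16
round44_objects, round44_shards = 30_000_000, 512

print(f"throughput change: {percent_change(round1_ops, round44_ops):.1f}%")   # -27.8%
print(f"objects per shard, round 1:  {round1_objects / round1_shards:,.0f}")   # 50,000
print(f"objects per shard, round 44: {round44_objects / round44_shards:,.0f}") # 58,594
```

Even at ~30 million objects, the average shard stays well below 100K entries, which suggests the shard count kept pace with the growth of the bucket.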

Chart 1: RGW Dynamic Bucket resharding

Pre-Sharded Bucket: Performance Insight

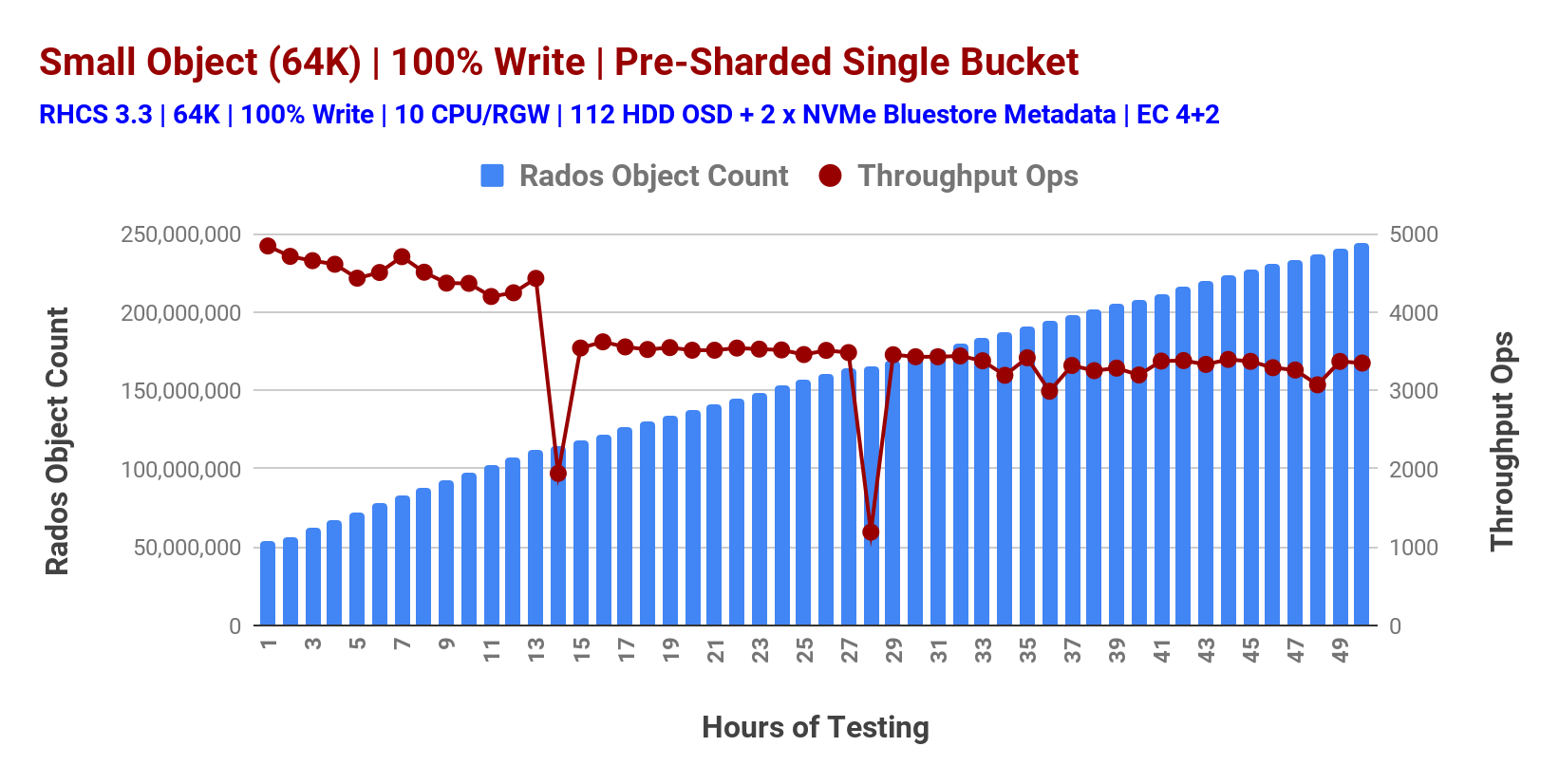

The non-deterministic performance with an overly populated bucket (Chart 1) led us to the next test type, where we pre-sharded the bucket before storing any objects in it. This time we stored over 190 million objects in the pre-sharded bucket and measured the performance over time, as shown in Chart 2. With the pre-sharded bucket we observed stable performance. However, there were two sudden plunges in performance, at the 14th and 28th hours of testing, which we attribute to RGW dynamic bucket resharding.

Chart 2: Pre-Sharded Bucket

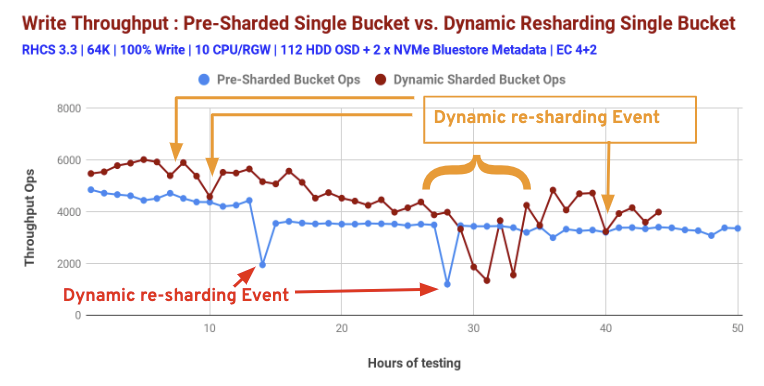

Chart 3 shows a head-to-head performance comparison of the pre-sharded and the dynamically sharded bucket. Based on the post-test bucket statistics, we believe that the sudden plunges in performance in both categories were caused by dynamic resharding events.

As such, pre-sharding the bucket helped achieve deterministic performance. From an architectural point of view, here is some guidance:

- If the application's object storage consumption pattern is known, specifically the expected number of objects per bucket, pre-sharding the bucket generally helps.
- If the number of objects to be stored per bucket is unknown, the dynamic bucket resharding feature does the job automagically. However, it imposes a minor performance tax at the time of resharding.
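When the expected object count is known, a starting shard count for pre-sharding can be estimated with simple arithmetic. The sketch below is one possible approach, not the sizing used in these tests: it assumes a target of 100K objects per shard, `headroom` is a hypothetical safety factor, and the bucket name is made up. The helper only builds the argument list for `radosgw-admin bucket reshard`; it does not run it.

```python
import math


def planned_shards(expected_objects: int, max_objs_per_shard: int = 100_000,
                   headroom: float = 1.0) -> int:
    """Estimate the index shard count needed to keep each shard under the target."""
    if expected_objects < 0 or max_objs_per_shard < 1 or headroom <= 0:
        raise ValueError("invalid sizing inputs")
    return max(1, math.ceil(expected_objects * headroom / max_objs_per_shard))


def reshard_command(bucket: str, num_shards: int) -> list:
    """Build (but do not execute) a radosgw-admin reshard command line."""
    return ["radosgw-admin", "bucket", "reshard",
            f"--bucket={bucket}", f"--num-shards={num_shards}"]


# Example: a bucket expected to hold about 200 million objects.
shards = planned_shards(200_000_000)
print(shards)
print(" ".join(reshard_command("example-bucket", shards)))
```

Sizing for the expected final object count up front is what lets a pre-sharded bucket avoid most resharding events during ingest.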

Our testing methodology exaggerates the impact of these events at the cluster level. During the test each client writes to a distinct bucket, and each client tends to write objects at a similar rate. As a result, the buckets the clients are writing to surpass the dynamic sharding thresholds at similar times. In real-world environments, it is more likely that dynamic sharding events would be better distributed in time.

Chart 3: Dynamic Bucket resharding and Pre-sharding bucket performance comparison: 100% Write

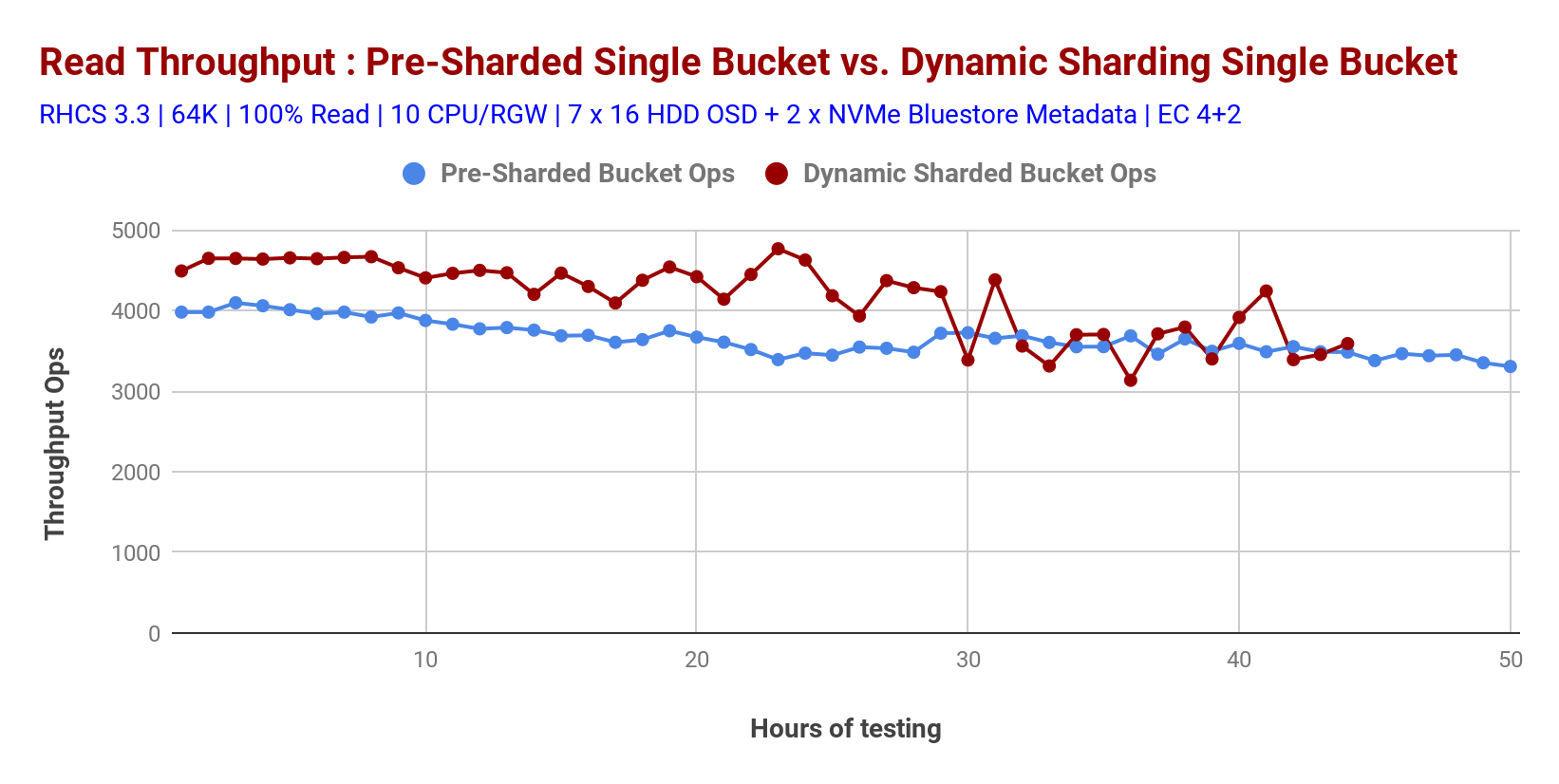

The read performance of the dynamically resharded bucket was found to be slightly higher than that of the pre-sharded bucket. However, the pre-sharded bucket showed deterministic performance, as represented in Chart 4.

Chart 4: Dynamic Bucket resharding and Pre-sharding bucket performance comparison: 100% Read

Summary and up next

If we know how many objects the application would store in a single bucket, pre-sharding the bucket generally helps with overall performance. On the flip side, if the object count is not known in advance, the dynamic bucket re-sharding feature of Ceph RGW really helps to avoid degraded performance associated with overloaded buckets.

In the next post, we will learn how the performance of RHCS 3.3 has improved since RHCS 2.0 and what performance benefits the BlueStore OSD backend brings with it.