Red Hat blog

We’re delighted to announce availability of the new Alpha 4 release of Red Hat Ceph Storage 5, built on the upstream project’s Pacific release cycle. This post is the first of a series that will walk you through the enhancements coming with the next major upgrade of Red Hat Ceph Storage—well ahead of their production release—and give the details needed to facilitate testing with early-access releases.

Today’s post centers on the new Cephadm interface to the orchestration application programming interface (API), which is intended to become the preferred bare-metal installation and management method for Ceph across the broader vendor community. You can find download details for early access releases at the end of this blog. Now, without further ado, on to what is new…

A short history

In the recent past, Red Hat’s Ceph distribution has been deployed and managed using the ceph-ansible playbooks. Ansible provided an easy way for operators familiar with Red Hat’s configuration tool of choice to build and configure Ceph clusters. ceph-ansible covers not only installation but also several “day 2” management tasks.

However, for all its flexibility, Ansible requires an understanding of its operation before you can start deploying and managing a storage cluster. Ceph technology has reached a level of maturity such that it is now both possible and timely to once again reduce the learning curve for deploying a distributed storage cluster. Red Hat Ceph Storage 5 Alpha introduces a new integrated orchestration “engine,” sharing operations between Rook (the interface of choice in Kubernetes environments) and Cephadm (the bare-metal install interface).

And for those of you who, like us, love to automate with Ansible, fear not: We’ve got plans for you as well. But that’s another post for another day.

Back to the task at hand: Cephadm takes care of the same install-time operations ceph-ansible supports, but natively, from the Ceph command line interface (CLI), and will eventually integrate seamlessly with the Dashboard UI. Cephadm builds on the orchestrator API introduced with the Red Hat Ceph Storage 4 release and runs as just another module in the Manager daemon (MGR).

This means that instead of your management tasks taking place outside of the Ceph cluster, installation, scale-out, and upgrade are now fully integrated features that behave like any other bit of core Ceph functionality. This new architecture centralizes configuration information and makes it available to other Ceph components, enabling the kind of advanced management functionality we have been building into the Rook operator for Kubernetes over the past two years, and which you can see in production today with Red Hat OpenShift Container Storage. You can think of Cephadm as the orchestration interface to manage a Ceph cluster when the hosting environment is not Kubernetes (where Rook would take charge instead).

Architectural overview

Cephadm uses SSH connections to interact with hosts and deploys services using standard container images from Red Hat’s registry or a local cache. There are no dependencies on outside tools, and a cluster is built simply by downloading a binary and running a bootstrap sequence. The only dependencies are Python 3, LVM, and the Podman container runtime. In addition, because Cephadm runs inside the Manager, the tool is inherently highly available.

In short, Cephadm is the easiest way yet to get a new Ceph cluster up and running quickly. The bootstrap sequence is started from the command line on the first host of the cluster. Running the Cephadm script in “bootstrap mode” will set up a minimal cluster (one monitor and manager daemon) on the local host in minutes. From that point on, other operations, such as adding more hosts or storage, will take place through the Ceph CLI. Watch us build a working Ceph cluster in less than three minutes:

The details

Following are detailed, step-by-step instructions aimed at those among you who want to try the new tool in Alpha 4.

Pre-bootstrap checks: Everything we do in Red Hat Ceph Storage 5 Alpha is container based, so you’ll need a Red Hat account to access the container images (don’t panic if you don’t already have an account: just head over to the Customer Portal and create one!). With your account in place, verify that the package dependencies are met: the python3, lvm2, and podman packages must be installed.

Finally, your host will need a hostname defined. If you’re still staring at a hostname of localhost.localdomain, the hostnamectl set-hostname command is your friend.
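The pre-bootstrap checks above can be scripted. What follows is a minimal sketch, not part of Cephadm itself: the helper names missing_commands and hostname_is_usable are ours, and we assume that the lvm2 package can be detected through its lvm command.

```shell
#!/bin/sh
# Pre-bootstrap sanity check (illustrative helpers, not shipped with cephadm).

# Print every command from the argument list that is not found on PATH.
missing_commands() {
    for cmd in "$@"; do
        command -v "$cmd" >/dev/null 2>&1 || printf '%s\n' "$cmd"
    done
}

# Succeed only if the given hostname is set and is not a localhost placeholder.
hostname_is_usable() {
    case "$1" in
        ""|localhost|localhost.localdomain) return 1 ;;
        *) return 0 ;;
    esac
}

# Assumption: the lvm2 package is detected through its "lvm" command.
missing=$(missing_commands python3 lvm podman)
if [ -n "$missing" ]; then
    # Left unquoted on purpose so the names are printed on a single line.
    echo "missing commands:" $missing
fi

# uname -n reports the node name without relying on the hostname utility.
if ! hostname_is_usable "$(uname -n)"; then
    echo "set a real hostname first: hostnamectl set-hostname <name>"
fi
```

If the script prints nothing, the dependencies named above are on the PATH and the hostname is no longer a placeholder.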

Download the Cephadm ‘binary’: The binary is a Python script that Cephadm uses to bootstrap a cluster, but the mgr/cephadm module also uses this binary to deploy services on hosts by piping it through an SSH connection.

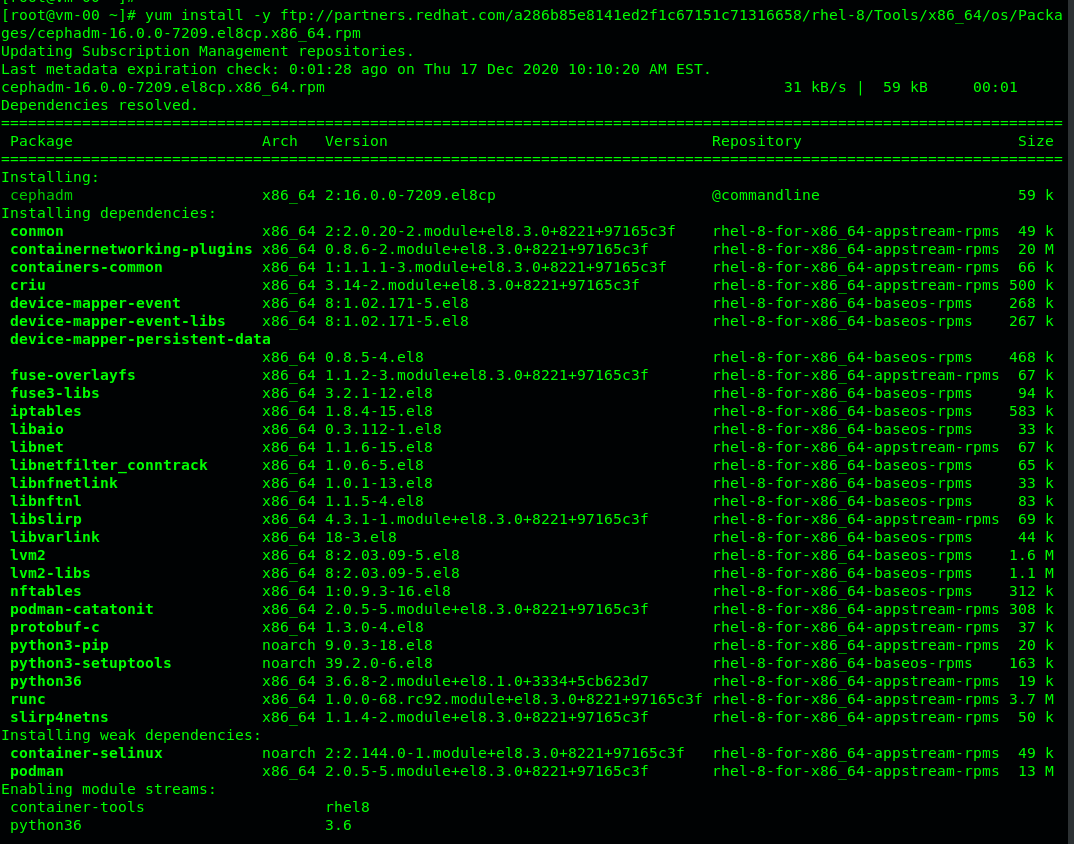

yum install -y ftp://partners.redhat.com/a286b85e8141ed2f1c67151c71316658/rhel-8/Tools/x86_64/os/Packages/cephadm-16.0.0-7209.el8cp.x86_64.rpm

Bootstrapping: The bootstrap process establishes a single-node Ceph cluster and supports various options to tailor its security, monitoring, and management. To see which options are available, use the cephadm bootstrap -h command.

In this example we’ll rely on the defaults, which will:

- Enable the management UI.
- Install the monitoring and alerting stack.
- Pull container images from the Red Hat container registry.

cephadm bootstrap --mon-ip <ip-of-host> --registry-url registry.redhat.io --registry-username <your-rh-account-name> --registry-password <your-rh-password> --allow-fqdn-hostname

Building a cluster with Cephadm starts with the bootstrap process:

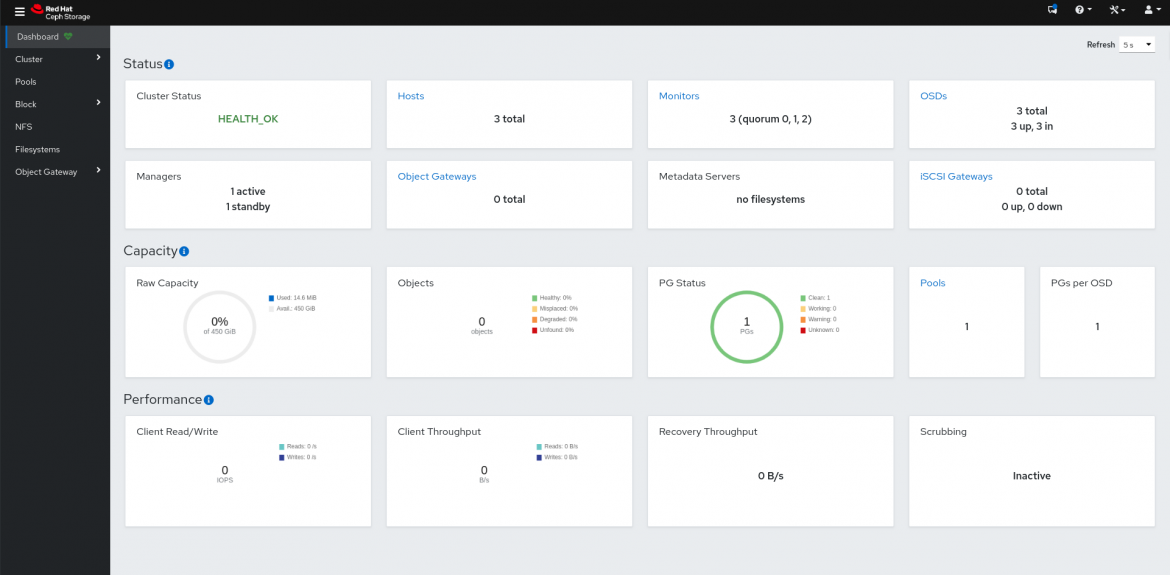

Upon successful completion, the bootstrap process provides a username and password to access the Ceph Dashboard user interface, as well as a command to run to access the Ceph CLI. With these, the user can connect to the Cephadm CLI to inspect a running cluster.
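Because the Dashboard credentials are only printed at the end of the bootstrap run, it can be handy to keep a copy of that output. Here is a small sketch of one way to do it; run_logged is a hypothetical helper of ours, and bootstrap.log is just an example file name.

```shell
#!/bin/sh
# Run a command, show its output, and save a copy to a log file.
# Note: the exit status is that of tee, not of the wrapped command.
run_logged() {
    log_file=$1
    shift
    "$@" 2>&1 | tee "$log_file"
}

# Harmless demonstration with echo:
run_logged /dev/null echo "bootstrap output would appear here"

# Real use (commented out: it needs a prepared host and Red Hat credentials).
# The flags are the ones from the bootstrap command shown earlier.
# run_logged bootstrap.log cephadm bootstrap --mon-ip <ip-of-host> \
#     --registry-url registry.redhat.io \
#     --registry-username <your-rh-account-name> \
#     --registry-password <your-rh-password> --allow-fqdn-hostname
```

Keep in mind that such a log contains the initial Dashboard password, so it should be protected or deleted once you have logged in.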

Once the bootstrap is completed, a minimal cluster is up and running. A single MON and MGR instance and the monitoring stack used by the Dashboard are now running in containers on the host. All Ceph CLI interaction now happens within Cephadm’s “shell mode.” To provide the flexibility needed when managing multiple Ceph clusters, the shell sub-command supports numerous parameters to determine which Ceph cluster to connect to, but if the cluster is local to the shell, you need no parameters!

Now try out the interface. For example, ceph orch ps shows all the containers running in the cluster. The orchestrator and cephadm modules support a number of new configuration and management commands, which can be viewed with ceph orch -h and ceph cephadm -h.
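If you would rather not open an interactive shell for every command, the shell sub-command can also run a single command and exit. The sketch below wraps that in a helper; ceph_cli and the DRY_RUN switch are our own conventions, and dry-run is the default so nothing is executed until you opt in.

```shell
#!/bin/sh
# DRY_RUN=1 (the default here) only prints the command instead of running it.
DRY_RUN=${DRY_RUN:-1}

# Run one Ceph CLI command through "cephadm shell" (hypothetical helper).
ceph_cli() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "cephadm shell -- ceph $*"
    else
        cephadm shell -- ceph "$@"
    fi
}

ceph_cli orch ps       # containers running in the cluster
ceph_cli orch -h       # orchestrator commands
ceph_cli cephadm -h    # cephadm module commands
```

Set DRY_RUN=0 on the bootstrapped host to run the commands for real.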

The bootstrap process placed the cluster’s public SSH key in /etc/ceph. It also added the key to the local host’s authorized_keys file, and the key needs to be copied to the other hosts that will be part of the cluster. The next example shows the public key being copied to other hosts using the ssh-copy-id command.

Add hosts: Once the key has been copied to other hosts, those hosts can be added to the cluster with this command in the Ceph shell: ceph orch host add <hostname>
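The two steps, copying the key and adding the host, lend themselves to a loop when you have several machines. The following is a sketch under stated assumptions: the key file is /etc/ceph/ceph.pub (the upstream default name), the remote user is root, and the host names are placeholders. The run and add_hosts helpers are ours, and by default the script only prints what it would do.

```shell
#!/bin/sh
# DRY_RUN=1 (the default here) prints each command instead of running it.
DRY_RUN=${DRY_RUN:-1}

run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Assumptions: key path /etc/ceph/ceph.pub and remote user root.
add_hosts() {
    for host in "$@"; do
        # Install the cluster's public key on the new host.
        run ssh-copy-id -f -i /etc/ceph/ceph.pub "root@$host"
        # Register the host with the orchestrator.
        run cephadm shell -- ceph orch host add "$host"
    done
}

# Placeholder host names; replace them with your own.
add_hosts ceph-node-02 ceph-node-03
```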

Add OSDs: Adding storage daemons (OSDs, for object storage daemons) is easy, too. Here we have chosen to add all available devices in the cluster, but an operator can create an OSD service specification to retain more control over which disk drives Ceph uses as OSDs. The service specification is described here.
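To make the two options more concrete: upstream, consuming every available device is done with ceph orch apply osd --all-available-devices, while a service specification is a small YAML file. The sketch below writes an example specification modeled on the upstream drive group documentation. The service_id and the rotational filter are illustrative choices of ours, and since this is an alpha the exact schema may differ in your build.

```shell
#!/bin/sh
# Write an example OSD service specification to the given file.
# Assumption: the schema follows the upstream drive group documentation.
write_osd_spec() {
    cat > "$1" <<'EOF'
service_type: osd
service_id: example_osd_spec
placement:
  host_pattern: '*'
data_devices:
  rotational: 1
EOF
}

spec_file=${SPEC_FILE:-osd_spec.yml}
write_osd_spec "$spec_file"
cat "$spec_file"

# Then, inside the Ceph shell (the file must be visible there):
#   ceph orch apply osd -i osd_spec.yml
```

This example would restrict OSD creation to rotational drives on every host, leaving flash devices untouched.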

Open the Dashboard: The Ceph Dashboard has been up and running since the bootstrap completed (the login credentials were shown at the end of the bootstrap process).

With a healthy, running cluster, the Cephadm interface can be used to deploy storage protocol services as needed, including the filesystem metadata daemon (MDS) used by CephFS or the S3 storage gateway (RGW). Try it yourself by adding an RGW instance to the cluster you just built with ceph orch apply rgw test_realm test_zone.

Cephadm is under active development, so you should assume its final state may vary somewhat from the preview we're covering now. Learn more about what else it can already do in the upstream documentation of the Ceph project.

Do try this at home

Red Hat Ceph Storage 5 Alpha 4 is available via anonymous FTP. We want to note very clearly that Red Hat Ceph Storage 5.0 Alpha is early access and provided with no support. Do not upgrade a production installation to an early access release!

The download site is on ftp.partners.redhat.com, accessible via anonymous FTP:

ftp://partners.redhat.com/d960e6f2052ade028fa16dfc24a827f5

The container image is available through the Red Hat Container Catalog. Please submit feedback through your Red Hat contact or via Red Hat Bugzilla.

About the authors

Daniel Pivonka is a Software Engineer focused on Red Hat Ceph Storage.

Paul Cuzner is a Principal Software Engineer working within Red Hat's Cloud Storage and Data Services team. He has more than 25 years of experience within the IT industry, encompassing most major hardware platforms from IBM mainframe to commodity x86 servers. Since joining Red Hat in 2013, Cuzner's focus has been on applying his customer and solutions-oriented approach to improving the usability and customer experience of Red Hat's storage portfolio.

Cuzner lives with his wife and son in New Zealand, where he can be found hacking on Ceph during the week and avoiding DIY jobs around the family home on weekends.

Federico Lucifredi is the Product Management Director for Ceph Storage at Red Hat and a co-author of O'Reilly's "Peccary Book" on AWS System Administration.