Red Hat Blog

In the previous post we covered the details of a vDPA-related proof of concept (PoC) showing how Containerized Network Functions (CNFs) could be accelerated using a combination of vDPA interfaces and DPDK libraries. This was accomplished by using the Multus CNI plugin to add vDPA interfaces as secondary interfaces to Kubernetes containers.

We now turn our attention from NFV and accelerating CNFs to the general topic of accelerating containerized applications over different types of clouds. As in the previous PoC, our focus remains on providing accelerated L2 interfaces to containers, leveraging Kubernetes to orchestrate the overall solution. We also continue to use DPDK libraries to consume packets efficiently within the application.

In a nutshell, the goal of the second PoC is to have a single container image with a secondary accelerated interface that can run over multiple clouds without changes to the container image. This implies that the image needs to be certified only once, independently of the cloud it is running on.

As will be explained, in some cases we can provide wirespeed/wirelatency performance (vDPA and full virtio HW offloading), and in other cases reduced performance when translations are needed, such as on AWS when connecting to its Elastic Network Adapter (ENA) interface. Still, as will be seen, it is the same image running on all clouds.

Note that for wirespeed/wirelatency performance, any NIC supporting vDPA or virtio HW offload can be used with the same container image. This is in contrast to container images using single-root input/output virtualization (SR-IOV), where a vendor-specific driver is required that matches the specific cloud or on-prem HW.

For the PoC we have chosen to use a vDPA on-prem cloud (similar to the one used in the previous blog), Alibaba public cloud bare metal instances using virtio full HW offloading (see the "Achieving network wirespeed in an open standard manner" post for details on full HW offloading) and the AWS cloud providing VMs with ENA interfaces.

As with the previous blog, this post is a high-level solution overview describing the main building blocks and how they fit together. We assume that the reader has an overall understanding of Kubernetes, the Container Network Interface (CNI) and NFV terminology such as VNFs and CNFs.

Given that this is a work in progress, we will not provide technical deep dive and hands-on blogs right now. There are still ongoing community discussions on how to productize this solution, with multiple approaches being considered. We will, however, provide links to relevant repos for those who are interested in learning more (the repos should be updated by the community).

We plan to demonstrate a PoC showing how to accelerate containers over multiple clouds at KubeCon North America in November 2019, at the Red Hat booth. We will be joined by a few companies we've been working with on the demo as well. We encourage you to visit us and see first-hand how this solution works.

The challenge and overall solution

Different vendors and cloud providers have implemented or are experimenting with various forms of network acceleration. On the one hand, they are able to tailor optimized wirespeed/wirelatency solutions to their NICs (for example using SR-IOV on-prem or AWS ENA interfaces). On the other hand, this implies that application developers requiring accelerated interfaces are forced to tailor their application and container image to the specific cloud and specific hardware, leading to potential lock-in.

By leveraging virtio-networking based solutions we believe that open standards and open source implementations could be provided to those applications instead, eventually decoupling the accelerated applications from the cloud/hardware they run on.

Looking at the evolution of clouds into hybrid clouds, we believe the challenge of providing standard accelerated network interfaces to containers is becoming increasingly important.

As more workloads are migrated from VMs to containers, awareness is growing of the need for high performance network interfaces that let applications be fully utilized. We believe those interfaces should be open and standard.

The virtio-networking approach for solving this problem is based on using a number of building blocks all exposing the same virtio data plane and control plane towards the accelerated containerized application:

- vDPA for on-prem: a virtio data plane goes directly from the NIC to the container, while a translation layer sits between the NIC's control plane and the virtio control plane. This provides wirespeed/wirelatency to the container. For the control plane, we expose a vhost-user socket, based on the vhost-user protocol, to the container.

- Virtio full HW offloading (e.g. for Alibaba bare metal servers): Alibaba is one example of a public cloud provider adopting virtio full HW offloading in its servers. With this approach we are able to provide wirespeed/wirelatency to the container as well. Although this approach exposes a virtio-based control plane over PCI directly from the HW, we translate it to the vhost-user protocol in order to expose a single socket-based interface to the container (for all virtio-networking approaches).

- Mediator layers for public cloud: in the case of AWS, since the ENA interface (see details in the AWS section) does not support the virtio data plane or control plane, both planes need to pass through a translation layer. This reduces performance but preserves the separation/transparency from the application's perspective. We believe many optimizations can be made to this solution in the future to improve performance.
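The three building blocks above can be summarized in a small model. This is only an illustrative sketch: the class and field names are our own invention and do not come from the PoC repos. It records which planes pass through a translation layer on each cloud and checks that the container always sees the same pair of interfaces.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Backend:
    """One way of wiring a NIC to the container (all names are illustrative)."""
    name: str
    data_plane_translated: bool     # True if packets pass through a mediator
    control_plane_translated: bool  # True if a translator sits between NIC and pod
    # What the container sees; identical for every backend by design.
    container_data_plane: str = "virtio ring"
    container_control_plane: str = "vhost-user socket"

    def expected_performance(self) -> str:
        # Only a translated data plane costs throughput and latency.
        if self.data_plane_translated:
            return "reduced"
        return "wirespeed/wirelatency"


BACKENDS = [
    Backend("on-prem vDPA", False, True),
    Backend("Alibaba bare metal (virtio full HW offload)", False, True),
    Backend("AWS ENA via mediator", True, True),
]


def container_interfaces(backends):
    """Return the distinct (data plane, control plane) pairs seen by containers."""
    return {(b.container_data_plane, b.container_control_plane) for b in backends}
```

The set returned by `container_interfaces(BACKENDS)` has a single element, which is the property that lets one container image run on all three clouds.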

In the following sections we will delve into how these solutions are implemented in practice over the three clouds we chose to use for the PoC (on-prem, Alibaba public cloud bare metal instances and AWS).

Running accelerated containers for on-prem

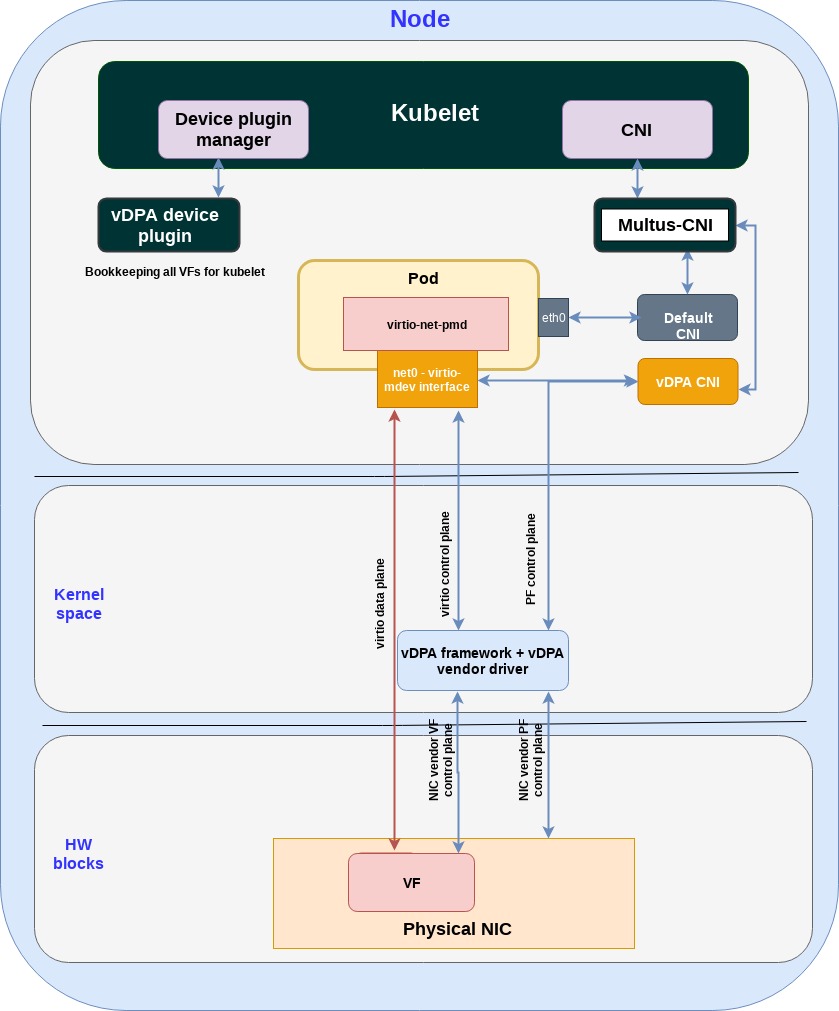

As described in details in the previous post vDPA is the virtio building block for providing an accelerated interface to containers running on prem:

The physical NIC implements the virtio ring layout, enabling the container to open a direct data plane connection (using DPDK) to the physical NIC. The control plane, on the other hand, can be proprietary for each NIC vendor, and the vDPA framework provides the building blocks to translate it to a vhost-user socket exposed to the containerized application.

As we move on to describe the Alibaba and AWS deployments, it is important to emphasize again that we always run the same container image and the same virtio-net-pmd DPDK driver on all clouds.
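To make the "same image everywhere" point concrete, here is a minimal sketch of how the DPDK application in the pod could be pointed at the vhost-user socket. It is an illustration, not code from the PoC repos. It assumes the application uses DPDK's virtio-user virtual device (`net_virtio_user`) with its `path` and `queues` parameters; the helper name `virtio_user_eal_args` and the socket path in the usage note are hypothetical.

```python
from typing import List


def virtio_user_eal_args(socket_path: str, index: int = 0, queues: int = 1) -> List[str]:
    """Build DPDK EAL arguments that attach a virtio-user port to a vhost-user socket.

    The socket path is whatever the platform mounts into the pod; it is the
    only per-deployment input, so the image itself never changes.
    """
    if not socket_path.startswith("/"):
        raise ValueError("socket_path must be an absolute path")
    if queues < 1:
        raise ValueError("queues must be at least 1")
    vdev = f"net_virtio_user{index},path={socket_path},queues={queues}"
    # --no-pci: the pod does not probe PCI devices, it only uses the socket.
    return ["--no-pci", f"--vdev={vdev}"]
```

For example, `virtio_user_eal_args("/var/run/vdpa/vhost.sock")` (a made-up path) yields the same argument shape whether the socket is backed by vDPA, by the Alibaba translator or by the AWS mediator.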

Running accelerated containers on Alibaba bare metal cloud

In this section we will show how we provide standard virtio interfaces for containers on top of the Alibaba cloud platform.

The Alibaba cloud’s ECS bare metal instance uses virtio network adapters as the networking interface. Each adapter is compatible with the virtio spec both for the dataplane and for the control plane. It should be noted, however, that in practice these adapters contain a number of gaps when compared to the virtio spec.

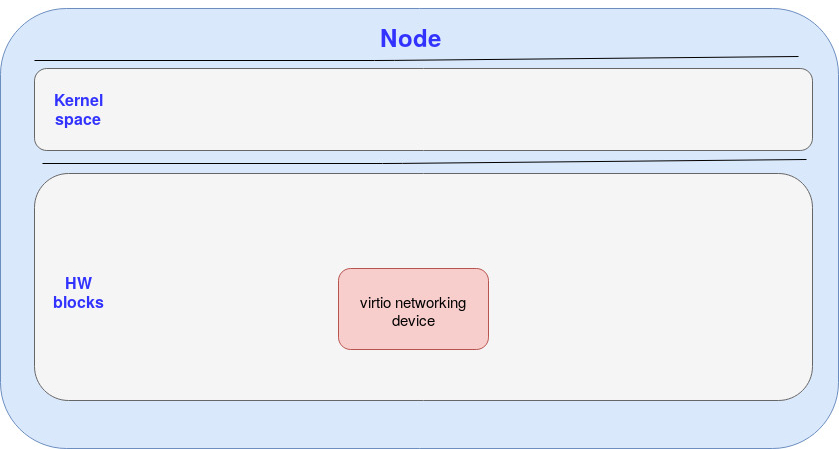

The following diagram presents the HW building blocks used in the Alibaba cloud platform in order to provide a virtio networking device (we removed the user space for simplicity):

Figure: Building blocks virtio networking device of Alibaba ECS bare metal instance

The hardware virtio network device (there can be one or more) advertises a legacy control plane over the PCI bus. This enables the kernel to identify the device through the PCI bus.

Note that in the on-prem case the HW network device uses its proprietary control plane over the PCI bus (which the vDPA then translates to virtio).

In the virtio networking device there are no VFs (virtual functions), at least none visible to the user: it is a single PF, whereas in the on-prem case the physical NIC has multiple VFs. This implies that in the Alibaba case we can at most pre-provision multiple virtio devices on the NIC (using the Alibaba web interface and internal implementation). In the on-prem case, on the other hand, the vDPA CNI can dynamically create virtio devices via VFs.

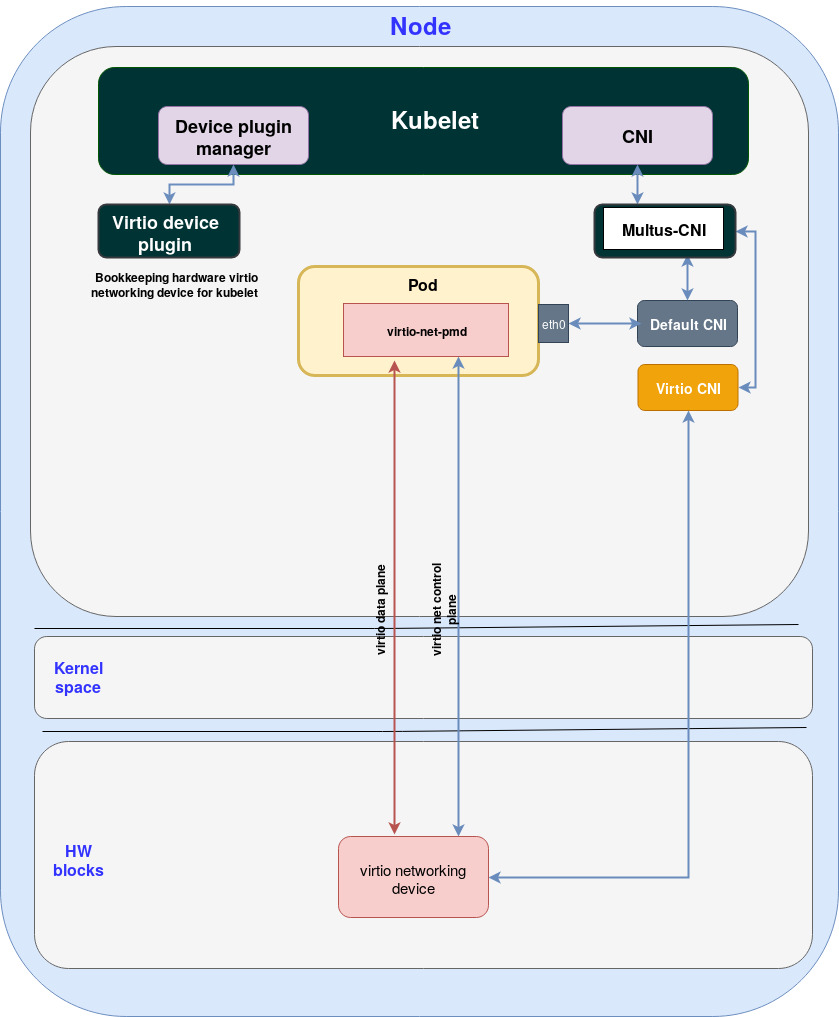

Next we want to access the virtio networking device from the virtio-net-pmd running inside the pod (in the user space). One straightforward way is to expose the card and its PCI control plane directly to the userspace as demonstrated by the following figure:

Figure: Direct access to Ali’s virtio Ethernet device

In this way, the control plane of the virtio networking device is exposed directly to the virtio-net-pmd running inside the pod. The virtio-net-pmd can send commands directly to the virtio networking device, and the data plane goes directly to the hardware virtio networking device.

This works in the ideal case; however, this approach has several drawbacks:

- The virtio-net-pmd interface differs between the Alibaba and on-prem deployments: in the on-prem case, we provide a vhost-user socket based interface for the virtio-net-pmd in the pod. In the Alibaba case, however, we use a PCI interface, since we need to provide the hardware device information (e.g. PCI bus, device and function) to the virtio-net-pmd in the pod. This means we now have two different interfaces going to the pod, complicating the management layers.

- Gaps in the virtio HW implementation: in practice, the Alibaba hardware implementation has a number of gaps, so it is not 100% virtio compatible. We encountered several small violations that make it challenging to connect the HW virtio networking device directly to the pod.

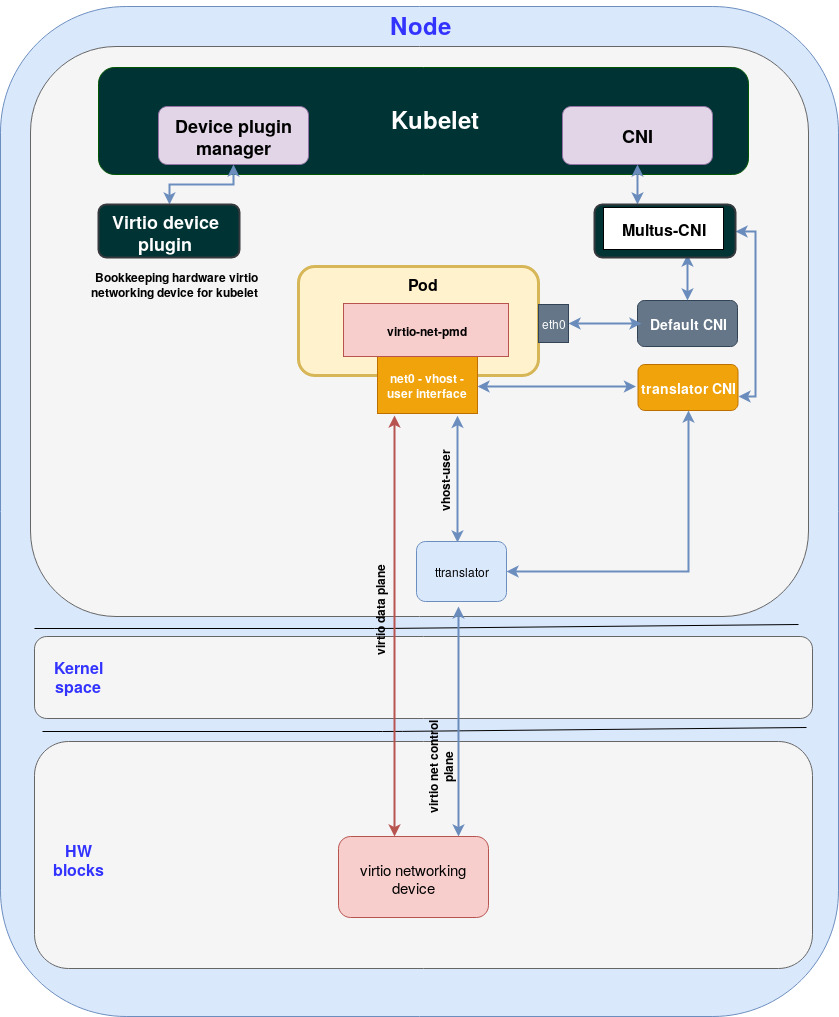

To address the above limitations, we decided to deploy a translator from PCI to vhost-user socket as demonstrated in the following diagram:

Figure: Using translator to provide a unified socket interface for the POD

The translator block added between the pod and the HW block solves these issues:

- The translator exposes a vhost-user socket to the virtio-net-pmd (the pod), translating the vhost-user commands into the virtio commands sent to the HW virtio networking device and vice versa.

- Inside the translator a number of workarounds have been added to address the gaps between the existing HW implementation in the virtio networking device and the virtio specification.
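The two roles of the translator can be sketched for a single control plane exchange, feature negotiation. This is a simplified illustration and not the PoC's implementation. The request codes, header layout and feature bit numbers follow the vhost-user protocol and the virtio specification; `FakeVirtioPciDevice`, `Translator` and `quirk_mask` are hypothetical names, and the choice of which feature to hide is invented for the example, since the actual hardware gaps are not listed here.

```python
import struct

# Request codes and flag bits as defined by the vhost-user protocol.
VHOST_USER_GET_FEATURES = 1
VHOST_USER_SET_FEATURES = 2
VHOST_USER_VERSION = 0x1
VHOST_USER_REPLY_FLAG = 0x4

HEADER = struct.Struct("=III")  # request, flags, payload size (host byte order)

# Feature bit numbers from the virtio specification.
VIRTIO_NET_F_CSUM = 0
VIRTIO_NET_F_MAC = 5
VIRTIO_NET_F_MRG_RXBUF = 15


class FakeVirtioPciDevice:
    """Stand-in for the HW device; real code would access its PCI registers."""

    def __init__(self, device_features: int):
        self.device_features = device_features
        self.driver_features = 0


class Translator:
    """Answers vhost-user requests on behalf of a PCI virtio device."""

    def __init__(self, device: FakeVirtioPciDevice, quirk_mask: int = 0):
        self.device = device
        self.quirk_mask = quirk_mask  # features hidden as a workaround (hypothetical)

    def handle(self, message: bytes) -> bytes:
        request, _flags, size = HEADER.unpack_from(message)
        payload = message[HEADER.size:HEADER.size + size]
        usable = self.device.device_features & ~self.quirk_mask
        if request == VHOST_USER_GET_FEATURES:
            body = struct.pack("=Q", usable)
            flags = VHOST_USER_VERSION | VHOST_USER_REPLY_FLAG
            return HEADER.pack(request, flags, len(body)) + body
        if request == VHOST_USER_SET_FEATURES:
            (wanted,) = struct.unpack("=Q", payload)
            # Forward to the device only what it offers and we did not hide.
            self.device.driver_features = wanted & usable
            return b""  # this request has no reply by default
        raise NotImplementedError(f"request {request} is not handled in this sketch")
```

A real translator would handle the remaining vhost-user requests (memory table, vring setup, kick and call file descriptors) in the same spirit, which is where most of the complexity lives.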

Running accelerated containers on AWS

In this final section, we will show how we can still provide a standard virtio interface for containers when the platform does not support vDPA, as is the case with AWS today.

The accelerated network interface offered for AWS cloud instances is based on the Elastic Network Adapter (ENA), whose data and control planes are not compatible with the virtio specification. This means that a container image using the ENA NIC directly requires an ENA-specific driver, which couples it to the AWS ENA environment.

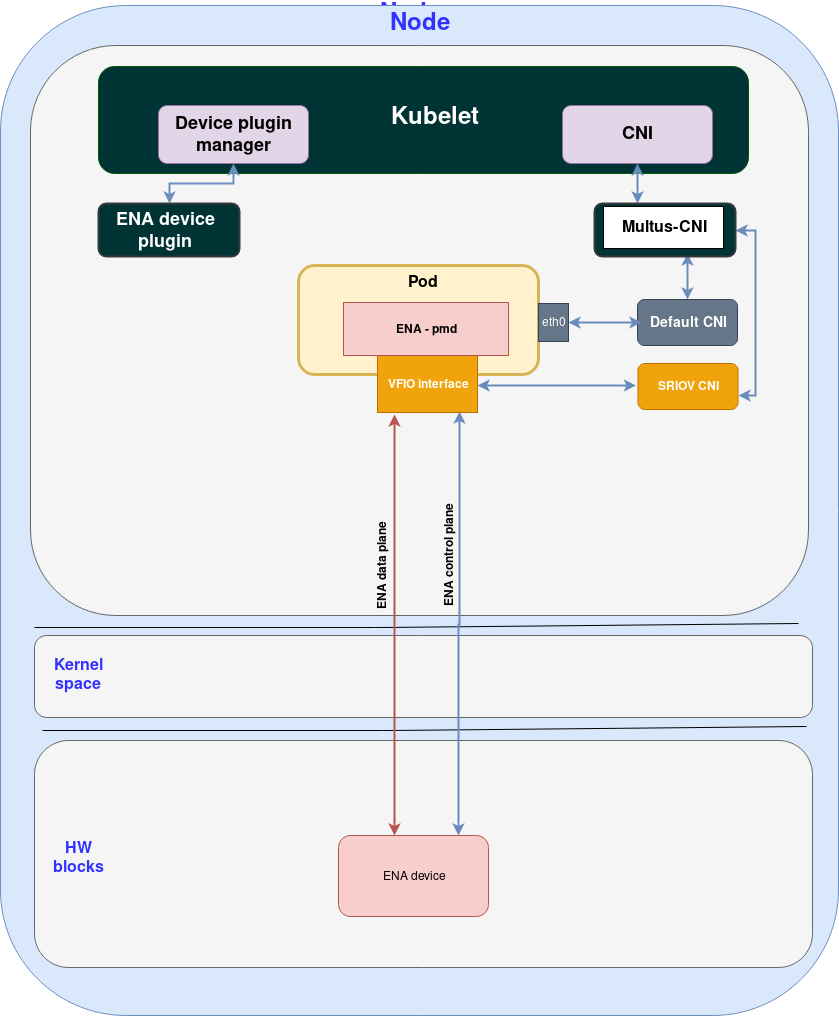

The following diagram shows the AWS ENA architecture when combined with Kubernetes Multus and SR-IOV CNI (see previous post for additional details):

Note the following:

- Compared with the HW blocks in the on-prem and Alibaba cases, here we have an ENA device to work with.

- Similar to the on-prem SR-IOV use case, both the control plane and the data plane go directly from the ENA device to the pod (since ENA is based on SR-IOV).

- As pointed out, since the pod contains the ENA-pmd, it is not compatible with any other cloud.

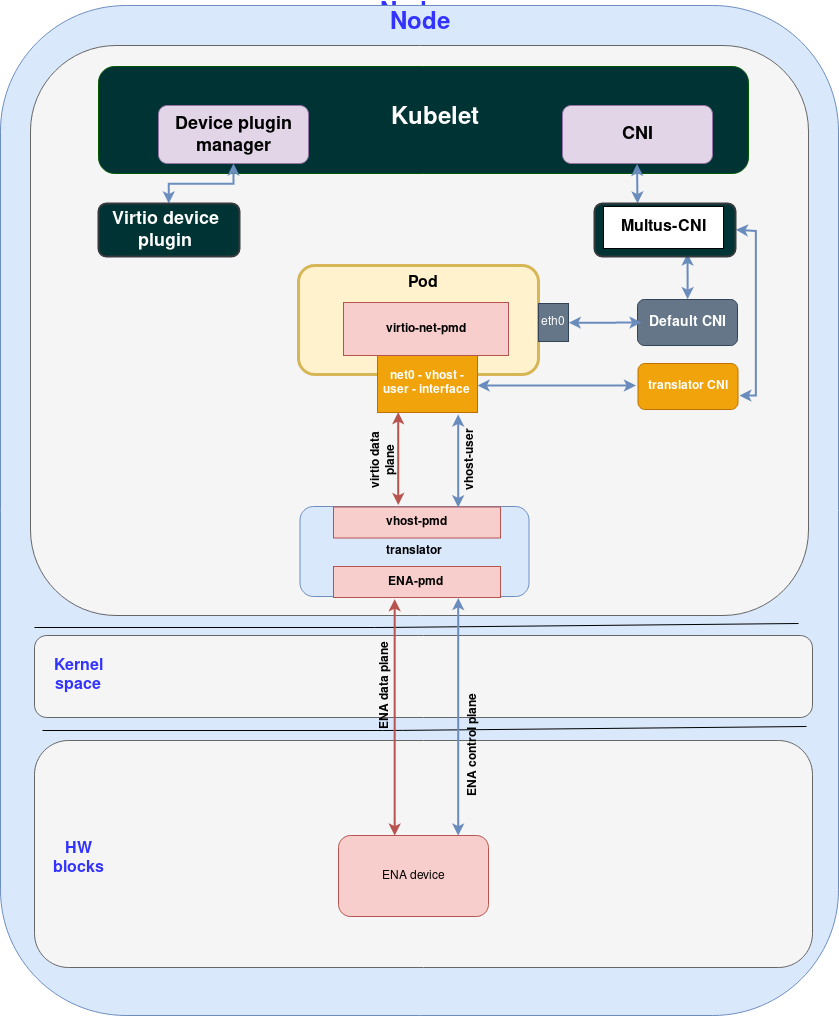

In order to overcome the limitation of a lack of a standard interface, a mediator layer is added between the ENA and the container image. By adding the mediator the container image uses virtio ring for the data plane and vhost-user protocol for the control plane. This means it’s using the exact same interfaces as in the on-prem and Alibaba case.

The following diagram presents the modified ENA use case:

Note the following:

- As explained, the mediator layer, named "translator" in the above diagram, translates the ENA data/control plane to a virtio data plane and a vhost-user control plane.

- The mediation layer adds another copy to the data plane, which reduces overall performance and puts additional load on the CPU performing the copying. We believe that a number of approaches can be taken in the future to reduce the performance penalty. We also believe that vDPA may end up being used in public clouds as well, removing the need for such mediators in some cases.
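The cost of the extra copy can be pictured with a toy forwarding loop. This is a deliberately simplified sketch in plain Python, not the mediator from the PoC: the `Mediator` class, its counters and the bounded queue standing in for a virtio ring are all assumptions made for illustration.

```python
from collections import deque


class Mediator:
    """Moves packets from an ENA-like receive burst into a virtio-like ring."""

    def __init__(self, ring_size: int):
        self.ring = deque()        # stands in for the virtio ring seen by the pod
        self.ring_size = ring_size
        self.bytes_copied = 0      # CPU work that a direct data plane would avoid
        self.dropped = 0

    def forward(self, ena_rx_burst):
        for packet in ena_rx_burst:
            if len(self.ring) >= self.ring_size:
                self.dropped += 1  # ring full: the container is not keeping up
                continue
            buf = bytearray(packet)  # the extra copy added by the mediation layer
            self.bytes_copied += len(buf)
            self.ring.append(buf)
```

Every byte delivered to the container is counted in `bytes_copied`, which is exactly the per-packet work that vDPA and virtio full HW offloading do not have to do.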

Summary

In this blog we have discussed how a single container image (using a secondary accelerated interface) could run over any cloud. We presented a possible solution to the problem based on the virtio building blocks.

We believe this problem will become critical as more and more apps requiring wirespeed/wirelatency are containerized and need to be deployed over multiple clouds. Certifying, deploying and upgrading different images of the same app for different clouds, as happens today, is extremely expensive.

Going back to what we did, we have shown how vDPA could be used for the on-prem case, virtio full HW offloading for the public cloud bare metal server case and virtio mediator layers for a generic public cloud (although the latter is not performance-optimal, since packet copies are required).

As with the previous blog, this is a work in progress, and readers are encouraged to check out the GitHub repo containing the most up-to-date details and instructions on how the different parts are connected together.

An important point we hope this blog has conveyed is that the advanced virtio building blocks are relevant for enterprise and more general use cases, in addition to the NFV use cases detailed in our previous blog.

In the next blog we will conclude this virtio-networking blog series and provide some insight into future directions our community is evaluating. We will also describe a number of topics we plan on blogging about in the next series to come.

About the authors

Experienced Senior Software Engineer working for Red Hat with a demonstrated history of working in the computer software industry. Maintainer of qemu networking subsystem. Co-maintainer of Linux virtio, vhost and vdpa driver.