Red Hat Blog

In past releases, Red Hat OpenStack Platform director used a single Heat stack for the overcloud deployment. With the Train release, it is now possible to use multiple stacks for a single cloud deployment. Multiple stacks are advantageous for edge deployments because each distributed edge site can be managed and scaled independently, which minimizes operational complexity. First, let’s review what a “stack” means in director, since the term is often overloaded in software engineering.

What is a stack?

For director, a stack specifically refers to a Heat stack. A Heat stack is a grouping of resources that is stored in the Heat database and manipulated through the Heat application programming interface (API). A resource in a stack often corresponds to a REST resource exposed by another OpenStack API service; for instance, a resource could be a Nova instance, a Glance image, or a Neutron network. Grouping these resources into a stack lets Heat manage their life cycle (create, update, delete) as a single logical unit. In this way, one can use Heat orchestration to deploy a complex cloud application consisting of many OpenStack resources, or, with director, an OpenStack cloud itself.
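To make "grouping resources and managing their life cycle as one unit" concrete, here is a toy, in-memory model. It is a minimal sketch, not Heat's API: the `Stack` and `Resource` classes are hypothetical, and only the resource type strings (`OS::Neutron::Net`, `OS::Nova::Server`) and the `*_COMPLETE` status names borrow real Heat conventions.

```python
from dataclasses import dataclass, field


@dataclass
class Resource:
    """One managed resource, such as a Nova server or a Neutron network."""
    name: str
    kind: str  # Heat-style resource type string
    properties: dict = field(default_factory=dict)


@dataclass
class Stack:
    """Hypothetical toy model: resources grouped and managed as a single unit."""
    name: str
    resources: dict = field(default_factory=dict)
    status: str = "INIT"

    def create(self, resources):
        # One operation registers every resource in the group.
        for res in resources:
            self.resources[res.name] = res
        self.status = "CREATE_COMPLETE"

    def update(self, changes):
        # One operation applies property changes across the group.
        for name, props in changes.items():
            self.resources[name].properties.update(props)
        self.status = "UPDATE_COMPLETE"

    def delete(self):
        # One operation removes everything the stack owns.
        self.resources.clear()
        self.status = "DELETE_COMPLETE"


app = Stack("web")
app.create([
    Resource("net0", "OS::Neutron::Net"),
    Resource("vm0", "OS::Nova::Server", {"flavor": "m1.small"}),
])
app.update({"vm0": {"flavor": "m1.large"}})
print(app.status, sorted(app.resources))
```

The point of the model is that callers never touch `net0` or `vm0` individually; every life-cycle step goes through the stack.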

Single-stack versus multi-stack deployments

Traditionally, director has used a single stack for the entire OpenStack cloud deployment. If you’re familiar with director, you know that the default name of this stack is “overcloud.” A single stack has some benefits, such as single operations to create or update the entire deployment, and the ability to monitor the state of software and hardware configuration across the whole cloud.

However, using a single stack also presents some challenges. As a cloud deployment grows and the number of resources managed in the stack increases, stack operations become slower. Also, managing the entire stack at once can be a challenge when the deployment is geographically distributed and operations must complete within a defined maintenance window.

Multi-stack deployments can address these challenges. A cloud operator can scale an existing deployment by adding a new stack while leaving the previously deployed stacks unchanged. Effectively, the multi-stack feature partitions a cloud deployment, breaking management operations into smaller units.
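The partitioning idea can be illustrated with a hypothetical model in which a cloud is a mapping from stack name to node names. This is a minimal sketch, not how director stores anything: scaling one stack changes only that stack's entry, the other stacks are copied through untouched, and the scope of the operation is one stack rather than the whole cloud. The node-naming scheme is an assumption for illustration only.

```python
def scale_out(cloud, stack, node_count):
    """Return a copy of `cloud` in which only `stack` is grown to `node_count` nodes.

    `cloud` maps stack name -> list of node names (hypothetical model).
    Existing nodes are kept; if node_count is not larger, nothing changes.
    """
    updated = {name: list(nodes) for name, nodes in cloud.items()}
    nodes = updated[stack]
    # Hypothetical naming scheme: "<stack>-node-<index>".
    nodes.extend(f"{stack}-node-{i}" for i in range(len(nodes), node_count))
    return updated


def operation_scope(cloud, stack):
    """Fraction of all nodes that an operation on `stack` has to manage."""
    total = sum(len(nodes) for nodes in cloud.values())
    return len(cloud[stack]) / total


cloud = {s: [f"{s}-node-{i}" for i in range(3)] for s in ("central", "dcn1", "dcn2")}
after = scale_out(cloud, "dcn1", 5)
print(len(after["dcn1"]), after["central"] == cloud["central"])
print(f"{operation_scope(after, 'dcn1'):.0%} of nodes in scope")
```

With a single stack, the same scale-out would have the whole cloud (100% of nodes) in scope.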

Multi-stack deployments for DCN

Multi-stack deployments are particularly effective for Distributed Compute Node (DCN) deployments for edge computing. DCN deploys compute nodes at geographically distributed locations so that workloads running on those nodes are closer to the end consumer. These locations are separated, often by long distances, from the control plane, where the OpenStack API and management services run.

Because there are typically many such distributed sites, using multi-stack to manage operations on their nodes is beneficial. Each DCN site can be represented as its own stack in director, allowing that site to be deployed, managed, and scaled individually.

The following diagram illustrates a single OpenStack cloud deployed with three stacks named central, dcn1, and dcn2. The nodes that are managed and deployed by those stacks are distributed geographically across different locations:

A practical example

Let’s take a look at a practical example of a DCN deployment using multi-stack. In this example, three stacks are deployed: a “control plane” stack at the central site that also includes storage and compute, and two DCN stacks for distributed compute and storage resources at remote sites.

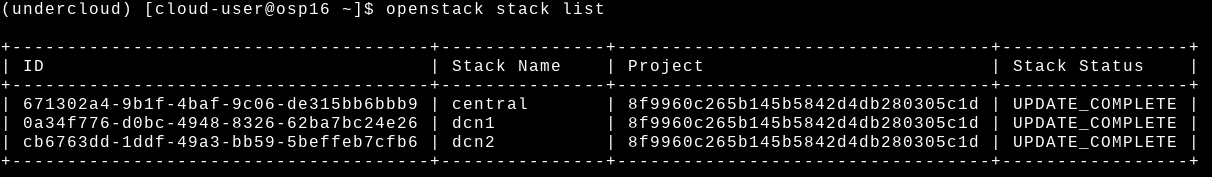

The output from “openstack stack list” shows our three stacks instead of a single “overcloud” stack:

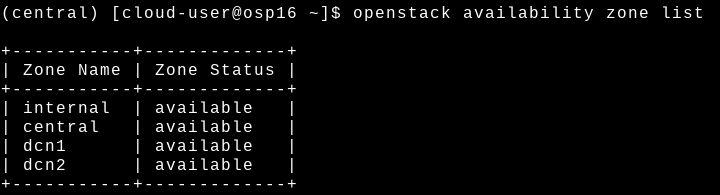

With the deployment spread across multiple stacks in this way, each stack can be managed individually as needed. Configuration can also differ across stacks to reflect the needs of different sites. For example, Nova compute and Cinder volume availability zones (AZs) can be configured per site, and the stack names can be used as the AZ names for consistency. This results in the following AZ configuration:
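As a sketch of these per-site settings, the following builds one Heat environment per stack with the stack name reused as the AZ name. The parameter names `NovaComputeAvailabilityZone` and `CinderStorageAvailabilityZone` are assumed from tripleo-heat-templates and should be checked against the templates for your release. The output is JSON, which is generally also valid YAML, so it could be saved as an environment file.

```python
import json

SITES = ("central", "dcn1", "dcn2")


def az_environment(stack_name):
    """Build a per-site Heat environment that reuses the stack name as the AZ name."""
    return {
        "parameter_defaults": {
            # Assumed tripleo-heat-templates parameter names; verify for your release.
            "NovaComputeAvailabilityZone": stack_name,
            "CinderStorageAvailabilityZone": stack_name,
        }
    }


environments = {site: az_environment(site) for site in SITES}
print(json.dumps(environments["dcn1"], indent=2))
```

Deriving the AZ from the stack name keeps the two in lockstep, so a new site only needs a new stack name.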

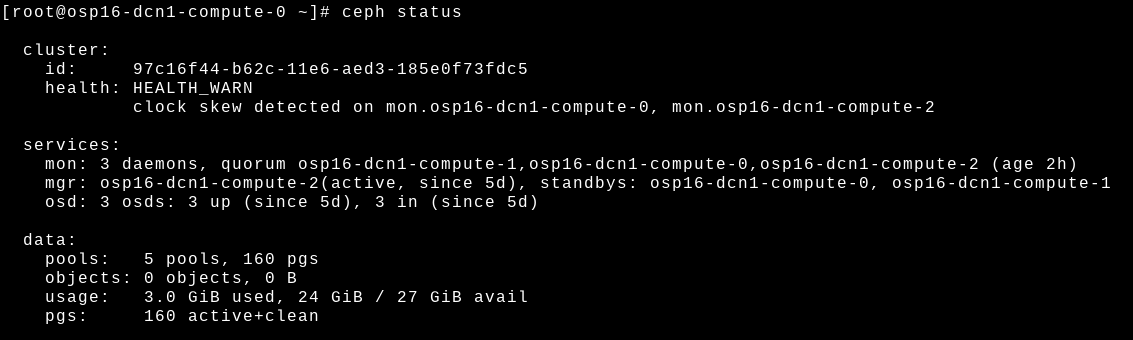

Likewise, the compute and volume services are grouped according to their availability zone. In this deployment, three nodes are deployed per site, and each node runs compute, volume, and storage services for a hyperconverged infrastructure (HCI) deployment. The storage service in this example is Ceph.
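The grouping by availability zone can be sketched as a small function over service records like the rows of `openstack compute service list` or `openstack volume service list`, which include Binary, Host, and Zone columns. The record layout and the host names below are hypothetical, chosen only to mirror the three-nodes-per-site HCI layout described above.

```python
from collections import defaultdict


def group_by_zone(services, binary):
    """Map each availability zone to the sorted hosts running `binary`."""
    zones = defaultdict(set)
    for svc in services:
        if svc["binary"] == binary:
            zones[svc["zone"]].add(svc["host"])
    return {zone: sorted(hosts) for zone, hosts in zones.items()}


# Hypothetical records: three HCI nodes per site, each running nova-compute.
records = [
    {"binary": "nova-compute", "host": f"{site}-hci-{i}", "zone": site}
    for site in ("central", "dcn1", "dcn2")
    for i in range(3)
]
by_zone = group_by_zone(records, "nova-compute")
print(by_zone["dcn1"])
```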

Because director has traditionally limited some clustered resources to one deployment per stack, multi-stack has the advantage of managing additional deployments of those resources within a single cloud. In this case, each site has its own distinct Ceph cluster instead of a single shared Ceph cluster. Multiple Ceph clusters are particularly advantageous for DCN deployments because storage is co-located with compute resources at the edge site, close to the end consumer. A dedicated Ceph cluster per site helps ensure that storage is not stretched across sites, which can badly impact performance and overall reliability. Dedicated storage per site also segregates storage consumption, which improves overall data security.

If we ssh to one of the DCN nodes and check the Ceph status, we can see that it consists of nodes only at the local site:

The above screenshot shows that only the Ceph services at the dcn1 site are a part of the cluster at that site.

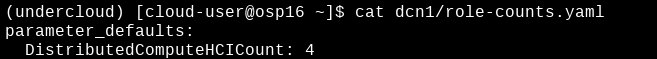

Each stack can be managed individually without affecting the other stacks. For example, the node count in the dcn1 stack could be increased to scale out compute and storage resources at that site. To do so, increase the count in the environment file where the count parameter for the dcn1 stack is defined.
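A minimal sketch of that edit, assuming the dcn1 nodes use a role named `DistributedComputeHCI`, so that the count parameter follows director's `<RoleName>Count` convention as `DistributedComputeHCICount`. Both the role name and the starting count of three are assumptions for illustration. The helper only raises the count, since this example is about scaling out.

```python
import json


def scale_out_environment(env, count_param, new_count):
    """Return a copy of a Heat environment with `count_param` raised to `new_count`."""
    params = dict(env.get("parameter_defaults", {}))
    current = params.get(count_param, 0)
    if new_count < current:
        # Scaling in is a different procedure; this sketch only scales out.
        raise ValueError(f"{count_param} is {current}; refusing to lower it to {new_count}")
    params[count_param] = new_count
    return {**env, "parameter_defaults": params}


# Hypothetical dcn1 environment file content.
dcn1_env = {"parameter_defaults": {"DistributedComputeHCICount": 3}}
dcn1_scaled = scale_out_environment(dcn1_env, "DistributedComputeHCICount", 5)
print(json.dumps(dcn1_scaled, indent=2))
```

Only the dcn1 environment changes; the environment files for the central and dcn2 stacks are left alone.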

The “openstack overcloud deploy” command would then be used to update only the dcn1 stack.
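Here is a sketch of assembling that command for the dcn1 stack. `--stack`, `--templates`, `-r` (roles file), and `-e` (environment file) are existing `openstack overcloud deploy` options, and `--stack` selects which stack is created or updated. The file paths are hypothetical; a real update would pass the same set of environment files originally used to deploy dcn1, plus the changed one. The command is printed rather than run.

```python
import shlex
from typing import List, Optional


def deploy_command(stack: str, env_files: List[str], roles_file: Optional[str] = None) -> List[str]:
    """Build an `openstack overcloud deploy` argument list that targets one stack."""
    cmd = ["openstack", "overcloud", "deploy", "--stack", stack, "--templates"]
    if roles_file:
        cmd += ["-r", roles_file]
    for env in env_files:
        # Later -e files override parameters set by earlier ones.
        cmd += ["-e", env]
    return cmd


# Hypothetical file layout for the dcn1 site.
cmd = deploy_command(
    "dcn1",
    ["dcn1/site.yaml", "dcn1/scale.yaml"],
    roles_file="dcn1/roles_data.yaml",
)
print(shlex.join(cmd))
# To run it on the undercloud: subprocess.run(cmd, check=True)
```

Building the arguments as a list rather than a shell string avoids quoting problems if a path ever contains spaces.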

During such an operation, whether it is a configuration change, a scale-out, or a minor update, only the dcn1 stack is updated; no other stacks are affected.

Summary

Multi-stack for DCN is a powerful way to partition a director deployment into one stack per edge site. While any partitioning scheme may be possible, it makes sense to split the stacks along a logical grouping of resources. In the DCN case, this allows a distinct Ceph cluster to be deployed per edge site, and it gives the operator a way to isolate scale and management operations to a specific site.

About the author

James Slagle is a Senior Principal Software Engineer at Red Hat. He's been working on OpenStack since the Havana release in 2013. His focus has been on OpenStack deployment, particularly OpenStack director. Recently, his efforts have centered on optimizing director for edge computing architectures, such as the Distributed Compute Node (DCN) offering.