Red Hat Blog

Red Hat and NVIDIA have been strong partners for many years, with a history of collaboration focused on our customers' short- and long-term needs. Our engineering teams have collaborated upstream on technologies as diverse as video drivers, heterogeneous memory management (HMM), KVM support for virtual GPUs, and Kubernetes. Growing enterprise interest in performance-sensitive workloads such as fraud detection, image and voice recognition, and natural language processing makes an even more compelling case for the two companies to work together.

NVIDIA delivers a number of offerings that address emerging use cases with GPU-based hardware acceleration and complementary software that supports AI and machine learning workloads. NVIDIA’s DGX-1 server, for example, tightly integrates up to eight NVIDIA Volta GPUs into a standard form factor, providing improved performance in a relatively small, datacenter-friendly footprint. Red Hat’s software portfolio is well positioned to highlight the unique capabilities of DGX-1 hardware and NVIDIA’s CUDA software platform.

Our mutual customers demand reliability, stability and tight integration with existing enterprise applications, as well as the security features, familiarity and industry certifications of Red Hat software, coupled with NVIDIA’s innovative hardware.

With that in mind, today's announcement of an expanded collaboration between Red Hat and NVIDIA to drive technologies and solutions for the artificial intelligence (AI), technical computing and data science markets across many industries shouldn't come as a surprise.

Beyond the headlines, let’s take a deeper look into what these announcements mean to you:

- Red Hat Enterprise Linux is now supported on the NVIDIA DGX-1. Customers can use new or existing Red Hat Enterprise Linux subscriptions to install the software on DGX-1 systems, backed by joint support from Red Hat and NVIDIA.

- Going beyond hardware certification of the NVIDIA DGX-1 servers, we’re also optimizing Red Hat Enterprise Linux performance on these systems by providing customized tuned profiles. Moreover, by using Red Hat Enterprise Linux as the operating system on DGX-1, customers can take full advantage of SELinux, which is optimized to run in the security-conscious environments often found in government, healthcare and financial verticals.

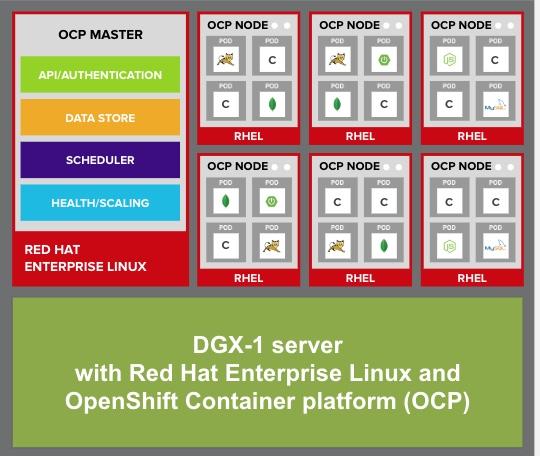

- Red Hat OpenShift Container Platform, which is built on open source innovation and industry standards, including Red Hat Enterprise Linux and Kubernetes, is now supported on DGX-1. This gives customers interested in container deployments access to the advanced GPU-acceleration capabilities of DGX systems. A direct result of community work in the Kubernetes open source project is the device plug-ins capability, which adds support for orchestrating hardware accelerators. Device plug-ins provide the foundation for GPU enablement in OpenShift.

- NVIDIA GPU Cloud (NGC) containers can now be deployed on Red Hat OpenShift Container Platform. NVIDIA uses NGC containers to deliver integrated software stacks and drivers to run GPU-optimized machine learning frameworks such as TensorFlow, Caffe2, PyTorch, MXNet, and many others. As of today, you can work with these container images on OpenShift clusters running on DGX-1 systems.
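
To make the device plug-in and NGC points above more concrete, here is a minimal sketch of what asking the scheduler for GPUs can look like. It builds a Pod manifest as a plain Python dictionary and prints it as JSON. It assumes the NVIDIA device plug-in is installed on the cluster and advertises GPUs under the extended resource name `nvidia.com/gpu`. The pod name, the `nvidia-smi` command and the image reference (including its `<tag>` placeholder) are illustrative and do not come from the announcement.

```python
import json

# Extended resource name advertised by the NVIDIA device plug-in (assumed to be installed).
GPU_RESOURCE = "nvidia.com/gpu"


def gpu_pod_manifest(name, image, gpus=1, command=None):
    """Build a Pod manifest that requests `gpus` whole GPUs for one container."""
    if gpus < 1:
        raise ValueError("gpus must be a positive integer")
    container = {
        "name": name,
        "image": image,
        # Extended resources such as GPUs are requested under `limits`.
        "resources": {"limits": {GPU_RESOURCE: gpus}},
    }
    if command:
        container["command"] = list(command)
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {"restartPolicy": "Never", "containers": [container]},
    }


if __name__ == "__main__":
    # Hypothetical values: substitute an NGC image and tag you actually have access to.
    manifest = gpu_pod_manifest(
        "gpu-smoke-test",
        "nvcr.io/nvidia/tensorflow:<tag>",
        gpus=2,
        command=["nvidia-smi"],
    )
    print(json.dumps(manifest, indent=2))
```

The printed JSON can be saved to a file and submitted with `oc create -f` or `kubectl create -f`. The scheduler then places the pod only on a node whose device plug-in reports enough free GPUs.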

As far back as two years ago, Red Hat and NVIDIA engaged in the upstream Kubernetes Resource Management Working Group to broaden support for performance-sensitive workloads such as machine and deep learning, low-latency networking, and technical computing. Our mutual customers can benefit from this engineering engagement, as their technical requirements and feature requests have a better chance of being incorporated into this popular container orchestration platform. Many of the features initially scoped in upstream Kubernetes have landed in OpenShift Container Platform, including proof-of-concept use of GPUs with Device Plugins and creation of GPU-accelerated SQL queries with PostgreSQL & PG-Strom.

NVIDIA and Red Hat have been collaborating in the upstream Linux kernel community on Heterogeneous Memory Management. In addition to traditional system memory, HMM allows GPU memory to be used directly by the Linux kernel, and it improves performance by avoiding data copies between main memory and GPU memory. Our customers could benefit greatly from simplified development of GPU applications: data can be copied directly to GPU memory using familiar operating system APIs (such as those found in glibc) rather than dedicated driver APIs.

To support features such as virtual GPU, NVIDIA has been working with Red Hat and others in the upstream Linux community to enable support for the mediated device framework (mdev). The framework manages device driver calls, enabling applications to take advantage of the underlying hardware. NVIDIA GPUs are one of the device types targeted by mdev.
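
As a rough illustration of how mdev surfaces hardware to the rest of the system, the sketch below reads the sysfs layout described in the kernel's VFIO mediated device documentation. Each mdev-capable parent device appears under `/sys/class/mdev_bus/`, and each type it supports has an `available_instances` file. The function name `list_mdev_types` and its `sysfs_root` parameter are hypothetical, added so the code can be pointed at a test directory. The type names that appear depend entirely on the vendor driver in use.

```python
import os


def list_mdev_types(sysfs_root="/sys"):
    """Return {parent_device: {type_id: available_instances or None}}.

    An empty dict means no mdev-capable parent device is registered
    (or the mdev framework is not loaded).
    """
    bus_dir = os.path.join(sysfs_root, "class", "mdev_bus")
    result = {}
    if not os.path.isdir(bus_dir):
        return result
    for parent in sorted(os.listdir(bus_dir)):
        types_dir = os.path.join(bus_dir, parent, "mdev_supported_types")
        if not os.path.isdir(types_dir):
            continue
        types = {}
        for type_id in sorted(os.listdir(types_dir)):
            path = os.path.join(types_dir, type_id, "available_instances")
            try:
                with open(path) as handle:
                    types[type_id] = int(handle.read().strip())
            except (OSError, ValueError):
                # Attribute missing or unreadable: record the type without a count.
                types[type_id] = None
        result[parent] = types
    return result


if __name__ == "__main__":
    for parent, types in list_mdev_types().items():
        for type_id, free in types.items():
            print(f"{parent}: {type_id} (available instances: {free})")
```

On a host without mdev-capable devices the script prints nothing. On a host with a vGPU-capable driver loaded, each line corresponds to a virtual device type that could be instantiated and handed to a virtual machine.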

Today's announcements, along with a proven track record of successful upstream work across multiple projects, should instill confidence in our mutual customers to continue to rely on Red Hat and NVIDIA for their next generation of workloads and optimized hardware.

To learn more about how Red Hat and NVIDIA align on open source solutions to fuel emerging workloads, visit Red Hat (booth #44) at the GPU Technology Conference (GTC) in Washington, D.C., on October 23-24, 2018. At the event, Red Hat and NVIDIA will also jointly present the session “Best practices for deploying Red Hat platforms on DGX systems in datacenters” on Tuesday, October 23, 3:30 p.m. - 4:20 p.m.

About the author

A 20+ year tech industry veteran, Jeremy is a Distinguished Engineer within the Red Hat OpenShift AI product group, building Red Hat's AI/ML and open source strategy. His role involves working with engineering and product leaders across the company to devise a strategy that will deliver a sustainable open source, enterprise software business around artificial intelligence and machine learning.