Why network bound disk encryption is important

It's hard to overstate the importance of data security. Many organizations using RHEL are storing sensitive information, ranging from medical and financial information all the way up to governments storing national security related information.

When storing any kind of sensitive information, you should consider disk encryption to protect the data. Disk encryption can help keep information secure in the event that a storage device falls into the wrong hands. The most obvious way this could happen would be if a laptop or other hardware is lost or stolen. However, there are many other potential ways you might lose control of a storage device.

Perhaps you have a storage device that has a predictive failure, and you have a hardware vendor come in and replace the storage device. Is it possible that the old storage device that leaves your control ends up in the wrong hands? When servers in your datacenter reach the end of their life, what happens to their storage devices? How many people have physical access to your datacenter or other locations where storage devices exist?

Blockers to encryption

RHEL has offered the ability to encrypt disks for many years, but the need to manually decrypt a device has been a limiting factor, particularly on the root filesystem device. If the root filesystem is encrypted, your system will not boot until you manually connect to the console and type the encryption passphrase to unlock the root filesystem device. If you are working with hundreds or thousands of systems, this is simply not practical to do each time the systems are rebooted.

This is why Red Hat offers the network bound disk encryption (NBDE) functionality, which can automatically unlock volumes by utilizing one or more network servers (referred to as Tang servers).

At a high level, if your RHEL systems (using the Clevis client) can establish a network connection to a specified number of these Tang servers, they can automatically unlock encrypted volumes, including the root filesystem volume. These Tang servers should be secured within your internal network so that if the encrypted storage device is lost, stolen, or otherwise removed from your environment, there would no longer be access to your Tang servers for automatic unlocking.

Unlock the power of encryption with RHEL System Roles

RHEL System Roles are a collection of Ansible roles and modules that are included in RHEL to help provide consistent workflows and streamline the execution of manual tasks. The NBDE Client and NBDE Server System Roles help you implement NBDE across your RHEL environment in an automated manner.

Note that the Clevis client also supports binding LUKS automatic decryption to a Trusted Platform Module (TPM). However, the NBDE Client System Role does not currently support this feature, and it will not be covered in this blog.

If you aren’t already familiar with NBDE, please refer to the RHEL documentation on configuring automated unlocking of encrypted volumes using policy-based decryption.

In the demo environment, I have several existing RHEL 8 and RHEL 7 clients that already have a LUKS encrypted root volume, and by the end of the post I’ll have implemented NBDE in the environment using RHEL System Roles.

In a future post, I’ll cover using the roles with a more advanced scenario that utilizes multiple Tang servers for high availability, how to automate backing up the Tang keys, and how to automate rotating the Tang keys.

Environment overview

In my example environment, I have a control node system named controlnode running RHEL 8 and five managed nodes:

- Two RHEL 8 systems (rhel8-server1 and rhel8-server2).
- Two RHEL 7 systems (rhel7-server1 and rhel7-server2).
- A RHEL 8 system that I would like to be the Tang server (tang1).

The four RHEL 8 and RHEL 7 systems all have encrypted root volumes. Whenever I reboot these systems, they pause at boot, and I must manually type in the encryption passphrase for them to continue booting.

I would like to use the NBDE client and server system roles to configure Tang on the tang1 server, and configure each of the four RHEL 8 and RHEL 7 systems with the Clevis client, so that they automatically connect to the Tang system at boot and unlock the root volume without manual user intervention.

Each of these systems is configured to use DHCP. The NBDE client System Role does not currently support systems with static IP addresses (see BZ 1985022 for more information).

I’ve already set up an Ansible service account on the five servers, named ansible, and have SSH key authentication set up so that the ansible account on controlnode can log in to each of the systems. In addition, the Ansible service account has been configured with access to the root account via sudo on each host. I’ve also installed the rhel-system-roles and ansible packages on controlnode. For more information on these tasks, refer to the Introduction to RHEL System Roles post.

In this environment, I’m using a RHEL 8 control node, but you can also use Ansible Tower as your RHEL system role control node. Ansible Tower provides many advanced automation features and capabilities that are not available when using a RHEL control node. For more information on Ansible Tower and its functionality, refer to this overview page.

Defining the inventory file and role variables

I need to define an Ansible inventory to list and group the hosts that I want the NBDE system roles to configure.

From the controlnode system, the first step is to create a new directory structure:

[ansible@controlnode ~]$ mkdir -p nbde/group_vars

These directories will be used as follows:

- The nbde directory will contain the playbook and the inventory file.
- The nbde/group_vars directory will define Ansible group variables that apply to the groups of hosts defined in the inventory file.

I’ll create the main inventory file at nbde/inventory.yml with the following content:

    all:
      children:
        nbde-servers:
          hosts:
            tang1:
        nbde-clients:
          hosts:
            rhel8-server1:
            rhel8-server2:
            rhel7-server1:
            rhel7-server2:

This inventory lists the five hosts, and groups them into two groups:

- The nbde-servers group contains the tang1 host.
- The nbde-clients group contains the rhel8-server1, rhel8-server2, rhel7-server1, and rhel7-server2 hosts.

If using Ansible Tower as your control node, this inventory can be imported into Red Hat Ansible Automation Platform via an SCM project (for example, GitHub or GitLab) or by using the awx-manage utility, as specified in the documentation.

Next, I’ll define the role variables that will control the behavior of the NBDE client and server roles when they run. The README.md files for both of these roles, available at /usr/share/doc/rhel-system-roles/nbde_client/README.md (NBDE client role) and /usr/share/doc/rhel-system-roles/nbde_server/README.md (NBDE server role), contain important information about the roles, including a list of available role variables and how to use them.

One of the variables I’ll define is the encryption_password variable, which is the password that the role can use to unlock the existing LUKS device that will be configured as an NBDE Clevis client. You should use Ansible Vault to encrypt the value of this variable so that it is not stored in plain text.

I can generate an encrypted string for this variable with the following command:

$ ansible-vault encrypt_string 'your_luks_password' --name "encryption_password"

Replace your_luks_password with the password you currently have defined on your encrypted LUKS volumes. The “encryption_password” at the end of the line defines the Ansible variable name, and should not be changed.

When this ansible-vault command is run, it will prompt you for a new vault password. This is the password you’ll need to use each time you run the playbook so that the variable can be decrypted. After you type a vault password, and type it again to confirm, the encrypted variable will be shown in the output, for example:

    encryption_password: !vault |
              $ANSIBLE_VAULT;1.1;AES256
              33333566373238613734663561306663616237333463633834623461356537346233613066633732
              6439363966303265373038666233326536636133376338380a383965646637393539393033383838
              38663635623733346566346230643137306532383364343133343639336538303034323639396335
              3130356365396334360a383132326237303563626238333062633735306165353334306430353761
              62623539643033643266663236333332636633333262336332386538386139356335

Next, I’ll define variables for the systems listed in the nbde-clients inventory group by creating a file at nbde/group_vars/nbde-clients.yml with the following content:

    nbde_client_bindings:
      - device: /dev/vda2
        encryption_password: !vault |
          $ANSIBLE_VAULT;1.1;AES256
          33333566373238613734663561306663616237333463633834623461356537346233613066633732
          6439363966303265373038666233326536636133376338380a383965646637393539393033383838
          38663635623733346566346230643137306532383364343133343639336538303034323639396335
          3130356365396334360a383132326237303563626238333062633735306165353334306430353761
          62623539643033643266663236333332636633333262336332386538386139356335
        servers:
          - http://tang1.example.com

The nbde_client_bindings variable is a list of dictionaries, one per device to bind; here it contains a single entry with several keys. The device key defines the existing encrypted device on each host (/dev/vda2) that the role will bind with Clevis. The encryption_password key, as previously discussed, contains the current LUKS password, encrypted via Ansible Vault. Finally, the servers key defines the list of Tang servers to use. In this example, we are using a single Tang server, http://tang1.example.com.
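Because servers is a list, the same binding structure can name more than one Tang server. The following is a minimal sketch and is not used in this demo: tang2.example.com is a hypothetical second server, luks_password is a hypothetical variable standing in for the vault-encrypted value shown above, and threshold is the key described in the nbde_client README for controlling how many of the listed servers must respond before the device is unlocked.

```yaml
# nbde/group_vars/nbde-clients.yml (illustrative variant, not used in this demo)
nbde_client_bindings:
  - device: /dev/vda2
    # Hypothetical variable; it would hold the vault-encrypted LUKS password.
    encryption_password: "{{ luks_password }}"
    # How many of the listed servers must respond to unlock the device.
    threshold: 1
    servers:
      - http://tang1.example.com
      # Hypothetical second Tang server, shown only for illustration.
      - http://tang2.example.com
```

With a threshold of 1, either server alone would be enough to unlock the volume at boot. Check the role README on your control node before relying on this layout.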

For the NBDE server role, I will use the default role variable values, so it is unnecessary to define any variables for it.

Creating the playbook

The next step is creating the playbook file at nbde/nbde.yml with the following content:

    - name: Open firewall for Tang
      hosts: nbde-servers
      tasks:
        - firewalld:
            port: 80/tcp
            permanent: yes
            immediate: yes
            state: enabled

    - name: Deploy NBDE Tang servers
      hosts: nbde-servers
      roles:
        - rhel-system-roles.nbde_server

    - name: Create /etc/dracut.conf.d/nbde_client.conf
      hosts: nbde-clients
      tasks:
        - copy:
            content: 'kernel_cmdline="rd.neednet=1"'
            dest: /etc/dracut.conf.d/nbde_client.conf
            owner: root
            mode: u=rw

    - name: Deploy NBDE Clevis clients
      hosts: nbde-clients
      roles:
        - rhel-system-roles.nbde_client

The first play, Open firewall for Tang, runs on the nbde-servers group (which contains just the tang1 server) and opens TCP port 80 in the firewall, which is necessary for the Clevis clients to connect to the Tang server.

The second play, Deploy NBDE Tang servers, also runs on the nbde-servers group. It calls the nbde_server system role, which installs and configures the Tang server.

The third play, Create /etc/dracut.conf.d/nbde_client.conf, runs on hosts in the nbde-clients group (which contains the four RHEL 8 and RHEL 7 clients). It is needed due to recent RHEL 8 changes related to Clevis and its dracut modules. For more information, see this issue in the upstream Linux System Roles project.

The final play, Deploy NBDE Clevis clients, also runs on hosts in the nbde-clients group. It calls the nbde_client system role, which installs and configures the Clevis client using the role variables previously defined in the nbde/group_vars/nbde-clients.yml file.

If you are using Ansible Tower as your control node, you can import this Ansible playbook into Ansible Automation Platform by creating a Project, following the documentation provided here. It is very common to use Git repos to store Ansible playbooks. Ansible Automation Platform stores automation in units called Jobs which contain the playbook, credentials and inventory. Create a Job Template following the documentation here.

Running the playbook

At this point, everything is in place, and I’m ready to run the playbook. If you are using Ansible Tower as your control node, you can launch the job from the Tower Web interface. For this demonstration I’m using a RHEL control node and will run the playbook from the command line. I’ll use the cd command to move into the nbde directory, and then use the ansible-playbook command to run the playbook.

    [ansible@controlnode ~]$ cd nbde
    [ansible@controlnode nbde]$ ansible-playbook nbde.yml -b -i inventory.yml --ask-vault-pass

I specify that the nbde.yml playbook should be run, that it should escalate to root (the -b flag), and that the inventory.yml file should be used as my Ansible inventory (the -i flag). I also specify the --ask-vault-pass argument, which causes ansible-playbook to prompt me for the Ansible Vault password used to decrypt the previously encrypted encryption_password variable.
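If you would rather not pass -b on every run, privilege escalation can also be declared as a group variable. The sketch below assumes a new file, nbde/group_vars/all.yml, which is not part of the setup described in this post. ansible_user and ansible_become are standard Ansible connection variables, and the values simply mirror the ansible service account described earlier.

```yaml
# nbde/group_vars/all.yml (hypothetical file; applies to every host in the inventory)
# Connect as the ansible service account described in the environment overview.
ansible_user: ansible
# Escalate to root via sudo for all tasks, the same effect as passing -b.
ansible_become: true
```

With a file like this in place, the command would shorten to ansible-playbook nbde.yml -i inventory.yml --ask-vault-pass.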

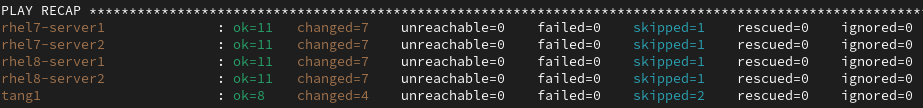

After the playbook completes, I verified that there were no failed tasks:

Validating the configuration

To validate the Tang server is active, I’ll run systemctl status tangd.socket on the tang1 server:

    $ ssh tang1 sudo systemctl status tangd.socket
    ● tangd.socket - Tang Server socket
       Loaded: loaded (/usr/lib/systemd/system/tangd.socket; enabled; vendor preset: disabled)
       Active: active (listening) since Wed 2021-07-28 13:54:01 MDT; 1min 21s ago
       Listen: [::]:80 (Stream)
     Accepted: 0; Connected: 0;
        Tasks: 0 (limit: 12288)
       Memory: 0B
       CGroup: /system.slice/tangd.socket

    Jul 28 13:54:01 tang1.example.com systemd[1]: Listening on Tang Server socket.

The tangd.socket is active on the tang1 server, so I’ll check the status of Clevis on one of the RHEL clients:

    $ ssh rhel8-server1 sudo clevis luks list -d /dev/vda2
    1: tang '{"url":"http://tang1.example.com"}'

This output indicates that the /dev/vda2 device is bound to the tang1 server. Note that the clevis luks list command is not available on RHEL 7.
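The manual checks above could also be expressed as a small verification playbook. This is a sketch under a few assumptions: the file name nbde/verify.yml is hypothetical, the device path and Tang URL are the ones used in this post, /adv is the path where Tang serves its key advertisement, and the clevis luks list check is limited to RHEL 8 hosts because the command is not available on RHEL 7.

```yaml
# nbde/verify.yml (hypothetical file name)
- name: Verify the Tang socket is active
  hosts: nbde-servers
  tasks:
    - name: Check tangd.socket (the task fails if the unit is not active)
      command: systemctl is-active tangd.socket
      changed_when: false

- name: Verify Clevis clients
  hosts: nbde-clients
  tasks:
    - name: Confirm each client can reach the Tang advertisement endpoint
      uri:
        url: http://tang1.example.com/adv

    - name: List Clevis bindings (RHEL 8 only)
      command: clevis luks list -d /dev/vda2
      register: clevis_bindings
      changed_when: false
      when: ansible_distribution_major_version == "8"

    - name: Confirm the device is bound to tang1
      assert:
        that:
          - "'tang1.example.com' in clevis_bindings.stdout"
      when: ansible_distribution_major_version == "8"
```

It would be run like the main playbook, with the same -b, -i, and --ask-vault-pass arguments. A failed task points at the host and check that needs attention.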

As a final test, I’ll reboot the four RHEL 8 and RHEL 7 hosts and validate that they boot up with no manual intervention.

The hosts boot up and pause at the LUKS passphrase prompt. However, at this point the system is initiating a connection to the Tang server in the background, and after around five to ten seconds, the system continues to boot with no manual intervention.

If the Tang server were unreachable, I could still manually type the original LUKS passphrase to unlock the device and boot up the system.

Conclusion

The NBDE Client and NBDE Server System Roles can help you quickly and easily implement Network Bound Disk Encryption across your RHEL environment.

This introduction covered automating the deployment of a simple NBDE implementation using a single Tang server. In a future blog, I’ll cover how to automate the deployment of three Tang servers for high availability, how to automate backing up the Tang keys, and how to automate rotating the Tang keys.

We offer many RHEL system roles that can help automate other important aspects of your RHEL environment. To explore additional roles, review the list of available RHEL System Roles and start managing your RHEL servers in a more efficient, consistent and automated manner today.

About the author

Brian Smith is a Product Manager at Red Hat focused on RHEL automation and management. He has been at Red Hat since 2018, previously working with Public Sector customers as a Technical Account Manager (TAM).