The Red Hat Blog

Application developers, architects, and operations teams should aim to optimize their applications and services to minimize cloud computing infrastructure costs. Several emerging open source technologies can contribute significantly to this objective, and we’ll highlight some of them in this post.

Take Quarkus, for example

Optimizing Java code using Quarkus (an open source, Kubernetes-native Java framework) can help reduce runtime memory requirements by an order of magnitude while dropping initial startup times from seconds to milliseconds.

Short startup times reduce infrastructure usage by making it practical to scale down significantly when an application or process is idle. Long startup times, by contrast, force an application or process to stay continuously “hot,” executing in memory and consuming resources even when idle.
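A little arithmetic makes this trade-off concrete. The Python sketch below assumes a single service with a fixed memory footprint per instance that is busy for only part of the day. The function names, the 200 ms latency budget, and the startup times are hypothetical illustrations. They are not measurements of Quarkus or of any other framework.

```python
# A minimal sketch, assuming one service with a fixed per-instance memory
# footprint that is busy only part of each day. All numbers are hypothetical.

def can_scale_to_idle(startup_ms: float, latency_budget_ms: float) -> bool:
    """Shutting down when idle is only practical if a cold start fits within
    the latency budget of the first request that wakes the service."""
    return startup_ms <= latency_budget_ms


def daily_memory_gb_hours(memory_gb: float, busy_hours: float,
                          scales_to_idle: bool) -> float:
    """Memory held per day, in GB-hours: an always-hot instance holds it for
    24 hours, while one that can be shut down holds it only while busy."""
    hours_resident = busy_hours if scales_to_idle else 24.0
    return memory_gb * hours_resident


# Hypothetical service: 0.5 GB per instance, busy 6 hours a day,
# first request must be answered within 200 ms.
slow_start = can_scale_to_idle(startup_ms=4000, latency_budget_ms=200)  # False
fast_start = can_scale_to_idle(startup_ms=50, latency_budget_ms=200)    # True
print(daily_memory_gb_hours(0.5, 6, slow_start))  # 12.0
print(daily_memory_gb_hours(0.5, 6, fast_start))  # 3.0
```

Under these assumed numbers, the fast-starting service holds a quarter of the memory-hours of the always-hot one, because it is resident only during its 6 busy hours.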

The IDC Quarkus Lab Validation report offers a summary of the cost savings and other key benefits associated with adopting Quarkus, compared with another popular Java framework. The study finds that Quarkus can save up to 64% of cloud memory utilization when running in native mode.
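A smaller footprint matters on fixed hardware because it raises instance density. The sketch below converts a memory reduction into the number of instances that fit on one node. Only the 64% figure comes from the report, and that figure is an upper bound. The 500 MB baseline footprint and the 8 GiB node size are hypothetical, and platform overhead is ignored.

```python
# Back-of-the-envelope density sketch. Only the 64% reduction comes from the
# report (as an upper bound); footprint and node size are hypothetical.

def instances_per_node(node_memory_mb: float, instance_memory_mb: float) -> int:
    # Only whole instances fit; OS and platform overhead are ignored here.
    return int(node_memory_mb // instance_memory_mb)


baseline_mb = 500.0                        # hypothetical per-instance footprint
native_mb = baseline_mb * (100 - 64) / 100  # 64% less memory: 180 MB
node_mb = 8 * 1024                         # hypothetical 8 GiB node

print(instances_per_node(node_mb, baseline_mb))  # 16
print(instances_per_node(node_mb, native_mb))    # 45
```

With these assumptions the node fits 45 instances instead of 16, roughly 2.8 times as many.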

Edge Computing Necessitates Infrastructure Optimization

Optimizing applications in order to minimize infrastructure usage becomes even more important as we venture into edge computing.


In the traditional cloud computing model, the “cloud” is an ocean of thousands of servers at a few locations, providing nearly unlimited computing capacity for an application. Should utilization at any of those centralized locations approach capacity, it is relatively economical to install additional servers at the site.


The edge computing model turns the traditional cloud computing model on its head. Rather than thousands of servers at a few central locations, edge computing implies a few servers at each of thousands of network edge locations. Should utilization at any of those thousands of edge sites approach capacity, physically visiting the site to add a few more servers is not economical. In addition, space, power, and cooling restrictions at many edge locations—particularly telco edge locations—may make it impossible to add capacity.

Fortunately, additional emerging open source technologies make it possible to optimize edge infrastructure utilization exceptionally well.

Optimizing Cloud Utilization With Open Source Technologies

Containers

For a number of years, the virtual machine (VM) has been the primary technology used to improve server utilization. The VM achieves this by partitioning a physical server into multiple virtual servers, so that a single physical machine can be used more efficiently. However, the VM is now being challenged by a technology that offers not only superior server optimization but also many other benefits: containers.

Unlike VMs, containers share the server’s operating system (OS), with each application isolated in its own container. Sharing the OS eliminates the overhead of running a separate guest OS in every VM and gives the application more direct access to the underlying server hardware.

In addition, a new container can be instantiated much more quickly than a VM. The superior infrastructure utilization efficiency of the container is contributing to its rapid adoption. Edge computing applications, for which efficiency is paramount, may eschew VMs in favor of containers.

Serverless (Knative)

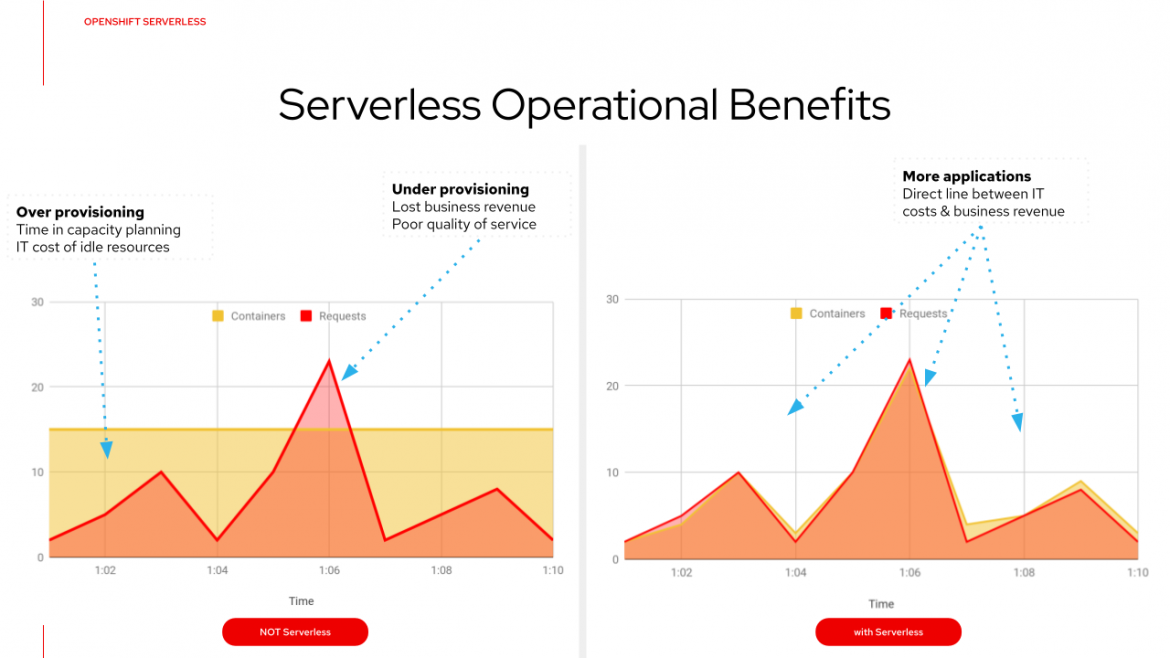

Another significant technology for optimizing cloud infrastructure is “Serverless,” as exemplified by the Knative open source project. There are still servers in Serverless, but a cloud provider or platform handles the routine work of provisioning, maintaining, and scaling the server infrastructure. Developers simply package their code in containers for deployment, without having to manage servers or worry about capacity scaling.

Serverless applications are “event driven,” which means they follow a very simple pattern: an event occurs and triggers an application to execute. When running on Kubernetes, an event triggers the instantiation of one or more associated containers, and the application residing in those containers then processes the event. Once the triggered application has been idle for a configurable period, the Serverless platform scales the number of containers back down to zero.
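The scale-up and scale-down cycle described above can be sketched as a tiny state machine. This is a toy model, not Knative's autoscaler. It assumes at most one replica and a fixed idle window, set by the hypothetical `idle_window_s` parameter. It ignores request concurrency, averaging windows, and cold-start latency.

```python
# Toy model of event-driven scale-to-zero (not Knative's actual autoscaler).
from dataclasses import dataclass
from typing import Optional


@dataclass
class ScaleToZeroService:
    """Scale 0 -> 1 replica on an event, and back to 0 after an idle window."""
    idle_window_s: float                  # hypothetical idle period, in seconds
    replicas: int = 0
    last_event_s: Optional[float] = None

    def on_event(self, now_s: float) -> None:
        # An event arrives: start a container if none is running (a cold start).
        if self.replicas == 0:
            self.replicas = 1
        self.last_event_s = now_s

    def tick(self, now_s: float) -> None:
        # Periodic check: scale to zero once idle for at least the window.
        if self.replicas > 0 and self.last_event_s is not None:
            if now_s - self.last_event_s >= self.idle_window_s:
                self.replicas = 0


svc = ScaleToZeroService(idle_window_s=60)
svc.on_event(0)     # replicas: 1
svc.tick(30)        # still 1, idle for only 30 s
svc.tick(60)        # 0, idle window reached
svc.on_event(100)   # 1 again on the next event
```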

From an infrastructure utilization perspective, the most important aspect of Serverless is that it enables an application to scale to zero, using essentially no infrastructure when idle.

Serverless apps are deployed in containers that can launch very quickly when called. (VMs can take minutes to boot, which makes them poorly suited to Serverless applications.) Serverless offerings from public cloud providers are usually metered on demand, based on the events or execution time actually consumed. As a result, a Serverless application that is sitting idle incurs essentially no compute cost.

Modern applications are typically built as separate processes or microservices, each deployed in its own container and scaled up or down independently as needed. This fine-grained scaling of individual application processes further optimizes infrastructure utilization and costs. Deploying containerized application processes as Serverless workloads lets each process consume only the minimal cloud resources it requires at any given moment: the ultimate in efficiency!
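Independent per-service scaling can be illustrated with a simplified concurrency-based rule. Each microservice gets just enough replicas to keep in-flight requests per replica at or below a target. The rule is loosely modeled on the concurrency target idea behind Knative's autoscaler but omits its averaging windows and other behavior. The service names, load figures, and target of 100 are hypothetical.

```python
# Simplified per-service autoscaling sketch (not Knative's full algorithm).
import math


def desired_replicas(in_flight: int, target_per_replica: int) -> int:
    # No in-flight requests means no replicas: the service scales to zero.
    if in_flight <= 0:
        return 0
    # Otherwise, enough replicas that none exceeds the target concurrency.
    return math.ceil(in_flight / target_per_replica)


# Hypothetical microservices and their current in-flight request counts.
load = {"ingest": 250, "thumbnailer": 0, "billing": 7}
plan = {svc: desired_replicas(n, target_per_replica=100) for svc, n in load.items()}
print(plan)  # {'ingest': 3, 'thumbnailer': 0, 'billing': 1}
```

Because each service is sized independently, the idle `thumbnailer` consumes nothing while `ingest` scales out.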

Knative is an open source implementation of the Serverless model. Knative allows serverless applications to be deployed and run on any Kubernetes platform, including the Red Hat OpenShift Container Platform. Red Hat OpenShift Serverless is the Red Hat supported distribution of Knative on OpenShift. It provides an enterprise-grade serverless platform which brings portability and consistency across hybrid and multi-cloud environments.

Serverless apps consume only the cloud resources required to meet demand, providing the ultimate in resource utilization efficiency.


Virtual Application Networks (Skupper)

Another way to reduce edge infrastructure usage is to deploy only the smallest possible portion of application code to the edge site, with the remainder of the application residing in a centralized cloud. Most internet of things (IoT) applications, for example, involve data filtering or artificial intelligence (AI) processing at the edge, forwarding only the most critical video or data streams to the cloud.

With containerization, the data filtering/AI processing component of the IoT application can be deployed to the edge site as a containerized microservice, independent of other application components. Deploying only the required application components to the edge can help optimize edge infrastructure utilization.
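Here is a minimal sketch of such an edge-side component. It assumes readings arrive as `(sensor_id, score)` pairs and that anything above a threshold counts as critical. The threshold and the `forward` callback are hypothetical stand-ins for real AI inference and for the uplink to the centralized cloud.

```python
# Edge-side filtering sketch: forward only critical readings to the cloud.
# The threshold and forward() callback are hypothetical stand-ins.
from typing import Callable, Iterable, Tuple

Reading = Tuple[str, float]   # (sensor_id, score)


def filter_at_edge(readings: Iterable[Reading], threshold: float,
                   forward: Callable[[Reading], None]) -> int:
    """Forward readings above the threshold; return how many were dropped."""
    dropped = 0
    for reading in readings:
        if reading[1] > threshold:
            forward(reading)   # send upstream to the centralized cloud
        else:
            dropped += 1       # discarded locally, never leaves the edge
    return dropped


sent = []
dropped = filter_at_edge([("cam-1", 0.2), ("cam-2", 0.9), ("cam-1", 0.95)],
                         threshold=0.8, forward=sent.append)
print(sent, dropped)  # [('cam-2', 0.9), ('cam-1', 0.95)] 1
```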

But how will an application subcomponent or microservice, which may be deployed at thousands of edge sites, communicate securely with the other microservices that make up the application? Message brokers such as Kafka or AMQ have traditionally been used for asynchronous communication between microservices. However, setting up, maintaining, and securing a message broker is challenging and costly, particularly when scaling to thousands of edge computing sites.

Virtual Application Networks (VANs) are emerging as a preferred method for intra-application communication across multiple sites. A VAN connects an application’s distributed processes and services into a virtual network so that they can communicate with each other as if they were all running at the same site. Because VANs operate at Layer 7 (the application layer), they can provide connectivity across multiple cloud locations regardless of the actual network configuration between the sites.

Layer 7 application services routers form the backbone of a VAN in the same way that conventional network routers form the backbone of a VPN. However, instead of routing IP packets between network endpoints, Layer 7 application services routers route messages between application endpoints using the Advanced Message Queuing Protocol (AMQP), an open standard that Red Hat has fully supported for many years.
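A toy router can illustrate routing by application address rather than by IP address. The `AppRouter` class below is hypothetical and is neither Skupper's router nor an AMQP implementation. It only shows how a message addressed to a service name is either delivered locally or forwarded to a linked router that knows the service.

```python
# Toy Layer 7 router: routes by service address, not by IP (hypothetical).
from typing import Callable, Dict


class AppRouter:
    def __init__(self, name: str) -> None:
        self.name = name
        self.local: Dict[str, Callable[[str], str]] = {}   # address -> handler
        self.remote: Dict[str, "AppRouter"] = {}           # address -> next hop

    def expose(self, address: str, handler: Callable[[str], str]) -> None:
        # Register a service reachable at this router.
        self.local[address] = handler

    def learn(self, address: str, via: "AppRouter") -> None:
        # Record that a linked router can reach this address.
        self.remote[address] = via

    def send(self, address: str, body: str) -> str:
        if address in self.local:
            return self.local[address](body)              # deliver locally
        if address in self.remote:
            return self.remote[address].send(address, body)  # forward over the link
        raise LookupError(f"{self.name}: no route to {address!r}")


cloud = AppRouter("cloud")
edge = AppRouter("edge-site-1")
cloud.expose("analytics", lambda body: f"analyzed:{body}")
edge.learn("analytics", via=cloud)
print(edge.send("analytics", "frame-42"))  # analyzed:frame-42
```

The sender only needs the address `"analytics"`. Where that service runs, and how the sites are networked, is the routers' concern.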

Skupper is an open source project for creating VANs in Kubernetes. With Skupper, you can deploy a distributed application composed of microservices running in different Kubernetes clusters. In a Skupper network, each location contains a Skupper instance for each distributed application component or microservice. Once Skupper instances are linked, they continually share information about the services each instance exposes. As a result, every Skupper instance is aware of every service exposed to the Skupper network, regardless of where that service resides.
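The outcome described above, where every linked site eventually learns about every exposed service, can be modeled by repeatedly merging service tables across links until nothing changes. This is a simplified illustration, not Skupper's actual protocol. The site names, service names, and `converge` function are hypothetical.

```python
# Simplified model of service-table sharing between linked sites
# (hypothetical; not Skupper's actual protocol).
from typing import Dict, List, Set, Tuple


def converge(exposed: Dict[str, Set[str]],
             links: List[Tuple[str, str]]) -> Dict[str, Dict[str, str]]:
    """Return, for each site, a map of service name -> hosting site."""
    tables = {site: {svc: site for svc in svcs} for site, svcs in exposed.items()}
    changed = True
    while changed:                         # repeat until no table grows
        changed = False
        for a, b in links:                 # links are bidirectional
            for src, dst in ((a, b), (b, a)):
                for svc, host in tables[src].items():
                    if svc not in tables[dst]:
                        tables[dst][svc] = host
                        changed = True
    return tables


exposed = {"cloud": {"db", "analytics"}, "edge-1": {"filter"}, "edge-2": set()}
tables = converge(exposed, [("cloud", "edge-1"), ("cloud", "edge-2")])
print(sorted(tables["edge-2"].items()))
# [('analytics', 'cloud'), ('db', 'cloud'), ('filter', 'edge-1')]
```

Note that `edge-2` learns about `filter` even though it has no direct link to `edge-1`.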

Moving an application to the cloud raises security risks, and using multiple clouds or Kubernetes clusters multiplies those risks. Without Skupper, you must either expose your services to the public internet or adopt complex Layer 3 network controls such as VPNs, firewall rules, and access policies. Worse, Layer 3 controls do not extend easily across multiple clusters and must be duplicated for each cluster.

Skupper provides security that scales across clusters without these complex Layer 3 network controls. Connections between Skupper routers are secured with mutual Transport Layer Security (mTLS), which sets up a secure, encrypted channel across the entire Skupper VAN. The Skupper network is therefore isolated from external access, which helps guard against security risks such as lateral attacks, malware infestations, and data exfiltration.
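Mutual TLS differs from ordinary TLS in that both ends present and verify certificates. The sketch below shows this with Python's standard `ssl` module. The server context sets `verify_mode = ssl.CERT_REQUIRED`, so clients without a trusted certificate are rejected, and the client context loads its own certificate to present. Skupper generates and manages its own certificates, so the file paths are hypothetical placeholders, and nothing here reflects how Skupper itself is configured.

```python
# Generic mTLS setup with Python's ssl module (illustrative only; Skupper
# manages its own certificates). Certificate/key/CA paths are hypothetical.
import ssl
from typing import Optional


def mtls_server_context(cert: Optional[str] = None, key: Optional[str] = None,
                        ca: Optional[str] = None) -> ssl.SSLContext:
    # Server side: trust client certificates signed by the given CA.
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH, cafile=ca)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    ctx.verify_mode = ssl.CERT_REQUIRED   # the "mutual" part: clients must present a cert
    if cert and key:
        ctx.load_cert_chain(certfile=cert, keyfile=key)   # the server's own identity
    return ctx


def mtls_client_context(cert: Optional[str] = None, key: Optional[str] = None,
                        ca: Optional[str] = None) -> ssl.SSLContext:
    # Client side: server verification and hostname checks are on by default.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if cert and key:
        ctx.load_cert_chain(certfile=cert, keyfile=key)   # present the client's identity
    return ctx


server_ctx = mtls_server_context()   # real use would pass cert, key, and CA paths
client_ctx = mtls_client_context()
```

In practice, each side would pass real certificate, key, and CA paths and then wrap its sockets with `SSLContext.wrap_socket`.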

Crucially for edge computing use cases, Skupper networks use cloud resources very efficiently. While a Skupper VAN does not “scale to zero” like a Serverless application, an idle Skupper instance uses minimal cloud resources: on the order of 65 MB of RAM and almost no CPU. When active, Skupper instances scale automatically, consuming cloud resources only in proportion to the edge communications load at any given time.
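To put the idle figure in perspective, the arithmetic below compares it with the memory of a single edge node and totals it across a fleet of sites. Only the roughly 65 MB idle figure comes from the text. The 16 GiB node size and the 5,000-site fleet are hypothetical.

```python
# Idle-overhead arithmetic. Only the ~65 MB figure comes from the text;
# node size and site count are hypothetical.
IDLE_INSTANCE_MB = 65
EDGE_NODE_MB = 16 * 1024   # hypothetical 16 GiB edge server
SITES = 5000               # hypothetical number of edge sites

share_of_node = IDLE_INSTANCE_MB / EDGE_NODE_MB
fleet_total_gib = IDLE_INSTANCE_MB * SITES / 1024

print(f"{share_of_node:.2%} of each edge node")       # 0.40% of each edge node
print(f"{fleet_total_gib:.1f} GiB across all sites")   # 317.4 GiB across all sites
```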

The Ubiquitous Edge Deployment Model

A mobile device may roam into any one of thousands of telco network edge locations. Ideally, a data filtering process for an IoT device, for example, would be deployed to each of these edge locations. However, the extreme resource constraints at each of these locations make deploying a conventional application to every edge location untenable.

The open source technologies described above, however, can make it practical to deploy an application process ubiquitously, across every one of the telco edge sites, despite the resource constraints at these sites.

The ubiquitous deployment model would support the very premise of edge computing: processing data near its source in order to respond exceptionally quickly to real-world events. The open source technologies discussed in this blog post will be key to making ubiquitous edge deployment feasible and practical.

To explore further, see the IDC Quarkus Lab Validation report for more details of the analyst study.

About the author

Michael Hansen has been involved with leading-edge technologies for more than 30 years, mostly within the telecommunications industry. He specializes in the creative application of emerging technologies. He helped create Red Hat’s Open Telco model, which involves transforming telcos into open platforms for developing and delivering digital goods and services, enabling them to carve out a more significant role in today’s digital economy.