In this post I’ll explain how to deploy MongoDB and Yahoo! Cloud Serving Benchmark (YCSB) pods in a multi-tenant environment using Red Hat OpenShift Container Platform projects. I’ll then show how to measure our cluster’s ability to work with many MongoDB pods when Red Hat OpenShift Container Storage is the persistent storage layer.

All the scripts in this blog can be found in our git repository.

Yahoo! Cloud Serving Benchmark

The workload generator that we are going to use is called YCSB and it was created by researchers from Yahoo in 2010. The idea was to create a framework to check the performance of a cluster with methods that are more web, cloud and key/value-oriented vs traditional database workload tests like TPC-H. The program is Java based and has six different workloads (a-f) to use (you can read more about the different workloads in the YCSB documentation).

I’m going to use “workloadb” in my testing; it has a 70% read / 30% write I/O ratio. I’ll also load the data using “workloadb” (the load phase is the same for workloads a, b, c and d) and distribute the data using the “uniform” method (other available methods are zipfian, hotspot, sequential, exponential and latest).
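To make the workload and distribution choices concrete, here is a minimal sketch of how a YCSB load command for MongoDB could be assembled. The function name, the default values and the placeholder MongoDB URL are assumptions for illustration only; the scripts in the repository may build the command differently.

```bash
#!/usr/bin/env bash
# Sketch: assemble (but do not run) a YCSB "load" command line for MongoDB.
# build_ycsb_load_cmd and its defaults are illustrative assumptions.

build_ycsb_load_cmd() {
  local workload="${1:-workloadb}"     # core workload file, a through f
  local distribution="${2:-uniform}"   # uniform, zipfian, hotspot, sequential, exponential or latest
  local recordcount="${3:-4000000}"    # number of documents to insert
  local mongodb_url="${4:-mongodb://user:password@mongodb:27017/db}"  # placeholder URL

  echo "./bin/ycsb load mongodb -s -P workloads/${workload}" \
       "-p recordcount=${recordcount}" \
       "-p requestdistribution=${distribution}" \
       "-p mongodb.url=${mongodb_url}"
}

# Print the command that would be executed inside the YCSB pod.
build_ycsb_load_cmd workloadb uniform 4000000
```

Printing the command rather than running it makes it easy to review the parameters before pointing the load at a real database.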

OpenShift on AWS Test Environment

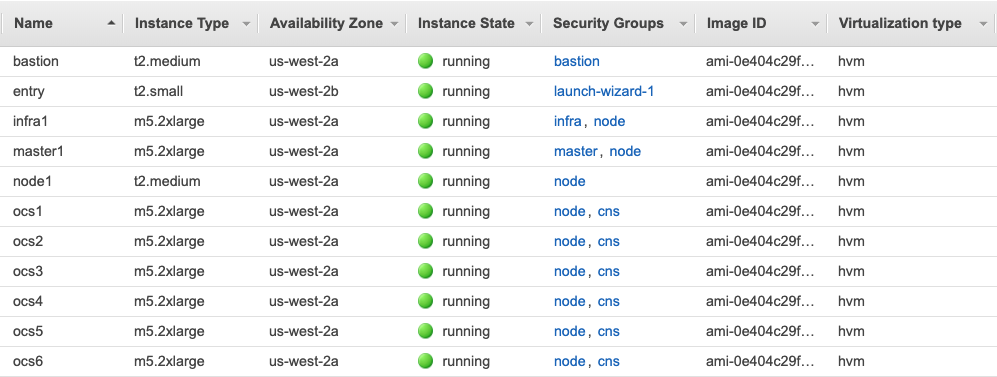

All of the posts in this blog series are using OpenShift Container Platform on an AWS setup that includes 8 EC2 instances deployed as 1 master node, 1 infrastructure node and 6 worker nodes that also run OpenShift Container Storage pods.

The 6 worker nodes are both storage providers and persistent storage consumers (MongoDB). As shown below, the OpenShift Container Storage worker nodes are m5.2xlarge instance types with 8 vCPUs, 32 GB Mem, with 3x100GB gp2 volumes attached to each node for OpenShift Container Platform, and a single 1TB gp2 volume for OpenShift Container Storage storage cluster. The cluster is deployed in the us-west-2 region using a single availability zone (AZ) us-west-2a.

The software we are using is OpenShift Container Platform/OpenShift Container Storage v3.11.

Multi-tenant MongoDB setup on OpenShift Container Platform using OpenShift Container Storage

I’m going to use the same template we exported, edited and imported back in part 1 when we showed a simple deployment of MongoDB with OpenShift Container Storage.

If you look at the end of the template, all the variables that are used are declared:

oc get template mongodb-persistent-ocs -n openshift -o yaml|sed -n '/parameters:/,$p'

The output includes some variables that we used in the previous blog post, like MONGODB_USER, MONGODB_PASSWORD, MONGODB_DATABASE and MONGODB_ADMIN_PASSWORD, but I’m more interested in MEMORY_LIMIT, VOLUME_CAPACITY and MONGODB_VERSION.

Since we are going to deploy many MongoDB instances as pods, we need to do a little math. As described above, the AWS worker instances that I use have 32GB of RAM. I will both request and limit 2GB of RAM for each MongoDB pod by using the MEMORY_LIMIT variable.

For the test I’ll run, each MongoDB database will be populated with 4 million documents, so a 10GB PVC (Persistent Volume Claim) will be created for each MongoDB pod. To achieve that I’ll use the VOLUME_CAPACITY variable. Lastly, I want to use version 3.6 of MongoDB. For this purpose, I'll use the MONGODB_VERSION variable.
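The sizing arithmetic can be sketched in a few lines of shell. The figures come from this post (30 projects, 6 workers, 2GB per MongoDB pod, 1GB per YCSB pod, 10GB per PVC); the even spread of pods across workers is an assumption, and the memory used by the OpenShift Container Storage pods themselves is not counted.

```bash
#!/usr/bin/env bash
# Sketch: per-node memory limits and total PVC capacity for the planned tenants.
projects=30          # number of MongoDB projects used in this post
workers=6            # worker nodes
node_ram_gb=32       # RAM per m5.2xlarge worker
mongo_limit_gb=2     # MEMORY_LIMIT per MongoDB pod
ycsb_limit_gb=1      # memory limit of each YCSB pod
pvc_gb=10            # VOLUME_CAPACITY per MongoDB pod

# Assumption: the scheduler spreads the pods evenly across the workers.
pods_per_node=$(( projects / workers ))
ram_per_node_gb=$(( pods_per_node * (mongo_limit_gb + ycsb_limit_gb) ))
total_pvc_gb=$(( projects * pvc_gb ))

echo "MongoDB pods per node: ${pods_per_node}"
echo "Memory limits per node: ${ram_per_node_gb}GB of ${node_ram_gb}GB"
echo "Total PVC capacity requested: ${total_pvc_gb}GB"
```

Under these assumptions each worker carries 5 MongoDB pods and 15GB of memory limits, which leaves headroom on a 32GB node for the storage pods and system services.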

Creating Projects and loading data

I’ll use two scripts from the git repository to create the MongoDB and YCSB pods and populate the database with data.

First there is the create_and_load script that basically creates a new project, creates a MongoDB pod using our template and the variables I’ve outlined above, creates the YCSB pod in the same project, and then uses the YCSB pod to communicate with the MongoDB pod to populate the data.

I’m not going to cover all of the script, but it is important to show how the function create_mongo_pod creates the new app:

    function create_mongo_pod()
    {
      oc new-app -n ${PROJECT_NAME} --name=mongodb36 --template=${OCP_TEMPLATE} \
        -p MONGODB_USER=${MONGODB_USER} \
        -p MONGODB_PASSWORD=${MONGODB_PASSWORD} \
        -p MONGODB_DATABASE=${MONGODB_DATABASE} \
        -p MONGODB_ADMIN_PASSWORD=${MONGODB_ADMIN_PASSWORD} \
        -p MEMORY_LIMIT=${MONGODB_MEMORY_LIMIT} \
        -p VOLUME_CAPACITY=${PV_SIZE} \
        -p MONGODB_VERSION=${MONGODB_VERSION}
    }

As specified before, we are using the variables to provide memory limit and PVC size.

The other important function in this Bash script is create_ycsb_pod, which as you can see below, declares a pod definition with a container I’ve built in my public repository.

The container has the minimal Java requirements as well as the minimal YCSB requirements to run with MongoDB (the tar file that comes with YCSB supports many types of databases).

    function create_ycsb_pod()
    {
    cat <<EOF | oc create -f -
    apiVersion: v1
    kind: Pod
    metadata:
      labels:
        name: ycsb
      name: ycsb-pod
      namespace: ${PROJECT_NAME}
    spec:
      containers:
      - image: docker.io/vaelinalsorna/centos7_yscb_mongo-shell:0.1
        imagePullPolicy: IfNotPresent
        name: ycsb-pod
        resources:
          limits:
            memory: 1Gi
        securityContext:
          capabilities: {}
          privileged: false
        terminationMessagePath: /dev/termination-log
      dnsPolicy: Default
      restartPolicy: OnFailure
      nodeSelector:
      serviceAccount: ""
    status: {}
    EOF
    }

As you can see, I’ve limited the YCSB pod to 1GB of RAM usage.

The other script, create_and_load-parallel, is a wrapper for create_and_load that runs it in parallel, in the background, for as many projects as needed. The variables in create_and_load-parallel are largely self-explanatory, so you can experiment with different memory requirements, PVC sizes, numbers of projects/pods and YCSB-specific variables that affect the workload type, distribution, number of records in the database and so on.
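The wrapper pattern itself is simple: start one background job per project, give each its own log file, and wait for all of them. The sketch below illustrates that pattern only; create_and_load_stub is a hypothetical stand-in for the real create_and_load script so the example runs without a cluster, and the function and variable names are not taken from the repository.

```bash
#!/usr/bin/env bash
# Sketch of a parallel wrapper. create_and_load_stub is a hypothetical
# stand-in for the real create_and_load script.
create_and_load_stub() {
  echo "creating and loading project ${1}"
}

run_in_parallel() {
  local basename="$1" count="$2" log_dir="$3" i=1
  mkdir -p "${log_dir}"
  while [ "${i}" -le "${count}" ]; do
    # One background job per project, each writing to its own log file.
    create_and_load_stub "${basename}-${i}" > "${log_dir}/${basename}-${i}.log" 2>&1 &
    i=$(( i + 1 ))
  done
  wait   # block until every background job has finished
}

demo_dir=$(mktemp -d)
run_in_parallel mongodb 3 "${demo_dir}"
ls "${demo_dir}"
```

Keeping one log file per project makes it straightforward to find which tenant failed when a run does not complete cleanly.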

In my current setup I’ve chosen to do 30 projects/MongoDB pods, so I’ll run:

$ ./create_and_load-parallel some_directory_for_log

Once the script has completed, I have 30 projects, each with a single MongoDB pod and a single YCSB pod ready to be used. (Note: execution time depends on the CPU, RAM and storage that you have, and on the number of projects to be created.)

If you want to check the database size and also check the connectivity of your YCSB pod to the MongoDB pod you can use this small bash script, check_db_size, and provide the project name:

    readonly PROJECT_NAME=${1}
    readonly MONGODB_USER=redhat
    readonly MONGODB_PASSWORD=redhat
    readonly MONGODB_DATABASE=redhatdb
    # Cluster IP of the MongoDB service in the project (skip the glusterfs services)
    readonly MONGODB_IP=$(oc get svc -n ${PROJECT_NAME} | grep -v glusterfs | grep mongodb | awk '{print $3}')

    # Run db.stats() from the YCSB pod, scaling the sizes to GB
    oc -n ${PROJECT_NAME} exec $(oc get pod -n ${PROJECT_NAME} | grep ycsb | awk '{print $1}') -it -- bash -c "mongo --eval \"db.stats(1024*1024*1024)\" ${MONGODB_IP}:27017/${MONGODB_DATABASE} -u ${MONGODB_USER} -p ${MONGODB_PASSWORD}"

The output for one of the MongoDB pods/database should look like:

    $ ./check_db_size mongodb-1
    MongoDB shell version v3.6.12
    connecting to: mongodb://172.30.251.242:27017/redhatdb?gssapiServiceName=mongodb
    Implicit session: session { "id" : UUID("3bed5e87-c464-44b8-9328-da896877d156") }
    MongoDB server version: 3.6.3
    {
        "db" : "redhatdb",
        "collections" : 1,
        "views" : 0,
        "objects" : 4000000,
        "avgObjSize" : 1167.879518,
        "dataSize" : 4.350690238177776,
        "storageSize" : 4.461406707763672,
        "numExtents" : 0,
        "indexes" : 1,
        "indexSize" : 0.21125030517578125,
        "fsUsedSize" : 5.700187683105469,
        "fsTotalSize" : 9.990234375,
        "ok" : 1
    }

The “objects” row shows that we have 4 million objects in our database, and the “storageSize” row shows the physical storage size of this database (which has only a single collection): roughly 4.5GB.
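If you want to check these values automatically rather than by eye, a small helper can pull a single field out of the db.stats() output. This is a sketch only: get_stat is a hypothetical helper, not part of the repository, and it assumes the output has one field per line as printed by the mongo shell. The sample text is a shortened copy of the output above.

```bash
#!/usr/bin/env bash
# Sketch: extract one numeric field from mongo shell db.stats() output.
# get_stat is a hypothetical helper; it reads the output on stdin.
get_stat() {
  # $1 = field name, for example objects or storageSize
  awk -F: -v key="\"$1\"" '$1 ~ key { gsub(/[ ,]/, "", $2); print $2; exit }'
}

# Shortened copy of the output shown above.
sample_stats='{
    "db" : "redhatdb",
    "objects" : 4000000,
    "storageSize" : 4.461406707763672,
    "fsTotalSize" : 9.990234375,
    "ok" : 1
}'

objects=$(printf '%s\n' "${sample_stats}" | get_stat objects)
if [ "${objects}" -eq 4000000 ]; then
  echo "object count OK: ${objects}"
else
  echo "unexpected object count: ${objects}"
fi
```

In practice the input would be the output of check_db_size for a given project rather than a hard-coded sample.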

Testing the performance of the cluster using YCSB

So now that we have YCSB data loaded, I can actually start to run some performance testing.

I’m going to use two more bash scripts from our git repository. They work in a similar way to the data-loading scripts: one script runs the YCSB job, and the other is a wrapper that runs it in parallel and in the background.

The run_workload script accepts similar parameters to the previously used create_and_load script, just without the information that was needed to create the MongoDB pod (like RAM size, MongoDB version and so on). The script has a single function (run_workload) that accepts several variables, among them the workload type (YCSB_WORKLOAD), the number of objects we have (YCSB_RECORDCOUNT), how many operations we want to perform (YCSB_OPERATIONCOUNT), how many threads to use (YCSB_THREADS) and some others.

The run_workload-parallel script not only runs run_workload, but also monitors it and will inform you when all YCSB jobs are done.
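One way to implement this kind of monitoring is to record the PID of every background job and wait on each one, counting failures along the way. The sketch below shows that approach under stated assumptions: fake_ycsb_job is a hypothetical stand-in for the real run_workload call, and the actual script may track its jobs differently.

```bash
#!/usr/bin/env bash
# Sketch: start jobs in the background, wait for each one and report the outcome.
# fake_ycsb_job is a hypothetical stand-in for the real run_workload call.
fake_ycsb_job() {
  sleep 1
}

wait_for_jobs() {
  local total="$1" pids="" failed=0 i=1 pid
  while [ "${i}" -le "${total}" ]; do
    fake_ycsb_job "${i}" &
    pids="${pids} $!"          # remember the PID of the job just started
    i=$(( i + 1 ))
  done
  for pid in ${pids}; do
    # wait returns the exit status of the job it waited for
    wait "${pid}" || failed=$(( failed + 1 ))
  done
  if [ "${failed}" -eq 0 ]; then
    echo "all ${total} jobs are done..."
  else
    echo "${failed} of ${total} jobs failed"
  fi
}

wait_for_jobs 3
```

Waiting on individual PIDs, rather than using a bare wait, lets the wrapper distinguish a clean run from one where some YCSB jobs exited with an error.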

The first part of the script contains the variables:

    readonly PROJECT_BASENAME=mongodb
    readonly NUMBER_OF_PROJECTS=30
    readonly YCSB_WORKLOAD=workloadb
    readonly YCSB_DISTRIBUTION=uniform
    readonly YCSB_RECORDCOUNT=8000000
    readonly YCSB_OPERATIONCOUNT=2000000
    readonly YCSB_THREADS=2
    readonly RUN_NAME=${1}

As you can see, I’ve chosen to run the “workloadb” core workload with 2 threads per YCSB pod, using 8 million objects and running 2 million operations. I’m running in parallel over the 30 projects that I previously created and populated with data. The script accepts a single parameter (“${1}”) that sets the log directory used to collect all the YCSB run logs.

Once all the jobs are done (you’ll see the output "all 30 jobs are done..."), the script summarizes two data points and prints them into a file named results.txt in the log directory of the run. The data points we concentrate on are the time it took to run the jobs (RunTime) and the throughput (ops/sec).

The summary also includes an average of these data points across all 30 YCSB pods, which gives a “score” for the cluster. The YCSB output has other interesting data points: the AverageLatency for each operation (read/update/cleanup), and the 95thPercentileLatency and 99thPercentileLatency metrics, which can help determine the latency capabilities of the cluster.

Here’s a sample of the summary output:

    $ cat report.txt | grep average
    average runtime(sec): 250.00
    average Throughput(ops/sec): 133.30
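A summary like this can be produced by averaging the [OVERALL] lines that YCSB prints at the end of each run. The following is a minimal sketch rather than the repository’s implementation: summarize_logs is a hypothetical function, and the two sample logs contain made-up values purely to exercise the code.

```bash
#!/usr/bin/env bash
# Sketch: average RunTime and Throughput across several YCSB log files.
# summarize_logs is a hypothetical helper; arguments are YCSB log files.
summarize_logs() {
  awk -F', ' '
    $1 == "[OVERALL]" && $2 == "RunTime(ms)"         { runtime += $3 / 1000; r++ }
    $1 == "[OVERALL]" && $2 == "Throughput(ops/sec)" { tput += $3; t++ }
    END {
      if (r > 0) printf "average runtime(sec): %.2f\n", runtime / r
      if (t > 0) printf "average Throughput(ops/sec): %.2f\n", tput / t
    }
  ' "$@"
}

# Two tiny sample logs with made-up values (not results from this post).
log_dir=$(mktemp -d)
printf '[OVERALL], RunTime(ms), 2000\n[OVERALL], Throughput(ops/sec), 500.0\n' > "${log_dir}/a.log"
printf '[OVERALL], RunTime(ms), 4000\n[OVERALL], Throughput(ops/sec), 250.0\n' > "${log_dir}/b.log"

summarize_logs "${log_dir}/a.log" "${log_dir}/b.log"
```

The same pattern extends to the latency lines (for example [READ] with AverageLatency(us)) by adding further match rules to the awk program.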

Conclusion

In this post in our MongoDB series I’ve walked through how to install MongoDB in a multi-tenant environment, how to populate MongoDB pods using YCSB and how to run performance testing for the cluster as a whole by using YCSB workloads.

In the next blog post, I will compare the results from this blog with those from an identical AWS setup where, instead of using EBS gp2 devices as our OCS devices, I’ll use EC2 instances that include AWS instance storage, and I’ll explore the corresponding difference in performance and cost.

About the author

Sagy Volkov is a former performance engineer at ScaleIO, where he initiated the performance engineering group and the ScaleIO enterprise advocates group, and architected the ScaleIO storage appliance, reporting to the CTO/founder of ScaleIO. He is now with Red Hat as a storage performance instigator concentrating on application performance (mainly database and CI/CD pipelines) and application resiliency on Rook/Ceph.

He has previously spoken at Cloud Native Storage day (CNS), DevConf 2020, EMC World and the Red Hat booth at KubeCon.
