The Red Hat Blog

Availability zones (AZs) in OpenStack are often misunderstood, and cloud operators define them in different ways. By definition, an availability zone is a logical partition of compute (Nova), block storage (Cinder) or networking (Neutron) services. However, because availability zones were implemented independently in each project, configuring them differs considerably from one service to another. We’re going to tackle AZs and explain how to use them.

In this three-part mini-series we will focus on:

- Part I - Introduction to availability zones, their importance, and usability within OpenShift

- Part II - Using multiple Nova and Cinder availability zones when running OpenShift on OpenStack

- Part III - Using multiple Nova availability zones with a single Cinder availability zone when running OpenShift on OpenStack

Throughout the series, readers can expect to learn how to use availability zones effectively when running OpenShift on OpenStack, and how to overcome common issues encountered along the way.

OpenStack Availability Zones

Before we dive into the problems, let's explore availability zones further, starting with Nova. Nova availability zones have been available since the Red Hat OpenStack Platform 3 release. They give cloud operators a way to logically segment their compute resources based on arbitrary factors such as location (country, datacenter, rack), network layout and/or power source.

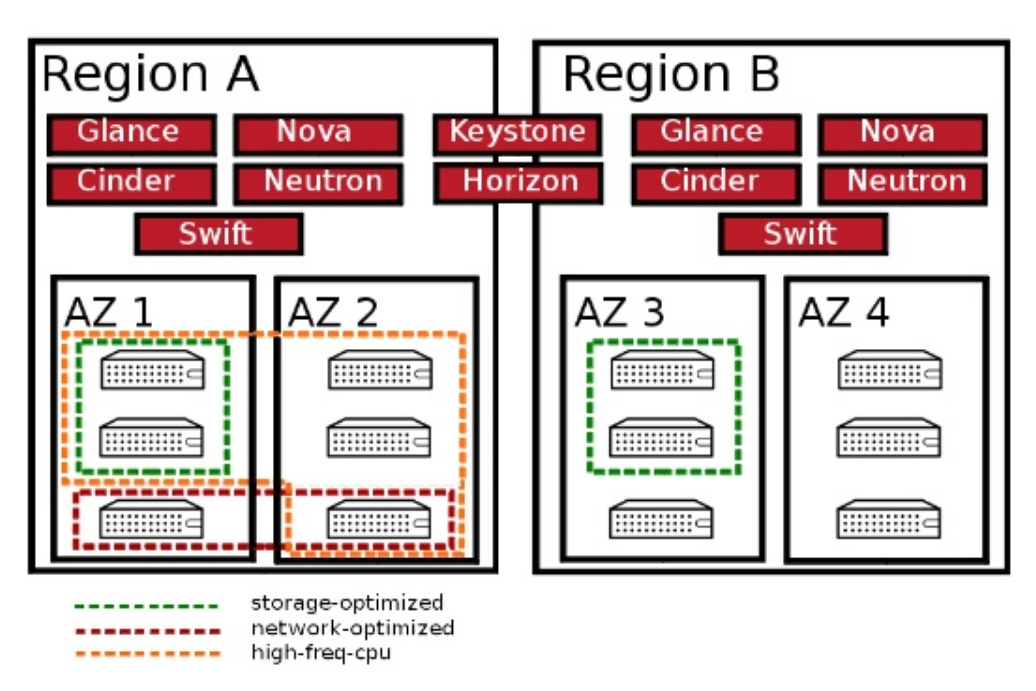

This first example shows four availability zones spread across two regions, with multiple racks in each region. The dotted lines show the underlying host aggregates that the compute nodes reside in. Note that a compute node can belong to multiple host aggregates, but to only one availability zone.

Discussions of Nova availability zones often confuse zones with host aggregates, because an availability zone can only be created on top of a host aggregate, and creating either one requires OpenStack administrator rights. To clear up the confusion, let's quickly discuss the difference between a host aggregate and an availability zone.

Host aggregates vs. availability zones

Host aggregates are a mechanism for partitioning hosts based on arbitrary characteristics such as server type, processor type or drive characteristics. They are not explicitly exposed to users of an OpenStack cloud. Administrators map flavors to host aggregates by setting metadata on the host aggregate and matching it in the flavor extra specifications. It is then up to the scheduler to determine the best match for the user request. Compute nodes can also be in more than one host aggregate.

Optionally, administrators may expose a host aggregate as an availability zone. Availability zones differ from host aggregates in that they are exposed to the user as a Nova boot option, and a host can only be in a single availability zone. Administrators can configure a default availability zone where instances are scheduled when the user does not specify one.

A host aggregate is a prerequisite for creating an availability zone. Below is an example of the creation process.
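The membership rule described above (many host aggregates per host, at most one availability zone per host) can be modeled in a few lines of Python. This is only an illustrative sketch and not how Nova stores or enforces the rule; the aggregate names `ssd-hosts` and `HA2` and the host name `compute-1` are hypothetical.

```python
from collections import defaultdict


def hosts_in_multiple_zones(aggregates):
    """Return hosts that would end up in more than one availability zone.

    aggregates maps an aggregate name to a (zone, hosts) tuple, where zone
    is None for aggregates that are not exposed as an availability zone.
    """
    zones_by_host = defaultdict(set)
    for zone, hosts in aggregates.values():
        if zone is None:
            continue  # plain host aggregate, no zone constraint
        for host in hosts:
            zones_by_host[host].add(zone)
    return {h: sorted(z) for h, z in zones_by_host.items() if len(z) > 1}


# One zoned aggregate plus one plain aggregate: allowed.
valid = {
    "HA1": ("AZ1", ["compute-1"]),
    "ssd-hosts": (None, ["compute-1"]),
}
print(hosts_in_multiple_zones(valid))    # {}

# The same host in two zoned aggregates: violates the rule.
invalid = dict(valid, HA2=("AZ2", ["compute-1"]))
print(hosts_in_multiple_zones(invalid))  # {'compute-1': ['AZ1', 'AZ2']}
```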

The first step is to create the availability zone and host aggregate in one command, where AZ1 is the availability zone name and HA1 is the host aggregate name:

$ openstack aggregate create --zone AZ1 HA1

The next step is to add compute resources, in this example overcloud-compute-1.localdomain, to host aggregate HA1, which places them in availability zone AZ1:

$ openstack aggregate add host HA1 overcloud-compute-1.localdomain

Finally, you can verify that host aggregate HA1 exposes availability zone AZ1 and has compute nodes associated with it by running these commands:

$ openstack aggregate list

$ openstack aggregate show HA1

With our Nova availability zone created, we turn to Cinder zones. Cinder zones are used for much the same reasons as Nova zones: location, storage backend type, power and network layout. Cinder differs from Nova not in concept, but in the configuration required to create availability zones. This configuration may vary depending on the deployment tools used with OpenStack.

When using Red Hat OpenStack Platform, the installation process is performed with OpenStack Director. Director lets an OpenStack administrator specify additional configuration settings to customize an environment as needed. In this case, enabling Cinder availability zones requires setting additional variables in an environment file.

Each backend is represented in cinder.conf with a stanza and a reference to it from the enabled_backends key. The keys valid in the backend stanza are dependent on the actual backend driver and unknown to Cinder.

For example, to provision two additional Cinder backends with named availability zones and specify a default availability zone, one could create a Heat environment file with the following contents:

    parameter_defaults:
      ExtraConfig:
        cinder::config::cinder_config: # Defined variables and values placed into cinder.conf
          AZ2/volume_driver: # AZ2 volume driver variable
            value: cinder.volume.drivers.rbd.RBDDriver # RBDDriver for Ceph cluster backends
          AZ2/rbd_pool: # AZ2 rbd_pool variable
            value: volumes # AZ2 rbd_pool variable value
          AZ2/rbd_user: # AZ2 rbd_user variable
            value: openstack # AZ2 rbd_user value
          AZ2/rbd_ceph_conf: # AZ2 location of ceph.conf variable
            value: /etc/ceph/ceph.conf # AZ2 path of ceph.conf value
          AZ2/volume_backend_name: # AZ2 backend name variable
            value: tripleo_ceph # AZ2 backend name value
          AZ2/backend_availability_zone: # AZ2 backend availability zone variable
            value: AZ2 # AZ2 backend availability zone value
          AZ3/volume_driver: # AZ3 volume driver variable
            value: cinder.volume.drivers.rbd.RBDDriver # AZ3 RBDDriver for Ceph cluster backends
          AZ3/rbd_pool: # AZ3 rbd_pool variable
            value: volumes # AZ3 rbd_pool variable value
          AZ3/rbd_user: # AZ3 rbd_user variable
            value: openstack # AZ3 rbd_user value
          AZ3/rbd_ceph_conf: # AZ3 location of ceph.conf variable
            value: /etc/ceph/ceph.conf # AZ3 path of ceph.conf value
          AZ3/volume_backend_name: # AZ3 backend name variable
            value: tripleo_ceph # AZ3 backend name value
          AZ3/backend_availability_zone: # AZ3 backend availability zone variable
            value: AZ3 # AZ3 backend availability zone value
          tripleo_ceph/backend_availability_zone: # AZ1 Default non backend availability zone name variable
            value: AZ1 # AZ1 availability zone name
        cinder::storage_availability_zone: AZ1 # Cinder non-backend availability zone name
        cinder_user_enabled_backends: ['AZ2','AZ3'] # Cinder backends defined
        cinder::default_availability_zone: AZ1 # Cinder default availability zone
        nova::config::nova_config: # Defined variables and values placed into nova.conf
          cinder/cross_az_attach: # Cinder cross attach zones in nova.conf
            value: false # Value false since we do not want attaches across AZs

This environment file could be saved as my-backends.yaml and passed to the deploy command with the -e option:

$ openstack overcloud deploy [other overcloud deploy options] -e path/to/my-backends.yaml

After a successful overcloud deployment of Red Hat OpenStack Platform, the Cinder availability zones can be seen by listing the Cinder services, which shows the zone of each service:

$ cinder service-list
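To make the relationship between backend stanzas and the enabled_backends key more concrete, here is a minimal Python sketch that reads a cinder.conf-style fragment and reports the availability zone of each enabled backend. The fragment is hypothetical: it only approximates what a deployment like the one above might render, and the function name `backend_zones` is an invention for this illustration. The fallback to storage_availability_zone for backends without their own zone follows the documented Cinder option behavior.

```python
import configparser

# Hypothetical cinder.conf fragment, not output captured from a real deployment.
SAMPLE_CINDER_CONF = """
[DEFAULT]
enabled_backends = tripleo_ceph,AZ2,AZ3
storage_availability_zone = AZ1
default_availability_zone = AZ1

[tripleo_ceph]
volume_backend_name = tripleo_ceph
backend_availability_zone = AZ1

[AZ2]
volume_driver = cinder.volume.drivers.rbd.RBDDriver
volume_backend_name = tripleo_ceph
backend_availability_zone = AZ2

[AZ3]
volume_driver = cinder.volume.drivers.rbd.RBDDriver
volume_backend_name = tripleo_ceph
backend_availability_zone = AZ3
"""


def backend_zones(conf_text):
    """Map each enabled backend stanza to its availability zone."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(conf_text)
    defaults = parser["DEFAULT"]
    # Backends without their own zone use storage_availability_zone.
    fallback_zone = defaults.get("storage_availability_zone", "nova")
    zones = {}
    for name in defaults.get("enabled_backends", "").split(","):
        name = name.strip()
        if not name:
            continue
        if not parser.has_section(name):
            raise ValueError(f"enabled backend {name!r} has no stanza")
        zones[name] = parser.get(
            name, "backend_availability_zone", fallback=fallback_zone
        )
    return zones


print(backend_zones(SAMPLE_CINDER_CONF))
# {'tripleo_ceph': 'AZ1', 'AZ2': 'AZ2', 'AZ3': 'AZ3'}
```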

OpenShift administrators often want to leverage Nova availability zones so that their pods are spread across different hardware resources in the environment to provide high availability. This architecture meets OpenShift requirements until Persistent Volume Claims (PVCs) are needed.

When requesting a PVC, OpenShift expects a Cinder availability zone with the same name as the Nova availability zone. However, many deployments have several Nova availability zones but only one Cinder availability zone, usually because only one storage backend is used.

As a result, there are availability zone considerations and parameters that need to be addressed before deploying OpenShift on OpenStack.

OpenStack Availability Zones in OpenShift

OpenShift uses cloud providers to leverage some of their services, such as automatically creating volumes in the provider's cloud storage when users create a PVC, or exposing services through the provider's load balancers.

In this particular case of OpenShift on OpenStack, a sample cloud provider configuration file (/etc/origin/cloudprovider/openstack.conf) usually looks something like this:

[Global]

auth-url = http://1.2.3.4:5000/v3

username = myuser

password = mypass

domain-name = Default

tenant-name = mytenant

Note that you can configure that file manually or use the openshift_cloudprovider_openstack_* variables in the Ansible installer. See Configuring for OpenStack for more information.

With that configuration file, OpenShift is able to query the OpenStack API and perform tasks such as automatically labeling nodes with the Nova availability zone they run in, as ‘failure-domain.beta.kubernetes.io/zone=<nova_az>’.

Likewise, when PVs are created they receive the same label, but its value is the Cinder AZ of the volume.

The OpenShift scheduler decides where pods run based on a set of predicates and parameters. This post focuses on the NoVolumeZoneConflict predicate.

The NoVolumeZoneConflict predicate checks:

- The attached volume’s ‘failure-domain.beta.kubernetes.io/zone’ label (aka, the cinder AZ of the volume).

- The pod restrictions.

- If there are available nodes with the same ‘failure-domain.beta.kubernetes.io/zone’ label.

To ensure that:

- If there is just a single availability zone (‘nova’ by default), the labels will always match.

- If the ‘failure-domain.beta.kubernetes.io/zone’ labels match (Cinder AZ name = Nova AZ name), the pod is scheduled to a node labeled with the same zone as the volume. The volume is then attached to the node and the pod is started with the appropriate volume attachment.
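The label comparison described above can be sketched in Python as follows. This is a deliberately simplified model, not the scheduler's actual implementation: it assumes every node carries the zone label and ignores any other pod restrictions. The function name `nodes_without_zone_conflict` is hypothetical, and the node names are the application nodes used in this series.

```python
ZONE_LABEL = "failure-domain.beta.kubernetes.io/zone"


def nodes_without_zone_conflict(nodes, volume_labels):
    """Return the names of nodes whose zone label matches the volume's.

    nodes maps a node name to its labels; volume_labels holds the labels
    of the persistent volume the pod wants to mount.
    """
    volume_zone = volume_labels.get(ZONE_LABEL)
    if volume_zone is None:
        return sorted(nodes)  # the volume imposes no zone constraint
    return sorted(
        name for name, labels in nodes.items()
        if labels.get(ZONE_LABEL) == volume_zone
    )


nodes = {
    "cicd-node-0": {ZONE_LABEL: "AZ1"},
    "cicd-node-1": {ZONE_LABEL: "AZ2"},
    "cicd-node-2": {ZONE_LABEL: "AZ3"},
}

# Cinder AZ name matches a Nova AZ name: exactly one node qualifies.
print(nodes_without_zone_conflict(nodes, {ZONE_LABEL: "AZ2"}))   # ['cicd-node-1']

# Volume created in the default 'nova' Cinder AZ: no node qualifies.
print(nodes_without_zone_conflict(nodes, {ZONE_LABEL: "nova"}))  # []
```

The second call shows why a single Cinder AZ combined with several Nova AZs leaves pods with volumes unschedulable unless the mismatch is handled explicitly.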

In production environments, it is common to split masters and nodes across different availability zones to avoid downtime. In that case, there are two possible scenarios:

- Scenario 1 (Recommended): n Nova AZs and c Cinder AZs with matching names (for simplicity, three of each):

  - Nova AZs = AZ1, AZ2, AZ3

  - Cinder AZs = AZ1, AZ2, AZ3

- Scenario 2: n Nova AZs (AZ1, AZ2, ...) and a single Cinder AZ (‘nova’ by default)

There is a third scenario where you have several Nova AZs and several Cinder AZs with different names. In that case, the same solutions as for Scenario 2 apply.

The scenarios in this series use the following OpenShift architecture:

- 3 master nodes

  - cicd-master-0 running in AZ1

  - cicd-master-1 running in AZ2

  - cicd-master-2 running in AZ3

- 3 infrastructure nodes

  - cicd-infra-0 running in AZ1

  - cicd-infra-1 running in AZ2

  - cicd-infra-2 running in AZ3

- 3 application nodes

  - cicd-node-0 running in AZ1

  - cicd-node-1 running in AZ2

  - cicd-node-2 running in AZ3
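As a rough aid for telling the scenarios apart, the following Python sketch compares the Nova and Cinder AZ names of a cloud. The function names are hypothetical, and the zone lists are typed in by hand here; in practice they would come from the OpenStack API or CLI. The names `zoneA` and `zoneB` are made up for the third case.

```python
def zones_missing_in_cinder(nova_azs, cinder_azs):
    """Return Nova AZ names that have no Cinder AZ of the same name."""
    return sorted(set(nova_azs) - set(cinder_azs))


def scenario(nova_azs, cinder_azs):
    """Return 1 when every Nova AZ has a same-named Cinder AZ, else 2."""
    return 1 if not zones_missing_in_cinder(nova_azs, cinder_azs) else 2


nova_azs = ["AZ1", "AZ2", "AZ3"]

print(scenario(nova_azs, ["AZ1", "AZ2", "AZ3"]))  # 1
print(scenario(nova_azs, ["nova"]))               # 2
# Differently named Cinder AZs are handled like Scenario 2.
print(scenario(nova_azs, ["zoneA", "zoneB"]))     # 2
print(zones_missing_in_cinder(nova_azs, ["nova"]))  # ['AZ1', 'AZ2', 'AZ3']
```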

Part II of this series will focus on handling multiple availability zones with two key OpenStack services: Nova and Cinder.

Note about Cinder API Versions

The Cinder API is versioned. Although OpenShift tries to detect the version automatically, the openstack.conf file accepts an optional ‘bs-version’ parameter that overrides automatic detection. Valid values are v1, v2, v3 and auto (default). When auto is specified, automatic detection selects the highest supported version exposed by the underlying OpenStack cloud.

If you have problems with Cinder version detection in OpenShift (the OpenShift controller logs will show “BS API version autodetection failed”), set the bs-version parameter (for instance, to v2):

- Before installation time, in the Ansible inventory as openshift_cloudprovider_openstack_blockstorage_version=v2, or

- In the /etc/origin/cloudprovider/openstack.conf file as

[BlockStorage]

bs-version=v2
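A quick way to sanity-check the setting before restarting services is sketched below. It parses the file contents with Python's configparser purely for illustration, which is an assumption: the cloud provider itself reads the file with its own parser, and the function name `block_storage_version` is hypothetical. The accepted values are the ones listed above.

```python
import configparser

VALID_BS_VERSIONS = {"v1", "v2", "v3", "auto"}


def block_storage_version(conf_text):
    """Return the configured bs-version, or 'auto' when it is not set."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(conf_text)
    version = parser.get("BlockStorage", "bs-version", fallback="auto")
    if version not in VALID_BS_VERSIONS:
        raise ValueError(f"unsupported bs-version: {version!r}")
    return version


sample = """
[Global]
username = myuser
tenant-name = mytenant

[BlockStorage]
bs-version=v2
"""

print(block_storage_version(sample))                            # v2
print(block_storage_version("[Global]\nusername = myuser\n"))  # auto
```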

Part I: conclusions

In this introduction we have seen what Availability Zones are in the OpenStack context and the differences between Nova and Cinder AZs. Regarding OpenShift, we have presented the scenarios we are going to detail in future posts and some considerations regarding Cinder API versions.