Red Hat Blog

This is a story about a unique challenge that one of Red Hat’s Technical Account Manager (TAM) customers had while expanding their Red Hat Identity Management (IdM) environment. In this post, we will go through the specifics of the problem and how we tackled it.

Whenever they enrolled a specific, large portion of their environment (one that accounts for more than 15,000 clients), the Red Hat Enterprise Linux (RHEL) 6 IdM servers would crash or slow down significantly.

What is going on under the hood?

After some investigation with the customer, and after examining how the customer’s application works, it was obvious that the application in question has some unique behavior. We needed to look at what was going on at a deeper level. The first round of preliminary tests with strace showed a lot of identity-related calls and repetitive usage of libnss.

Yet there was no clear visibility into the specifics and concurrency of those calls across the whole environment, so we needed to quantify them.

To capture these metrics, we developed and distributed a SystemTap script to run on production servers. SystemTap has less impact than strace, especially in production environments, and the output can be easily visualized.

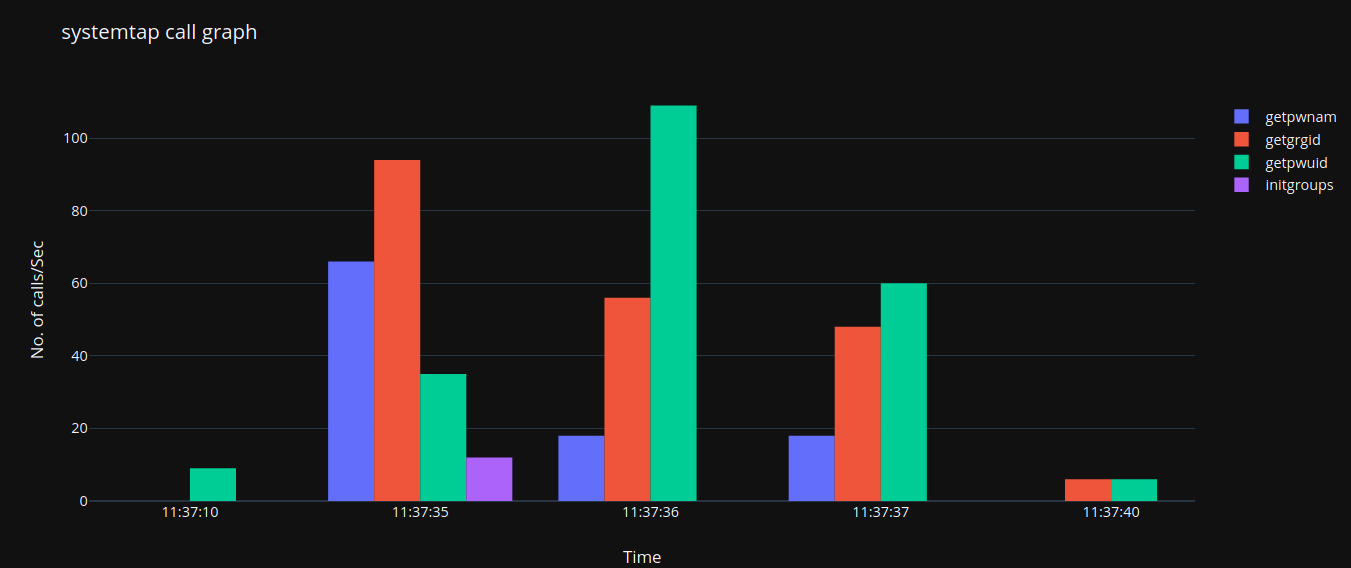

Deploying this script on a sample of machines representing the different roles in the environment (management, master, workers, etc.) revealed the following (simplified view):

A total of six machines would peak with up to 110 getpwuid calls and 94 getgrgid calls, all against the same userid. Multiplying these numbers by the hundreds or thousands of machines in production workloads caused trouble for the IdM servers.
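To get a feel for the multiplication, here is a rough back-of-the-envelope sketch. It assumes the peaks are totals across the six sampled machines and that every client behaves like the sample; the measurements do not confirm either assumption, so treat the result as an order of magnitude only. The function name and the fleet size of 15,000 are illustrative.

```python
def scale_peak_calls(sample_peak, sample_size, fleet_size):
    """Linearly extrapolate a peak call count from a sample to a whole fleet."""
    return sample_peak * fleet_size // sample_size


SAMPLE_SIZE = 6        # machines in the sample described above
FLEET_SIZE = 15_000    # assumption: roughly the size of the affected environment

if __name__ == "__main__":
    for name, peak in (("getpwuid", 110), ("getgrgid", 94)):
        print(name, scale_peak_calls(peak, SAMPLE_SIZE, FLEET_SIZE))
```

Under those assumptions the peaks land in the hundreds of thousands of lookups, all for the same userid, which is a very different load profile from lookups spread over time.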

There were other aspects that added to the complexity:

- The environment contains thousands of diskless machines. Whenever the systems were rebooted at the same time for updates, they re-enrolled to IdM at the same time.

- A huge and complex structure of users and user groups, with a lot of nested groups.

Armed with this data, it was clear what needed to happen next.

Off to the lab

Red Hat has a scalability lab running beefy machines to test product limits. In collaboration with Red Hat’s engineering team, the TAM set up an environment to simulate the customer’s criteria using containers. The points to focus on were:

- Firing large numbers of simultaneous identity calls against the same userid. By default, identity calls are expected to be spread over time, and some are expected to be served from cached entries.

- Testing the enrollment of hundreds and thousands of clients at the same time.

- Testing against a large and complex structure of users and user groups.

Utilizing create-test-data.py, a fairly large environment was created consisting of:

- 5,000 users

- 27,000 hosts

- 104 user groups

- ~5 nested groups per user

- ~20 total groups per user

Now it was time to test those unique customer use cases.

Mass client enrollment, no entropy for you!

The entropy on IdM servers dropped significantly while mass registering clients. This is expected to a certain degree, as IdM client enrollment has some cryptographic needs, like issuing certificates.

So, the entropy pool will deplete eventually. This will introduce delays and/or failures while registering. In the tests, an entropy level of 200-300 was the low threshold to try to stay above while mass enrolling. Take special care if your IdM servers are virtual machines, as they might have lower entropy than physical servers. More about this topic in this article.

Some readers may now be anxious to get a solid number: “How many clients can be registered simultaneously?”

The answer is, as usual, it depends. Many factors will contribute to that number, such as:

- Total number of IdM servers (load distribution)

- How fast an IdM server/client finishes the enrollment (concurrency)

- Usage of virtual machines vs. physical servers (threshold)

Hence, monitoring entropy levels, among other metrics, might be a better approach here.
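As a minimal sketch of what such monitoring could look like, the snippet below reads the kernel's entropy estimate from the standard Linux procfs file and flags it when it falls to the low end of the range seen in the tests. The threshold constant and function names are assumptions for illustration, not part of any IdM tooling.

```python
from pathlib import Path

# Standard Linux procfs location of the kernel's entropy estimate.
ENTROPY_PATH = Path("/proc/sys/kernel/random/entropy_avail")
# Assumption: use the lower end of the 200-300 range observed in the tests.
LOW_WATERMARK = 200


def read_entropy(path=ENTROPY_PATH):
    """Return the entropy estimate stored in the given file as an integer."""
    return int(Path(path).read_text().strip())


def entropy_is_low(value, threshold=LOW_WATERMARK):
    """True when the value has dropped to or below the threshold."""
    return value <= threshold


if __name__ == "__main__":
    current = read_entropy()
    status = "LOW" if entropy_is_low(current) else "ok"
    print(f"entropy_avail={current} ({status})")
```

Run periodically on each IdM server during a mass enrollment, a check like this could be used to pause or throttle new enrollments before delays and failures start.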

An unexpected visitor

Enrolling multiple clients at the same time revealed a unique case that we had not witnessed previously.

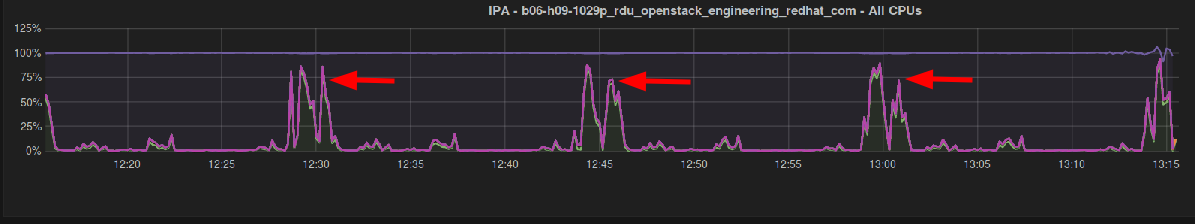

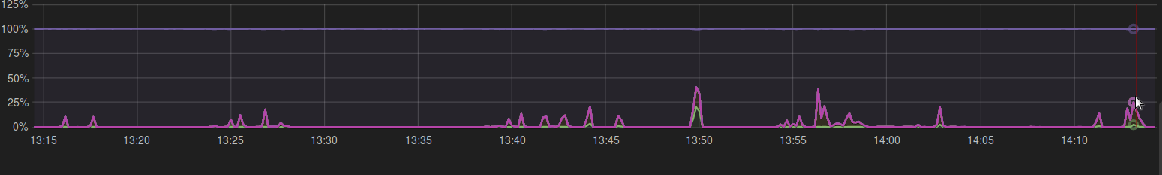

By default, clients refresh their connections to IdM servers every 15 minutes. In this test environment, and potentially in the customer’s, clients are enrolled at virtually the same time, so they all try to refresh their connections together. The result was CPU load spikes on an otherwise idle environment every 15 minutes. In other production environments, client enrollment is usually spread over a long period of time, so the issue was not visible there.

An enhancement request was raised for this issue, and Red Hat engineering developed a good countermeasure by adding randomness to the connection timeout.
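The idea behind that countermeasure can be illustrated with a small simulation. This is a generic jitter sketch, not the actual SSSD implementation: the five-minute offset window and all names are hypothetical, and only the 15-minute base interval comes from the behavior described above.

```python
import random

BASE_INTERVAL = 15 * 60  # seconds; the 15-minute default refresh
MAX_OFFSET = 5 * 60      # hypothetical jitter window, for illustration only


def next_refresh_delay(base=BASE_INTERVAL, max_offset=MAX_OFFSET, rng=random):
    """Base interval plus a uniformly random offset in [0, max_offset] seconds."""
    return base + rng.uniform(0, max_offset)


def busiest_second(delays):
    """Largest number of clients whose refresh falls into the same one-second slot."""
    slots = {}
    for delay in delays:
        slot = int(delay)
        slots[slot] = slots.get(slot, 0) + 1
    return max(slots.values())


if __name__ == "__main__":
    rng = random.Random(42)
    clients = 5000
    fixed = [next_refresh_delay(max_offset=0) for _ in range(clients)]
    jittered = [next_refresh_delay(rng=rng) for _ in range(clients)]
    print("fixed interval, busiest second:", busiest_second(fixed))
    print("random offset, busiest second:", busiest_second(jittered))
```

With a fixed interval, every client that enrolled at the same moment lands in the same second; with a random offset, the same refreshes are spread across the whole window.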

Time for DDoS

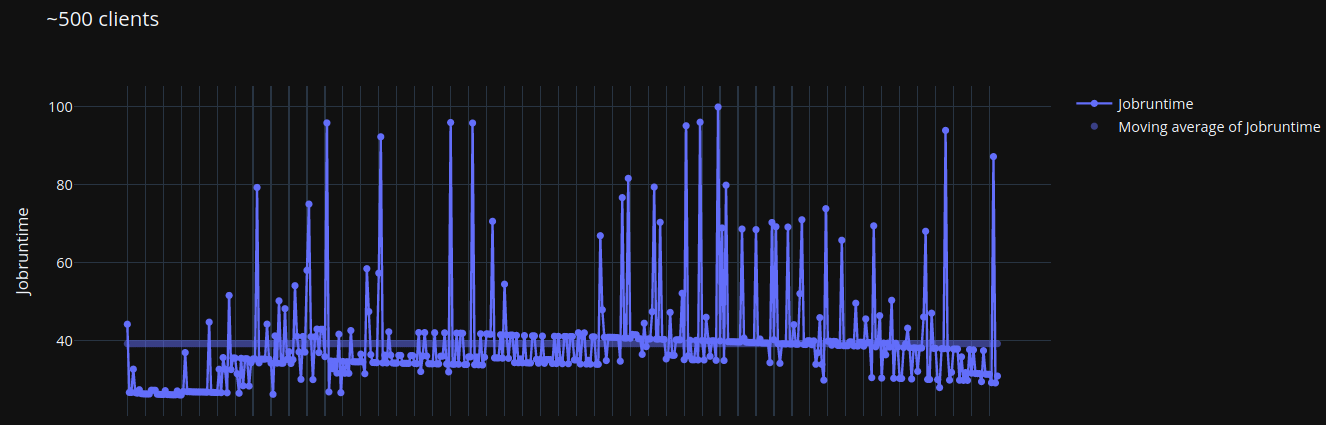

After applying several tunings on IdM servers and clients to handle a large environment, it was time to simulate these identity calls captured using SystemTap. Several trials were made to simulate 100, 500, 1000, up to 5000 simultaneous clients.
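For readers who want to reproduce the access pattern on a single client, here is a minimal sketch that fires concurrent getpwuid and getgrgid lookups for the same IDs through Python's standard `pwd` and `grp` modules. It is not the harness used in the lab (that ran many containerized clients); the function names and default counts are assumptions, and on a client with a warm cache it mostly measures the client rather than the IdM server.

```python
import grp
import os
import pwd
import time
from concurrent.futures import ThreadPoolExecutor


def lookup_once(uid, gid):
    """Resolve one uid and one gid via NSS and return the elapsed seconds."""
    start = time.perf_counter()
    for resolver, ident in ((pwd.getpwuid, uid), (grp.getgrgid, gid)):
        try:
            resolver(ident)
        except KeyError:
            pass  # an unknown ID still exercises the lookup path
    return time.perf_counter() - start


def fire_lookups(uid, gid, total=100, workers=20):
    """Run `total` lookups for the same uid/gid across `workers` threads."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda _: lookup_once(uid, gid), range(total)))


if __name__ == "__main__":
    timings = fire_lookups(os.getuid(), os.getgid())
    print(f"lookups: {len(timings)}")
    print(f"slowest: {max(timings):.4f}s")
    print(f"average: {sum(timings) / len(timings):.4f}s")
```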

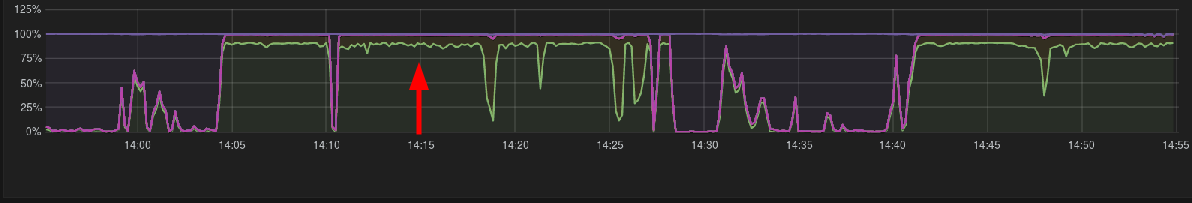

The first issue we noticed was that the CPU load spiked and did not go back to normal.

This turned out to be because the IdM servers have multiple NUMA nodes, which libdb (the backend database for LDAP) has an issue with, causing threads to get stuck. This issue is detailed here along with the workaround applied.

Getting this out of the way did not yield good results on its own. While the servers were no longer constantly overloaded, responses to clients still suffered delays.

The graph with blue spikes shows an average response time of 39 seconds, with some spikes up to 100 seconds.

Digging into debug logs along with Red Hat engineering revealed something: the majority of the time consumed in these tests was spent in dereference calls. Dereferencing simplifies many client operations; in this situation, it is mainly used to answer two questions:

- What are the groups for this user?

- What are the members for this group?

These calls are directly related to the customer’s use case, and they were found to be the root cause.
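To see why the first question gets expensive with nested groups, consider the sketch below. It is not the LDAP dereference control and says nothing about how the directory server or SSSD implement it; it only walks hypothetical in-memory data to show that every level of nesting adds more entries to visit for a single user.

```python
from collections import deque

# Hypothetical data: a user's direct groups, and each group's parent groups.
DIRECT_GROUPS = {"alice": ["dev-team"]}
PARENT_GROUPS = {
    "dev-team": ["engineering"],
    "engineering": ["all-staff"],
    "all-staff": [],
}


def groups_for_user(user, direct_groups, parent_groups):
    """Return every group the user belongs to, directly or through nesting."""
    seen = set()
    queue = deque(direct_groups.get(user, []))
    while queue:
        group = queue.popleft()
        if group in seen:
            continue  # already visited; also protects against membership cycles
        seen.add(group)
        queue.extend(parent_groups.get(group, []))
    return seen


if __name__ == "__main__":
    print(sorted(groups_for_user("alice", DIRECT_GROUPS, PARENT_GROUPS)))
```

With roughly 20 groups per user, several of them nested, and thousands of clients asking at once for the same user, the number of entries touched per request adds up quickly.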

After testing different options, there was a clear winner for addressing this situation: setting ldap_deref_threshold=0 on clients to prevent them from sending deref calls.
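On an SSSD client, this option belongs in the domain section of sssd.conf. The sketch below shows one way to add it programmatically with Python's standard `configparser`; the domain name `example.com` and the function name are hypothetical, and the snippet operates on text rather than touching a real configuration file.

```python
import configparser
import io

DOMAIN_SECTION = "domain/example.com"  # hypothetical domain name


def with_deref_disabled(conf_text, section=DOMAIN_SECTION):
    """Return sssd.conf-style text with ldap_deref_threshold set to 0."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep option names exactly as written
    parser.read_string(conf_text)
    if not parser.has_section(section):
        parser.add_section(section)
    parser.set(section, "ldap_deref_threshold", "0")
    out = io.StringIO()
    parser.write(out)
    return out.getvalue()


if __name__ == "__main__":
    sample = "[domain/example.com]\nid_provider = ipa\n"
    print(with_deref_disabled(sample))
```

Note that `configparser` drops comments when it rewrites a file, so for a handful of clients it is simpler to edit the file by hand. Either way, SSSD has to be restarted for the change to take effect.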

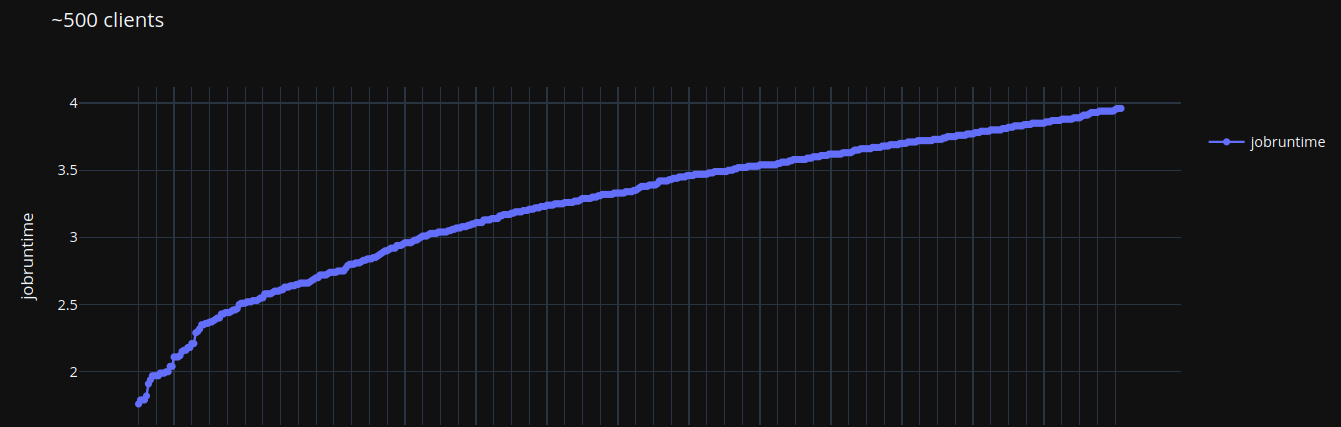

Testing with all tunables in place and the deref control disabled did in fact yield good results.

This graph tops out at 3.9 seconds, which still fits the customer’s expectations. The load on the IdM servers was also at acceptable levels, around the 25% CPU mark.

Wrapping up all those results, it was clear that moving to RHEL 7 would help, thanks to its auto-tuning and improvements to internal plugins. We also now know which tunables to apply.

Final thoughts

There is still a lot of work to be done to enhance performance in such situations, and it will be coming in future versions of IdM. Yet working closely with Red Hat Technical Account Managers can help navigate those complicated situations to a satisfactory outcome.

About the author

Ahmed Nazmy is a Principal Technical Account Manager (TAM) in the EMEA region. He has expertise in various industry domains like Security, Automation and Scalability. Ahmed has been a Linux geek since the late 90s, having spent time in the webhosting industry prior to joining Red Hat.