Thank you to Dr. Clement Escoffier, Vert.x Core Engineer, Red Hat, for review and diagrams.

In the era of Kubernetes and microservices, traditional implementations of clustered services need to be reevaluated, and Vert.x Clustering is no exception. Kubernetes and Vert.x microservices provide functionality that overlaps with Vert.x Clustering but does not replace it entirely. This article compares the approaches and offers pragmatic pros and cons for each.

Vert.x Clustering

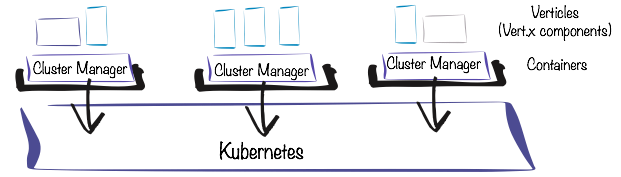

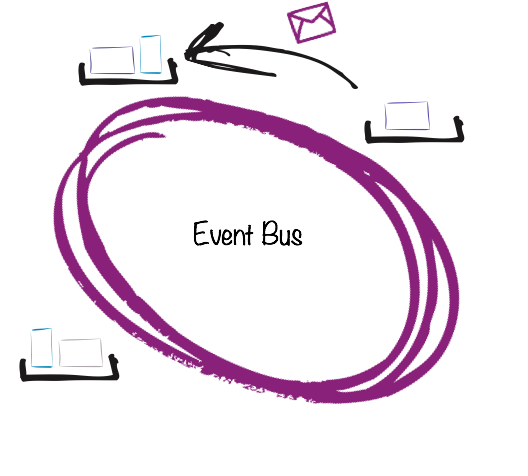

With Vert.x Clustering, Vert.x runs as a set of clustered instances that provide high availability, distributed data, and a distributed Vert.x Event Bus. These capabilities are needed to deploy Verticles across multiple Vert.x instances and have them work in unison.

Cluster managers are provided for Infinispan (Red Hat Data Grid), Hazelcast, ZooKeeper, and Ignite. Of note, Infinispan, Hazelcast, and Ignite can run as embedded cluster managers instead of relying on a separate deployment, such as a ZooKeeper cluster. On the other hand, you may be able to share an existing ZooKeeper cluster with other systems that depend on it, such as Kafka, if one is available and you are comfortable with multiple systems relying on that single ZooKeeper cluster.

If you want to run a cluster manager such as Infinispan in Kubernetes, be aware that multicast is generally not available, so you will need the JGroups KUBE_PING protocol for cluster member discovery.

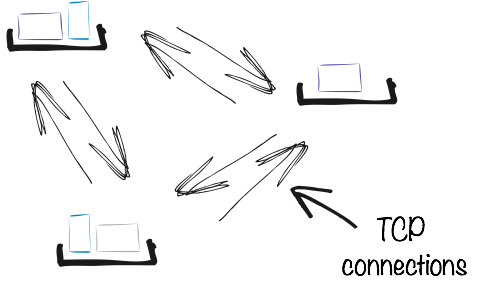

When a clustered Vert.x instance starts up, it connects to a cluster manager, which tracks cluster membership and the cluster-wide subscriber lists for each Event Bus address. The cluster manager also holds the state for distributed maps, counters, and locks. Cluster managers do not manage the TCP connections of the distributed Event Bus, but they provide the membership information required to establish those connections between nodes.
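To make this division of labor concrete, here is a plain-Java toy model of the bookkeeping a cluster manager performs: it records which nodes are members and which nodes have handlers registered on which addresses, but it never carries messages itself. The class and method names (`ToyClusterManager`, `join`, `subscribe`, `nodesFor`) are hypothetical and everything is in memory; the real Vert.x cluster manager SPI, `io.vertx.core.spi.cluster.ClusterManager`, has a different, asynchronous API and is backed by Infinispan, Hazelcast, ZooKeeper, or Ignite.

```java
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

/**
 * Hypothetical, single-JVM model of cluster-manager bookkeeping.
 * It records membership and address subscriptions only; in Vert.x the
 * Event Bus would open direct TCP connections to the nodes returned here.
 */
class ToyClusterManager {
    private final Set<String> members = new HashSet<>();
    // Event Bus address -> IDs of nodes that have a handler registered for it
    private final Map<String, Set<String>> subscriptions = new HashMap<>();

    void join(String nodeId) {
        members.add(nodeId);
    }

    /** Removes the node and every subscription it held, as after a node crash. */
    void leave(String nodeId) {
        members.remove(nodeId);
        subscriptions.values().forEach(nodes -> nodes.remove(nodeId));
        subscriptions.values().removeIf(Set::isEmpty);
    }

    void subscribe(String nodeId, String address) {
        if (!members.contains(nodeId)) {
            throw new IllegalStateException(nodeId + " is not a cluster member");
        }
        subscriptions.computeIfAbsent(address, a -> new HashSet<>()).add(nodeId);
    }

    /** Nodes a sender would connect to directly when sending to this address. */
    Set<String> nodesFor(String address) {
        return Collections.unmodifiableSet(
                subscriptions.getOrDefault(address, Collections.emptySet()));
    }

    Set<String> members() {
        return Collections.unmodifiableSet(members);
    }
}
```

In this model, a node leaving automatically drops its subscriptions. That is why membership tracking matters: without it, senders would keep trying to reach handlers on nodes that no longer exist.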

With the distributed Event Bus, you can send messages through addresses to multiple Vert.x instances, each running its own Verticles that listen on those addresses (publish/subscribe) or simply respond to requests (request/reply). Because the distributed Event Bus connects nodes directly, peer to peer, no message broker is involved.
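The delivery modes mentioned here can be modeled in a few lines. In Vert.x, `EventBus.publish` delivers a message to every handler registered on an address, while `EventBus.send` delivers it to only one of them. The sketch below is a hypothetical in-memory `ToyEventBus`, not the Vert.x class: it approximates the single-recipient choice with simple round robin, and it models request/reply as a handler whose return value is the reply.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.function.Function;

/** Hypothetical in-memory model of Event Bus delivery semantics (not io.vertx.core.eventbus.EventBus). */
class ToyEventBus {
    // address -> registered handlers; a handler's return value is used as its reply
    private final Map<String, List<Function<String, String>>> handlers = new HashMap<>();
    // address -> counter used to pick the next handler for point-to-point delivery
    private final Map<String, Integer> nextIndex = new HashMap<>();

    void consumer(String address, Function<String, String> handler) {
        handlers.computeIfAbsent(address, a -> new ArrayList<>()).add(handler);
    }

    /** Point-to-point: one handler, chosen round robin. Returns false if nobody is listening. */
    boolean send(String address, String message) {
        Function<String, String> h = pickOne(address);
        if (h == null) {
            return false; // no handler: in this model the message is dropped, nothing retries it
        }
        h.apply(message);
        return true;
    }

    /** Publish/subscribe: every handler receives the message; replies are ignored. */
    int publish(String address, String message) {
        List<Function<String, String>> list = handlers.getOrDefault(address, List.of());
        list.forEach(h -> h.apply(message));
        return list.size();
    }

    /** Request/reply: like send, but the chosen handler's return value is the reply. */
    Optional<String> request(String address, String message) {
        Function<String, String> h = pickOne(address);
        return h == null ? Optional.empty() : Optional.ofNullable(h.apply(message));
    }

    private Function<String, String> pickOne(String address) {
        List<Function<String, String>> list = handlers.getOrDefault(address, List.of());
        if (list.isEmpty()) {
            return null;
        }
        int n = nextIndex.merge(address, 1, Integer::sum) - 1;
        return list.get(Math.floorMod(n, list.size()));
    }
}
```

Because the model has no acknowledgements or retries, a message sent to an address with no handlers is simply lost, which corresponds to the at-most-once behavior the comparison table attributes to the Event Bus.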

As a whole, Vert.x Clustering provides everything you need to build a complex distributed system, with some constraints that will be highlighted later in the article.

Vert.x Message-Driven Microservices

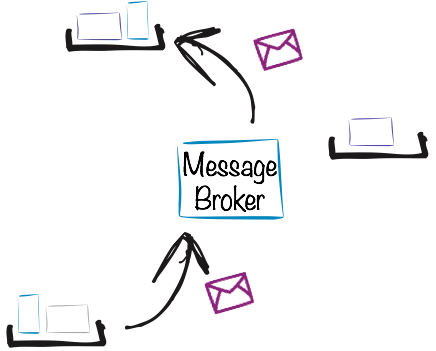

One way to build Vert.x microservices is to use a message broker, such as Kafka or ActiveMQ Artemis (AMQP 1.0), to send messages between microservices. This lets you run each Vert.x microservice completely independently of the others, and dynamically add and remove microservices as consumers of a message topic.

By using a highly scalable message broker such as Kafka or Artemis, you can build large deployments of Vert.x microservices with load balancing via consumer (or message) groups. Within a group, each message is read by only one consumer, so adding consumers to a group spreads the load across them.
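The consumer-group rule can be illustrated with a small model: every group sees every message, but within a group each message goes to exactly one member. This sketch assigns a message to a member by hashing its key, loosely mirroring how Kafka routes a key to one partition and each partition to one consumer in the group. Kafka's real partitioner and rebalancing protocol are more involved, and the names `ConsumerGroups`, `addMember`, and `dispatch` are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical model of consumer-group delivery; not a Kafka or Artemis API. */
class ConsumerGroups {
    // group name -> member (consumer) IDs, in join order
    private final Map<String, List<String>> groups = new LinkedHashMap<>();

    void addMember(String group, String consumerId) {
        groups.computeIfAbsent(group, g -> new ArrayList<>()).add(consumerId);
    }

    /**
     * Returns group -> the single consumer in that group that receives the message.
     * Hashing the key sends every message with the same key to the same consumer
     * (as long as group membership does not change), which is what keeps per-key ordering.
     */
    Map<String, String> dispatch(String key) {
        Map<String, String> recipients = new LinkedHashMap<>();
        groups.forEach((group, members) -> {
            if (!members.isEmpty()) {
                int slot = Math.floorMod(key.hashCode(), members.size());
                recipients.put(group, members.get(slot));
            }
        });
        return recipients;
    }
}
```

Adding a second group (for example, an audit service) gives that group its own copy of every message without taking any load away from the first group.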

A major benefit of using a message broker is delivery guarantees. Neither the Vert.x Event Bus nor HTTP guarantees that a given message will actually be delivered. If you are handling sensitive data, such as financial transactions, losing data is not an option, and the message-driven microservice approach is preferred. Message brokers can also deliver messages reliably across data centers, a use case Vert.x Clustering does not handle.
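The difference between at-most-once and at-least-once delivery comes down to acknowledgements. The sketch below is a hypothetical in-memory `AckQueue`, not a broker client: a message stays in the queue until the consumer acknowledges it, so a consumer that fails mid-processing receives the message again. Real brokers apply the same idea durably and across processes, for example Kafka through committed offsets and Artemis through message acknowledgements.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.function.Predicate;

/** Hypothetical in-memory model of acknowledgement-based (at-least-once) delivery. */
class AckQueue {
    private final Deque<String> pending = new ArrayDeque<>();

    void publish(String message) {
        pending.addLast(message);
    }

    /**
     * Delivers the head message to the consumer. The message is removed only if the
     * consumer returns true (an acknowledgement); on false or an exception it stays
     * at the head and is redelivered on the next call.
     * Returns true if a message was acknowledged.
     */
    boolean deliverOnce(Predicate<String> consumer) {
        String head = pending.peekFirst();
        if (head == null) {
            return false; // nothing to deliver
        }
        boolean acked;
        try {
            acked = consumer.test(head);
        } catch (RuntimeException e) {
            acked = false; // consumer crashed before acknowledging
        }
        if (acked) {
            pending.removeFirst();
        }
        return acked;
    }

    int size() {
        return pending.size();
    }
}
```

Redelivery is also why at-least-once delivery can produce duplicates: if a consumer finishes processing but crashes before acknowledging, the same message is processed twice.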

A downside of using a message broker is the relatively high latency between producer and consumer. That said, with proper networking, the broker can be tuned to reduce latency when necessary, and reasonable latencies (under 10 ms) can be expected.

If you want to learn more about message-driven Vert.x, I suggest you look at Vert.x Proton for AMQP and the Vert.x Kafka Client, both of which have great example projects to get started with.

Vert.x Microservices (on Kubernetes)

When people think of microservices, they usually think of HTTP/1 as the way microservices communicate. However, HTTP/2, WebSockets, and gRPC can serve the same purpose, and they also make it possible to go beyond request/reply to bidirectional communication.

This type of microservice is also the simplest to implement, as there are no dependencies on a cluster manager or message broker. You may still need service discovery, which adds a bit of complexity. However, if you run your Vert.x microservices on Kubernetes (or OpenShift), you can use internal (and external) DNS to look up services without a separate service discovery mechanism.
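On Kubernetes, a Service is reachable through cluster DNS at `<service>.<namespace>.svc.<cluster-domain>`, where the cluster domain is usually `cluster.local` (a bare `<service>` name also resolves from within the same namespace). The sketch below builds such a URL and prepares an HTTP/2 request with the JDK's `java.net.http` client. The service name `inventory`, the namespace `shop`, the port, and the path are hypothetical, and in a Vert.x application you would more likely use the Vert.x `WebClient`. Nothing is sent, so the code runs outside a cluster.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.time.Duration;

/** Builds in-cluster URLs from Kubernetes Service DNS names, with no discovery library. */
class ServiceDns {
    // Kubernetes default; some clusters are configured with a different domain
    static final String CLUSTER_DOMAIN = "cluster.local";

    static URI serviceUri(String service, String namespace, int port, String path) {
        String host = service + "." + namespace + ".svc." + CLUSTER_DOMAIN;
        return URI.create("http://" + host + ":" + port + path);
    }

    /** Prefers HTTP/2; the JDK client falls back to HTTP/1.1 if the server does not support it. */
    static HttpClient http2Client() {
        return HttpClient.newBuilder()
                .version(HttpClient.Version.HTTP_2)
                .connectTimeout(Duration.ofSeconds(2))
                .build();
    }

    static HttpRequest getRequest(URI uri) {
        return HttpRequest.newBuilder(uri)
                .timeout(Duration.ofSeconds(5)) // per-request timeout
                .GET()
                .build();
    }
}
```

Inside the cluster, `http2Client().send(getRequest(uri), HttpResponse.BodyHandlers.ofString())` would perform the call; DNS resolution of the service name is left entirely to Kubernetes.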

Also, by using HTTP or gRPC, you can take advantage of the Istio service mesh for advanced load balancing, circuit breakers, distributed tracing, and other critical elements needed for microservices. Vert.x Clustering and message brokers offer only limited support for comparable features because they do not integrate with a service mesh.
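A circuit breaker stops calling a failing dependency for a while, so that callers fail fast and the dependency gets a chance to recover. Istio applies this in the sidecar proxy, and Vert.x also offers a `vertx-circuit-breaker` module; the sketch below is neither of those. It is a minimal, hypothetical, single-threaded state machine: closed, then open after a set number of consecutive failures, then half-open after a cool-down period, where a single trial call decides whether it closes again. The current time is passed in so the behavior is deterministic.

```java
import java.util.function.Supplier;

/** Hypothetical minimal circuit breaker; not the Vert.x or Istio implementation, and not thread-safe. */
class SimpleCircuitBreaker {
    enum State { CLOSED, OPEN, HALF_OPEN }

    private final int failureThreshold;
    private final long resetTimeoutMillis;
    private State state = State.CLOSED;
    private int consecutiveFailures = 0;
    private long openedAt = 0;

    SimpleCircuitBreaker(int failureThreshold, long resetTimeoutMillis) {
        this.failureThreshold = failureThreshold;
        this.resetTimeoutMillis = resetTimeoutMillis;
    }

    /** Runs the call unless the circuit is open; returns the fallback when open or when the call fails. */
    <T> T call(Supplier<T> operation, T fallback, long nowMillis) {
        if (state == State.OPEN) {
            if (nowMillis - openedAt < resetTimeoutMillis) {
                return fallback; // fail fast without touching the dependency
            }
            state = State.HALF_OPEN; // cool-down over: this call is the trial
        }
        try {
            T result = operation.get();
            state = State.CLOSED;
            consecutiveFailures = 0;
            return result;
        } catch (RuntimeException e) {
            consecutiveFailures++;
            if (state == State.HALF_OPEN || consecutiveFailures >= failureThreshold) {
                state = State.OPEN;
                openedAt = nowMillis;
            }
            return fallback;
        }
    }

    State state() {
        return state;
    }
}
```

With a threshold of 2 and a 1-second reset timeout, two failed calls open the circuit, calls during the next second return the fallback without reaching the dependency, and the first call after that second either closes the circuit or reopens it.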

As with Vert.x Clustering, there are no message delivery guarantees, but you can implement automatic retries and circuit breakers to improve reliability. Even with those improvements, message loss can and will occur, because there are still no guarantees. Furthermore, your consumers must be idempotent, because retries mean one message could be delivered multiple times!
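The need for idempotent consumers is easy to reproduce: if a request succeeds but its response is lost, the caller times out and retries, and the consumer sees the same message twice. The hypothetical sketch below retries a call up to a fixed number of attempts and pairs it with a consumer that remembers processed message IDs, so a duplicate is accepted without being applied again. The names `Retry`, `withRetries`, and `IdempotentAccount` are illustrative; in production the set of seen IDs would need to be bounded and stored durably, and the in-memory `HashSet` is a simplification.

```java
import java.util.HashSet;
import java.util.Set;
import java.util.function.Supplier;

/** Retries an operation that may fail; rethrows the last failure when attempts run out. */
final class Retry {
    private Retry() {}

    static <T> T withRetries(int maxAttempts, Supplier<T> operation) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        RuntimeException last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return operation.get();
            } catch (RuntimeException e) {
                last = e; // e.g. a timeout: the receiver may or may not have processed the message
            }
        }
        throw last;
    }
}

/** Applies each message ID at most once, so redelivered duplicates are harmless. */
class IdempotentAccount {
    private final Set<String> processedIds = new HashSet<>();
    private long balanceCents = 0;

    /** Returns true if the credit was applied, false if it was a duplicate. */
    boolean credit(String messageId, long amountCents) {
        if (!processedIds.add(messageId)) {
            return false; // already applied: accept the message but change nothing
        }
        balanceCents += amountCents;
        return true;
    }

    long balanceCents() {
        return balanceCents;
    }
}
```

If the first attempt credits the account and then loses its response, the retry delivers the same message ID again; without the duplicate check, the account would be credited twice.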

Direct Comparison

There are many features and attributes associated with Vert.x Clustering and microservices, but they can be aligned for comparison. A side-by-side comparison makes the pros and cons clearer and can help you determine the appropriate approach for building a distributed system with Vert.x for your needs.

| Feature/Attribute | Vert.x Clustering | Vert.x Message-Driven Microservice | Vert.x Microservice on k8s/Istio |
|---|---|---|---|
| Transport | Vert.x TCP Event Bus | Kafka, AMQP 1.0 (ActiveMQ Artemis) | HTTP/1, HTTP/2, WebSocket, gRPC |
| Connection | Peer-to-Peer | Message Broker | Peer-to-Peer |
| Communication | Bidirectional | Unidirectional | Bidirectional (HTTP/2, WS, gRPC) |
| Payloads | | | JSON, Protobuf (gRPC) |
| Message Channel Types | Point-to-point, publish/subscribe, request/reply | Point-to-point (queue), publish/subscribe (topic) | Request/reply, streaming (WS, gRPC) |
| Location Transparency | Yes (Event Bus address) | Yes (topic) | Yes (internal DNS) |
| Latency (Relative) | Low | High | Low/Medium |
| Scalability (Relative) | Low/Medium | High | Medium |
| Flow Control | No | Yes | No (HTTP), Yes (gRPC) |
| Delivery Guarantees | At-Most-Once | At-Least-Once (configurable) | At-Most-Once |
| Ordering Guarantees | No | Yes | No |
| Consumer Groups | No | Yes | N/A |
| Load Balancing | Yes (round-robin send to multiple registered handlers) | N/A | Yes (k8s Service or Istio traffic routing) |
| Timeouts | Yes | Yes (producer) | Yes (HTTP, gRPC, Istio) |
| Automatic Retries | Yes (Circuit Breaker, RxJava) | Yes (broker) | Yes (Circuit Breaker, Istio) |
| Circuit Breakers | Yes | N/A | Yes (Hystrix or Istio) |
| Distributed Tracing | No | Yes | Yes |
| Service Mesh Compatible | No | No | Yes |

Considerations

So, which approach should you choose? There is no clear winner; it depends entirely on your use case. With Vert.x Clustering, you gain many features, but those features come with many related constraints. With microservices, you gain simplicity, but you must rely on other tools, such as Infinispan, for distributed data. You can see a comparison of the pros and cons of each approach below.

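| | Vert.x Clustering | Vert.x Message-Driven | Vert.x Microservices |
|---|---|---|---|
| Pros | Low latency; distributed maps, counters, and locks; high availability; peer-to-peer Event Bus with no message broker | Delivery and ordering guarantees; consumer groups; high scalability; flow control; reliable delivery across data centers | Simplest to implement, with no cluster manager or message broker; service lookup via Kubernetes DNS; service mesh compatible (Istio load balancing, circuit breakers, distributed tracing) |
| Cons | No delivery or ordering guarantees; limited scalability; no delivery across data centers; not service mesh compatible | Relatively high latency; depends on a message broker; unidirectional communication; not service mesh compatible | No delivery guarantees; consumers must be idempotent; distributed data requires another tool, such as Infinispan |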

Conclusions

Building distributed systems is difficult, but Vert.x offers many features and a lot of flexibility to build exactly what you need for your particular use case. It’s best to start with the fundamentals, such as whether or not you need message delivery guarantees. Given those basic requirements, you can begin to design your system.

If you need commercial support, Red Hat supports Vert.x through Red Hat OpenShift Application Runtimes, plus you can get bundled support for Data Grid (Infinispan), AMQ, and more. You can also utilize Red Hat Consulting services to help your team architect Vert.x for your needs. Please reach out to ermurphy@redhat.com for any inquiries.
