Red Hat Blog

Red Hat Ceph Storage 4 brought the upstream Ceph Nautilus codebase to our customers and laid the foundation of our Ceph storage product portfolio for the rest of the year. Version 4.0 is integrated with OpenStack Platform 16 from the start, enabling customers to roll out the latest and greatest across the Red Hat portfolio: OpenStack Platform 16 on RHEL 8.1 with Red Hat Ceph Storage 4.0 through a single, director-driven install process that equally supports disaggregated and hyperconverged configurations. The combination of BlueStore and Beast.ASIO as default components literally doubled our object store write performance compared to 12 months ago, and that is just the start of our object story for what promises to be a very busy year.

4.1

Today, we are releasing version 4.1 of Red Hat Ceph Storage (RHCS), the newest update to our infrastructure storage offering, establishing a new standard for software-defined storage usability and new milestones in security and lifecycle management. Bold words, says you. Read on, says I.

Ceph Storage 4.1 introduces an interface between the Object Storage Gateway (RGW) and HashiCorp Vault, giving our customers running petabyte-scale S3 storage clusters access to the popular key management product and its support for versioned keys for object encryption. This functionality was developed with the team at Workday.
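From the client side, asking for an object to be encrypted with a KMS-managed key uses the standard S3 SSE-KMS request headers. The sketch below only builds those headers. It assumes the gateway has already been configured to resolve key names against Vault (that configuration is not shown here), and the key name `my-bucket-key` is purely hypothetical.

```python
def sse_kms_headers(key_id):
    """Return the S3 request headers that ask for SSE-KMS with the named key."""
    if not key_id:
        raise ValueError("key_id must be a non-empty string")
    return {
        # Standard S3 header selecting KMS-backed server-side encryption.
        "x-amz-server-side-encryption": "aws:kms",
        # Name of the key held by the key management service.
        "x-amz-server-side-encryption-aws-kms-key-id": key_id,
    }


# Hypothetical key name, used for illustration only.
headers = sse_kms_headers("my-bucket-key")
```

These headers would accompany an ordinary S3 `PUT` of the object; the encryption itself happens on the gateway.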

Another enhancement targeting object store users is the support for Amazon S3’s Secure Token Service (STS) in RGW. STS is a standalone REST service that provides temporary tokens for an application or user to access a Simple Storage Service (S3) endpoint after the user authenticates against an identity provider (IDP). With this latest update, Ceph Object Gateway supports STS `AssumeRoleWithWebIdentity`. This service allows web application users who have been authenticated with an OpenID Connect/OAuth 2.0 compliant IDP to access S3 resources through RGW.
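As a rough illustration of what such a request carries, the sketch below builds the form-encoded body of an `AssumeRoleWithWebIdentity` call using the parameter names from the AWS STS API. The role ARN, session name and token are placeholders, and how the request is sent to a particular gateway endpoint is left out, since that depends on the deployment.

```python
from urllib.parse import urlencode


def assume_role_with_web_identity_body(role_arn, session_name,
                                       web_identity_token,
                                       duration_seconds=3600):
    """Build the form-encoded body of an AssumeRoleWithWebIdentity request."""
    if duration_seconds <= 0:
        raise ValueError("duration_seconds must be positive")
    params = {
        "Action": "AssumeRoleWithWebIdentity",
        "Version": "2011-06-15",           # STS API version string
        "RoleArn": role_arn,
        "RoleSessionName": session_name,
        # Token issued by the OpenID Connect identity provider.
        "WebIdentityToken": web_identity_token,
        "DurationSeconds": str(duration_seconds),
    }
    return urlencode(params)


# All three values below are hypothetical placeholders.
body = assume_role_with_web_identity_body(
    "arn:aws:iam:::role/S3Access", "demo-session", "<token-from-idp>")
```

The response to such a call contains temporary credentials (access key, secret key and session token) that the application then uses for its S3 requests.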

Lifecycle management and platform support form the second area delivering major improvements. This release introduces support for Extended Update Support (EUS) RHEL distributions, starting with 8.2. This is a first for a Red Hat storage product, supporting our container images on the 2-year RHEL minor version lifecycle for customers who wish to minimize upgrade churn.

This is a need we see prevalently in the Finance and Telco verticals, and consequently we are enabling this for the upcoming OpenStack Platform 16.1. Support for the regular RHEL lifecycle remains unchanged, and it now includes RHEL 7.8 and 8.2. A further enhancement allows denser colocation of the Grafana container, increasing the capabilities of our minimal three-node configuration.

CephFS snapshots are now fully supported. This is interesting in its own right, and will see further use to enable backup and restore of Kubernetes in an upcoming release of OpenShift Container Storage.

Several improvements were made to the Ceph Storage Dashboard. My favorite is the addition of BlueStore compression stats.

Of course, these highlights are not the complete story. Do review the release notes for the gory details. But before you do that, let me walk you through the grand tour of the major changes we introduced with the 4.0 release.

Security

Security-conscious customers will take note of the support for the FIPS-140-2 standard provided through the use of RHEL’s core cryptographic components as they become certified by NIST. In combination with other enhancements, this has already enabled a Red Hat partner selling services in the Federal space to attain FedRAMP certification for a private cloud built with OpenStack Platform and Ceph Storage on top of a “FIPS mode” RHEL install.

The introduction of the messenger v2 protocol enabled encryption of the Ceph protocol itself, empowering customers wishing to encrypt a Ceph cluster’s private network to do so. While the threat of rogue insiders roaming in one’s datacenter cannot be mitigated by software means, this feature enables our customers to pursue policies where everything transmitted over the network is encrypted, irrespective of physical or VLAN segregation of traffic.
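For a sense of what turning this on involves, here is a minimal sketch that renders a configuration fragment asking for encrypted ("secure") connections everywhere. The option names `ms_cluster_mode`, `ms_service_mode` and `ms_client_mode` come from the upstream Ceph messenger v2 documentation; whether to set them in a file or through the cluster's central configuration, and in what order to roll them out, is something to check against the product documentation.

```python
import configparser
import io


def secure_messenger_conf():
    """Render a [global] fragment requesting encrypted messenger v2 traffic."""
    conf = configparser.ConfigParser()
    conf["global"] = {
        "ms_cluster_mode": "secure",   # daemon-to-daemon traffic
        "ms_service_mode": "secure",   # what daemons accept from clients
        "ms_client_mode": "secure",    # what clients request from daemons
    }
    buf = io.StringIO()
    conf.write(buf)
    return buf.getvalue()


fragment = secure_messenger_conf()
```

Printing `fragment` yields a small INI-style block that can be compared against an existing `ceph.conf`.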

Support for multi-factor auth delete in RGW rounds out the security features found in 4.0. Multi-factor authentication adds an extra layer of security against accidental or malicious bucket deletion. Requiring MFA when changing the versioning state of a bucket or permanently deleting an object version helps mitigate security issues from compromised credentials, because a second factor must be provided to authenticate successfully.
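To make the "second factor" concrete, the sketch below computes a time-based one-time password as specified in RFC 6238 and places it in the S3 `x-amz-mfa` header, whose value is the device serial, a space, and the current code. It assumes a TOTP device registered with HMAC-SHA1 and a 30-second step; the serial `demo-serial` and the shared secret are made up for the example.

```python
import hashlib
import hmac
import struct


def totp(secret, unix_time, step=30, digits=6):
    """Compute an RFC 6238 time-based one-time password (HMAC-SHA1)."""
    counter = unix_time // step
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F                      # dynamic truncation
    chunk = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(chunk % 10 ** digits).zfill(digits)


def mfa_header(serial, code):
    """Build the S3 MFA header: '<device serial> <current code>'."""
    return {"x-amz-mfa": f"{serial} {code}"}


# Hypothetical serial and secret, for illustration only.
code = totp(b"12345678901234567890", unix_time=59)
header = mfa_header("demo-serial", code)
```

A request to delete an object version on an MFA-protected bucket would carry this header alongside the usual signed credentials.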

Management

Ceph Storage 4 maintained compatibility with existing Ceph installations while enhancing Ceph’s overall manageability. By adding a new management UI providing operators with better oversight of their storage clusters, Ceph Storage no longer requires mastering the internal details of the RADOS distributed system, significantly lowering the learning curve to SDS adoption. Operators can now manage storage volumes, create users, monitor performance and even delegate cluster management from the new dashboard interface. Power users can operate clusters from the command line just as before, but with additional delegation options available for their junior administrators, combining the best of both worlds.

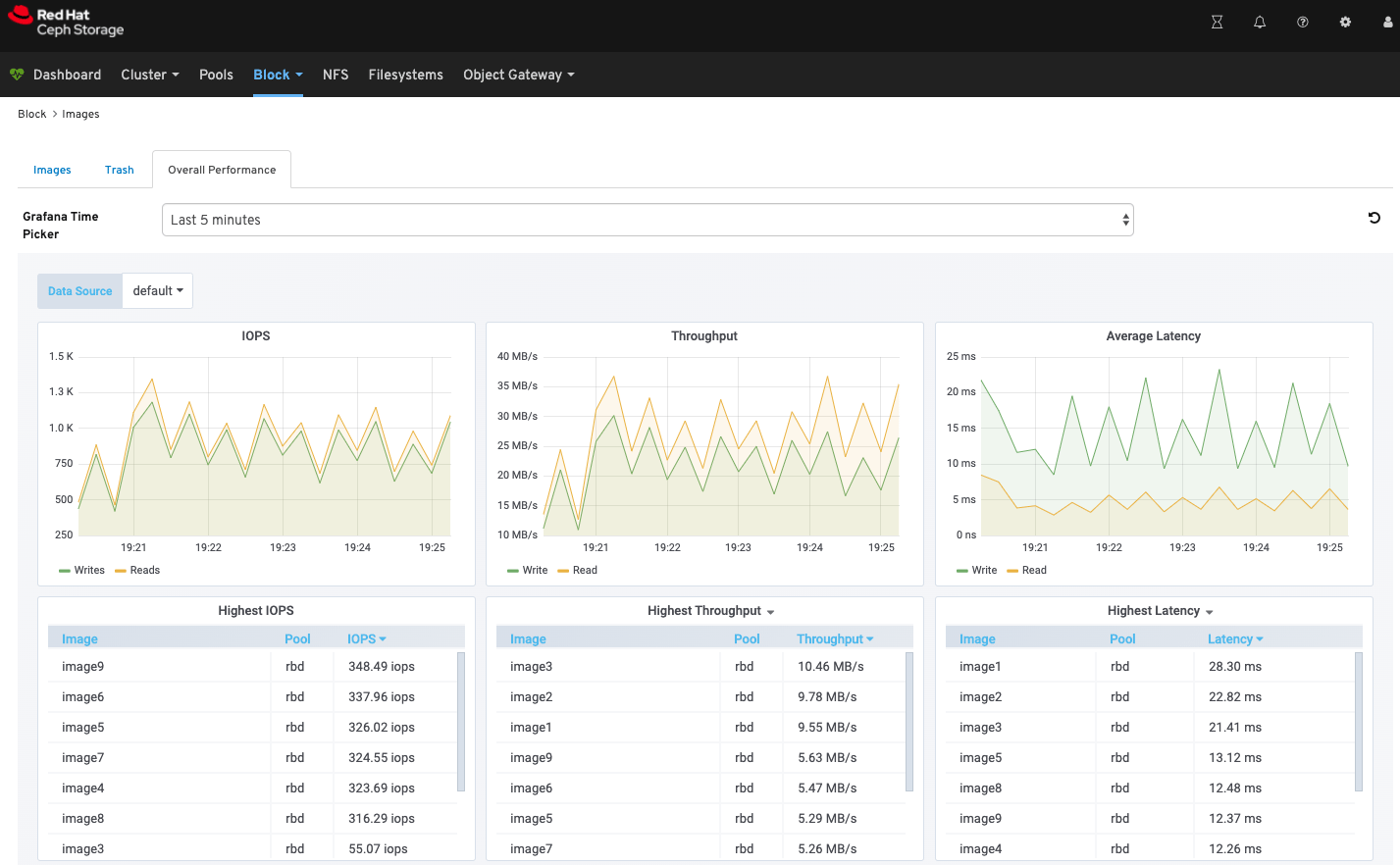

We also helped you put noisy neighbors in their place, with the long-awaited arrival of “RBD Top”. Integrated within the Dashboard is a facility providing a bird’s-eye view of the overall block workloads’ IOPS, throughput, and average latency. It includes a ranked list of the top 10 images with the highest IOPS and throughput, as well as those images experiencing the highest request latency, hence its moniker.

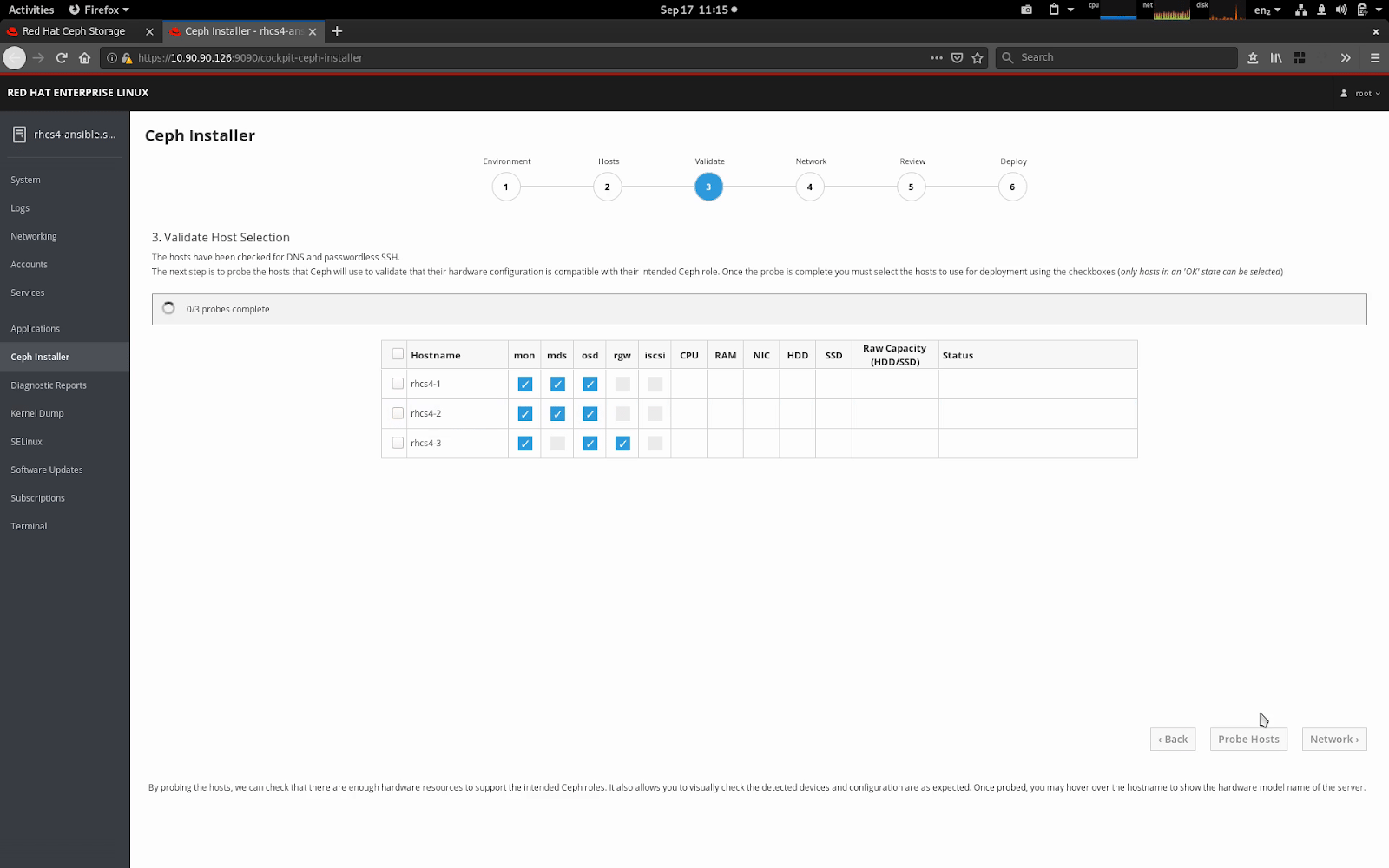

Last but not least, we added the simplest install experience for any Ceph Storage yet. During our most successful beta program to date, we introduced a new GUI installation tool built on top of the Cockpit web console.

The UI installer was a further step forward in usability, delivering an opinionated install experience backed by the same Ansible logic that many operators are already familiar with, and requiring no Ceph experience whatsoever on the part of those deploying a Ceph cluster for the first time.

While selecting hardware from a reference architecture remains necessary to ensure a good balance of economics and performance, the installer removes Ceph expertise as a hurdle to deploying a cluster for hands-on evaluation. The install UI guides users with no prior Ceph knowledge to build clusters ready for use by providing sensible defaults and making the right choices without asking the operator for much more than a set of servers to turn into a working cluster.

Overall, making Ceph easier to install and manage significantly lowers the barrier to advanced, enterprise-class storage technology for a broader set of end users with no distributed systems expertise.

Scale

Our Nautilus-based releases bring increased robustness to the class of storage clusters larger than 10 petabytes increasingly common in our S3 Object Store, Data Analytics and AI user bases. We are part of a rarified group of vendors with more than a handful of customers with S3-compatible capacity exceeding 10PB, with our leading edge customers exceeding 50PB now. We are proud of the work we are doing together with these advanced customers, and how they push us to continuously improve the product with requirements that foreshadow what the broader market will need in the years ahead.

Increasing automation of the cluster’s internal management (now including `pg_num` alongside bucket sharding and data placement activities) is a key element of storage scalability without requiring an ever-expanding operations staff.
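To see what is being automated, consider the arithmetic operators used to do by hand when choosing `pg_num` for a pool. The sketch below implements the commonly cited rule of thumb of roughly 100 placement groups per OSD, divided by the replica count and rounded to the nearest power of two. It is an illustration of that manual heuristic under those assumptions, not the autoscaler's actual algorithm, and the function and parameter names are invented for the example.

```python
def suggested_pg_num(osd_count, replica_size,
                     target_pgs_per_osd=100, data_fraction=1.0):
    """Rule-of-thumb pg_num for one pool, rounded to the nearest power of two.

    data_fraction is the share of the cluster's data expected in this pool.
    """
    if osd_count <= 0 or replica_size <= 0:
        raise ValueError("osd_count and replica_size must be positive")
    raw = osd_count * target_pgs_per_osd * data_fraction / replica_size
    power = 1
    while power * 2 <= raw:          # largest power of two not above raw
        power *= 2
    if raw - power > 2 * power - raw:  # the next power of two is closer
        power *= 2
    return power


small = suggested_pg_num(osd_count=10, replica_size=3)
larger = suggested_pg_num(osd_count=12, replica_size=3)
```

With three-way replication, 10 OSDs give a raw figure of about 333, which rounds down to 256, while 12 OSDs give 400, which rounds up to 512. Recomputing this as pools and hardware change is exactly the chore that automation removes.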

A new implementation of the RGW’s webserver and more robust MDS scaling in CephFS joined a robust amount of tuning under the hood to deliver the scalability enhancements our users have come to expect from the Ceph platform every year. The combination of the new object store front-end with the BlueStore backend results in twice the object store write performance we were delivering just a year ago! As we continue to push storage scale boundaries further up (and out), improved monitoring tools like “RBD Top” are needed to provide immediate insight into which clients are generating the most I/O.

In addition, we delivered TCO improvements at the lower end of the capacity range by introducing a minimum configuration of three servers and a recommended configuration of at least four (down from five and seven, respectively) for customers that are looking at compact Object Store configurations to start their scale-out infrastructure.

We’re Always Ready to Support You

Red Hat Ceph Storage 4 is the first Ceph release delivered with a 60-month support lifecycle available right from the start, thanks to an extension beyond three years optionally available through Red Hat’s ELS program. As always, if you need technical assistance, our Global Support team is but one click away using the Red Hat support portal.

Do try this at home

Red Hat Ceph Storage is available from Red Hat’s website. Try your hand at the future of storage today!

For more information on Red Hat Ceph Storage, please visit our Ceph Storage product page.

About the author

Federico Lucifredi is the Product Management Director for Ceph Storage at Red Hat and a co-author of O'Reilly's "Peccary Book" on AWS System Administration.