Red Hat Blog

In this post we are going to discuss the challenges network operators currently face around the ongoing certification of containerized network functions (CNFs) or virtual network functions (VNFs), and how those challenges can be addressed with virtio data path acceleration (vDPA). vDPA is an innovative open and standards-based approach for CNF/VNF network acceleration.

If you are interested in understanding the certification problem, how acceleration is done today through vendor-proprietary SR-IOV drivers, and how vDPA provides the same capabilities in an open way that solves the certification challenge, please read on!

So let’s talk about certification

When a network service provider decides to add new VNFs or CNFs to its infrastructure, it faces the challenge of validating and certifying those VNFs/CNFs for operational correctness within its particular operational environment.

To accomplish this, the VNFs/CNFs must be validated when they are first onboarded, and revalidated each time the infrastructure changes or the VNFs/CNFs themselves are upgraded.

It is this latter portion that at least partially motivated our recent work on vDPA. Another key motivation, or rather goal, for this work is very simple: as a network operator, I want containers and VMs to get the maximum network performance available on the platform they run on, without any special intervention on the operator’s part. Finally, consider the many contemporary use cases where power, cooling or computational form factor/packaging are limited; in these cases, less is more, and vDPA helps reduce computational requirements.

Certification processes involve dedicated labs, broad automation/CI development, and a lot of people to keep everything running like clockwork and to stay on top of things when problems occur. In short, this is a costly process, very costly. Beyond the engineering resources and related costs, it also adds to the time it takes to complete recertification.

Multiply the time by the costs and you can quickly see how things get worse the more functions need to be certified in various configurations; in essence, an unwelcome and often unexpected combinatorial explosion is upon us!

In typical deployments, when telcos want their VNFs/CNFs to be performant and run efficiently (high throughput and low latency) they will combine two building blocks:

- A data plane development kit (DPDK) library in the VNF/CNF application to process packets as fast as possible (avoiding kernel system calls).

- Single root input/output virtualization (SR-IOV) to steer packets quickly and with low latency between the application and the hardware network interface card (NIC).

For SR-IOV to work with DPDK in VNFs/CNFs, a vendor-specific DPDK driver, called a poll mode driver (PMD), must be added to the application’s image, and it must match the NIC used in the physical server. It is called a poll mode driver because it polls the hardware rather than relying on interrupts in order to achieve the best performance.

The driver is part of the VNF/CNF image, which means that we need a new image for each SR-IOV NIC used on the servers. What’s more, since these are different images (of the exact same application), we also need to test all the possible combinations of the images running on different servers.

This implies that for a VNF/CNF to run on two types of NICs the certification needs to be done for two combinations. Things get out of hand quickly.

For 3 NICs and 3 apps you now have 6 combinations (3! = 3x2x1). For 5 NICs and 5 apps you now have 120 combinations (5! = 5x4x3x2x1). As you can see, once you start creating separate images with vendor NIC drivers, the number of combinations to certify grows factorially, and so does the cost.

Virtio data path acceleration (vDPA) aims to address this problem. By integrating a single open standard PMD in the VNF/CNF image, decoupled from the vendor’s NICs, and having the NICs implement a virtio data path, we are able to flatten the previous factorial growth. In other words, vDPA is a powerful solution for addressing the certification challenge of network accelerated VNFs/CNFs.
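To make the arithmetic concrete, here is a minimal Python sketch of the counting used above. The function names are ours and purely illustrative. The factorial figure follows this post's own counting for n NICs and n apps; the image counts follow from needing one image per NIC type with vendor drivers versus a single image per app with one standard PMD.

```python
from math import factorial


def combinations_to_certify(n: int) -> int:
    """Combinations under this post's counting for n NIC types and n apps: n!."""
    return factorial(n)


def images_with_vendor_pmd(apps: int, nic_types: int) -> int:
    """One image per (app, NIC type) pair when each image embeds a vendor PMD."""
    return apps * nic_types


def images_with_virtio_pmd(apps: int) -> int:
    """One image per app when every image embeds the same standard virtio PMD."""
    return apps


# Columns: n, combinations to certify, vendor-PMD images, virtio-PMD images
for n in (2, 3, 5):
    print(n, combinations_to_certify(n), images_with_vendor_pmd(n, n), images_with_virtio_pmd(n))
```

For n = 5 this prints 120 combinations and 25 vendor-specific images, against 5 images when every app carries the same virtio PMD.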

This work is part of the virtio-networking community, and we have published a number of blogs for additional reading around enhancing the open standard virtio networking interface. The next few sections will explain how this is done in practice starting with CNFs and moving on to VNFs.

Kubernetes CNIs and Multus

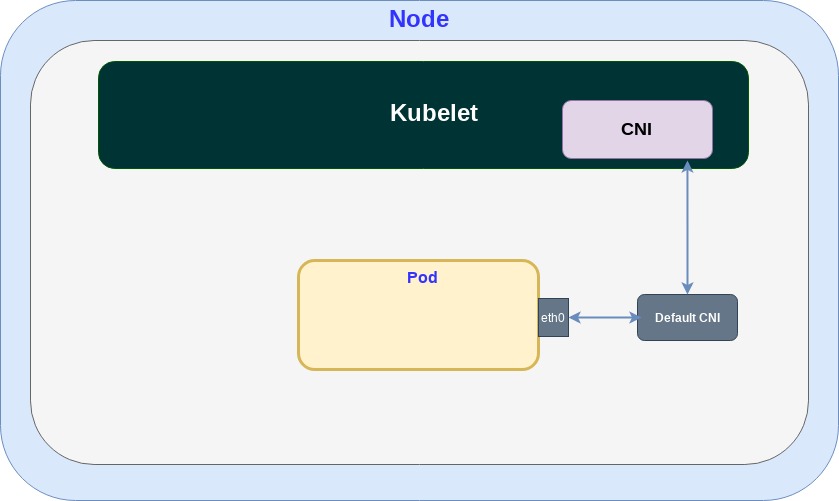

Kubernetes Container Network Interface (CNI) plugins are used to deploy additional networking features. The standard Kubernetes networking model is focused on simplicity: make a pod appear to the application developer as a single host with a single network interface (in our example “eth0”):

In order to provide advanced network services for CNFs we now require the ability to receive and transmit directly to high speed L2/L3 interfaces. The previous standard “eth0” interface does not provide such advanced capabilities since it goes through the Linux kernel, and is also used for pod management.

Thus we need the ability to attach an additional network interface to the Kubernetes pod, providing the desired high speed interfaces and separating it from the pod management interface.

Multus is a CNI plugin that allows multiple network types to be added to the pod. Through the additional networks we can add the desired L2 and L3 fast network interfaces. In the following diagram, we have “eth0” attached to the pod as the standard network interface and an additional “net0” interface attached for accelerated networking:

The additional interface uses the CNI plugin so that the state of the new “net0” can be managed by Kubernetes services.
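As a rough illustration of how a pod asks Multus for the extra interface, the sketch below builds a pod manifest as a Python dictionary. The pod name, image and network name are hypothetical. The network name is assumed to match a NetworkAttachmentDefinition already created in the cluster. The annotation key is the one Multus reads, and the JSON form of its value lets us request the interface name “net0” used in this post.

```python
import json

# Hypothetical names, for illustration only.
NETWORK_NAME = "accelerated-net"   # assumed to match an existing NetworkAttachmentDefinition
POD_NAME = "cnf-example"
IMAGE = "example.com/cnf:latest"

# Multus reads this annotation to attach additional networks to the pod.
networks = [{"name": NETWORK_NAME, "interface": "net0"}]

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {
        "name": POD_NAME,
        "annotations": {"k8s.v1.cni.cncf.io/networks": json.dumps(networks)},
    },
    "spec": {"containers": [{"name": "cnf", "image": IMAGE}]},
}

# JSON is valid YAML, so this output could be fed to `kubectl apply -f -`.
print(json.dumps(pod, indent=2))
```

The default “eth0” interface is not mentioned anywhere in the manifest; it is still provided by the cluster's primary CNI, and only the additional interface is requested through the annotation.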

Accelerating containers with SR-IOV

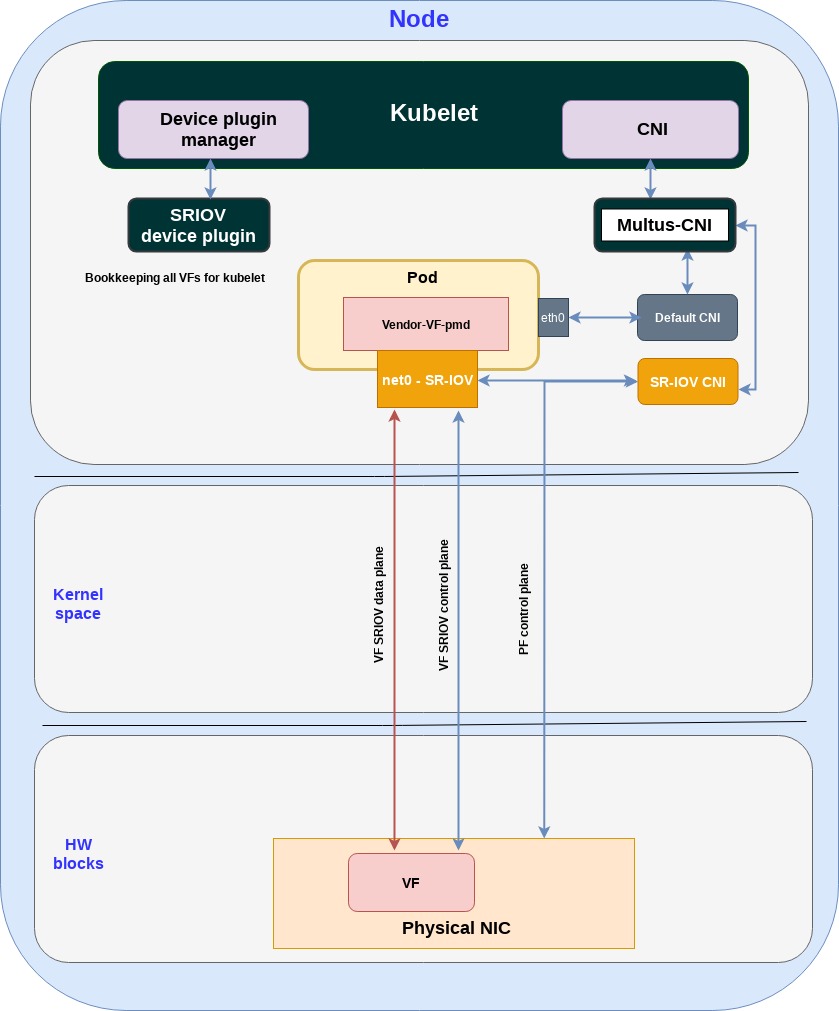

With Multus providing additional network types, let’s examine how things are done today for SR-IOV.

SR-IOV is used for accelerating both VNFs and CNFs; however, for this example we will stick to CNF acceleration.

SR-IOV enables a physical NIC, which is managed through its physical function (PF), to be split into multiple virtual functions (VFs) and makes them available for direct I/O to a VM or user space application. It’s widely used for CNFs since it provides low latency, high throughput and hardware based quality of service. As mentioned, this is typically done in combination with DPDK, where the DPDK app performs the packet processing and SR-IOV performs the packet steering.

The following diagram shows network acceleration for containers based on SR-IOV:

Let’s go over the different blocks:

- SR-IOV CNI: interacts with the pod (adding an accelerated interface) and the physical NIC (provisioning VFs).

- SR-IOV device plugin: performs the SR-IOV resource bookkeeping, manages the pool of VFs and allocates VFs to containers.

- vendor-VF-pmd: part of the DPDK library running in the application, used for managing the control/data plane to the NIC’s VFs.

- net0-SR-IOV interface: connects the vendor-VF-pmd driver inside the pod to the physical NIC’s VF.

As pointed out, the main drawback of this approach is that each vendor implements its own data plane/control plane through SR-IOV (for example, proprietary ring layouts). This means that one vendor’s vendor-VF-pmd driver is not compatible with another vendor’s NIC, so the application image ends up tightly coupled to the server’s physical NIC.
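The bookkeeping role of the device plugin can be pictured with a small toy model. This is only a sketch of the idea of a VF pool, with class and method names we made up; it does not reflect the actual SR-IOV device plugin code or the Kubernetes device plugin API.

```python
class VfPool:
    """Toy model of VF bookkeeping for one physical function (PF)."""

    def __init__(self, pf_name: str, num_vfs: int) -> None:
        self.pf_name = pf_name
        # VF identifiers here are invented labels, not real PCI addresses.
        self._free = [f"{pf_name}-vf{i}" for i in range(num_vfs)]
        self._allocated = {}  # maps VF label -> pod name

    @property
    def available(self) -> int:
        return len(self._free)

    def allocate(self, pod: str) -> str:
        """Hand the next free VF to a pod, or fail if the pool is exhausted."""
        if not self._free:
            raise RuntimeError(f"no free VFs left on {self.pf_name}")
        vf = self._free.pop(0)
        self._allocated[vf] = pod
        return vf

    def release(self, vf: str) -> None:
        """Return a VF to the pool when its pod goes away."""
        del self._allocated[vf]
        self._free.append(vf)


pool = VfPool("pf0", num_vfs=2)
vf_a = pool.allocate("pod-a")
vf_b = pool.allocate("pod-b")
print(vf_a, vf_b, pool.available)
pool.release(vf_a)
print(pool.available)
```

Once both VFs are handed out, a third `allocate` call raises, which mirrors a pod staying unscheduled when a node has no free VFs to offer.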

For additional details on the SR-IOV solution and how it compares to vDPA see our post "Achieving network wirespeed in an open standard manner: introducing vDPA."

Accelerating containers with vDPA

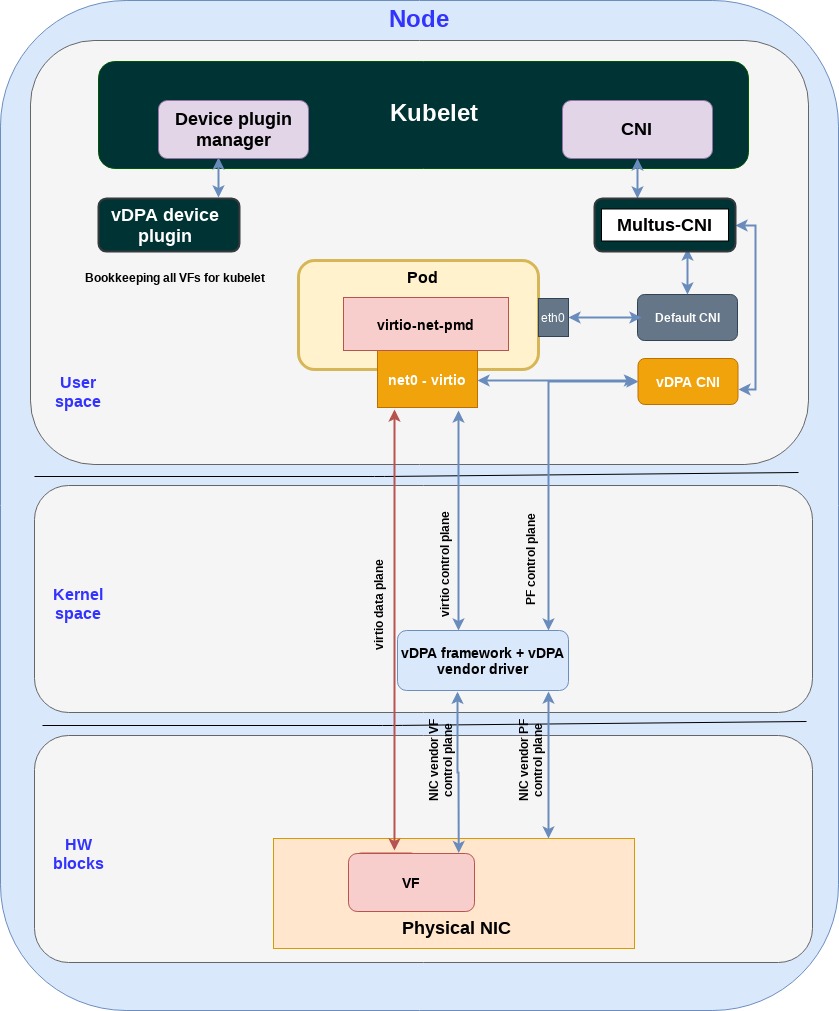

So let’s move to vDPA and explain what all the fuss is about.

vDPA is another approach for inserting high performance networking interfaces directly into containers, as well as VMs. It’s similar to SR-IOV since the NIC exports VFs to the user space CNF.

Like SR-IOV, it can also be used to connect high speed NICs to user space DPDK applications running as CNFs and accelerate them. As we said earlier, this applies to both containers and virtual machines, which makes the solution additive while also simplifying things for the network operator. As you will see, even when numerous different network interface cards are added to the same machine configuration, the containers or VMs do not notice, and more importantly, neither does the network operator.

The goal here is for containers and VMs to simply enjoy the highest network performance available on the platform when they are executing without any special intervention required on the operator’s part.

vDPA is based on the virtio network device interface for its data plane, an open standard adopted among NIC vendors (see the virtio spec). The standard has been around for over 10 years, mainly used for virtualization, so it’s stable and hardened.

vDPA also provides a translation layer from vendor specific control planes to the standard virtio network device control plane. This is a key point for NIC vendors. To support vDPA, they only need to provide a translation kernel driver instead of changing anything in each of their own control planes. The NIC vendors need only support the virtio data plane in their NIC hardware.

Similar to SR-IOV, vDPA exports VFs. However, since the data path operation (such as the ring layout) is now standard, we can have a single standard PMD inside the CNF which is decoupled from the vendor’s NIC:

Let’s go over the different blocks:

- vDPA CNI: interacts with the pod (adding accelerated interfaces) and the physical NIC (provisioning VFs).

- vDPA device plugin: responsible for bookkeeping VF resources.

- virtio-net-pmd: manages the control plane and data plane, and is decoupled from the vendor’s NIC.

- net0-virtio: connects the virtio-net-pmd driver inside the pod to the physical NIC’s VF.

- vDPA framework + vDPA vendor drivers: responsible for providing the framework. Each NIC vendor can hook into the framework, maintaining its own control plane while implementing the standard virtio network device data plane in hardware (e.g. using the virtio ring layout).

If you take a second and compare this diagram with the previous SR-IOV diagram, you can see that in the SR-IOV approach both the control plane and the data plane go directly from the pod to the physical NIC, based on a vendor proprietary implementation.

In the vDPA approach the data plane goes directly from the pod to the physical NIC using the standard virtio ring layout the NIC vendor implements. The control plane, however, does not go directly from the pod to the NIC. Instead it passes through a vDPA translation framework in the kernel, which enables the NIC vendors to maintain their proprietary control planes and still expose standard virtio control and data planes to the pod.

Now that you have a network acceleration approach for CNFs that is decoupled from the vendor’s NIC, you are able to effectively address the certification problem we described in the introduction. It doesn’t matter how many physical servers with different vendor NICs are used: it’s always the same CNF image running over all the NICs, once these cards support vDPA.
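To give a feel for what a standard ring layout means in practice, the following sketch packs and unpacks one descriptor of a virtio split virtqueue as defined in the virtio specification: a 64-bit buffer address, a 32-bit length, 16-bit flags and a 16-bit next index, all little-endian. The helper names are ours, and we assume the split virtqueue format here (the spec also defines a packed format). Any NIC that implements this layout in hardware can be driven by the same PMD.

```python
import struct

# Descriptor flags from the virtio specification (split virtqueue).
VIRTQ_DESC_F_NEXT = 1   # descriptor continues via the `next` field
VIRTQ_DESC_F_WRITE = 2  # buffer is device write-only (otherwise device read-only)

# le64 addr, le32 len, le16 flags, le16 next; "<" means little-endian, no padding.
DESC_FORMAT = "<QIHH"
DESC_SIZE = struct.calcsize(DESC_FORMAT)


def pack_desc(addr: int, length: int, flags: int = 0, next_idx: int = 0) -> bytes:
    """Serialize one descriptor table entry."""
    return struct.pack(DESC_FORMAT, addr, length, flags, next_idx)


def unpack_desc(raw: bytes) -> dict:
    """Parse one descriptor table entry back into named fields."""
    addr, length, flags, next_idx = struct.unpack(DESC_FORMAT, raw)
    return {"addr": addr, "len": length, "flags": flags, "next": next_idx}


# A receive buffer the device may write into (address and length are made-up values).
raw = pack_desc(addr=0x1000, length=2048, flags=VIRTQ_DESC_F_WRITE)
print(DESC_SIZE, unpack_desc(raw))
```

Each descriptor is 16 bytes regardless of which vendor built the NIC, which is exactly the property that lets one driver serve all of them.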
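The decoupling can be summarized with a small toy model of which image can run on which NIC. The vendor names, the `Nic` and `Image` classes and the `can_run` rule are all hypothetical and only encode the reasoning in this post: an image with a vendor PMD needs that vendor's NIC, while an image with the virtio PMD needs any NIC that supports vDPA.

```python
from dataclasses import dataclass

VIRTIO_PMD = "virtio-net-pmd"


@dataclass(frozen=True)
class Nic:
    vendor: str
    supports_vdpa: bool


@dataclass(frozen=True)
class Image:
    app: str
    pmd: str  # either VIRTIO_PMD or the name of a NIC vendor


def can_run(image: Image, nic: Nic) -> bool:
    """Toy compatibility rule between a CNF image and a server's NIC."""
    if image.pmd == VIRTIO_PMD:
        return nic.supports_vdpa
    return image.pmd == nic.vendor


# Made-up vendors; every card is assumed to support vDPA.
nics = [Nic("vendor-a", True), Nic("vendor-b", True), Nic("vendor-c", True)]

sriov_image = Image(app="firewall", pmd="vendor-a")
vdpa_image = Image(app="firewall", pmd=VIRTIO_PMD)

print([can_run(sriov_image, nic) for nic in nics])
print([can_run(vdpa_image, nic) for nic in nics])
```

In this model the vendor-specific image runs on only one of the three servers, while the single virtio image runs on all of them.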

For additional details on the vDPA CNF solution see our post, "Breaking cloud native network performance barriers."

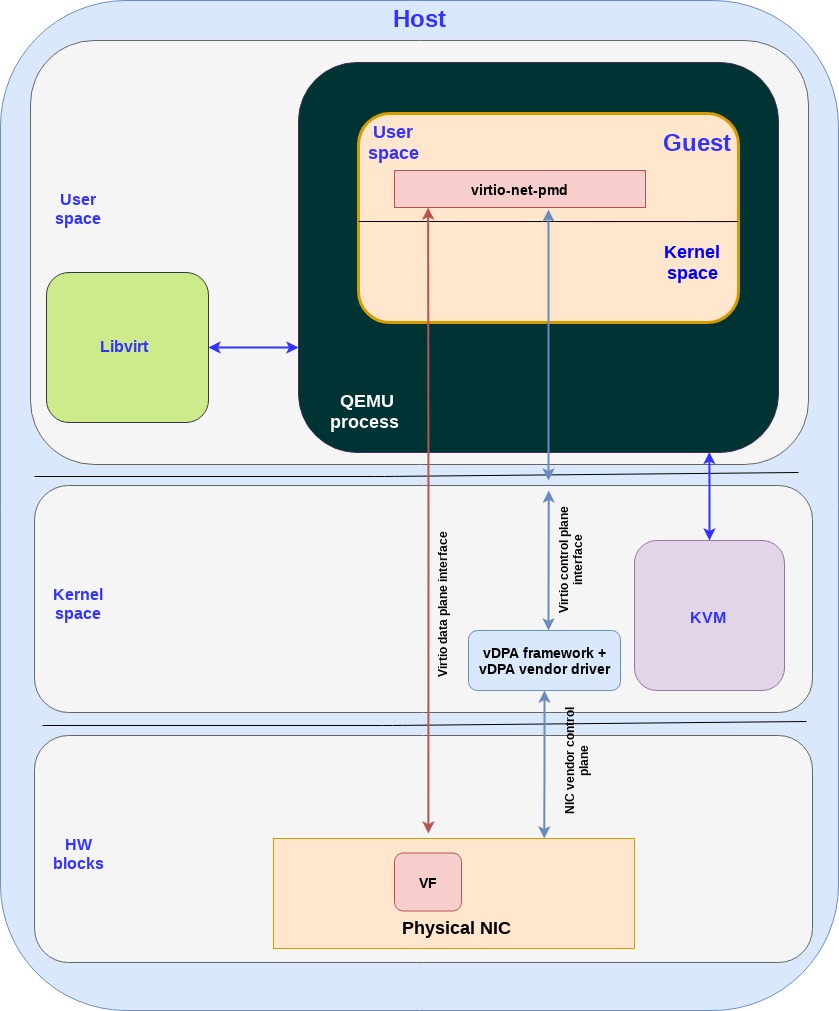

Accelerating VMs with vDPA

Until now we focused on CNFs; however, the exact same vDPA framework can be used for accelerating VNFs as well. This diagram shows how a VNF can be accelerated with vDPA:

Let’s go over the different building blocks:

- KVM: the Linux kernel-based virtual machine, which allows Linux to function as a hypervisor so that a host machine can run multiple isolated virtual environments called guests (also named VMs or VNFs in our discussion). KVM basically provides Linux with hypervisor capabilities.

- QEMU: a hosted virtual machine monitor that, through emulation, provides a set of different hardware and device models for the guest machine. QEMU can be used with KVM to run virtual machines leveraging hardware extensions.

- Libvirt: an interface that translates XML-formatted configurations to QEMU CLI calls. It also provides an admin daemon to configure machine facilities and child processes such as QEMU, so QEMU will not require root privileges.

- Guest: the VNF in our case, running inside the VM. It has a user space and a kernel space. The application is DPDK based and runs in the VM user space.

- virtio-net-pmd: the same driver we use for the CNF solution. Note that the data plane and the control plane messages are the same; however, the control plane transport changes (vhost-user messages instead of ioctl commands).

- vDPA framework + vDPA vendor drivers: the same framework and drivers we use for the CNF solution.

To simplify the discussion we assumed a VNF runs over a single VM; however, a VNF spanning multiple VMs simply spins up multiple VMs/QEMU processes using the same solution described here.

For additional details on the virtualization building blocks see our post, "Introduction to virtio-networking and vhost-net."

When talking about VNFs, not only are we able to effectively address the certification problem we described, but we are also able to support live migration of network accelerated VNFs between servers with different NICs, significantly simplifying telco operations.

For additional details on the vDPA VNF solution we'll refer you to the post "Achieving network wirespeed in an open standard manner: introducing vDPA" once again.

Summary

Let’s refresh the goals we sought to achieve through implementing vDPA. We were first and foremost interested in containers and VMs being connected to the highest network performance available on the platform where they are executing, without any special intervention required on the operator’s part.

This was done in order to avoid the combinatorial explosion of certification which, at first, may seem like a small issue. But, in reality, it’s not! We also see a general trend towards freeing up server CPUs to run more apps by shifting network loads to hardware, as well as saving on power/cooling and computational footprint for use cases such as contemporary edge deployments.

The current SR-IOV approach creates a factorial growth in testing/certification permutations and may not scale cost effectively. vDPA provides scalability by decoupling the driver inside the VNF/CNF image from the specific vendor NIC being used.

The virtio-networking community is leading the vDPA effort spanning multiple upstream communities including the Linux kernel, QEMU, libvirt, DPDK and Kubernetes. The major NIC vendors are doing significant work to support the vDPA approach, and we expect that vDPA production deployments should occur in the next 12-18 months.

Pushing the vDPA approach to public clouds is also being discussed, and the virtio-networking community believes at least some of the public cloud providers will be willing to adopt the vDPA approach. This will enable the deployment of network accelerated CNFs/VNFs on hybrid cloud environments composed of on-prem servers and public clouds.