The 5G Open HyperCore architecture, an open 5G solution that's operable across any hyperscaler, includes many elements. Some of these elements use a service mesh for improved observability and the Multus tool for flexible pod networking in Kubernetes. In this article, we'll look at how Istio uses init containers and the Container Network Interface (CNI), and explore how a sidecar container gets plugged into a pod.

Istio injects an init container (istio-init in particular) into pods deployed with a service mesh. The istio-init container sets up the pod network traffic redirection to and from the Istio sidecar proxy (for all TCP traffic). This requires the user or service account deploying the pods to have elevated Kubernetes role-based access control (RBAC) permissions. This could be problematic for some organizations' compliance requirements, and could even make certain industry security certifications impossible.

The Istio CNI plugin is a replacement for the istio-init container. The istio-cni approach performs the same networking functionality without requiring Kubernetes tenants to have elevated Kubernetes RBAC permissions.

This article compares the details, pros, and cons of the istio-init and istio-cni approaches and offers a recommendation.

About init containers

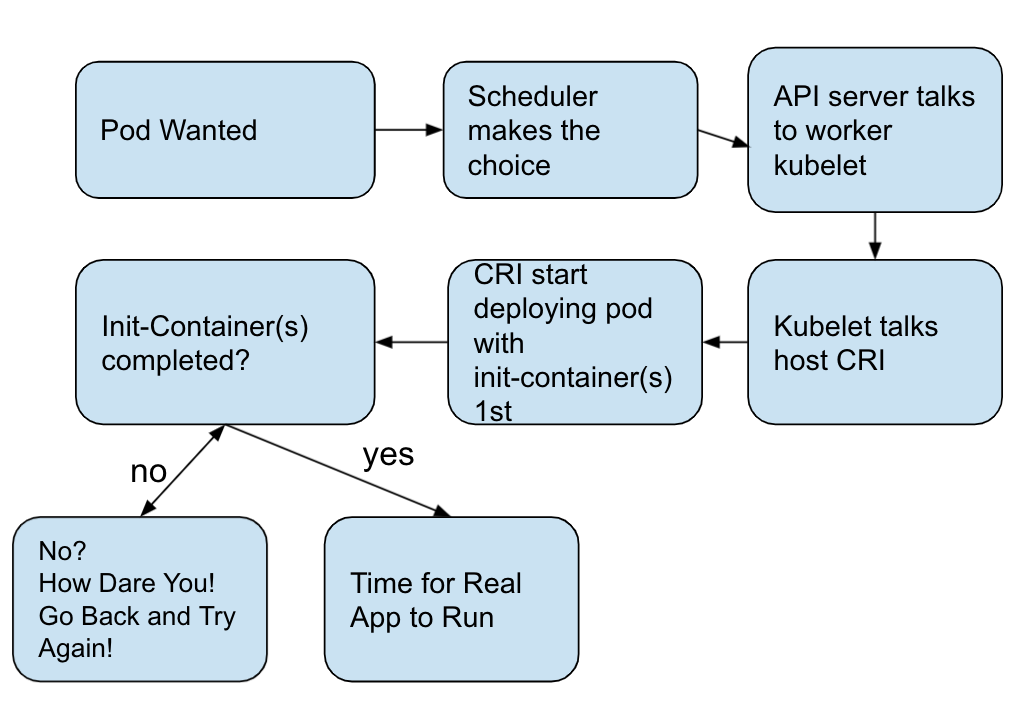

An init container is a dedicated container that runs before an application container launches. It's used to contain utilities and installation scripts that don't exist in the main application container image. Multiple init containers can be specified in a pod, and if more than one is specified, the init containers run sequentially. The next init container can run only after the previous init container runs successfully. Kubernetes initializes the pod and runs the application container only when all init containers have run.

During pod startup, the init container starts after the network and data volumes are initialized. Each container must exit successfully before the next container can start. If it exits due to an error, it produces a container startup failure, and it will retry according to the policy specified in the pod's restartPolicy. The pod is not ready until all init containers run successfully, and the init container terminates automatically after it runs.
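
The ordering rules above can be illustrated with a small simulation. This is only a sketch, not kubelet code: `InitContainer`, `run_init_containers`, and the `max_attempts` cap are hypothetical names introduced for this example, and the real kubelet retries with back-off rather than stopping after a fixed number of attempts.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class InitContainer:
    """Hypothetical stand-in for an init container: a name plus a callable
    that returns an exit code (0 means success)."""
    name: str
    run: Callable[[], int]


def run_init_containers(
    init_containers: List[InitContainer],
    restart_policy: str = "Always",
    max_attempts: int = 3,
) -> bool:
    """Return True if every init container succeeds, in order.

    max_attempts is a simulation-only cap; the real kubelet keeps retrying
    with back-off instead of giving up after a fixed number of tries.
    """
    for container in init_containers:
        attempts = 0
        while container.run() != 0:
            attempts += 1
            # With restartPolicy "Never", one failure fails the whole pod.
            if restart_policy == "Never" or attempts >= max_attempts:
                return False
        # Exit code 0: only now may the next init container start.
    return True


if __name__ == "__main__":
    started = []
    steps = [
        InitContainer("fetch-config", lambda: started.append("fetch-config") or 0),
        InitContainer("istio-init", lambda: started.append("istio-init") or 0),
    ]
    print(run_init_containers(steps), started)
```

The application containers would start only when this function returns `True`, which mirrors the rule that a pod is not ready until all init containers have run successfully.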

Istio is implemented by default with an injected init container called istio-init, which creates iptables rules before other containers in the pod can start. This requires the user or service account that's deploying pods in the service mesh to have sufficient privileges to deploy containers with the CAP_NET_ADMIN and CAP_NET_RAW capabilities. Capabilities are a Linux kernel feature providing more granular permission control than the traditional Unix model, which distinguishes only between privileged and unprivileged processes.

It's best to avoid giving application pods capabilities that are not required by workloads (the applications running within pods). Specifically, CAP_NET_ADMIN provides the ability to configure interfaces, IP firewall rules, masquerading, and promiscuous mode, among other operations. For a deeper look at capabilities, run "man capabilities" in your Linux terminal.

You need to be careful with capabilities. In some scenarios, allowing these capabilities could open the door to container escapes that compromise a node and, later, the whole cluster. One way of mitigating this issue is to take the responsibility for iptables rules out of the pod by using a CNI plugin instead.
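
To see which containers in a pod ask for these capabilities, you could scan the pod manifest. The following is a minimal sketch that assumes the manifest has already been loaded into a Python dictionary; `find_risky_capabilities` and `EXAMPLE_POD` are hypothetical names, and the example manifest is trimmed and illustrative rather than a copy of what Istio injects.

```python
from typing import Dict, List

# Capabilities the article singles out for the istio-init container.
RISKY_CAPABILITIES = frozenset({"NET_ADMIN", "NET_RAW"})


def _normalize(capability: str) -> str:
    """Accept both 'NET_ADMIN' and 'CAP_NET_ADMIN' spellings."""
    name = capability.upper()
    return name[4:] if name.startswith("CAP_") else name


def find_risky_capabilities(pod: Dict) -> Dict[str, List[str]]:
    """Map container name -> sorted risky capabilities it adds."""
    spec = pod.get("spec", {})
    containers = spec.get("initContainers", []) + spec.get("containers", [])
    findings: Dict[str, List[str]] = {}
    for container in containers:
        security_context = container.get("securityContext") or {}
        added = (security_context.get("capabilities") or {}).get("add") or []
        risky = sorted({_normalize(cap) for cap in added} & RISKY_CAPABILITIES)
        if risky:
            findings[container["name"]] = risky
    return findings


# Illustrative pod manifest, trimmed to the fields this check reads.
EXAMPLE_POD = {
    "spec": {
        "initContainers": [
            {
                "name": "istio-init",
                "securityContext": {
                    "capabilities": {"add": ["NET_ADMIN", "NET_RAW"], "drop": ["ALL"]}
                },
            }
        ],
        "containers": [{"name": "app"}],
    }
}

if __name__ == "__main__":
    print(find_risky_capabilities(EXAMPLE_POD))
```

With the CNI plugin approach, a check like this would be expected to come back empty for tenant pods, because the iptables setup no longer happens inside the pod.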

Install Istio with the Istio CNI plugin

The CNI project, part of the Cloud Native Computing Foundation, consists of a specification and libraries for writing plugins to configure network interfaces in Linux containers. It also includes a number of supported plugins. CNI is concerned only with the network connectivity of containers and with removing allocated resources when the container is deleted.

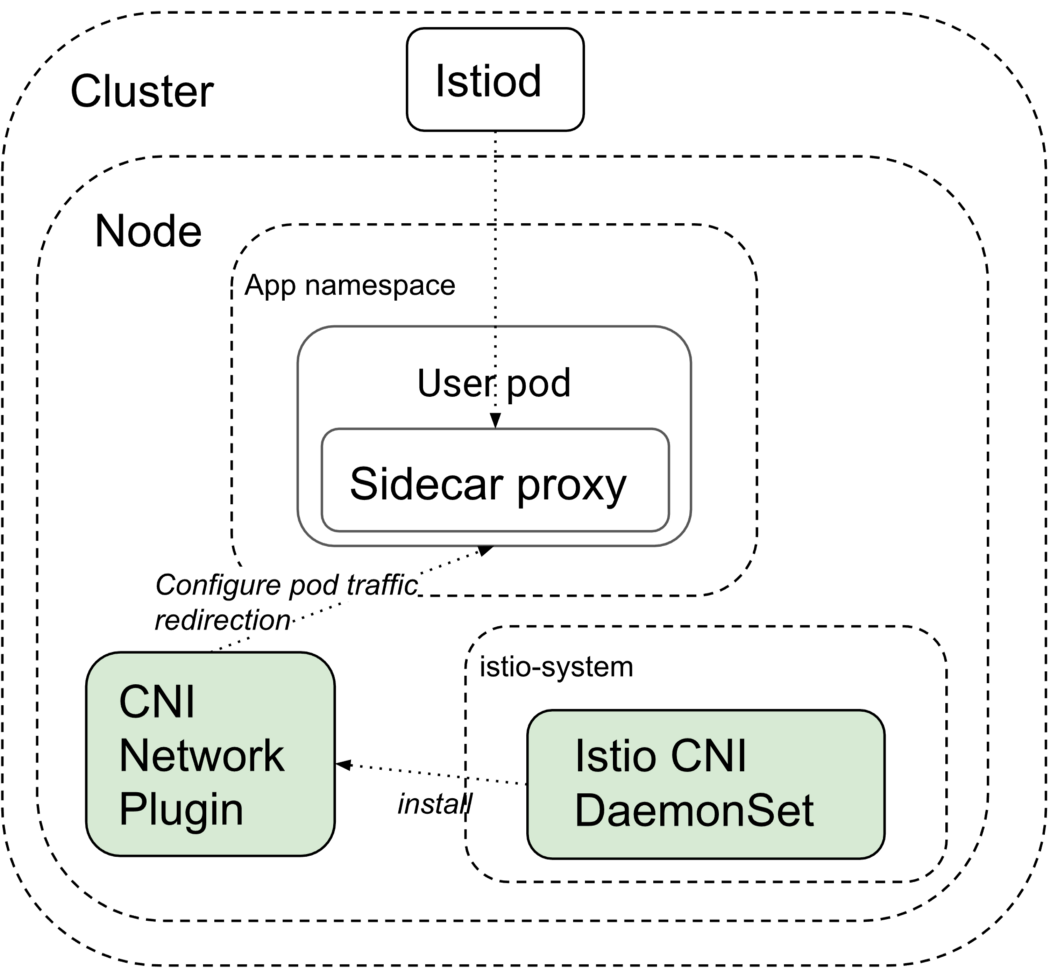

The Istio CNI plugin is a replacement for the istio-init container. It provides the same functionality of configuring iptables in the pods' network namespace but without requiring the pod to have the CAP_NET_ADMIN capability. Istio requires traffic redirection to capture network data properly in both the istio-cni and init container modes. Istio needs this to happen in the setup phase of the Kubernetes pod's lifecycle so that it's ready when the workloads start.

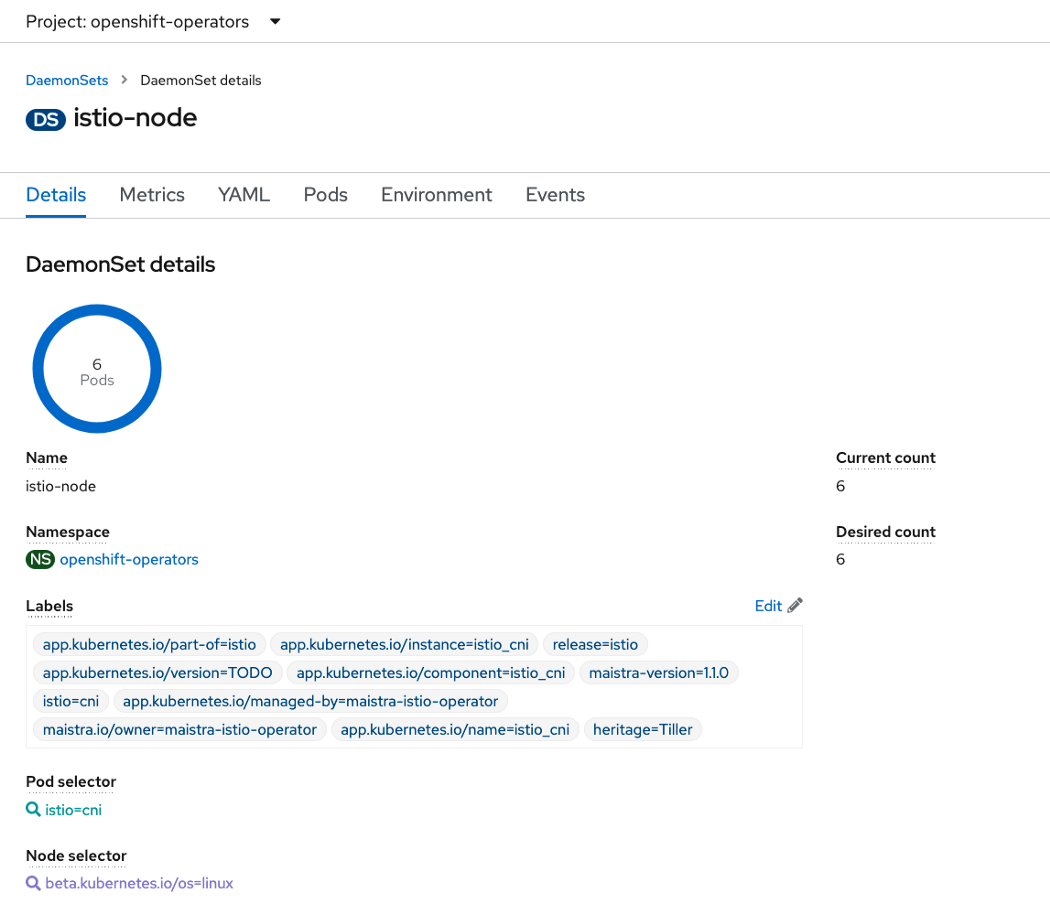

CNI plugins run after pod sandboxes are created, and they typically run as binaries on the host system or through a separate process from a given workload (see the Istio CNI DaemonSet in the figure above). The latter allows segregation of this responsibility from a workload into a separate dedicated process. This means the pod no longer needs additional capabilities for Istio to function, which reduces the attack surface.
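
As a rough illustration of what enabling the plugin looks like at install time, the sketch below builds an IstioOperator overlay that turns on the CNI component. It assumes the upstream `components.cni.enabled` setting from the Istio installation options; the function name and the resource name `istio-with-cni` are hypothetical, and platform-specific settings (such as CNI binary and configuration directories) are deliberately left out. Check the documentation for your Istio release before relying on it.

```python
import json
from typing import Dict


def istio_operator_with_cni(name: str = "istio-with-cni") -> Dict:
    """Build a minimal IstioOperator overlay with the CNI component enabled.

    The resource name is a placeholder chosen for this example.
    """
    return {
        "apiVersion": "install.istio.io/v1alpha1",
        "kind": "IstioOperator",
        "metadata": {"name": name},
        "spec": {
            "components": {
                # Deploys the Istio CNI node agent instead of relying on
                # the istio-init container in every pod.
                "cni": {"enabled": True},
            }
        },
    }


if __name__ == "__main__":
    # JSON is valid YAML, so the output can be saved to a file and
    # reviewed or passed to your installation tooling.
    print(json.dumps(istio_operator_with_cni(), indent=2))
```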

Use istio-cni with Multus CNI

Istio can be deployed so that service mesh becomes a multitenant platform capability. The mesh can then serve multiple tenant namespaces in a controlled manner while still allowing it to be enabled or disabled at the pod level. As a platform capability, it is implemented and ready to use across the cluster, and it is maintained as part of the platform's lifecycle management. Because the plugin runs as a DaemonSet, every node in the cluster can host tenant workloads with seamless service mesh availability.

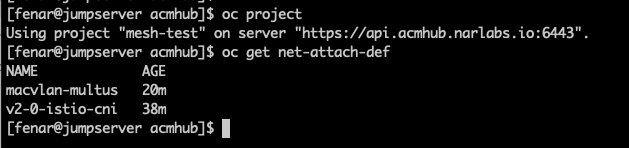

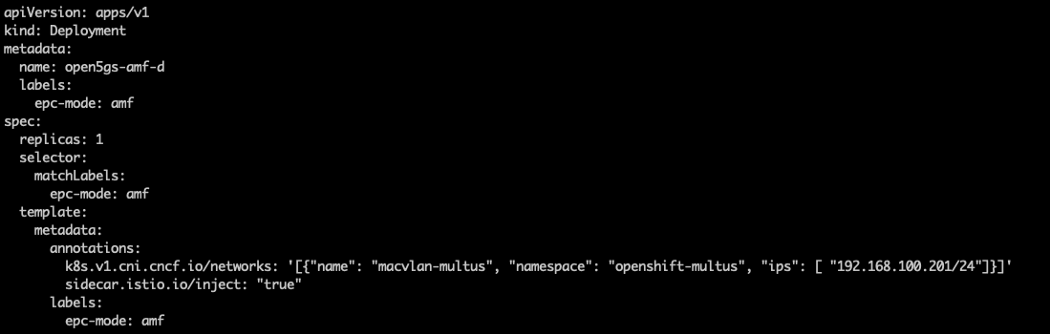

When using istio-cni in concert with Multus CNI (a CNI meta plugin used for attaching multiple networks to pods), istio-cni is configured to run using a Network Attachment Definition (net-attach-def), a standard for multiple network attachments for pods.
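
A minimal sketch of the two pieces involved follows: a NetworkAttachmentDefinition in the tenant namespace and the Multus networks annotation on the pod. The attachment name `istio-cni` and both helper functions are assumptions made for this example; some distributions use a different (for instance, versioned) name, and depending on the setup the sidecar injector may add the annotation for you.

```python
from typing import Dict

# Standard Multus annotation listing the extra networks a pod attaches to.
NETWORKS_ANNOTATION = "k8s.v1.cni.cncf.io/networks"


def istio_cni_net_attach_def(namespace: str, name: str = "istio-cni") -> Dict:
    """Build a NetworkAttachmentDefinition for one tenant namespace.

    The default name is an assumption; match it to your installation.
    """
    return {
        "apiVersion": "k8s.cni.cncf.io/v1",
        "kind": "NetworkAttachmentDefinition",
        "metadata": {"name": name, "namespace": namespace},
    }


def attach_network(pod: Dict, network: str = "istio-cni") -> Dict:
    """Add the network to the pod's Multus annotation, keeping existing ones."""
    annotations = pod.setdefault("metadata", {}).setdefault("annotations", {})
    current = annotations.get(NETWORKS_ANNOTATION, "")
    networks = [item.strip() for item in current.split(",") if item.strip()]
    if network not in networks:
        networks.append(network)
    annotations[NETWORKS_ANNOTATION] = ",".join(networks)
    return pod


if __name__ == "__main__":
    print(istio_cni_net_attach_def("tenant-a"))
    print(attach_network({"metadata": {"name": "demo"}}))
```

Because the definition is namespaced, creating or deleting it is one way to express that a tenant namespace is, or is not, wired up for the mesh.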

Istio functionality can be enrolled and enabled for each tenant workload namespace. This means a service mesh can be added or removed at the tenant namespace level. The functionality can also be used by pods marked with the sidecar.istio.io/inject: "true" label, meaning a service mesh can also be enabled or disabled at the tenant workload level, in a pod deployment manifest.

Despite the tenant namespace being enrolled in a service mesh, some of the workloads within a particular namespace may not want to be enrolled, for example, to avoid the Istio sidecar latency tax. To address this, Istio implements a pod deployment label selector to increase control.
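
The interplay between namespace enrollment and the pod label can be modeled as a small decision function. This sketch follows the upstream Istio injection rules as we understand them (a "disabled" setting at either level wins, then an "enabled" setting at either level, then the installation default); it assumes the upstream `istio-injection` namespace label, and `should_inject` and `enable_by_default` are hypothetical names. Distributions may apply different rules.

```python
from typing import Mapping

NAMESPACE_LABEL = "istio-injection"        # upstream namespace-level switch
POD_LABEL = "sidecar.istio.io/inject"      # pod-level switch from the article


def should_inject(
    namespace_labels: Mapping[str, str],
    pod_labels: Mapping[str, str],
    enable_by_default: bool = False,
) -> bool:
    """Decide whether a pod gets the Istio sidecar (simplified model)."""
    namespace_setting = namespace_labels.get(NAMESPACE_LABEL)
    pod_setting = pod_labels.get(POD_LABEL)

    # An explicit opt-out at either level wins.
    if namespace_setting == "disabled" or pod_setting == "false":
        return False
    # Otherwise an explicit opt-in at either level enables injection.
    if namespace_setting == "enabled" or pod_setting == "true":
        return True
    # Nothing set: fall back to the installation-wide default.
    return enable_by_default


if __name__ == "__main__":
    enrolled = {NAMESPACE_LABEL: "enabled"}
    print(should_inject(enrolled, {}))                    # pod follows namespace
    print(should_inject(enrolled, {POD_LABEL: "false"}))  # latency-sensitive pod opts out
```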

Pros and cons of istio-init and istio-cni

The istio-init and istio-cni methods each have advantages and disadvantages.

Security risk:

- istio-init: high

- istio-cni: low

An istio-init container requires the CAP_NET_ADMIN and CAP_NET_RAW capabilities to alter iptables rules in its pod network namespace. These capabilities raise significant concerns about container escape exposures. Conversely, istio-cni performs iptables rule alterations through DaemonSets deployed and maintained by cluster administrators. For details on the risk of using privileged containers, see NIST Special Publication 800-190, Application Container Security Guide (especially section 3.4.3).

Lifecycle management (LCM) difficulty:

- istio-init: high

- istio-cni: low

Upgrading the Kubernetes platform separately from add-on capabilities (such as Istio) can be problematic. Building a homogeneous application platform with capabilities embedded inside is often what an enterprise needs for better LCM and support. Many Linux distributions and Kubernetes software-as-a-service (SaaS) providers (such as AWS, Azure, Google Cloud Platform, and others) are moving to the CNI approach.

Extensibility:

- istio-init: low

- istio-cni: high

CNI is a vendor-neutral approach to implementing and maintaining networking for container orchestration engines (such as Kubernetes). Therefore, it offers workload portability across different Linux distributions and Kubernetes SaaS platforms. Additionally, the ecosystem around the service mesh, including tracing (Zipkin, Jaeger), log querying (Elasticsearch), and visualization (Kiali), must be integrated into the mesh, and administrators need to do that at the platform level.

Complexity:

- istio-init: medium

- istio-cni: medium

The CNI method looks more complex at first because it has to provide common, consistent mechanisms. However, using the init container methodology for setting network rules creates irregularity and inconsistency across different distros and SaaS platforms.

Multivendor potential:

- istio-init: low

- istio-cni: high

Many Linux distributions and SaaS platforms are moving towards the CNI method to offer multiple network interfaces (such as Multus) for tenant workloads, and using istio-cni brings consistency for the software stack.

Recommendation

Service mesh is a rapidly evolving area that has not addressed networking well because it is highly environment-specific and often requires manual intervention to configure. We believe that many mesh deployment mechanisms and installers will try to solve these problems by making the network layer pluggable and consistent. To avoid duplication, we believe it's prudent to define a common interface between existing network plugins and the service mesh, which is CNI.

For Istio mesh deployments, we recommend the istio-cni approach, that is, the Istio CNI plugin. This helps you implement service mesh in the most abstracted way possible and ensure that control plane components use the fewest privileges necessary.

This article is adapted from the authors' Medium post "What a mesh" and is published with permission.

About the authors

Doug Smith works on the network team for Red Hat OpenShift. Smith came to OpenShift engineering after focusing on network function virtualization and container technologies in Red Hat's Office of the CTO.

Smith integrates new networking technologies with container systems like Kubernetes and OpenShift. He is a member of the Network Plumbing Working Group and a contributor to OpenShift, Multus, and Whereabouts. Smith's background is in telephony and containerizing open source software solutions to replace proprietary hardware tandem switches using Asterisk, Kamailio, and Homer.

Fatih, known as "The Cloudified Turk," is a seasoned Linux, OpenStack, and Kubernetes specialist with significant contributions to the telecommunications, media, and entertainment (TME) sectors over multiple geos with many service providers.

Before joining Red Hat, he held noteworthy positions at Google, Verizon Wireless, Canonical Ubuntu, and Ericsson, honing his expertise in TME-centric solutions across various business and technology challenges.

With a robust educational background, holding an MSc in Information Technology and a BSc in Electronics Engineering, Fatih excels in creating synergies with major hyperscaler and cloud providers to develop industry-leading business solutions.

Fatih's thought leadership is evident through his widely appreciated technology articles (https://fnar.medium.com/) on Medium, where he consistently collaborates with subject matter experts and tech-enthusiasts globally.
