I am writing this in late January 2023. Unless you have been living in a cave, isolated from the world, and without access to the news, you've probably heard many things (good and bad) about ChatGPT and how it is:

- Already changing how people study, to the point where some universities are banning it to prevent cheating in written tests and essays.

- Showing great potential to affect how people create, write, and plan anything from marketing campaigns to fictional stories and practically anything else that people usually write about.

- Causing concerns for people like, "hey, will this AI thing take my job?"

This will not be the last time you witness discussions about AI and ChatGPT, because it is really impressive and disruptive.

What can you do with ChatGPT?

You can type questions into ChatGPT and ask it to give you a definition and even respond in the manner of a specific character (for example, Dracula) or like a specialist in a particular subject. You can continue the dialogue with additional information, like "answer my question again, considering that I only want options that…" (you can be really creative here).

So, it understands everyday typed language, can interpret your text, and adjusts to the parameters that you define for the context or type of answer you want. Its main current limitation is that its knowledge is based on the information that was available to the project during its training, which had a cut-off date of 2021.

Because it does not have access to current information, it is a totally different beast compared to something like Google search.

How good is ChatGPT in general?

I have to admit I was positively impressed by how well it (apparently) comprehends my questions and comments. Usually the answers make sense, but that doesn't necessarily mean they are correct. In many cases they are not—or at least they are not complete.

But if you use discernment and evaluate what you receive from ChatGPT, on many occasions the information is useful, at least up to a certain point and assuming you don't simply trust what the AI tells you… (and you will not, right?).

Can it do programming? Can it develop Ansible playbooks?

To simplify, let me just say that it can generate programs (and scripts and Ansible playbooks and Ansible roles). But how useful or ready to use are they? That will become clear with some examples.

I started by giving it a block of code and asking, "Considering this example variable, write me an Ansible playbook that will convert items of this list, replacing single quotes with double quotes. Use a Jinja filter for this, without a loop":

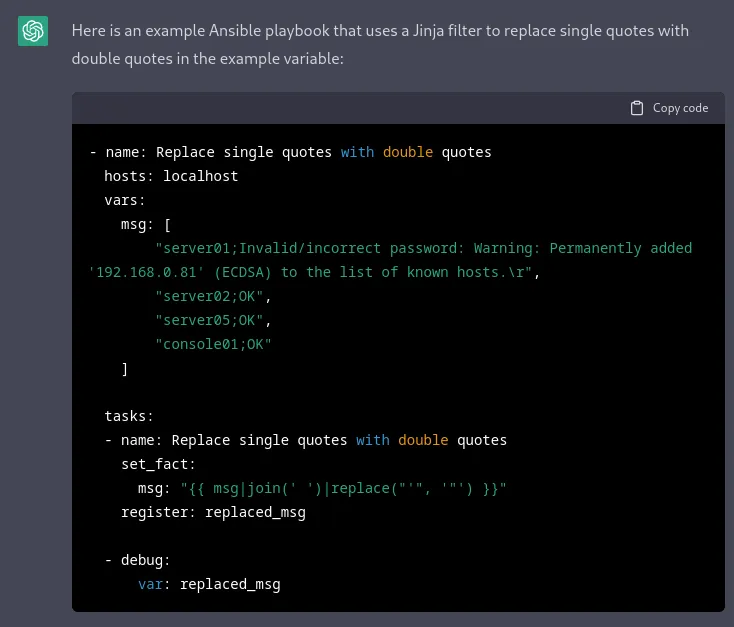

The first response I got was:

Which looks OK, and it even gave this explanation about the code:

"This playbook uses the set_fact module to create a new variable replaced_msg which is the original msg variable with all single quotes replaced with double quotes. The join filter is used to join all items of the list into a single string, and the replace filter is used to replace all single quotes with double quotes. The final result is then saved in the replaced_msg variable and displayed using the debug module."

The problem with this code is that it is… wrong.

- Initially, it would not even run. The double quotes needed to be escaped, which I added to the code:

    tasks:
      - name: Replace single quotes with double quotes
        set_fact:
          msg: "{{ msg|join(' ')|replace(\"'\", '\"') }}"
        register: replaced_msg

      - debug:
          var: replaced_msg

- Then I was able to run it, but the result is not what I wanted:

    TASK [debug] ***********************************************************************************************************
    ok: [localhost] => {
        "replaced_msg": {
            "ansible_facts": {
                "msg": "server01;Invalid/incorrect password: Warning: Permanently added \"192.168.0.81\" (ECDSA) to the list of known hosts.\r server02;OK server05;OK console01;OK"
            },
            "changed": false,
            "failed": false
        }
    }

The output was concatenated to one big string, but I wanted it in a list, like in the original variable.

Asking again

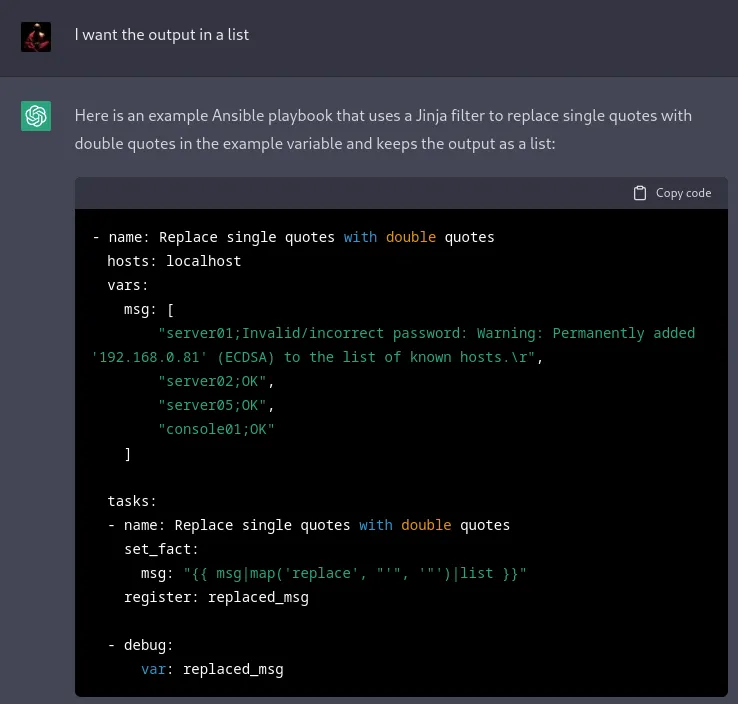

So I added more context to my request, asking it to provide the output in a list:

Again, it did not escape the double quotes, and I had to fix that for it to run:

    tasks:
      - name: Replace single quotes with double quotes
        set_fact:
          msg: "{{ msg|map('replace', \"'\", '\"')|list }}"
        register: replaced_msg

      - debug:
          var: replaced_msg

Now my output was generated the way I wanted: as a list and with the single quotes replaced. But the logic is kind of weird. I would have just set the new variable directly instead of setting a variable and registering the result. And I would have eventually remembered the map filter, which is better suited to operating on a list.
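The simpler logic described above (setting the new variable directly, with no register) could look something like the following. This is a minimal sketch, not something ChatGPT produced: the sample msg values and the fixed_msg variable name are made up for illustration, and the play targets localhost only so that it is self-contained.

```yaml
---
# Sketch only: the sample data and the fixed_msg name are hypothetical.
- name: Replace single quotes with double quotes in a list
  hosts: localhost
  gather_facts: false
  vars:
    # Hypothetical stand-in for the original variable (a list of strings)
    msg:
      - "server01;Warning: added '192.168.0.81' to the list of known hosts."
      - "server02;OK"
  tasks:
    - name: Build a new list with the quotes replaced
      set_fact:
        # map applies replace to each item; list turns the result back into a list
        fixed_msg: "{{ msg | map('replace', \"'\", '\"') | list }}"

    - name: Show the converted list
      debug:
        var: fixed_msg
```

Because fixed_msg is set directly, the debug task prints the list itself rather than the ansible_facts wrapper that comes with a registered result.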

Getting the example from ChatGPT was somewhat useful, even though it was not perfect.

Other limitations

I have not even talked about some of the other limitations, like:

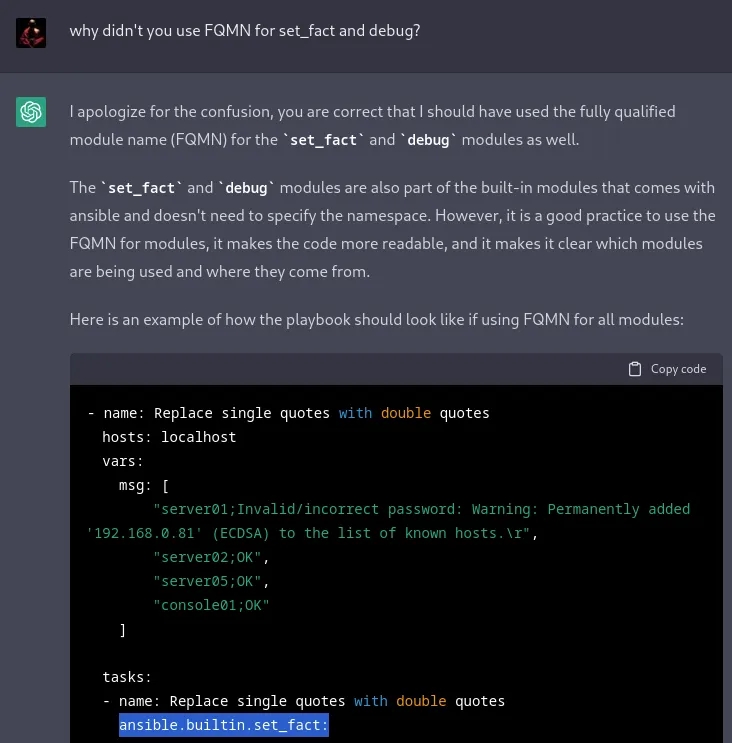

- The code should be using fully qualified module names (FQMN), which the Ansible documentation refers to as fully qualified collection names (FQCN).

- All tasks should be named.
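Applied to the two tasks from the playbook above, those two points might look like the fragment below. Treat it as a sketch rather than the output ChatGPT returned: it assumes the modules come from the ansible.builtin collection (where set_fact and debug live), the second task name is invented, and it stores the result directly in replaced_msg instead of registering it.

```yaml
tasks:
  - name: Replace single quotes with double quotes
    # Fully qualified name instead of the short "set_fact"
    ansible.builtin.set_fact:
      replaced_msg: "{{ msg | map('replace', \"'\", '\"') | list }}"

  # The original debug task had no name; this one is hypothetical
  - name: Display the converted list
    ansible.builtin.debug:
      var: replaced_msg
```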

So as a very stubborn human being, I went back to the same dialogue with ChatGPT and asked, "why didn't you use FQMN for set_fact and debug?" I got a polite apology, an explanation, and a new example:

And then I asked, "did you know that best practices also suggest that every task should be named?" Again, ChatGPT apologized and tried again:

I omitted the full output because I just wanted to show that ChatGPT was very humble and responded elegantly to my comments.

As you can see, it got better with every iteration. But I gave up trying to instruct it to put backslashes before the double quotes that needed escaping.

At this point, I just needed to remember the Jinja filter to solve my problem, not write the perfect instruction to make ChatGPT write the perfect playbook.
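On the escaping problem specifically, one way to sidestep the backslashes altogether is a YAML folded block scalar (`>-`), inside which quotes need no escaping. This is an alternative technique, not what was used in the experiment above, and the fixed_msg name is again hypothetical:

```yaml
- name: Replace single quotes with double quotes
  ansible.builtin.set_fact:
    # ">-" starts a folded block scalar with the trailing newline stripped,
    # so the Jinja expression can use both quote styles unescaped
    fixed_msg: >-
      {{ msg | map('replace', "'", '"') | list }}
```

After YAML parsing, the value is the same string as the escaped double-quoted version, so the expression should be evaluated in the same way.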

Wrapping up

If you are trying to learn or if you have no clue about how to perform a certain programming task, ChatGPT can show you some examples that may or may not work the way you want. This can be useful in some situations, because if you search for examples in a search engine, you may find thousands of references that you need to evaluate, interpret, and test, versus having to do so for the single result ChatGPT provides. Reading manuals is always recommended, but sometimes you must read pages and pages until you find one applicable example.

ChatGPT is also useful if you just want a quick example to give you ideas or help you remember a module or function that you've already used before.

But I would not recommend that you take anything provided by the AI and use it without fully understanding, validating, and testing it, especially if you need to use it in a production environment. Well, this general advice applies to ANYTHING you find on the internet. I am just stating the obvious.

If you know what you want and ask the right questions, you can get something good from ChatGPT. But you must also strike a balance between "how much time do I want to spend writing instructions, evaluating, rewriting, rinse, repeat" vs. "I can write this perfectly in 15 minutes."

Without a doubt, this technology has a lot of potential. And the next version will include much more information that is much more recent. I just hope that the AI from the future doesn't think I was cruel to its grandson when it reads this article. After all, Skynet is still on my mind.

About the author

Roberto Nozaki (RHCSA/RHCE/RHCA) is an Automation Principal Consultant at Red Hat Canada where he specializes in IT automation with Ansible. He has experience in the financial, retail, and telecommunications sectors, having performed different roles in his career, from programming in mainframe environments to delivering IBM/Tivoli and Netcool products as a pre-sales and post-sales consultant.

Roberto has been a computer and software programming enthusiast for over 35 years. He is currently interested in hacking what he considers to be the ultimate hardware and software: our bodies and our minds.

Roberto lives in Toronto, and when he is not studying and working with Linux and Ansible, he likes to meditate, play the electric guitar, and research neuroscience, altered states of consciousness, biohacking, and spirituality.
