In 2006, we had a distributed systems problem at the University of Minnesota. At the time, it was common for each department to run its own "shadow IT" group. While this was usually okay for certain support tasks such as the help desk, it wasn't a great fit for larger IT roles such as managing servers. And it seemed every department wanted to run its own web server.


Some departments were so independent that they ran web servers on a desktop PC in an empty office or cubicle. One department had its web server tucked under a conference table. That meant the computer—and its website—was at risk of getting shut off if someone bumped the power switch during a meeting. And that happened.

That's when we realized the university needed a new solution, something we could support at central IT and other departments could use to host their websites. We needed an enterprise web-hosting service.

Designing a shared web-hosting service

Our main constraint in creating an enterprise-wide hosting service was cost. In higher education, we often face tight budgets, and creating a secure, shared web-hosting service that everyone could use was a big job. It was made more complex by the need to fund it through our existing "enterprise IT tax" model, the fee that departments paid to support central IT projects. If we could fund the web-hosting service through the IT tax, departments would be more likely to adopt it. After all, they were "already paying for it," so deans would be less likely to spend department money on IT teams running their own web servers.

We already ran Red Hat Enterprise Linux (RHEL) for central IT, so we built our new shared web hosting on it. We started with this outline:

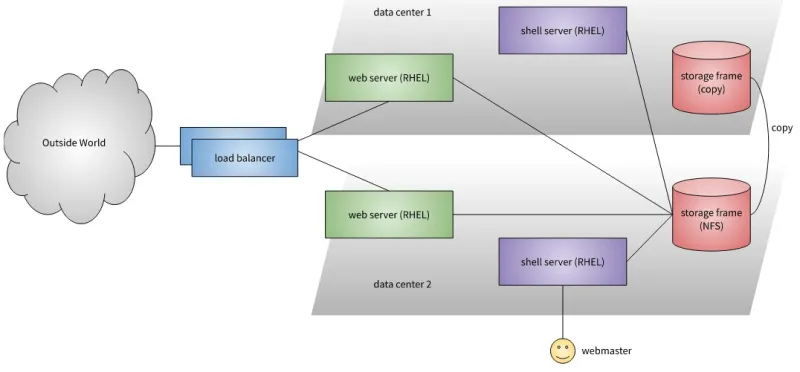

- Web servers: Two Linux web servers to run the HTTP and HTTPS websites using Apache.

  - We had multiple datacenters, so we put one Linux web server in each of two datacenters. Both accessed a common web document root through a read-only Network File System (NFS) mount from an enterprise storage system.

  - Mounting the document root read-only was a security feature, because most websites wouldn't need to modify their web pages. But the key phrase is "most websites." It turned out that a few big departments had websites that wrote updates to their web files, so we eventually had to mount the shared storage read-write.

- Shell servers: Two other Linux servers acted as shell servers where webmasters could update their web content. These were the only places webmasters could edit files, over SSH or SFTP, and the document root was mounted read-write on these servers.

With two web servers and two shell servers, we had redundancy on the Linux end. Our storage also had redundancy. The storage frame made a regular copy or mirror of the document root to a second storage frame located at the other datacenter. If one of our datacenters went offline, we could just update the Linux servers to point to the surviving storage frame.

This simple architecture really did the job! If one server went down, we had a spare to use right away. If an entire site went offline, we could quickly switch to the second site.


A few lessons learned

We recently retired the Linux web-hosting environment. After 16 years serving more than 650 websites and 200 content creators on 3.6TB of storage, we moved web hosting to a vendor. That Linux web-hosting service had a great run, and it taught us a few lessons about running a big Linux system.

1. Use standard POSIX/Unix file permissions to the fullest extent possible

Given a common shared filesystem, our filesystem permission structure was critical to providing a secure, least-privilege architecture. By default, the apache user had read-only permission on files and access-only (execute, or search) permission on directories, so it could open files at known paths but not list directory contents. When a site required it, we granted the apache user write access case by case through a segmented, group-based access configuration. We automated this configuration to enforce the permission structure and avoid "config drift."

Using a mature filesystem permission design kept the overall security architecture simpler and cleaner, with minimal additional operational overhead. A solid permission structure also limited the reach of any security incident.
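One way to automate that kind of enforcement is a small script that walks a site's document root and resets any permission bits that have drifted. The Python sketch below assumes a hypothetical scheme: apache reads default sites through the "other" bits (files `0o644`, directories `0o751`), and write-enabled sites rely on apache belonging to a per-site group (files `0o664`, directories `0o771`). The mode constants and the `enforce_permissions` function are illustrative only, not the modes or tooling the service actually used.

```python
"""Hypothetical permission-enforcement sketch for a shared web document root.

Assumed scheme (illustrative, not the university's actual modes):
- Default sites: apache reaches content through the "other" bits, so files
  are world-readable and directories are traverse-only for others.
- Write-enabled sites: apache is assumed to be in a per-site group, so files
  and directories are group-writable.
"""
import os
import stat

DEFAULT_FILE_MODE = 0o644   # owner rw, group r, other r
DEFAULT_DIR_MODE = 0o751    # owner rwx, group rx, other x (traverse, no listing)
WRITABLE_FILE_MODE = 0o664  # owner rw, group rw, other r
WRITABLE_DIR_MODE = 0o771   # owner rwx, group rwx, other x


def expected_mode(is_dir: bool, apache_writable: bool) -> int:
    """Return the permission bits a path should carry under the assumed scheme."""
    if apache_writable:
        return WRITABLE_DIR_MODE if is_dir else WRITABLE_FILE_MODE
    return DEFAULT_DIR_MODE if is_dir else DEFAULT_FILE_MODE


def enforce_permissions(site_root: str, apache_writable: bool = False) -> list[str]:
    """Reset drifted permission bits under site_root and return the changed paths."""
    changed = []
    for dirpath, _dirnames, filenames in os.walk(site_root):
        entries = [(dirpath, True)]
        entries += [(os.path.join(dirpath, name), False) for name in filenames]
        for path, is_dir in entries:
            st = os.lstat(path)
            if stat.S_ISLNK(st.st_mode):
                continue  # never chmod through a symlink
            want = expected_mode(is_dir, apache_writable)
            # S_IMODE includes setuid/setgid/sticky, so stray special bits get cleared too.
            if stat.S_IMODE(st.st_mode) != want:
                os.chmod(path, want)
                changed.append(path)
    return changed
```

Running a script like this from cron or a configuration-management tool against each site's root would catch drift shortly after it happens. A production version would also check ownership and group, and might set the setgid bit on directories so new files inherit the site group.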


2. Simplify the installation to match the usability of the target audience

Back in 2006, the vast majority of our target content creators ran Apache on desktop PCs and used Dreamweaver to design and maintain their sites. They only needed HTML and CSS to build their websites, and they published content from Dreamweaver over SFTP. That meant we only had to give them a hostname, an account, a password, and the DocumentRoot path of their VirtualHost. That's it. This kept the underlying filesystem layout very simple and easy to document.

This also meant we could strip the Apache installation of all nonessential modules. The Apache configuration was about as simple as it could get while still functioning as a secure web server. That paid off over the years whenever security issues came up: there just wasn't much to break.
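A short audit can help keep a stripped-down Apache build from quietly regaining modules. This Python sketch parses the module list printed by `httpd -M` (lines such as ` mime_module (shared)`) and reports anything outside an allowlist. The `ALLOWED_MODULES` set is a hypothetical example for a static HTML/CSS host serving HTTP and HTTPS, not the service's actual configuration, and the helper names are illustrative.

```python
import re
import subprocess

# Hypothetical allowlist for a static HTML/CSS host with HTTPS.
# This is an assumption for the sketch, not the service's real module list.
ALLOWED_MODULES = {
    "core_module", "so_module", "http_module", "mpm_event_module",
    "unixd_module", "systemd_module", "authz_core_module", "dir_module",
    "mime_module", "log_config_module", "ssl_module", "socache_shmcb_module",
}

# Matches lines such as " mime_module (shared)" in `httpd -M` output.
MODULE_LINE = re.compile(r"^\s*(\w+_module)\s+\((?:static|shared)\)\s*$")


def parse_loaded_modules(output: str) -> set[str]:
    """Extract module names from text printed by `httpd -M`."""
    modules = set()
    for line in output.splitlines():
        match = MODULE_LINE.match(line)
        if match:
            modules.add(match.group(1))
    return modules


def unexpected_modules(output: str, allowed: set[str] = ALLOWED_MODULES) -> set[str]:
    """Return loaded modules that are not on the allowlist."""
    return parse_loaded_modules(output) - allowed


def httpd_module_listing() -> str:
    """Run `httpd -M` (the RHEL binary name) and return its combined output."""
    result = subprocess.run(["httpd", "-M"], capture_output=True, text=True, check=True)
    # Lines that are not module entries (headers, warnings) are ignored by the parser.
    return result.stdout + result.stderr
```

On a server, `unexpected_modules(httpd_module_listing())` returns the loaded modules that are not on the list; an empty set means the build matches the allowlist.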

3. Lack of service updates led to technical debt

The service ran for over 16 years with no fundamental architecture changes. We made a few changes along the way, including lifecycle-based changes as the initial bare-metal deployment made way for virtual servers. The back-end storage frames also went through several lifecycle changes.

However, the service's use-case design never changed. Over such a long time, simple web hosting outlived the use case it was designed for. The simplicity of the architecture gave our Linux-based web hosting a long life with minimal operational cost, but without feature updates to meet the growing needs of content management, the service became the place where all the "old" websites lived. We didn't add new features to enhance the service, and that complacency led to fewer new sites adopting it.

Conclusion

While we eventually moved web hosting to an outside service, we also recognize how long this service lasted. Running web servers on Linux made it easy to support many users and websites with very little work from systems administrators.

If you're looking to set up a similar service in your own organization, use these takeaways to make your system powerful, flexible, and easy to use.

About the authors

Jim Hall is an open source software advocate and developer, best known for usability testing in GNOME and as the founder and project coordinator of FreeDOS. At work, Jim is CEO of Hallmentum, an IT executive consulting company that provides hands-on IT leadership training, workshops, and coaching.

Dack Anderson has been with the University of Minnesota for close to 25 years. He started using Red Hat Linux in 1997.
