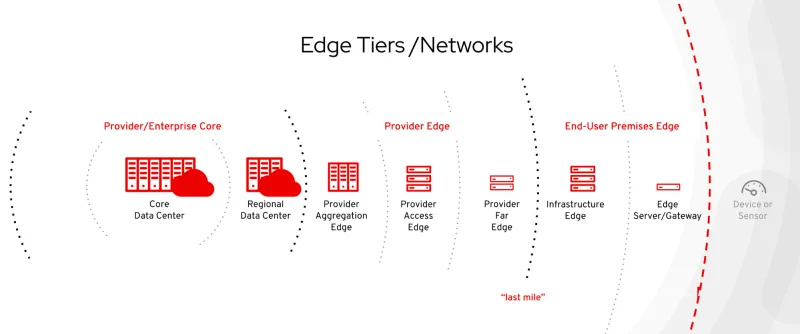

One characteristic of edge computing is that many smaller networks are used instead of one big network. Connectivity inside these smaller networks is mostly reliable, but connectivity between these network bubbles can be unstable. Some edge network designs are even built to cope with temporary network interruptions between these networks.

With edge networks, monitoring all networks from a single location becomes increasingly unrealistic. The most basic approach would be a central system that periodically connects to all remote IPs it knows about.

To debug issues, we need to:

- Verify whether selected devices could reach each other at a certain time.
- Verify what the network latency between devices was at a given time.
- Check whether certain services (HTTP, SSH or simple TCP services) were reachable.

Ideally, we would like to have this data available for all connections between the devices in the edge clouds that are relevant to our applications. This kind of monitoring should also be simple to set up and maintain, and have a low performance overhead.

These were essentially the requirements that Red Hat consultants raised while helping customers monitor edge networks. Technical Account Managers (TAMs) and other colleagues chimed in, and after just a weekend our colleague Marko Myllynen had written pmda-netcheck, which we introduce in this post.

Monitoring details

Edge networks consist of many individual devices communicating with each other. A single sensor host monitoring each of these networks would already help us, but instead we will set up all of our Red Hat Enterprise Linux (RHEL) systems in these networks as network sensors. The same RHEL systems that run our applications will additionally perform network checks: they ping IPs, record whether replies arrive, and measure the latency of those replies. Because every RHEL system becomes a network sensor, we can look at the data of each involved system and find out whether it was communicating properly with its peers at the time of an incident. These checks cause a tiny amount of additional traffic, which has been insignificant on the networks we have seen so far.
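To make "measure the latency of these replies" concrete, here is a minimal shell sketch that extracts the round-trip time from the output of the standard Linux ping command. It is only an illustration, not how pmda-netcheck works internally. The function name ping_rtt_ms is hypothetical, and printing -2 when no reply is found merely mirrors the value netcheck reports for unreachable hosts, as shown later in this post.

```sh
#!/bin/sh
# Hypothetical helper: read ping output on stdin and print the first
# round-trip time in milliseconds, or -2 if no reply line was found.
ping_rtt_ms() {
    awk '
        {
            for (i = 1; i <= NF; i++) {
                # Linux ping reply lines contain a field like "time=0.272"
                if ($i ~ /^time=/) {
                    sub(/^time=/, "", $i)
                    print $i
                    found = 1
                    exit
                }
            }
        }
        # No reply seen: print -2, mirroring netcheck for unreachable hosts
        END { if (!found) print "-2" }
    '
}
```

For example, `ping -c 1 -W 1 192.168.4.1 | ping_rtt_ms` prints the latency to that host, or -2 if it does not answer within one second.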

Software-wise, we rely on PCP: the Performance Co-Pilot. PCP is well-proven software and has long been part of RHEL. Designed for performance monitoring, PCP can measure and archive all kinds of system metrics: load, network bandwidth, memory utilization and many more. The relatively new pmda-netcheck, a PCP Performance Metrics Domain Agent (PMDA), allows us to monitor the network and use the PCP infrastructure to store the results.

Setting up a RHEL system as a network sensor

For our monitoring, we distinguish two kinds of systems:

- Sensor nodes: these run at least PCP 5.0.2, which is part of RHEL 8.2 beta and later. Each of these nodes can be configured to monitor connectivity to remote IP addresses and the latency to them.
- Other devices: all other devices reachable from the sensor nodes. Their reachability and latency can be monitored by the sensor nodes.

Of course, sensor nodes can also monitor each other's reachability. The only real requirements for a device to be monitored are that it is addressable via IP, that there is routing between it and the sensor node, and that it responds to the monitoring requests, for example ICMP echo (ping).

Outside the scope of Red Hat support, upstream PCP also runs on many BSD, Linux and Unix variants.

Let’s set up pmda-netcheck on a RHEL 8.2 beta (or later) system. First, install the necessary packages using yum.

```
# yum -y install pcp-zeroconf pcp-pmda-netcheck
```

At this point, pcp-zeroconf should have configured our system to monitor a basic set of system metrics. For more details, please refer to this article.

Next, we configure pmda-netcheck to monitor the reachability of various remote systems. ‘man pmdanetcheck’ gives you more details.

```
# cd /var/lib/pcp/pmdas/netcheck
# vi netcheck.conf
```

In this config file, we configure the network checks that this RHEL system should perform. These are the modifications I made for this example:

```
modules = ping,ping_latency,port_open
hosts = DGW,www.heise.de,fluxcoil.net,ftp.NetBSD.org,global.jaxa.jp
ports = 80
```

These modifications configure monitoring via ping, measurement of the ping latency, and a check whether TCP port 80 is reachable. Next, on SELinux-enabled systems (which I hope includes yours), we need to explicitly allow members of the group ‘pcp’ to open ICMP echo sockets; ‘man icmp’ has the details. Use the gid that ‘id pcp’ reports on your system instead of 991.

```
# id pcp
uid=994(pcp) gid=991(pcp) groups=991(pcp)
# sysctl net.ipv4.ping_group_range
net.ipv4.ping_group_range = 1 0
# echo 'net.ipv4.ping_group_range = 991 991' \
    >/etc/sysctl.d/10-ping-group.conf
# sysctl -p /etc/sysctl.d/10-ping-group.conf
net.ipv4.ping_group_range = 991 991
```
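The kernel only lets a process open ICMP echo sockets if one of its groups lies inside the inclusive range stored in net.ipv4.ping_group_range. The default "1 0" has a lower bound above its upper bound, so no group qualifies, which is why the setting above is needed. When verifying many sensor nodes, a small shell sketch of that check can help; the function name gid_in_ping_range is hypothetical.

```sh
#!/bin/sh
# Hypothetical helper: succeed if a numeric group id lies inside a
# ping_group_range value such as "1 0" or "991 991" (bounds are inclusive).
gid_in_ping_range() {
    local gid=$2
    # Split the range on whitespace into the positional parameters $1 and $2.
    # shellcheck disable=SC2086
    set -- $1
    [ "$#" -eq 2 ] || return 2
    # With the default "1 0", lower > upper, so no gid satisfies both tests.
    [ "$gid" -ge "$1" ] && [ "$gid" -le "$2" ]
}
```

For example, `gid_in_ping_range "$(cat /proc/sys/net/ipv4/ping_group_range)" "$(id -g pcp)" && echo ok` prints ok once the new range is active.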

We can now install the PMDA and verify access to the metrics:

```
# cd /var/lib/pcp/pmdas/netcheck
# ./Install
[..]
Terminate PMDA if already installed ...
Updating the PMCD control file, and notifying PMCD ...
Check netcheck metrics have appeared ... 4 metrics and 10 values
#
```

If the Install script shows warnings, verify the ping group and SELinux-related settings from the previous step.

```
# pminfo netcheck
netcheck.port.time
netcheck.port.status
netcheck.ping.latency
netcheck.ping.status
# pmrep netcheck.ping.latency
  n.p.latency  n.p.latency  n.p.latency  n.p.latency  n.p.latency
  www.heise.d  fluxcoil.ne  ftp.NetBSD.  global.jaxa  192.168.4.1
     millisec     millisec     millisec     millisec     millisec
        4.102        0.056      151.546      224.621        0.272
        4.102        0.056      151.546      224.621        0.272
        4.102        0.056      151.546      224.621        0.272
        4.102        0.056      151.546      224.621        0.272
[..]
```

Here, pminfo has shown us the available metrics, and with pmrep we look at the latency metric. The first four columns refer to the configured DNS names, and the last column is the IP of our default gateway (the DGW entry in netcheck.conf). All of them were reachable during this time frame; for an unreachable host we would see -2 here. The metric netcheck.ping.status also reports whether a host is reachable or unreachable.
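Because an unreachable host shows up as -2 in the latency columns, spotting outages in pmrep output is easy to script. The following shell/awk sketch is illustrative only: the function name flag_unreachable is hypothetical, and it assumes the column layout shown above (an optional HH:MM:SS timestamp, then one value per host), with the host names passed in the same order as the pmrep columns.

```sh
#!/bin/sh
# Hypothetical helper: read netcheck.ping.latency rows from pmrep on stdin
# and print one line per -2 value. Arguments: host names in column order.
flag_unreachable() {
    awk -v hosts="$*" '
        BEGIN { n = split(hosts, name, " ") }
        {
            ts = ""; first = 1
            # Archive replays (pmrep -p) start each row with a timestamp.
            if ($1 ~ /^[0-9][0-9]:[0-9][0-9]:[0-9][0-9]$/) { ts = $1; first = 2 }
            # Skip empty, header and unit lines: every field must be numeric.
            if (first > NF) next
            for (i = first; i <= NF; i++) if ($i !~ /^-?[0-9]+(\.[0-9]+)?$/) next
            for (i = first; i <= NF; i++) {
                h = i - first + 1
                if (h <= n && $i + 0 == -2)
                    printf "%s %s unreachable\n", (ts != "" ? ts : "line " NR), name[h]
            }
        }
    '
}
```

For example, `pmrep -s 10 netcheck.ping.latency | flag_unreachable www.heise.de fluxcoil.net ftp.NetBSD.org global.jaxa.jp 192.168.4.1` stays silent as long as every host answers.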

So far, we have verified that our network monitoring metrics are available, using pminfo and pmrep, which communicate with the local pmcd daemon. To be prepared for requests like “yesterday at 16:35, was the network connection to fluxcoil.net OK?”, we need to configure pmlogger to archive the metrics for us.

Since we installed pcp-zeroconf, our system is already running pmlogger and archiving metrics. The directory /var/log/pcp/pmlogger/$(hostname)/ contains the archive files with our past metrics. To check which metrics are recorded in the latest archive file:

```
# pminfo -a $(ls -r /var/log/pcp/pmlogger/$(hostname)/*0 | head -n 1)
proc.id.container
proc.id.uid
proc.id.euid
proc.id.suid
[..]
```

The default configuration archives more than 1200 metrics, but not our netcheck metrics. Let’s change that! We open the config file and go to its end:

```
# vi /var/lib/pcp/config/pmlogger/config.default
[..]
# It is safe to make additions from here on ...
#

[access]
disallow .* : all;
disallow :* : all;
allow local:* : enquire;
```

Here, we insert a new section that tells pmlogger to archive the netcheck metrics. The modified part looks like this:

```
# It is safe to make additions from here on ...
#

log advisory on default {
    netcheck
}

[access]
disallow .* : all;
disallow :* : all;
allow local:* : enquire;
```
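On a larger fleet of sensor nodes, the same edit can be applied without an editor. The following shell sketch is one possible way, not a PCP-provided tool: the function name add_netcheck_logging is hypothetical, it assumes the file contains an [access] section like the one above and inserts the stanza just before it, and it leaves the file untouched if a line consisting only of netcheck is already present.

```sh
#!/bin/sh
# Hypothetical helper: add "log advisory on default { netcheck }" to a
# pmlogger config file, right before its first [access] section.
add_netcheck_logging() {
    local cfg=$1 tmp rc
    # Idempotent: skip files that already list netcheck on a line of its own.
    if grep -q '^[[:space:]]*netcheck[[:space:]]*$' "$cfg"; then
        return 0
    fi
    tmp=$(mktemp) || return 1
    awk '
        /^\[access\]/ && !done {
            print "log advisory on default {"
            print "    netcheck"
            print "}"
            print ""
            done = 1
        }
        { print }
        # No [access] section found: append the stanza at the end instead.
        END {
            if (!done) {
                print ""
                print "log advisory on default {"
                print "    netcheck"
                print "}"
            }
        }
    ' "$cfg" > "$tmp" && cat "$tmp" > "$cfg"   # cat keeps owner and mode of cfg
    rc=$?
    rm -f "$tmp"
    return "$rc"
}
```

Run as root, `add_netcheck_logging /var/lib/pcp/config/pmlogger/config.default` makes the change; pmlogger still needs the restart described next.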

Now we can restart pmlogger and verify that our metrics appear in the archive files.

```
# systemctl restart pmlogger
# pminfo -a $(ls -r /var/log/pcp/pmlogger/$(hostname)/*0 | head -n 1) netcheck
netcheck.port.status
netcheck.port.time
netcheck.ping.latency
netcheck.ping.status
#
```
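The pminfo -a commands above locate the newest archive by listing the directory. When scripting such checks across many sensor nodes, a slightly more defensive helper can be useful. This shell sketch uses the hypothetical name latest_archive and assumes that archive data volumes end in .0 and that their names start with the YYYYMMDD date, as 20200301.0 does, so that a byte-wise sort puts the newest one last.

```sh
#!/bin/sh
# Hypothetical helper: print the newest PCP archive in a directory as a
# base name (".0" volume suffix stripped), or fail if there is none.
latest_archive() {
    local dir=$1 newest
    newest=$(
        for f in "$dir"/[0-9]*.0; do
            # An unmatched glob stays literal; -e filters that case out.
            [ -e "$f" ] && printf '%s\n' "${f##*/}"
        done | LC_ALL=C sort | tail -n 1
    )
    if [ -z "$newest" ]; then
        echo "no archive found in $dir" >&2
        return 1
    fi
    # PCP tools accept the archive base name without the volume suffix.
    printf '%s\n' "$dir/${newest%.0}"
}
```

For example: `pminfo -a "$(latest_archive /var/log/pcp/pmlogger/$(hostname))" netcheck`.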

Congratulations! We have turned this system into a network monitoring node. From now on, it watches the reachability of several remote IPs via ping, as well as the latency to them. Using the archive files written by pmlogger, we can look up our metrics for the whole recorded time span. By extending this monitoring to more systems, you get an even better overview of the network.

How can we access the recorded data? Our archive files are in /var/log/pcp/pmlogger/$(hostname). Let’s look at one of them: which netcheck metrics were recorded, and over which time span?

```
$ pmlogsummary -Il 20200301.0 netcheck.ping.latency
Log Label (Log Format Version 2)
Performance metrics from host rhel8u2a
  commencing Sun Mar 1 00:10:14.853 2020
  ending     Mon Mar 2 00:10:09.300 2020
netcheck.ping.latency ["www.heise.de"]      4.143 08:34:39.663 millisec
netcheck.ping.latency ["fluxcoil.net"]      0.483 14:07:39.696 millisec
netcheck.ping.latency ["ftp.NetBSD.org"]  151.553 13:14:39.719 millisec
netcheck.ping.latency ["global.jaxa.jp"]  238.429 04:10:40.454 millisec
netcheck.ping.latency ["192.168.4.1"]       0.483 14:07:39.696 millisec
```

Now, if we are investigating an issue from yesterday starting at 15:00 and want to see the latency metrics for the next 10 minutes in two-minute steps, we could use this:

```
$ pmrep -p -a 20200301.0 -S @15:00 -T 10m -t 2m netcheck.ping.latency
          n.p.latency  n.p.latency  n.p.latency  n.p.latency  n.p.latency
          www.heise.d  fluxcoil.ne  ftp.NetBSD.  global.jaxa  192.168.4.1
             millisec     millisec     millisec     millisec     millisec
15:00:00        3.897        0.474      151.712      224.678        0.239
15:02:00        3.947        0.445      151.738      224.662        0.185
15:04:00        3.847        0.822      151.384      224.635        0.195
15:06:00        3.836        0.490      151.375      224.610        0.212
15:08:00        3.821        0.570      155.640      224.679        0.220
15:10:00        3.900        0.783      151.571      224.856        0.231
```
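For requests like “yesterday at 16:35, was the network connection to fluxcoil.net OK?”, it helps to center the time window on the incident. Here is a small shell sketch, with the hypothetical function name incident_window, that turns an incident time and a margin in minutes into -S and -T options of the same form as in the command above, where -S @15:00 -T 10m produced samples from 15:00 to 15:10. It simply refuses windows that would start before midnight.

```sh
#!/bin/sh
# Hypothetical helper: print pmrep time-window options covering MARGIN
# minutes before and after an incident at HH:MM (same day only).
incident_window() {
    awk -v at="$1" -v margin="$2" 'BEGIN {
        split(at, t, ":")
        # awk reads "08" as decimal 8, so leading zeros are harmless here.
        start = t[1] * 60 + t[2] - margin
        if (start < 0) {
            print "window would start before midnight" | "cat 1>&2"
            exit 1
        }
        printf "-S @%02d:%02d -T %dm\n", int(start / 60), start % 60, 2 * margin
    }'
}
```

For example, `pmrep -p -a 20200301.0 $(incident_window 16:35 10) -t 2m netcheck.ping.latency` shows 16:25 to 16:45; the command substitution is left unquoted on purpose so the options split into separate words.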

By default, pmlogger is configured to archive metrics for about 24 hours. For network monitoring, you probably want to extend that time span in the file /etc/pcp/pmlogger/control.d/local, for example by changing -T24h10m to -T365d.

This increases the disk space required for the archive files. If you disable some of the more than 1200 metrics that are not important to you, the archive files will consume less space. As a start, you could disable archiving of all metrics and then enable, one by one, only the metrics you really need.
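Before extending the time span, you can roughly estimate the space needed from what has been recorded so far. This shell sketch, with the hypothetical function name projected_archive_bytes, averages the bytes per recorded day and scales that to the desired number of days. It assumes archive file names start with the YYYYMMDD date, like 20200301.0, and that future days look like past ones.

```sh
#!/bin/sh
# Hypothetical helper: estimate the bytes needed to keep DAYS days of
# archives, based on the average size per day already present in DIR.
projected_archive_bytes() {
    local dir=$1 days=$2 f
    for f in "$dir"/[0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9].*; do
        [ -f "$f" ] || continue
        # Emit "<file name> <size in bytes>" for each archive file.
        printf '%s %s\n' "${f##*/}" "$(wc -c < "$f")"
    done | awk -v days="$days" '
        { total += $2; seen[substr($1, 1, 8)] = 1 }   # group by YYYYMMDD
        END {
            n = 0
            for (d in seen) n++
            if (n == 0) { print "no archives found" | "cat 1>&2"; exit 1 }
            printf "%.0f\n", int(total / n) * days
        }'
}
```

For example, `projected_archive_bytes /var/log/pcp/pmlogger/$(hostname) 365` prints the estimated bytes for one year at the current recording rate.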

Summing up

As of RHEL 8.2 beta, pmda-netcheck already supports quite a few kinds of checks. PCP on the system can also supply us with other metrics: the load, memory usage, network details and much more. With the steps above, we can turn our farm of RHEL systems in the edge network into independent network monitoring nodes. In case of issues, this gives us the tools to find out who can (or could) communicate with whom, and it gives us hints about the latency. Starting with RHEL 8.2 beta, these metrics can also be visualized nicely with PCP, Redis, pmproxy and Grafana.

We have created a thread in the community section of the customer portal to gather comments, questions and additional information regarding this post. You can access the thread here.

About the author

Christian Horn is a Senior Technical Account Manager at Red Hat. After working with customers and partners at Red Hat Germany since 2011, he moved to Japan, focusing on mission-critical environments. Virtualization, debugging, performance monitoring and tuning are among the recurring topics of his daily work. He also enjoys diving into new technical topics and sharing his findings via documentation, presentations or articles.