In the previous post (vDPA Kernel Framework Part 1: vDPA Bus for Abstracting Hardware), we discussed the design and implementation of the kernel vDPA framework, with a brief introduction to the vDPA bus drivers. In this post we cover the technical details of the vDPA bus drivers and how they provide a unified interface for the vDPA drivers.

This post is intended for kernel developers, and for userspace developers working on VMs and containers, who want to understand how vDPA can serve as a backend for VMs or as a high-performance IO path for containers.

The post is composed of two sections: the first focuses on the vhost-vDPA bus driver and the second on the virtio-vDPA bus driver. In each section we explain the design of the bus driver and its DMA mapping design.

The vhost-vDPA bus driver

The design of the vhost-vDPA bus driver

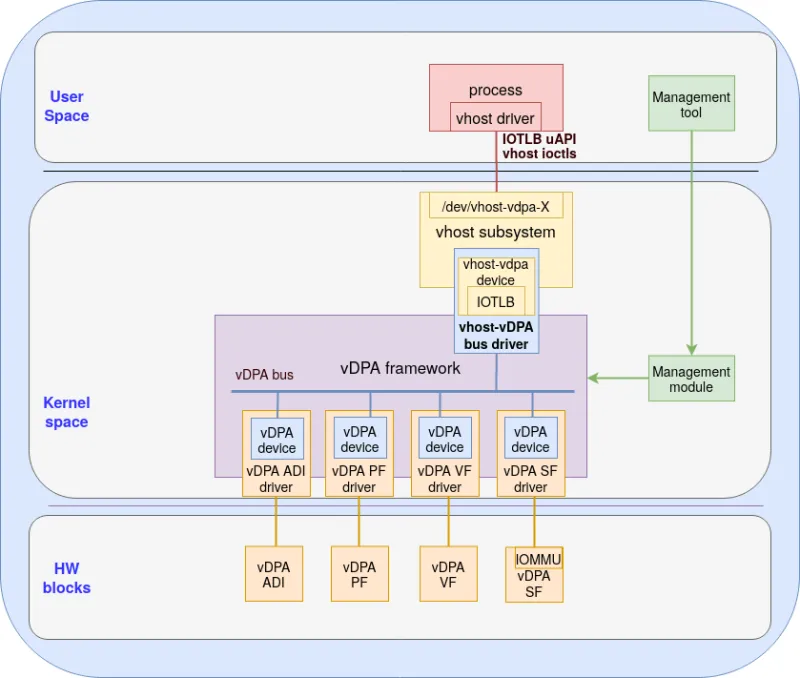

vhost is the in-kernel implementation of the virtio datapath. It has historically been used to emulate the datapath of virtio devices on the host side, and it exposes mature userspace APIs for setting up the kernel datapath through vhost devices (which are character devices). The vhost-vDPA bus driver implements a new vhost device type on top of the vDPA bus. By leveraging the vhost uAPIs (userspace APIs) and mediating between them and vDPA bus operations, the vhost-vDPA bus driver presents vhost devices to the userspace vhost driver. This is illustrated in the following figure:

Figure 1: vhost-vDPA bus driver

The key responsibility of the vhost-vDPA bus driver is to mediate between the vhost uAPIs and vDPA bus operations. From the point of view of the vhost subsystem, the vhost-vDPA bus driver provides another type of vhost device, which we refer to as a vhost-vDPA device: a vhost device with an IOTLB (IO translation lookaside buffer) that supports the vhost uAPIs. When a vDPA device is probed by the vhost-vDPA bus driver, a char device (/dev/vhost-vdpa-X) is created through which userspace drivers issue vhost uAPI requests.
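As a rough illustration of how userspace starts using such a char device, the following C sketch opens it and claims ownership with the existing VHOST_SET_OWNER ioctl from <linux/vhost.h>. The helper name vhost_vdpa_open_owned is hypothetical, and error handling is kept minimal.

```c
#include <errno.h>
#include <fcntl.h>
#include <sys/ioctl.h>
#include <unistd.h>
#include <linux/vhost.h>

/* Hypothetical helper: open a vhost-vDPA char device and make the calling
 * process its owner. Returns the fd on success, or -1 with errno set. */
int vhost_vdpa_open_owned(const char *path)
{
    int fd = open(path, O_RDWR);
    if (fd < 0)
        return -1;

    /* VHOST_SET_OWNER pairs the device with this process; only the owner
     * may later change the memory mapping. */
    if (ioctl(fd, VHOST_SET_OWNER) < 0) {
        int saved = errno;
        close(fd);
        errno = saved;
        return -1;
    }
    return fd;
}
```

A userspace vhost driver would call something like vhost_vdpa_open_owned("/dev/vhost-vdpa-0") once per device, before issuing any other vhost ioctl on it.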

The vhost-vDPA device described above reuses the existing vhost uAPIs for the following tasks:

-

Setting the device owner (ioctls): each vhost-vDPA device is paired with a process, which is the owner of this vhost-vDPA device. The vhost-vDPA device does not allow any process other than the owner to change the memory mapping.

-

Virtqueue configuration (ioctls): setting up the base address and queue size, and setting or getting queue indices. The vhost-vDPA bus driver translates those commands to vDPA bus operations and sends them to vDPA device drivers via the vDPA bus.

-

Setting up eventfds for virtqueue interrupts and kicks (ioctls): vhost uses eventfd to relay events such as kicks and virtqueue interrupts between userspace and the device. The vhost-vDPA bus driver translates those commands to vDPA bus operations and sends them to the vDPA device driver via the vDPA bus.

-

Populating the device IOTLB: vhost IOTLB messages are written to (or read from) the vhost-vDPA char device. vhost-vDPA leverages the device IOTLB abstraction of vhost devices: the userspace vhost driver informs the device of the mappings between IOVA (IO Virtual Address) and VA (Virtual Address) using the following types of vhost IOTLB messages:

-

VHOST_IOTLB_UPDATE: establish a new IOVA->VA mapping in the vhost-vDPA device IOTLB.

-

VHOST_IOTLB_INVALIDATE: invalidate an existing IOVA->VA mapping in the vhost-vDPA device IOTLB.

-

VHOST_IOTLB_MISS: the vhost-vDPA device IOTLB may use this message to ask userspace for an IOVA->VA translation. This is reserved for future use, when vDPA devices support demand paging.
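A minimal sketch of the virtqueue and eventfd steps, assuming an fd that has already been opened and owned as described above, and ring addresses that are IOVAs already mapped through the device IOTLB. It uses the standard vhost ioctls and structs from <linux/vhost.h>; the helper name setup_vring is hypothetical, and feature negotiation is omitted.

```c
#include <errno.h>
#include <stdint.h>
#include <sys/eventfd.h>
#include <sys/ioctl.h>
#include <unistd.h>
#include <linux/vhost.h>

/* Hypothetical helper: configure virtqueue `index` and attach kick/call
 * eventfds. On success the eventfds are returned through kick_fd/call_fd. */
int setup_vring(int fd, unsigned int index, unsigned int num,
                uint64_t desc, uint64_t avail, uint64_t used,
                int *kick_fd, int *call_fd)
{
    struct vhost_vring_state state = { .index = index, .num = num };
    struct vhost_vring_addr addr = {
        .index = index,
        .desc_user_addr = desc,     /* IOVAs of the ring areas */
        .avail_user_addr = avail,
        .used_user_addr = used,
    };
    struct vhost_vring_file file = { .index = index };
    int kick = -1, call = -1, saved;

    if (ioctl(fd, VHOST_SET_VRING_NUM, &state) < 0)   /* queue size */
        return -1;
    state.num = 0;                                    /* start at index 0 */
    if (ioctl(fd, VHOST_SET_VRING_BASE, &state) < 0)
        return -1;
    if (ioctl(fd, VHOST_SET_VRING_ADDR, &addr) < 0)
        return -1;

    kick = eventfd(0, EFD_CLOEXEC);   /* userspace -> device notifications */
    call = eventfd(0, EFD_CLOEXEC);   /* device -> userspace interrupts */
    if (kick < 0 || call < 0)
        goto fail;

    file.fd = kick;
    if (ioctl(fd, VHOST_SET_VRING_KICK, &file) < 0)
        goto fail;
    file.fd = call;
    if (ioctl(fd, VHOST_SET_VRING_CALL, &file) < 0)
        goto fail;

    *kick_fd = kick;
    *call_fd = call;
    return 0;

fail:
    saved = errno;
    if (kick >= 0)
        close(kick);
    if (call >= 0)
        close(call);
    errno = saved;
    return -1;
}
```

Userspace then writes to kick_fd to notify the device and polls call_fd for virtqueue interrupts.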


The traditional vhost ioctls were designed specifically to set up a datapath for vhost devices. This is not sufficient for the vDPA devices abstracted by the vDPA bus, because they need ioctls for the control path in addition to the datapath ioctls. Thus additional ioctls are needed for configuring the control path.

The following extensions are required:

-

Getting the virtio device ID: This allows userspace to match the type of device driver to the vDPA device.

-

Setting and getting device status: This allows userspace to control the device status (e.g. device start and stop).

-

Setting and getting device config space: This allows userspace drivers to access the device specific configuration space.

-

Config interrupt support: This allows vDPA devices to emulate or relay config interrupts to userspace via eventfd.

-

Doorbell mapping: This allows userspace to map the doorbell of a specific virtqueue into its own address space. This eliminates the extra cost of relaying the doorbell via eventfd. In the case of a VM, it saves a vmexit.
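These extensions correspond to ioctls that vhost-vDPA adds to <linux/vhost.h> (VHOST_VDPA_GET_DEVICE_ID, VHOST_VDPA_GET_STATUS, VHOST_VDPA_SET_STATUS, VHOST_VDPA_GET_CONFIG, VHOST_VDPA_SET_CONFIG_CALL), plus mmap() on the char device for doorbells. The sketch below wraps them in hypothetical helpers and assumes reasonably recent kernel uAPI headers. The doorbell mapping follows our reading of the current kernel (one write-only, shared page per virtqueue, selected by the mmap offset), so treat it as an assumption to verify against your kernel version.

```c
#include <stdint.h>
#include <stdlib.h>
#include <string.h>
#include <sys/ioctl.h>
#include <sys/mman.h>
#include <unistd.h>
#include <linux/vhost.h>
#include <linux/virtio_config.h>

/* Virtio device ID of the vDPA device (e.g. 1 = net, 2 = block). */
int vdpa_get_device_id(int fd, uint32_t *id)
{
    return ioctl(fd, VHOST_VDPA_GET_DEVICE_ID, id);
}

/* Read-modify-write the virtio status byte, e.g. to add DRIVER_OK. */
int vdpa_add_status(int fd, uint8_t bits)
{
    uint8_t status;

    if (ioctl(fd, VHOST_VDPA_GET_STATUS, &status) < 0)
        return -1;
    status |= bits;
    return ioctl(fd, VHOST_VDPA_SET_STATUS, &status);
}

/* Copy len bytes of device-specific config space at offset off into out. */
int vdpa_read_config(int fd, uint32_t off, uint32_t len, void *out)
{
    struct vhost_vdpa_config *cfg = malloc(sizeof(*cfg) + len);
    int ret;

    if (!cfg)
        return -1;
    cfg->off = off;
    cfg->len = len;
    ret = ioctl(fd, VHOST_VDPA_GET_CONFIG, cfg);
    if (ret == 0)
        memcpy(out, cfg->buf, len);
    free(cfg);
    return ret;
}

/* Ask for config interrupts to be signalled on eventfd efd. */
int vdpa_set_config_call(int fd, int efd)
{
    return ioctl(fd, VHOST_VDPA_SET_CONFIG_CALL, &efd);
}

/* Map the doorbell of virtqueue `index` (assumed layout: one page per
 * queue, offset = index * page size, write-only, shared). */
void *vdpa_map_doorbell(int fd, unsigned int index)
{
    long page = sysconf(_SC_PAGESIZE);

    return mmap(NULL, (size_t)page, PROT_WRITE, MAP_SHARED, fd,
                (off_t)index * page);
}
```

For example, starting the device could be expressed as vdpa_add_status(fd, VIRTIO_CONFIG_S_DRIVER_OK) once the virtqueues are configured.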

With the above extensions, the vhost-vDPA bus driver provides a full abstraction of vDPA devices: userspace can control a vDPA device as if it were a vhost device. When the vhost-vDPA bus driver receives a userspace request, it performs one of two actions, depending on the type of request:

-

Translate the request and forward it through the vDPA bus.

-

Ask other kernel subsystems for help to complete the request.
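To make the two actions concrete, here is a heavily simplified userspace model of the mediation. The callback names set_status and set_vq_num echo members of the kernel's struct vdpa_config_ops, and the IOMMU is used as the example of "another subsystem", but every type and function here is a hypothetical stand-in, not kernel code.

```c
#include <stdint.h>

/* Hypothetical request kinds arriving from userspace. */
enum req_kind { REQ_SET_STATUS, REQ_SET_VQ_NUM, REQ_DMA_MAP };

struct request {
    enum req_kind kind;
    uint16_t vq;                 /* virtqueue index, if relevant */
    uint64_t a, b, c;            /* request-specific arguments */
};

/* Stand-in for vDPA bus operations (names echo struct vdpa_config_ops). */
struct mock_vdpa_bus_ops {
    void (*set_status)(void *vdpa, uint8_t status);
    void (*set_vq_num)(void *vdpa, uint16_t idx, uint32_t num);
};

/* Stand-in for another kernel subsystem, here the IOMMU API. */
struct mock_iommu_api {
    int (*map)(void *domain, uint64_t iova, uint64_t pa, uint64_t size);
};

int mediate(const struct mock_vdpa_bus_ops *bus, void *vdpa,
            const struct mock_iommu_api *iommu, void *domain,
            const struct request *r)
{
    switch (r->kind) {
    case REQ_SET_STATUS:     /* action 1: translate and forward over the vDPA bus */
        bus->set_status(vdpa, (uint8_t)r->a);
        return 0;
    case REQ_SET_VQ_NUM:
        bus->set_vq_num(vdpa, r->vq, (uint32_t)r->a);
        return 0;
    case REQ_DMA_MAP:        /* action 2: ask another subsystem for help */
        return iommu->map(domain, r->a, r->b, r->c);   /* iova, pa, size */
    }
    return -1;               /* unknown request */
}
```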

Memory (DMA) mapping with vhost-vDPA

The DMA abstraction of the vhost-vDPA device is provided through the userspace-visible IOTLB. The userspace driver needs to create or destroy IOVA->VA mappings for the vDPA device to work.

For different use cases, IOVA could be:

-

Guest Physical Address (GPA) when using vhost-vDPA as a backend for VM without vIOMMU.

-

Guest IO Virtual Address (GIOVA) when using vhost-vDPA as a backend for VM with vIOMMU.

-

Virtual Address (VA) when using vhost-vDPA for a userspace direct IO datapath.
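The choice of IOVA only changes what userspace puts in the iova field of a VHOST_IOTLB_UPDATE message; uaddr is always a VA in the calling process. The sketch below fills struct vhost_iotlb_msg from <linux/vhost.h> for the first and third cases. struct guest_ram_region and both helpers are hypothetical. With a vIOMMU, the IOVAs come from the guest's vIOMMU mappings and change at runtime, so that case is not shown.

```c
#include <stdint.h>
#include <string.h>
#include <linux/vhost.h>

/* Hypothetical description of one guest RAM region as seen by a VMM. */
struct guest_ram_region {
    uint64_t gpa;      /* guest physical address of the region */
    void *hva;         /* where the VMM mapped it in its own address space */
    uint64_t size;
};

/* VM without vIOMMU: IOVA = GPA, VA = the VMM's virtual address. */
void iotlb_from_guest_ram(struct vhost_iotlb_msg *m,
                          const struct guest_ram_region *r)
{
    memset(m, 0, sizeof(*m));
    m->type = VHOST_IOTLB_UPDATE;
    m->iova = r->gpa;
    m->uaddr = (uint64_t)(uintptr_t)r->hva;
    m->size = r->size;
    m->perm = VHOST_ACCESS_RW;
}

/* Userspace direct IO datapath: the process uses its own VA as the IOVA. */
void iotlb_identity(struct vhost_iotlb_msg *m, void *buf, uint64_t size)
{
    memset(m, 0, sizeof(*m));
    m->type = VHOST_IOTLB_UPDATE;
    m->iova = (uint64_t)(uintptr_t)buf;
    m->uaddr = m->iova;
    m->size = size;
    m->perm = VHOST_ACCESS_RW;
}
```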

The behavior of the vhost-vDPA bus driver depends on where the hardware performs DMA translation: in the platform IOMMU or in an on-chip IOMMU.

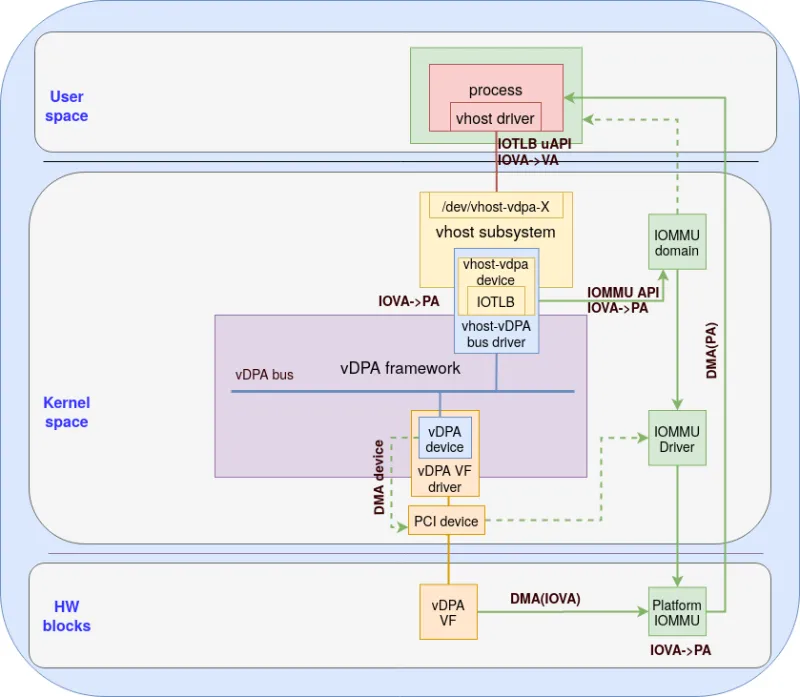

DMA translation on platform IOMMU

For devices that depend on platform IOMMU for DMA translation, the memory mapping is processed as seen in figure 2:

Figure 2: Memory mapping with platform IOMMU

The vhost-vDPA bus driver determines whether a device requires the platform IOMMU by checking whether device-specific DMA config ops are implemented: if they are not, the device depends on the platform IOMMU. In that case, during the probing of the vDPA device on the vDPA bus, the vhost-vDPA bus driver probes the platform IOMMU that the vDPA device (the vDPA VF in the figure above) is attached to.

The probing of the platform IOMMU is done through the DMA device (usually a PCI device, as illustrated in figure 2, where the vDPA device is a VF), which is specified when the vDPA device is created. The vhost-vDPA bus driver then allocates an IOMMU domain dedicated to the vhost-vDPA owner process, isolating its DMA from other processes. When the vhost-vDPA device is removed, the vhost-vDPA bus driver destroys the IOMMU domain. The vhost-vDPA device uses the IOMMU domain as a handle for requesting that mappings be set up or destroyed in the platform IOMMU hardware.
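The probe-time decision can be modeled as below. The check follows the description above (no device-specific DMA ops means the platform IOMMU is used). The structs are simplified, hypothetical stand-ins whose dma_map and set_map members are named after the DMA-related ops of the kernel's struct vdpa_config_ops, and calloc() merely represents allocating an IOMMU domain through the IOMMU API.

```c
#include <stdbool.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical, simplified stand-in for the DMA members of vdpa_config_ops. */
struct mock_vdpa_dma_ops {
    int (*dma_map)(void *vdpa, uint64_t iova, uint64_t size, uint64_t pa);
    int (*set_map)(void *vdpa, const void *iotlb);
};

struct mock_vhost_vdpa {
    const struct mock_vdpa_dma_ops *ops;
    void *domain;                  /* platform IOMMU domain, or NULL */
};

/* No device-specific DMA ops: the device relies on the platform IOMMU. */
bool uses_platform_iommu(const struct mock_vdpa_dma_ops *ops)
{
    return ops->dma_map == NULL && ops->set_map == NULL;
}

/* Probe: allocate a dedicated domain only when the platform IOMMU is used.
 * calloc() stands in for allocating an IOMMU domain via the IOMMU API. */
int mock_probe(struct mock_vhost_vdpa *v, const struct mock_vdpa_dma_ops *ops)
{
    v->ops = ops;
    v->domain = NULL;
    if (uses_platform_iommu(ops)) {
        v->domain = calloc(1, 64);
        if (v->domain == NULL)
            return -1;
    }
    return 0;
}

/* Remove: destroy the domain, if one was allocated. */
void mock_remove(struct mock_vhost_vdpa *v)
{
    free(v->domain);
    v->domain = NULL;
}
```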

Once the domain has been allocated during probing, the vhost-vDPA device is ready to receive DMA mapping requests from userspace through the vhost IOTLB uAPI. Each request is handled in the following order:

-

Userspace writes a vhost IOTLB message to the vhost-vDPA char device when it wants to establish or invalidate an IOVA->VA mapping.

-

The vhost-vDPA device receives the request and converts the VA to a PA (Physical Address). Note that the PA range may not be contiguous even if the VA range is, so a single IOVA->VA mapping may result in several IOVA->PA mappings.

-

The vhost-vDPA device will lock/unlock the pages in memory and store/remove the IOVA->PA mappings in its IOTLB.

-

The vhost-vDPA device will ask the IOMMU domain to build/remove the IOVA->PA mappings through the IOMMU API. This API is a unified set of operations through which IOMMU drivers cooperate with other kernel modules, for example to isolate userspace DMA.

-

The IOMMU domain will ask the IOMMU driver to perform vendor/platform specific tasks to establish/invalidate the mapping in the platform IOMMU hardware.
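From userspace, the first step above is a plain write() of a vhost IOTLB message to the char device. This sketch uses struct vhost_msg_v2 and the constants from <linux/vhost.h>, and assumes the VHOST_BACKEND_F_IOTLB_MSG_V2 backend feature has already been negotiated with VHOST_SET_BACKEND_FEATURES. The helper names are hypothetical.

```c
#include <errno.h>
#include <stdint.h>
#include <string.h>
#include <unistd.h>
#include <linux/vhost.h>

/* Send one IOTLB message (update or invalidate) to a vhost-vDPA fd. */
static int iotlb_send(int fd, uint8_t type, uint64_t iova, uint64_t size,
                      uint64_t uaddr, uint8_t perm)
{
    struct vhost_msg_v2 msg;
    ssize_t n;

    memset(&msg, 0, sizeof(msg));
    msg.type = VHOST_IOTLB_MSG_V2;
    msg.iotlb.type = type;
    msg.iotlb.iova = iova;
    msg.iotlb.size = size;
    msg.iotlb.uaddr = uaddr;
    msg.iotlb.perm = perm;

    n = write(fd, &msg, sizeof(msg));
    if (n < 0)
        return -1;
    if ((size_t)n != sizeof(msg)) {   /* short write: treat as an error */
        errno = EIO;
        return -1;
    }
    return 0;
}

/* Establish IOVA->VA for a buffer owned by this process. */
int dma_map_user(int fd, uint64_t iova, const void *buf, uint64_t size)
{
    return iotlb_send(fd, VHOST_IOTLB_UPDATE, iova, size,
                      (uint64_t)(uintptr_t)buf, VHOST_ACCESS_RW);
}

/* Invalidate a previously established range (uaddr/perm unused here). */
int dma_unmap_user(int fd, uint64_t iova, uint64_t size)
{
    return iotlb_send(fd, VHOST_IOTLB_INVALIDATE, iova, size, 0, 0);
}
```

Because the message is just bytes written to a file descriptor, the sketch can be exercised against a pipe instead of a real device.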

The device (the vDPA VF in this example) then initiates DMA requests using IOVAs. Each IOVA is validated and translated to a PA by the platform IOMMU before the actual memory access takes place.
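The VA-to-PA splitting and the later IOMMU lookup can be illustrated with a toy model. Here page_pa is a hypothetical input standing for the physical pages found when the VA range is pinned, a 4 KiB page size is assumed, physically contiguous pages are coalesced into one IOVA->PA run, and translate() plays the role of the IOMMU validating and translating an IOVA. Real IOMMUs use page tables rather than a linear search.

```c
#include <stddef.h>
#include <stdint.h>

#define PG 4096u   /* assumed page size */

struct iova_pa_map { uint64_t iova, pa, size; };

/* Split an IOVA->VA mapping of npages pages into IOVA->PA runs.
 * page_pa[i] is the PA backing the i-th VA page (hypothetical input).
 * Returns the number of runs written to out (at most npages). */
size_t split_to_pa_runs(uint64_t iova, const uint64_t *page_pa, size_t npages,
                        struct iova_pa_map *out)
{
    size_t n = 0;

    for (size_t i = 0; i < npages; i++) {
        if (n > 0 && out[n - 1].pa + out[n - 1].size == page_pa[i]) {
            out[n - 1].size += PG;                  /* PA contiguous: extend */
        } else {
            out[n].iova = iova + (uint64_t)i * PG;  /* start a new run */
            out[n].pa = page_pa[i];
            out[n].size = PG;
            n++;
        }
    }
    return n;
}

/* IOMMU-style lookup: validate an IOVA and translate it to a PA.
 * Returns 0 and sets *pa on success, or -1 (a DMA fault) if unmapped. */
int translate(const struct iova_pa_map *m, size_t n, uint64_t iova, uint64_t *pa)
{
    for (size_t i = 0; i < n; i++) {
        if (iova >= m[i].iova && iova - m[i].iova < m[i].size) {
            *pa = m[i].pa + (iova - m[i].iova);
            return 0;
        }
    }
    return -1;
}
```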

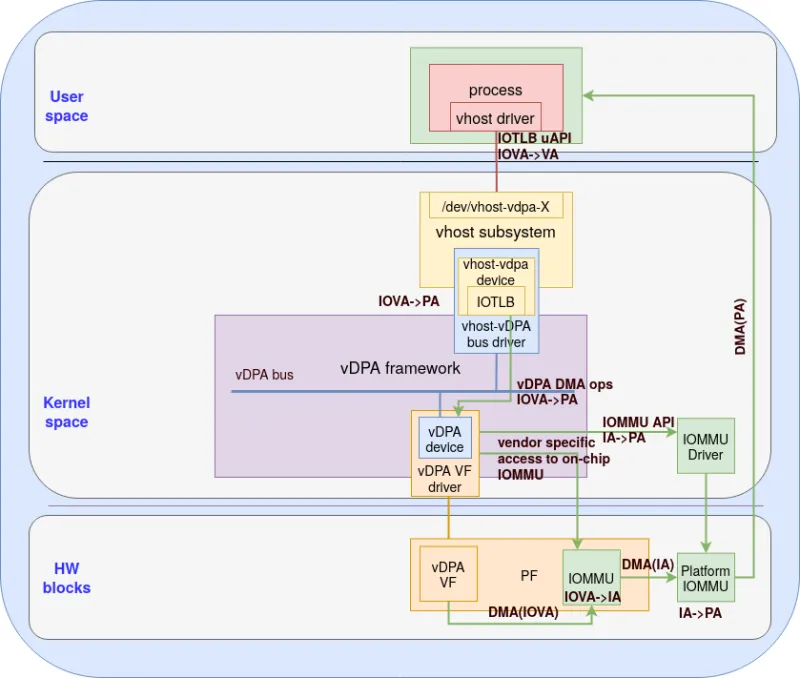

DMA translation on on-chip IOMMU

In this case, DMA translation is implemented by the device itself, together with its driver. The vhost-vDPA bus driver forwards the DMA mapping requests to the vDPA bus, as illustrated in figure 3:

Figure 3: Memory mapping with device specific DMA translation

When the vhost-vDPA bus driver detects that the vDPA DMA bus operations are implemented by the vDPA device driver (as opposed to relying on the platform IOMMU), all DMA/IOMMU processing is done at the level of the vDPA device driver. This usually requires setting up DMA/IOMMU domains for both the on-chip IOMMU and the platform IOMMU. In this case the vhost-vDPA bus driver does not use the IOMMU API but instead forwards the memory mappings to the vDPA device driver through the vDPA DMA bus operations:

-

The vhost-vDPA device receives the request and converts VA to PA.

-

The vhost-vDPA device will lock/unlock the pages in memory and store/remove the IOVA->PA mappings in its IOTLB.

-

The vhost-vDPA device will ask the vhost-vDPA bus driver to forward the mapping request to the vDPA device driver.

-

The vDPA device driver will use vendor-specific methods to set up the IOMMU embedded in the device. When needed, the vDPA device driver cooperates with the platform IOMMU driver for the necessary setup. This means the vDPA device driver needs to set up/remove IOVA->IA mappings (IA is Intermediate Address) in the on-chip IOMMU and IA->PA mappings in the platform IOMMU.
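The forwarding decision can be sketched as follows. dma_map (program one range at a time) and set_map (hand over the whole IOTLB) are named after the two kinds of device-specific DMA ops offered by the kernel's struct vdpa_config_ops; the types, signatures, and the iommu_map stand-in here are simplified and hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

struct mock_iotlb;   /* opaque: the vhost-vDPA device's IOVA->PA table */

/* Hypothetical, simplified device-specific DMA ops. */
struct mock_vdpa_ops {
    int (*dma_map)(void *vdpa, uint64_t iova, uint64_t size, uint64_t pa);
    int (*set_map)(void *vdpa, const struct mock_iotlb *iotlb);
};

/* Stand-in for the IOMMU API used on the platform IOMMU path. */
typedef int (*mock_iommu_map_fn)(void *domain, uint64_t iova, uint64_t pa,
                                 uint64_t size);

int forward_map(const struct mock_vdpa_ops *ops, void *vdpa,
                const struct mock_iotlb *iotlb, mock_iommu_map_fn iommu_map,
                void *domain, uint64_t iova, uint64_t size, uint64_t pa)
{
    if (ops->dma_map)     /* on-chip translation, programmed range by range */
        return ops->dma_map(vdpa, iova, size, pa);
    if (ops->set_map)     /* on-chip translation, whole table at once */
        return ops->set_map(vdpa, iotlb);
    return iommu_map(domain, iova, pa, size);   /* platform IOMMU path */
}
```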

When the device (vDPA VF) initiates DMA requests with an IOVA, the on-chip IOMMU first validates and translates the IOVA to an IA. The platform IOMMU then validates and translates the IA to the PA used for the actual memory access.
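The two-stage walk can be modeled with two range tables, one per IOMMU. The table layout and function names are assumptions made for illustration; real IOMMUs use page tables and report faults rather than returning -1.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical range table entry: [in, in + size) maps to [out, out + size). */
struct range_map { uint64_t in, out, size; };

/* One translation stage: validate addr against the table and translate it. */
static int stage(const struct range_map *t, size_t n, uint64_t addr,
                 uint64_t *res)
{
    for (size_t i = 0; i < n; i++) {
        if (addr >= t[i].in && addr - t[i].in < t[i].size) {
            *res = t[i].out + (addr - t[i].in);
            return 0;
        }
    }
    return -1;   /* no mapping: this stage faults */
}

/* IOVA -> IA in the on-chip IOMMU, then IA -> PA in the platform IOMMU. */
int two_stage_translate(const struct range_map *chip, size_t nchip,
                        const struct range_map *plat, size_t nplat,
                        uint64_t iova, uint64_t *pa)
{
    uint64_t ia;

    if (stage(chip, nchip, iova, &ia) < 0)
        return -1;
    return stage(plat, nplat, ia, pa);
}
```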

With the help of the IOTLB abstraction and its cooperation with the IOMMU domain and the vDPA device drivers, the vhost-vDPA device presents a unified, hardware-agnostic vhost device to userspace.

The virtio-vDPA bus driver

The design of the virtio-vDPA bus driver

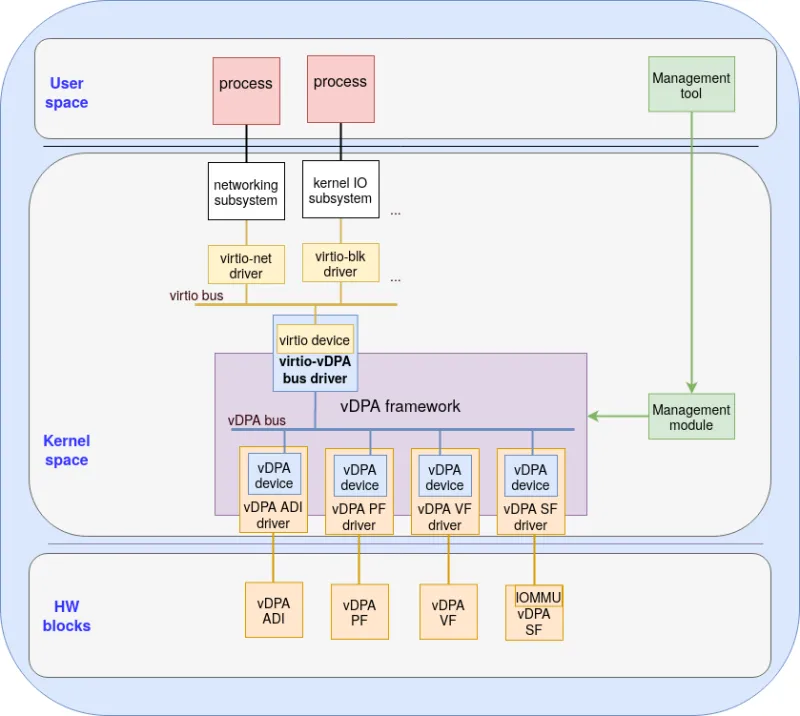

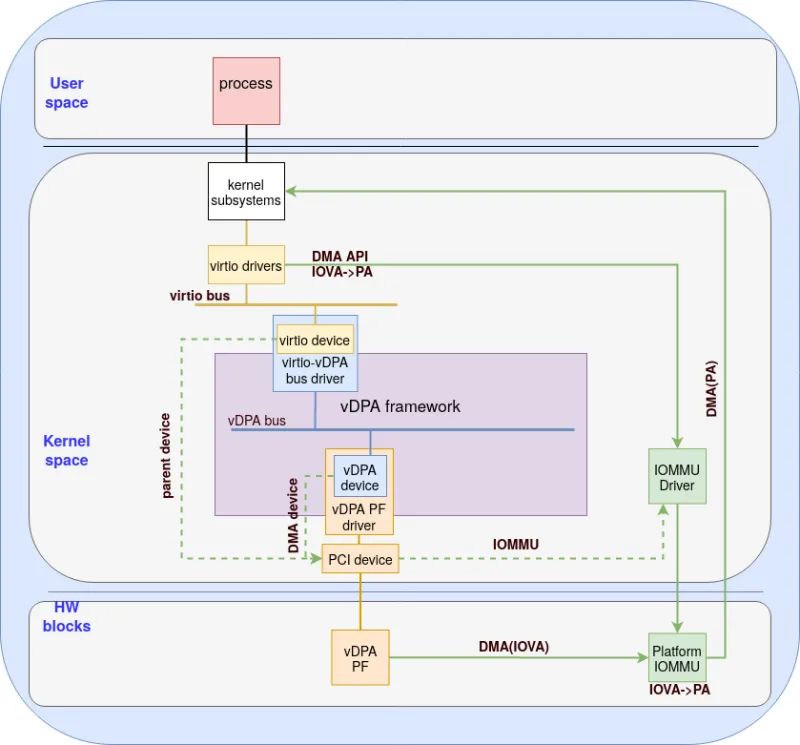

The virtio-vDPA bus driver presents a virtio device and leverages kernel virtio drivers as illustrated in the following figure:

Figure 4: the virtio-vDPA bus driver

The goal is to let unmodified kernel virtio drivers control vDPA devices as if they were virtio devices. For example, the kernel virtio-net driver can be used to control vDPA networking devices. The virtio-vDPA bus driver is used to provide a virtio-based kernel datapath to userspace.

The virtio-vDPA bus driver will emulate virtio devices on top of vDPA devices:

-

Implementing a new vDPA transport (a transport being the bus abstraction for virtio devices) that translates between virtio bus operations and vDPA bus operations.

-

Probing vDPA devices and registering virtio devices on the virtio bus.

-

Forwarding device interrupts to the virtio device.
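A simplified model of the transport translation: the virtio side calls transport callbacks, and each one is forwarded to a vDPA bus operation. The callback names loosely follow the kernel's virtio_config_ops (get_status, set_status, get) and vdpa_config_ops (get_status, set_status, get_config), but the structs and functions here are hypothetical stand-ins with most callbacks omitted.

```c
#include <stdint.h>
#include <string.h>

/* Hypothetical vDPA device state, reduced to a status byte and config space. */
struct mock_vdpa_device {
    uint8_t status;
    uint8_t config[16];
};

/* vDPA bus side: operations implemented by a vDPA device driver. */
struct mock_vdpa_ops {
    uint8_t (*get_status)(struct mock_vdpa_device *d);
    void (*set_status)(struct mock_vdpa_device *d, uint8_t status);
    void (*get_config)(struct mock_vdpa_device *d, unsigned int off,
                       void *buf, unsigned int len);
};

/* Virtio bus side: the vDPA parts are private to the transport. */
struct mock_virtio_device {
    struct mock_vdpa_device *vdpa;
    const struct mock_vdpa_ops *ops;
};

/* Transport callbacks: each virtio operation becomes a vDPA bus operation. */
uint8_t vv_get_status(struct mock_virtio_device *v)
{
    return v->ops->get_status(v->vdpa);
}

void vv_set_status(struct mock_virtio_device *v, uint8_t status)
{
    v->ops->set_status(v->vdpa, status);
}

void vv_get(struct mock_virtio_device *v, unsigned int off, void *buf,
            unsigned int len)
{
    v->ops->get_config(v->vdpa, off, buf, len);
}

/* A trivial vDPA device driver implementing the bus operations. */
static uint8_t drv_get_status(struct mock_vdpa_device *d) { return d->status; }
static void drv_set_status(struct mock_vdpa_device *d, uint8_t s) { d->status = s; }
static void drv_get_config(struct mock_vdpa_device *d, unsigned int off,
                           void *buf, unsigned int len)
{
    if (off > sizeof(d->config) || len > sizeof(d->config) - off)
        return;                     /* out of range: copy nothing */
    memcpy(buf, d->config + off, len);
}

const struct mock_vdpa_ops drv_ops = { drv_get_status, drv_set_status,
                                       drv_get_config };
```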

The virtio-vDPA bus driver can be seen as a mediator between the virtio bus and the vDPA bus. From the point of view of the vDPA bus, the virtio-vDPA bus driver is a bus driver (the vDPA bus does not directly see the virtio device or the virtio bus). From the perspective of the virtio bus, the virtio-vDPA bus driver provides a virtio device (the virtio bus does not directly see the virtio-vDPA bus driver or the vDPA bus).

Using this abstraction, the virtio device that represents the vDPA device can be probed by virtio drivers. For example, vDPA networking devices can be probed by the virtio-net driver and eventually used by the kernel networking subsystem. Processes can then use the socket-based uAPI to send or receive traffic via vDPA networking devices. Similarly, processes can use the kernel IO uAPI to read from and write to vDPA block devices with the help of the virtio-blk driver and the virtio-vDPA bus driver.

Memory (DMA) mapping with the virtio-vDPA bus driver

virtio-vDPA is still evolving, so in this post we focus only on the platform IOMMU approach (the on-chip approach is still being debated). The memory mapping with the virtio-vDPA bus driver is illustrated in figure 5:

Figure 5: memory (DMA) mapping with the virtio-vDPA bus driver

The kernel uses a single DMA domain for all the devices it owns. Kernel drivers cooperate with the IOMMU driver to set up DMA mappings through the DMA API, and this domain is set up during boot. The virtio-vDPA bus driver therefore only needs to handle DMA map/unmap, and does not need to care about IOMMU domain setup as the vhost-vDPA bus driver does.

The DMA API requires a device handle for finding the IOMMU. The virtio driver uses the parent device of the virtio device as the DMA handle. When the virtio-vDPA bus driver registers the virtio device on the virtio bus, it specifies the DMA device (usually the PCI device) of the underlying vDPA device as the parent device of the virtio device. When the virtio driver sets up or invalidates a DMA mapping, the request is passed via the DMA API to the PCI device (the parent device of the virtio device). The kernel then finds the IOMMU that the PCI device is attached to and forwards the request to the IOMMU driver, which talks to the platform IOMMU.
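The lookup chain reduces to a few pointers. In this hypothetical model, the virtio device's parent is set to the vDPA device's DMA device at registration, and a DMA API request is routed to whatever IOMMU that parent is attached to; none of these types or functions are kernel APIs.

```c
#include <stddef.h>

/* Hypothetical, minimal device model. */
struct mock_iommu { const char *name; };

struct mock_device {
    const char *name;
    struct mock_device *parent;
    struct mock_iommu *iommu;        /* non-NULL if attached to an IOMMU */
};

/* Registration: the vDPA device's DMA device (e.g. a PCI VF) becomes the
 * parent of the new virtio device. */
void virtio_vdpa_register(struct mock_device *vdev, struct mock_device *dma_dev)
{
    vdev->parent = dma_dev;
}

/* DMA API request on behalf of a virtio device: use its parent as the DMA
 * handle and route to the IOMMU that the parent is attached to. */
struct mock_iommu *route_dma_request(const struct mock_device *vdev)
{
    const struct mock_device *dma_dev = vdev->parent;

    return dma_dev ? dma_dev->iommu : NULL;
}
```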

When the vDPA device performs DMA using an IOVA, the IOMMU validates and translates it, and the resulting PA is used in the actual DMA to access the buffers owned by the kernel.

Summary

This post described how the vhost-vDPA bus driver and the virtio-vDPA bus driver leverage the kernel virtio and vhost subsystems to provide a unified interface for kernel virtio drivers and userspace vhost drivers.

We also explained the difference between platform IOMMU support and on-chip IOMMU support: the vhost-vDPA bus driver supports both, while the virtio-vDPA bus driver supports only the platform IOMMU.

In the next post we will shift our attention to use cases of the vDPA kernel framework with VMs and containers.

About the author

Experienced Senior Software Engineer working for Red Hat with a demonstrated history of working in the computer software industry. Maintainer of the QEMU networking subsystem. Co-maintainer of the Linux virtio, vhost and vDPA drivers.