Red Hat OpenShift supports the best of both virtualization worlds: virtual machines (VMs) and containers. In the containers and Kubernetes world, the "services" model permits external access to and consumption of applications that are deployed as containers within pods, which lets you define simple ingress points to applications with load balancing. In the VM world, by contrast, external load balancers are traditionally used to group services residing in VMs.

This article explains how to balance incoming traffic across multiple VMs using a Kubernetes service. This approach gives you one consistent way to expose container and VM workloads, and it avoids the need to consume or pay for a separate external load balancer.

How OpenShift creates VMs

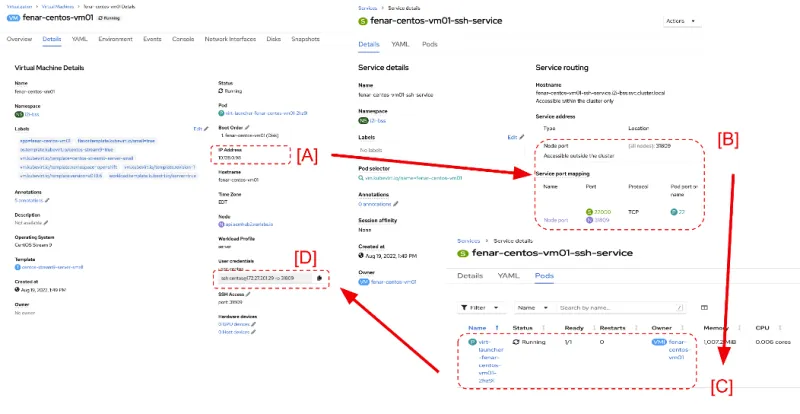

When you create a VM with OpenShift, the VM is assigned an Internet Protocol (IP) address from a pool of service addresses. A launcher pod accompanies the VM to allow remote Secure Shell (SSH) access to it, and the launcher pod is exposed through a Kubernetes service NodePort so that the VM can be accessed from outside of the cluster.

In Figure 1, the KubeVirt VM appears to expose its IP address as a reachable address (Box A), but that address is actually owned by the launcher pod (see Box X in Figure 2). The launcher pod acts as a sidecar proxy to the VM, which has only a pod namespace-level address (see Box Y in Figure 2).

From an SSH perspective, when a request is sent either to the service's cluster IP on the service port or to a Kubernetes node on the NodePort, the request is passed directly to the VM listening on the pod port.

Implement load balancing with multiple VMs

You can take advantage of this internal design to implement load balancing with multiple VMs by using a Kubernetes service with VM launcher pods. There are two steps to accomplishing this:

1. Assign a common pod selector label across all launcher pods.

2. Create a service that targets the service running inside the VMs (in this example, a simple web portal).
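The two steps above could be sketched as follows. This is a minimal sketch under stated assumptions, not the manifest used for this article: the label `app=vm-web`, the service name `vm-web-lb`, and port 80 are hypothetical placeholders. It also assumes that labels placed under a KubeVirt VirtualMachine's `spec.template.metadata.labels` are copied to the launcher pod the next time the VM starts.

```shell
#!/bin/sh
# Hypothetical placeholder names: adjust them to your environment.
LABEL_KEY="app"
LABEL_VALUE="vm-web"
SERVICE_NAME="vm-web-lb"

# Step 1: print a JSON merge patch that adds the common label to a VM's
# pod template, so that the launcher pod carries it (assumption: the VM
# must be restarted for the label to reach a running launcher pod).
render_label_patch() {
  printf '{"spec":{"template":{"metadata":{"labels":{"%s":"%s"}}}}}\n' \
    "$LABEL_KEY" "$LABEL_VALUE"
}

# Step 2: print a Service manifest whose selector matches every pod
# carrying the common label. The node port is left for Kubernetes to assign.
render_service() {
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: ${SERVICE_NAME}
spec:
  type: NodePort
  selector:
    ${LABEL_KEY}: ${LABEL_VALUE}
  ports:
    - protocol: TCP
      port: 80
      targetPort: 80
EOF
}
```

To try it, you might run `oc patch vm <vm-name> --type merge -p "$(render_label_patch)"` for each VM and then `render_service | oc apply -f -` in the namespace that hosts the VMs. Both functions only print text, so you can inspect the output before anything is sent to the cluster.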

Two notes on the Kubernetes service type (NodePort in this example):

- You can swap the service type NodePort for type LoadBalancer. Either way, you are still doing Kubernetes-native load balancing across the VMs.

- Not every node IP and NodePort combination is reachable from the internet, because node IP addresses may be routable only within a local enterprise network. Consumers inside that network can still reach the VM-based service through the NodePort. In fact, most enterprise IT traffic stays within private enterprise networks. Likewise, 5G user equipment (UE) traffic reaches the internet only after it breaks out from the 5G user plane function (UPF) cloud-native network functions (CNFs). Anything before that point (that is, all local 5G network fabric traffic) is not internet accessible.
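As an illustration of the first note, the service type could be switched with a small patch. This is a sketch only: the helper name `render_type_patch` and the service name `vm-web-lb` used in the usage note are hypothetical, and a LoadBalancer service only receives an external address if the cluster has a load balancer implementation available (for example, one supplied by a cloud provider).

```shell
#!/bin/sh
# Print a JSON merge patch that sets a Service's type.
# Only the two types discussed in this article are accepted.
render_type_patch() {
  case "$1" in
    NodePort|LoadBalancer)
      printf '{"spec":{"type":"%s"}}\n' "$1"
      ;;
    *)
      echo "unsupported service type: $1" >&2
      return 1
      ;;
  esac
}
```

A possible invocation is `oc patch service vm-web-lb --type merge -p "$(render_type_patch LoadBalancer)"`. The selector and ports stay untouched, so the same launcher pods keep receiving the traffic.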

Test VM traffic

First, check that the web service running on the grouped VMs is reachable from within the cluster, but from a different namespace.
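The exact command for this in-cluster check is not shown here, so the following is only a sketch of one way to build the address. It assumes the default cluster DNS domain `cluster.local`; the service name `vm-web-lb`, the namespace `vm-demo`, and port 80 in the usage note are hypothetical.

```shell
#!/bin/sh
# Build the in-cluster URL of a service from its name, namespace, and port.
# Kubernetes DNS resolves <service>.<namespace>.svc.<cluster-domain> from
# any namespace; "cluster.local" is the default domain (assumption).
service_url() {
  if [ "$#" -ne 3 ]; then
    echo "usage: service_url <service> <namespace> <port>" >&2
    return 2
  fi
  printf 'http://%s.%s.svc.cluster.local:%s\n' "$1" "$2" "$3"
}
```

For example, `service_url vm-web-lb vm-demo 80` prints a URL that you could pass to `curl` from a shell in any pod that lives in another namespace.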

That was successful, so now test from outside the cluster:

$ curl http://api.acmhub2.narlabs.io:31923

Welcome to fenar-centos-vm02!

$ curl http://api.acmhub2.narlabs.io:31923

Welcome to fenar-centos-vm02!

$ curl http://api.acmhub2.narlabs.io:31923

Welcome to fenar-centos-vm01!

$ curl http://api.acmhub2.narlabs.io:31923

Welcome to fenar-centos-vm02!
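The four responses above come from both VMs but do not strictly alternate. To see the split over a larger number of requests, you could tally the responses with a small helper. This is a sketch; the function name `tally_backends` is hypothetical.

```shell
#!/bin/sh
# Count how many times each distinct response line appears on stdin.
# Blank lines are ignored; output is "<count> <response>", sorted.
tally_backends() {
  awk 'NF > 0 { count[$0]++ }
       END { for (line in count) print count[line], line }' | sort
}
```

A possible use is `for i in $(seq 1 20); do curl -s http://api.acmhub2.narlabs.io:31923; done | tally_backends`, which prints one line per VM with the number of requests it answered.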

Create highly available services

You can use Kubernetes service constructs to create highly available services in a mixed container and VM environment without any external components. This approach is especially handy in small-footprint and edge deployments where container and VM workloads coexist.

About the authors

Fatih, known as "The Cloudified Turk," is a seasoned Linux, OpenStack, and Kubernetes specialist with significant contributions to the telecommunications, media, and entertainment (TME) sectors across multiple geographies and many service providers.

Before joining Red Hat, he held noteworthy positions at Google, Verizon Wireless, Canonical Ubuntu, and Ericsson, honing his expertise in TME-centric solutions across various business and technology challenges.

With a robust educational background, holding an MSc in Information Technology and a BSc in Electronics Engineering, Fatih excels in creating synergies with major hyperscaler and cloud providers to develop industry-leading business solutions.

Fatih's thought leadership is evident through his widely appreciated technology articles (https://fnar.medium.com/) on Medium, where he consistently collaborates with subject matter experts and tech enthusiasts globally.

Rimma Iontel is a Chief Architect responsible for supporting Red Hat’s global ecosystem of customers and partners in the telecommunications industry. Since joining Red Hat in 2014, she’s been assisting customers and partners in their network transformation journey, helping them leverage Red Hat open source solutions to build telecommunication networks capable of providing modern, advanced services to consumers in an efficient, cost-effective way.

Iontel has more than 20 years of experience working in the telecommunications industry. Prior to joining Red Hat, she spent 14 years at Verizon working on Next Generation Networks initiatives and contributing to the first steps of the company’s transition from legacy networks to the software-defined cloud infrastructure.