This is part one of a two-part series that discusses multicluster service discovery in OpenShift using Submariner and Lighthouse. This is active research and development, with support expected in an upcoming OpenShift release.

Service discovery is the process by which a service exposed from a cluster is made available for DNS requests from clients. For services within the same cluster, DNS resolution is handled by Kubernetes via the kube-dns component. However, for a hybrid multicluster deployment with clusters deployed on different cloud providers and/or on-premises, another solution is needed.

There are several bespoke implementations that try to solve this problem, but a standard solution is lacking. The Lighthouse project under Submariner provides cross-cluster service discovery for the clusters that are connected by Submariner.

There is a proposal in the Kubernetes community to standardize multicluster service discovery. The Lighthouse project predates this proposal and hence takes a slightly different approach, using its own API definitions. Across recent and upcoming releases, Lighthouse is moving toward embracing the upstream proposal and using the standard APIs.

The core Submariner component connects overlay networks of different Kubernetes clusters and is designed to be compatible with any CNI plug-in. When two clusters are connected using Submariner, pod-to-pod and pod-to-service connectivity is provided via their IPs. The Lighthouse project was initiated to provide reachability of services via their name.

Lighthouse provides cross-cluster DNS resolution using a custom CoreDNS server that is deployed in each cluster. This server is authoritative for the supercluster.local domain (as defined in the upstream proposal). The in-cluster KubeDNS is configured to forward all requests for this domain to the Lighthouse CoreDNS server for resolution. Lighthouse also runs an agent in each cluster, which is responsible for syncing service information across clusters using custom resource definitions (CRDs). The Lighthouse DNS server returns an A record for a DNS request on <service>.<namespace>.svc.supercluster.local based on the synced service information.
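As a minimal sketch of the naming scheme described above, the following Python snippet builds the multicluster name for a service and checks whether a query falls under the domain that would be forwarded to Lighthouse. The function names are hypothetical and chosen for illustration only; they are not part of Lighthouse or CoreDNS.

```python
# Domain that the Lighthouse DNS server is authoritative for (from the text above).
CLUSTERSET_DOMAIN = "supercluster.local"


def multicluster_fqdn(service: str, namespace: str, domain: str = CLUSTERSET_DOMAIN) -> str:
    """Build <service>.<namespace>.svc.<domain> (hypothetical helper)."""
    return f"{service}.{namespace}.svc.{domain}"


def is_lighthouse_query(name: str, domain: str = CLUSTERSET_DOMAIN) -> bool:
    """Return True if a DNS name falls under the forwarded domain (hypothetical helper)."""
    # DNS names are case-insensitive and may carry a trailing root dot.
    normalized = name.rstrip(".").lower()
    return normalized == domain or normalized.endswith("." + domain)


if __name__ == "__main__":
    fqdn = multicluster_fqdn("nginx", "default")
    print(fqdn)
    print(is_lighthouse_query(fqdn))
    print(is_lighthouse_query("nginx.default.svc.cluster.local"))
```

Names under the regular cluster.local domain do not match, so in this sketch they would stay with the in-cluster DNS.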

Lighthouse uses an opt-in model for service distribution whereby a service must be explicitly exported to other clusters. This is done by creating a ServiceExport resource with the same name and namespace as the service to export.
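To make the opt-in step concrete, here is a small sketch that assembles a ServiceExport manifest as a Python dictionary and prints it as JSON, which could then be passed to a tool such as kubectl. The API group and version string used as the default is an assumption, not something stated in this article; check the CRDs installed in your cluster for the actual value. The helper name and the nginx/default example are also hypothetical.

```python
import json

# ASSUMPTION: the apiVersion depends on the Lighthouse release in use.
# Verify it against the ServiceExport CRD installed in your cluster.
ASSUMED_API_VERSION = "lighthouse.submariner.io/v2alpha1"


def service_export_manifest(name: str, namespace: str,
                            api_version: str = ASSUMED_API_VERSION) -> dict:
    """Build a ServiceExport with the same name and namespace as the service."""
    return {
        "apiVersion": api_version,
        "kind": "ServiceExport",
        "metadata": {"name": name, "namespace": namespace},
    }


if __name__ == "__main__":
    # Export a hypothetical service named "nginx" in the "default" namespace.
    print(json.dumps(service_export_manifest("nginx", "default"), indent=2))
```

The only part taken from the text is that the resource kind is ServiceExport and that its name and namespace must match the service being exported.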

Architecture

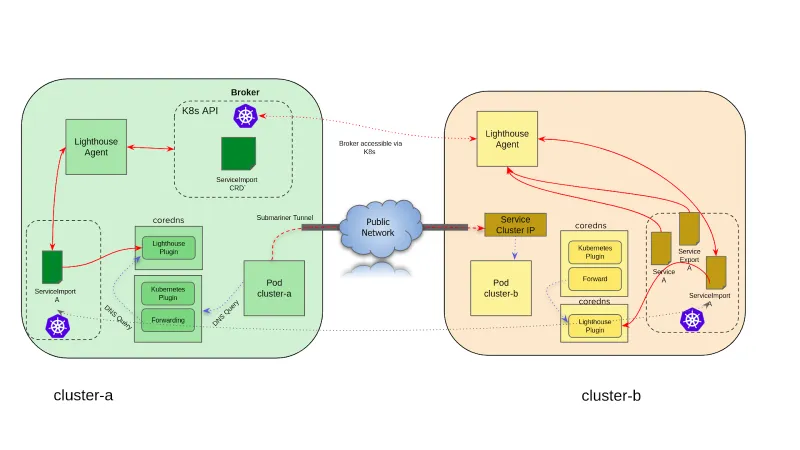

The diagram above shows the basic Lighthouse architecture. Details about the Submariner architecture are available at https://submariner-io.github.io/architecture/.

Submariner flattens the network between two clusters and ensures reachability across clusters with an IPsec tunnel. It uses a central Broker component to store and distribute data across clusters.

Lighthouse Agent

The Lighthouse Agent runs in every cluster and accesses the Kubernetes API server running in the Broker cluster to exchange service metadata information with other clusters. Local service information is exported to the Broker, and service information from other clusters is imported.

The workflow is as follows:

- The Lighthouse Agent connects to the Broker’s Kubernetes API server.

- For every service in the local cluster for which a ServiceExport has been created, the agent creates a corresponding ServiceImport resource and exports it to the Broker to be consumed by other clusters.

- For every ServiceImport resource in the Broker that was exported from another cluster, the agent creates a copy in the local cluster.

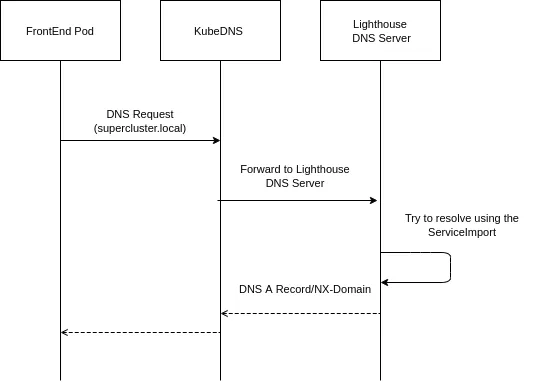
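The agent workflow above can be sketched as an in-memory simulation. This is not the Lighthouse implementation: the Broker is modeled as a plain Python set rather than a Kubernetes API server, and the class names, fields, cluster IDs, and IP addresses are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ServiceImport:
    """Simplified stand-in for the ServiceImport resource (hypothetical fields)."""
    name: str
    namespace: str
    source_cluster: str
    ip: str


@dataclass
class Cluster:
    cluster_id: str
    services: dict = field(default_factory=dict)  # (namespace, name) -> service IP
    exports: set = field(default_factory=set)     # (namespace, name) with a ServiceExport
    imports: set = field(default_factory=set)     # local copies of remote ServiceImports


def sync(cluster: Cluster, broker: set) -> None:
    """One pass of the agent loop: export local services, then import remote ones."""
    # Export: only services that have a matching ServiceExport are published.
    for key in cluster.exports:
        if key in cluster.services:
            namespace, name = key
            broker.add(ServiceImport(name, namespace, cluster.cluster_id,
                                     cluster.services[key]))
    # Import: copy every ServiceImport that originated in another cluster.
    for service_import in broker:
        if service_import.source_cluster != cluster.cluster_id:
            cluster.imports.add(service_import)


if __name__ == "__main__":
    broker = set()
    east = Cluster("east", services={("default", "nginx"): "10.0.0.5",
                                     ("default", "internal"): "10.0.0.6"},
                   exports={("default", "nginx")})
    west = Cluster("west")
    sync(east, broker)
    sync(west, broker)
    print(west.imports)
```

In this sketch the service named internal never reaches the Broker because no ServiceExport exists for it, which mirrors the opt-in model.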

Lighthouse DNS Server

The Lighthouse DNS server runs as an external DNS server that owns the supercluster.local domain. KubeDNS is configured to forward any request sent to supercluster.local to the Lighthouse DNS server, which resolves it using the ServiceImport resources distributed by the Lighthouse Agent.

The workflow is as follows:

- A pod tries to resolve a service name in the supercluster.local domain.

- KubeDNS forwards the request to the Lighthouse DNS server.

- The Lighthouse DNS server will use its ServiceImport cache to try to resolve the request.

- If a record exists, it is returned; otherwise, an NXDOMAIN error is returned.
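The last two steps of this workflow can be illustrated with a short lookup function. This is a sketch under simplifying assumptions, not the CoreDNS plug-in itself: the ServiceImport cache is a plain dictionary keyed by namespace and service name, the function name and return shape are hypothetical, and names outside the domain are simply answered with NXDOMAIN.

```python
from typing import Optional, Tuple

# Suffix of the names the Lighthouse DNS server answers for (from the text above).
DOMAIN_SUFFIX = ".svc.supercluster.local"


def resolve(query: str, cache: dict) -> Tuple[str, Optional[str]]:
    """Return ("NOERROR", ip) on a cache hit, otherwise ("NXDOMAIN", None)."""
    name = query.rstrip(".").lower()
    if not name.endswith(DOMAIN_SUFFIX):
        # Simplification: a real server would handle out-of-zone names differently.
        return ("NXDOMAIN", None)
    labels = name[: -len(DOMAIN_SUFFIX)].split(".")
    if len(labels) != 2:
        return ("NXDOMAIN", None)
    service, namespace = labels
    ip = cache.get((namespace, service))
    return ("NOERROR", ip) if ip else ("NXDOMAIN", None)


if __name__ == "__main__":
    # Hypothetical cache built from imported ServiceImport resources.
    cache = {("default", "nginx"): "10.0.0.5"}
    print(resolve("nginx.default.svc.supercluster.local", cache))
    print(resolve("missing.default.svc.supercluster.local", cache))
```

A hit yields the IP for the A record, and anything not present in the cache falls through to NXDOMAIN, matching the final bullet above.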

Deploying Submariner with Lighthouse

Submariner with Lighthouse can be deployed using the subctl command-line utility. The detailed steps are available at https://submariner.io/quickstart/.

The user needs to deploy a Broker and then join the clusters to it. The Broker can be deployed on a data cluster or on a separate cluster. The subctl join command deploys the Submariner Operator and creates the necessary RBAC roles, role bindings, and service accounts (SAs). The Operator then deploys the Submariner and Lighthouse components. If clusters have overlapping IP address ranges, the Globalnet feature can be enabled.