In this, the fourth and final post in our series, we’re going to use sysbench to stress our OpenShift Container Platform / OpenShift Container Storage cluster. We’ll use the configuration of 60 MySQL database instances (similar to the test configuration in Part 3) and stress all of them at the same time using sysbench 0.5. We’ll also compare the performance and node resources between GlusterFS and GlusterBlock. Finally, we’ll simulate the same failure scenario from Part 3, GlusterFS versus EBS, but under the stress of sysbench.

Throughout this post, we’ll use different types of StatefulSet (STS), pods, ConfigMaps, and deployments. As in previous posts, let’s make sure we know and understand our test environment as detailed in the following section.

OpenShift on AWS test environment

All posts in this series use a Red Hat OpenShift Container Platform on AWS setup that includes 8 EC2 instances deployed as 1 master node, 1 infra node, and 6 worker nodes that also run Red Hat OpenShift Container Storage Gluster and Heketi pods.

The 6 worker nodes are basically the storage provider and persistent storage consumers (MySQL). As shown in the following, the OpenShift Container Storage worker nodes are of instance type m5.2xlarge with 8 vCPUs, 32 GB memory, and 3x100GB GP2 volumes attached to each node for OpenShift Container Platform and one 1TB GP2 volume for the OpenShift Container Storage cluster.

The AWS region us-west-2 has availability zones (AZs) us-west-2a, us-west-2b, and us-west-2c, and the 6 worker nodes are spread across the 3 AZs, 2 nodes in each AZ. This means the OpenShift Container Storage cluster is stretched across these 3 AZs. Following is a view from the AWS console showing the EC2 instances and how they are placed in the us-west-2 AZs.

MySQL and sysbench pods deployment

We’re going to use a StatefulSet (STS) again to deploy MySQL and sysbench in our environment. The first phase is to create the persistent volume and run the “sysbench prepare” statement to create the database for all our pods. A quick way to do so is via one STS that includes two containers, one for MySQL and one for sysbench. While running two containers in the same pod may not be a typical real-life scenario, doing so forces you to scale both at the same time. For our purpose of creating all the databases simultaneously, it is useful.

Remember that when deleting the MySQL/sysbench STS, the PersistentVolumeClaims (PVCs) that were created when you initialized the STS are still alive and intact. This means you can re-use them as much as you want. In our case, it means we can run different test scenarios using the same PVC for different MySQL/sysbench pod creations knowing the data should stay the same.

So let’s get to it. Following is the STS definition file for creating the MySQL container, the sysbench container, and the PVC:

$ cat OCS-FS-STS.yaml
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mysql-ocs-fs
spec:
  selector:
    matchLabels:
      app: mysql-ocs-fs
  serviceName: "mysql-ocs-fs"
  podManagementPolicy: Parallel
  replicas: 60
  template:
    metadata:
      labels:
        app: mysql-ocs-fs
    spec:
      terminationGracePeriodSeconds: 10
      containers:
      - name: mysql-ocs-fs
        image: mysql:5.7
        env:
        resources:
          requests:
            memory: 2Gi
          limits:
            memory: 2Gi
        ports:
        - containerPort: 3306
          name: mysql
        volumeMounts:
        - name: mysql-ocs-fs-data
          mountPath: /var/lib/mysql
        - name: custom-rh-mysql-config
          mountPath: /etc/mysql/conf.d
      - name: sysbench
        image: vaelinalsorna/sysbench-container:1.8
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: password
        - name: MY_NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        - name: MY_POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: MY_POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: MY_POD_IP
          valueFrom:
            fieldRef:
              fieldPath: status.podIP
        resources:
          limits:
            memory: 512Mi
        command:
        - "bash"
        - "-c"
        - >
          while ! /usr/bin/mysql -sN --user=root --password=password -h $(hostname) -e "select 3" >/dev/null 2>&1 ; do /bin/sleep 0.5; done;
          log_file=/tmp/sysbench-$MY_POD_NAME-$MY_NODE_NAME.log;
          /usr/bin/mysql -sN --user=root --password=password -h $(hostname) -e "create database sysbench" >> $log_file 2>&1;
          ./run_sysbench prepare 3 600 off off $(hostname) 10 >> $log_file 2>&1;
          /bin/sleep infinity;
      volumes:
      - name: custom-rh-mysql-config
        configMap:
          name: mysql-custom-config
  volumeClaimTemplates:
  - metadata:
      name: mysql-ocs-fs-data
    spec:
      storageClassName: glusterfs-storage
      accessModes: [ "ReadWriteOnce" ]
      resources:
        requests:
          storage: 15Gi

Some thoughts on the STS YAML file:

As you can see, we added another container called “sysbench,” which we’re pulling from docker.io. The container build is very basic and only holds the packages needed to compile and run sysbench 0.5. The extra bit here is a simple bash script we created called run_sysbench. This script accepts the parameters for running sysbench, including number of threads, read/write scenarios, reporting interval, the MySQL service to connect to, and test duration.

The database we created will use the sysbench OLTP method and will include 50 tables, each with 1 million rows. With 50 tables and 1 million rows per table, our approximate database size is 10GB. Various scenarios and database sizes can be tested. We’ve chosen one that is on one hand close to the previous multi-tenant scenario of MySQL/WordPress and on the other hand not too small for an OLTP environment. (Note: The database size via tables and rows is hard coded in the script. Feel free to modify it to fit your needs.)

Throughout this series, we used MySQL default settings to make things simple and to show these are real-life numbers without any special variable configurations. As you can see in OCS-FS-STS.yaml, we are using a simple ConfigMap called “mysql-custom-config.” We use this ConfigMap to force a specific value for the MySQL innodb_buffer_pool_size variable so no more than 1GB of buffer can be used for database caching. Remember from Part 3 that each MySQL container uses 2GB of RAM, so we’re using half of it for innodb_buffer_pool_size. The ConfigMap “mysql-custom-config” shown in the following really uses the set of default variables that come with the official MySQL container image. The only change is the value of the innodb_buffer_pool_size variable.

apiVersion: v1
kind: ConfigMap
metadata:
  name: mysql-custom-config
  labels:
    app: mysql-ocs-fs
data:
  mysql.ocs.cnf: |
    [mysqld]
    skip-host-cache
    skip-name-resolve
    innodb_buffer_pool_size = 1073741824
    [mysqldump]
    quick
    quote-names
    max_allowed_packet = 16M

Now let’s review the memory limitations and calculations we did in both the OCS-FS-STS.yaml and ConfigMap.yaml files. In the OCS-FS-STS.yaml file, we limited the memory consumption for the sysbench container to 0.5GB and the MySQL container to 2GB.

If we do the math, each pod requires 2.5GB of memory. Multiply this by 10 pods per node to get 25GB. Because each of our worker node instances has 32GB of RAM, theoretically that leaves 7GB available for the operation of OCP and OpenShift Container Storage. In the ConfigMap.yaml file, we limited the innodb_buffer_pool_size to 1GB. This follows the general rule of configuring for 10% of your database to be cached.
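The memory budget above can be checked with a few lines of shell arithmetic. This is only a sketch: it assumes the scheduler spreads the 60 pods evenly (10 per node) and treats the 32GB of instance memory as 32768 MiB. The variable names are illustrative and do not come from the original scripts.

```shell
#!/bin/sh
# Memory budget per worker node, in MiB, using the limits from OCS-FS-STS.yaml.
mysql_limit_mib=2048      # MySQL container limit (2Gi)
sysbench_limit_mib=512    # sysbench container limit (512Mi)
pods_per_node=10          # assumption: 60 pods spread evenly over 6 worker nodes
node_ram_mib=32768        # m5.2xlarge memory, treated as 32 GiB

pod_mib=$((mysql_limit_mib + sysbench_limit_mib))
used_mib=$((pod_mib * pods_per_node))
free_mib=$((node_ram_mib - used_mib))

echo "per pod:  ${pod_mib} MiB"
echo "per node: ${used_mib} MiB"
echo "headroom: ${free_mib} MiB"

# Buffer pool as a share of the roughly 10GB database.
buffer_pool_mib=1024      # innodb_buffer_pool_size = 1073741824 bytes
database_mib=10240        # approximate database size quoted in the post
cached_pct=$((buffer_pool_mib * 100 / database_mib))
echo "cached:   ${cached_pct}%"
```

Changing pods_per_node or the limits shows quickly how much room is left for OCP and OpenShift Container Storage before the node is overcommitted.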

All that is needed now for setup is to run “oc create -f OCS-FS-STS.yaml.” All 60 pods will be created at the same time, with 60 new PVCs created and 60 processes of the sysbench prepare phase running to populate the database for each MySQL container.

You can monitor the creation of the databases across all pods by using the following query, which checks the number of rows in the last table that is supposed to be created (sbtest50). Run this at the shell:

for pod in $(oc get pods|grep mysql|awk '{print $1}');do echo "pod $pod";oc exec -c mysql-ocs-fs $pod -- bash -c "mysql -uroot -ppassword -h \$(hostname) sysbench -e \"SELECT count(1) from sbtest50;\"";done
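With 60 pods, reading the raw counts by eye gets tedious. The helper below is a minimal sketch that lists only the pods still short of the expected 1 million rows. The function name unfinished_pods and the "pod-name row-count" input format are assumptions made for this example, not part of the original scripts.

```shell
#!/bin/sh
# Print the pods whose sbtest50 row count is still below the target.
# Input (stdin): one "<pod-name> <row-count>" pair per line.
expected_rows=1000000

unfinished_pods() {
    awk -v want="$expected_rows" '$2 + 0 < want { print $1 }'
}

# Possible usage against the cluster (commented out; requires oc and a login).
# "mysql -sN" prints the bare number; an empty result is treated as 0 rows.
#
# for pod in $(oc get pods | grep mysql | awk '{print $1}'); do
#     rows=$(oc exec -c mysql-ocs-fs "$pod" -- bash -c \
#         "mysql -sN -uroot -ppassword -h \$(hostname) sysbench -e 'SELECT count(1) FROM sbtest50;'" 2>/dev/null)
#     echo "$pod ${rows:-0}"
# done | unfinished_pods
```

An empty result from the pipeline means every pod has finished populating sbtest50.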

Once all pods have a database with the last table sbtest50 with 1 million rows created for GlusterFS-based MySQL pods, you can create GlusterBlock-based MySQL pods, assuming you have available storage for both, which we do.

Currently in our OCS-FS-STS.yaml file, we’ve used the “glusterfs-storage” storage class, so now we’ll switch to using “glusterfs-storage-block” and create 60 GlusterBlock-based MySQL/sysbench pods including the prepare stage for the database creation.

To do so, we need to create new pods with new GlusterBlock PVCs. Because of the memory (and CPU) calculation we outlined previously, we must free up the resources for the GlusterBlock-based MySQL. We can delete the GlusterFile STS (“oc delete sts mysql-ocs-fs”) to free up these resources, and all the claims for these pods (the PVCs) will still be intact and available to use. In fact, we’ll use these PVCs later.

We can count the number of GlusterFile PVCs after the STS delete using the following:

$ oc get pvc |grep mysql-ocs-fs-data-mysql-ocs-fs|wc -l
60

At this point, let’s talk a little bit about GlusterBlock. It has been available in OpenShift Container Storage since Container Native Storage 3.6 (the previous name for OCS) and is useful for applications that require a block interface. To do the same test configuration for GlusterBlock, we did a search and replace, changed “mysql-ocs-fs” to “mysql-ocs-block” in OCS-FS-STS.yaml, and, of course, changed the storage class to “glusterfs-storage-block” so new PVCs will be claimed from this storageclass. Once the prepare finishes, we have 60 GlusterBlock PVCs with sysbench OLTP data ready to use:
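The search and replace described above can be scripted. The following is a sketch using sed; the function name fs_to_block_sts and the output file name OCS-BLOCK-STS.yaml are hypothetical, chosen only for this example.

```shell
#!/bin/sh
# Derive the GlusterBlock StatefulSet from the GlusterFS one (stdin -> stdout):
#  1. rename every "mysql-ocs-fs" to "mysql-ocs-block"
#  2. point storageClassName at glusterfs-storage-block
fs_to_block_sts() {
    sed -e 's/mysql-ocs-fs/mysql-ocs-block/g' \
        -e 's/\(storageClassName:[[:space:]]*glusterfs-storage\)[[:space:]]*$/\1-block/'
}

# Possible usage (commented out; the output file name is hypothetical):
# fs_to_block_sts < OCS-FS-STS.yaml > OCS-BLOCK-STS.yaml
# oc create -f OCS-BLOCK-STS.yaml
```

The second expression only matches a line ending in glusterfs-storage, so running the function twice on the same input does not append “-block” a second time.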

$ oc get pvc |grep mysql-ocs-block-data-mysql-ocs-block|wc -l
60

Along with the 60 GlusterBlock PVCs, we still have the 60 PVCs for GlusterFS and can start the sysbench run phase using these populated PVCs.

Stressing our OCP/OCS cluster using sysbench

Because the pods currently running are already using GlusterBlock, we will start with them.

To run a workload using sysbench, and because we used StatefulSets (STS), a simple Bash script will start the sysbench run phase on all 60 pods:

#!/bin/bash
# Parameters passed to run_sysbench inside each pod
run_name=glusterblock-test
test_length=300
threads=5
read_only=off
write_only=off
output_interval=10

# Start the sysbench run phase in the background on every pod
for pod_name in $(oc get pods|grep -v NAME|awk '{print $1}')
do
  echo "background executing $run_name in pod $pod_name"
  oc exec -c sysbench $pod_name -- nohup bash -c "/usr/src/sysbench/run_sysbench run $test_length $threads $read_only $write_only \$(hostname) $output_interval >> /tmp/$run_name-$threads-$pod_name" &
done

This will run the test for 5 minutes, using 5 threads on each pod. Because we’re comparing performance, it’s always better to run one dry-run for 5 minutes and then run several longer tests (in our case 10 minutes), averaging all results.
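Averaging the results can also be scripted. The sketch below assumes the log files contain sysbench interval reports with a "tps:" field followed by the value (for example "[  10s] threads: 5, tps: 123.40, reads: ..."). Because the output of the run_sysbench wrapper is not shown in this post, treat that format as an assumption. The function name average_tps is illustrative.

```shell
#!/bin/sh
# Average the "tps:" values found in sysbench interval reports read from stdin.
# Assumed line shape: "[  10s] threads: 5, tps: 123.40, reads: ..."
average_tps() {
    awk '{
        for (i = 1; i < NF; i++)
            if ($i == "tps:") {
                sum += $(i + 1)    # "123.40," is coerced to the number 123.4
                n++
            }
    }
    END {
        if (n) printf "%.2f\n", sum / n
        else   print "no samples"
    }'
}
```

Feeding the function the concatenated logs of several runs gives one overall figure; feeding it one log at a time gives per-pod averages.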

We also collected performance information on the OpenShift worker nodes during the runs using “vmstat”, “iostat”, “mpstat,” and “pidstat” for the MySQL and sysbench processes. (If you’re interested in the full set of scripts used here, you can clone from our git repository.)

Once we’ve collected the information on GlusterBlock, we can move to running it on pods using GlusterFS. We run oc delete sts mysql-ocs-block to delete all the MySQL/sysbench pods and then use the previous STS yaml file to create the GlusterFS based pods (oc create -f OCS-FS-STS.yaml) using the existing GlusterFS PVCs created in the last section.

Before discussing the numbers, one fact should be explained. GlusterBlock volumes are created in a GlusterFS volume called the “GlusterBlock host volume” with replica count set to 3. When deploying GlusterBlock, we set the GlusterBlock host volume size to 950GB so we can fit 60 x 15GB PVs and have some extra room. With replicas set to 3 in OpenShift Container Storage/Heketi for the “GlusterBlock host volume,” it means that out of our 6 worker nodes that provide storage for the OpenShift Container Storage cluster, only 3 of the worker nodes will be used to provide storage.
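The sizing of the GlusterBlock host volume is easy to verify. This is a small sketch of the arithmetic; the variable names are illustrative, and the raw figure simply multiplies the host volume size by the replica count.

```shell
#!/bin/sh
# Capacity check for the GlusterBlock host volume.
pvc_count=60
pvc_size_gb=15
host_volume_gb=950
replicas=3

needed_gb=$((pvc_count * pvc_size_gb))       # space the block PVs consume
spare_gb=$((host_volume_gb - needed_gb))     # extra room left in the host volume
raw_gb=$((host_volume_gb * replicas))        # space consumed across the 3 replicas

echo "block PVs:  ${needed_gb} GB"
echo "spare:      ${spare_gb} GB"
echo "raw on EBS: ${raw_gb} GB"
```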

And now for some numbers:

The value “Average TPS” (transactions per second) is the average we got from each of the 60 pods using sysbench default read/write parameters, which work out to something like 70% reads, 20% writes, and 10% other. The “other” category includes operations like index updates and so on.

The next graph shows the difference between GlusterBlock and GlusterFS when running 60 pods (MySQL/sysbench). The values are TPS per pod averages:

The next graph shows the data for the whole cluster. This graph also shows the difference between GlusterBlock and GlusterFS when running 60 pods (MySQL/sysbench). The following values are TPS averages for the entire cluster:

So what we are seeing here is that GlusterBlock TPS is roughly 35-40% behind GlusterFS TPS and that the gap widens as we add more pressure (more threads in the test).

Comparison of different AWS Elastic Block Store types

Because AWS is offering different types of EBS volumes, we wanted to find out how much they differ from a performance point of view. To do so, we set up a second, identical OpenShift Container Storage deployment with one difference: We did not use the GP2 volume for these OpenShift Container Storage tests but instead used the EBS storage type io1. According to AWS, the significant difference between the two is the maximum number of IOPS per volume and the throughput:

In the “OCS on gp2 vs io1” graph, the “Average TPS” value is the average of the results we got from the 60 individual pods using sysbench default read/write parameters (70% reads, 20% writes, and 10% other). In this setup, using EBS io1 storage instead of GP2 storage for the OpenShift Container Storage cluster, we repeated the tests described previously for both GlusterFS and GlusterBlock PVCs. The following graph shows the difference between using EBS GP2 and io1 when running 60 pods (MySQL/sysbench), as before. The following values are TPS per pod averages for each storage type:

It’s obvious from comparing the graphs for TPS per pod that io1 storage easily outperforms GP2 by a factor of 3X to 4X using the same AWS EC2 instance type. Taking into account that GP2 storage is backed by standard SSDs while io1 uses high-performance SSDs, this is not a surprise.

Failover of a node under stress, comparison of GlusterFS and EBS

In this last section, we’ll repeat a failover comparison similar to the one in Part 3, but this time our “application” communicating with MySQL will be sysbench and the simulated failed node will be under stress from sysbench (meaning, IOs will continue to be sent). Unlike in Part 3, we’re going to run all the sysbench pods from one dedicated node, and that’s because we’re testing not the resilience of sysbench or its ability to sustain IO loss but the underlying storage components, in our case GlusterFS PVs or EBS GP2 PVs. For this testing, GlusterBlock was not included.

As we explained in Part 3, because we have only one OpenShift master in one AZ location we’re limited with EBS to running this test between 2 OpenShift worker nodes (the ones in AZ us-west-2a). The first thing we’ll do is label one of the OpenShift nodes not hosting MySQL deployments:

$ oc label nodes node5 application_name=sysbench

So we just created a label called “application_name,” gave it a value of sysbench, and assigned the label to this specific node. This will be the OpenShift node dedicated to running the sysbench pods. To deploy our sysbench pods, all that is needed is to use the same container definition from our OCS-FS-STS.yaml, adding only this to the deployment definition:

nodeSelector:
  application_name: sysbench

Any deployment of the sysbench pod will now be forced to run on this specific OpenShift node with this label.

Now we’re going to do the same, adding a label for the two worker nodes in AZ us-west-2a:

$ oc label nodes node1 node2 application_name=mysql-ocs-fs

We’ll also add the “nodeSelector” using this label to the MySQL pod/container definition we had in the STS deployment.

In this test, like in Part 3, we’re going to run as a multi-tenant environment, so we’ll create 10 projects, each with a MySQL pod, a sysbench pod, the matching service, a ConfigMap, and PVs/PVCs. (You can find the full scripts in our git repository.)

The failover test is similar to that in Part 3. We start sysbench on the 10 designated sysbench pods (remember these are confined to a specific node via label “application_name: sysbench”), each connected to a different MySQL pod. The MySQL pods are also all cordoned (confined) to one node via label “application_name: mysql-ocs-fs.”

Once all the 10 MySQL pods are running the sysbench workload, we’ll delete the MySQL pods, thereby causing them to be restarted on a different OpenShift node in the same AZ. We monitor the time IOs were not received from sysbench until the time IOs are received, because MySQL is online again and responding to read/write requests. We also used “watch oc get pods” to see how long the new pods take to get created.

We ran the test 10 times for each of the volume storage types (GlusterFS and EBS GP2).

Before showing the sample output from sysbench, we’d like to define a new term: “io-pause.” This term describes the time during which IOs are not acknowledged by our MySQL pods.

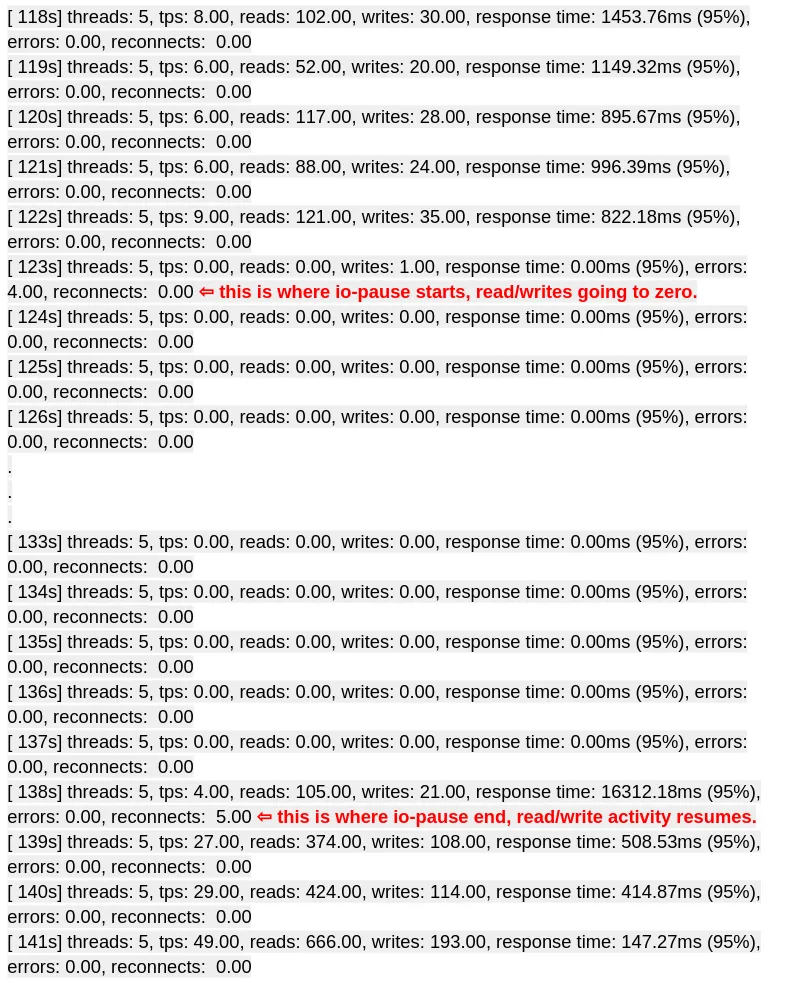

Here’s a sample from the sysbench output from one of the pods running on GlusterFS storage:

As you can see, the sysbench output shows that IOs were not received by MySQL for roughly 14 seconds. We ran the same test 10 times and averaged the results across the 10 pods in each of the 10 tests; on average, the “io-pause” time was 15 seconds when using GlusterFS volumes.

We re-ran the same set of tests, but instead of using GlusterFS volumes for the MySQL containers, we used EBS GP2 volumes. When calculating the average from the 10 tests with 10 pods, the “io-pause” time was 239 seconds when using EBS GP2 volumes.
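One way to extract an “io-pause” figure from a sysbench log is to look for the longest run of reporting intervals with zero transactions. The sketch below rests on two assumptions that this post does not confirm: that sysbench keeps printing interval reports with "tps: 0.00" while MySQL is unreachable, and that the report lines carry a "tps:" field followed by the value. The function name io_pause_seconds is illustrative; its argument is the reporting interval in seconds.

```shell
#!/bin/sh
# Longest run of consecutive zero-TPS intervals, in seconds.
# $1: reporting interval in seconds (defaults to 1).
# Input (stdin): sysbench interval reports containing "tps: <value>,".
io_pause_seconds() {
    awk -v step="${1:-1}" '{
        for (i = 1; i < NF; i++)
            if ($i == "tps:") {
                if ($(i + 1) + 0 == 0) {
                    run += step                     # still paused
                    if (run > longest) longest = run
                } else {
                    run = 0                         # IO resumed
                }
            }
    }
    END { print longest + 0 }'
}
```

The resolution of the result is bounded by the reporting interval, so a short interval gives a more precise measurement.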

Another data sample comes from a loop that ran oc get pods --all-namespaces |grep mysql| column -t every second during the “io-pause” period to measure how long it takes for all pods to fully migrate from one node to another. The idea is that, on a worker node failure, all pods from the failed node must migrate, so the time it takes for all pods on the worker node to migrate is important to measure.

As you can see from the following sample image, when using GlusterFS storage it took 15 seconds for all 10 pods to migrate to the other worker node, while with EBS GP2 it took ~7 minutes. These values are also the averages from all 10 tests. We found that the GlusterFS average time is 15 seconds versus an EBS GP2 average time of 421 seconds.
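The polling loop can be reduced to a small filter plus a timer. This is a sketch rather than the script used for the tests: the function name pods_not_running is illustrative, and it assumes the default column layout of oc get pods --all-namespaces (NAMESPACE, NAME, READY, STATUS, RESTARTS, AGE).

```shell
#!/bin/sh
# Count mysql pods that are not in Running status.
# Input (stdin): output of "oc get pods --all-namespaces"
# (assumed columns: NAMESPACE NAME READY STATUS RESTARTS AGE).
pods_not_running() {
    awk '$2 ~ /mysql/ && $4 != "Running" { n++ } END { print n + 0 }'
}

# Possible usage (commented out; requires oc and a login). Start it right
# after deleting the MySQL pods so that Terminating and ContainerCreating
# pods are counted until every replacement pod reports Running.
#
# start=$(date +%s)
# while [ "$(oc get pods --all-namespaces | pods_not_running)" -gt 0 ]; do
#     sleep 1
# done
# echo "all mysql pods Running after $(( $(date +%s) - start ))s"
```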

Conclusion

We covered a lot in this post. We showed a simple way to use StatefulSets to run read/write sysbench stress tests on an OpenShift cluster using two OpenShift Container Storage volume types, GlusterFS and GlusterBlock. We compared the performance of 60 pods running MySQL and sysbench using either GlusterFS or GlusterBlock volumes and showed that GlusterFS has better performance in a scenario of many relatively small MySQL databases running on an AWS cloud environment that is limited to worker nodes with 8 cores, 32GB of RAM, and 3,000 IOPS EBS GP2 volumes.

Last, we did another failover test similar to what was done in Part 3, only this time the test was when the whole cluster was busy and, more important, our 6 worker nodes were all running sysbench at the same time. We referenced two different parameters as the method of testing this:

- The average time IOs were paused (“io-pause”) between sysbench and the MySQL pods.

- The average time it takes to migrate all pods from one worker node to another node and have the 10 pods again in Running status.

For average “io-pause” time, we found that the duration with GlusterFS storage was roughly 15 times shorter than with EBS GP2 storage mounted to the MySQL containers. For migration time, the 10 pods using GlusterFS migrated from one worker node to another 28 times faster than when tested with EBS.
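Both ratios follow directly from the averages reported earlier (15 seconds versus 239 seconds for “io-pause,” and 15 seconds versus 421 seconds for migration). A quick sketch of the arithmetic, with an illustrative helper name:

```shell
#!/bin/sh
# Ratio of two averages, rounded to one decimal place.
ratio() {
    awk -v a="$1" -v b="$2" 'BEGIN { printf "%.1f\n", a / b }'
}

ratio 239 15    # io-pause: EBS GP2 vs GlusterFS, prints 15.9
ratio 421 15    # migration: EBS GP2 vs GlusterFS, prints 28.1
```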

This post concludes our MySQL blog series, which revealed even more:

- StatefulSets are a great tool for creating deployments that need tightly ordered pod names and PVCs that are consistent (i.e., pod mysql-ocs-1 used PVC mysql-ocs-data-mysql-ocs-1) and easy to understand.

- OCS affords a great deal of flexibility in terms of providing storage dynamically and accommodating failure scenarios. As we saw throughout this blog series, failover times can be dramatically reduced when using OpenShift Container Storage compared to the usage of AWS EBS volumes for workload storage.

- We also showed what is the expected performance from 6 worker nodes that both provide and consume storage under heavy load of 60 MySQL/WordPress instances using a modest set of AWS instance types (M5 is part of the general purpose family) and using a low budget AWS storage type (EBS GP2, limit of 3000 IOPS).

About the author

Sagy Volkov is a former performance engineer at ScaleIO, where he initiated the performance engineering group and the ScaleIO enterprise advocates group and architected the ScaleIO storage appliance, reporting to the CTO/founder of ScaleIO. He is now with Red Hat as a storage performance instigator concentrating on application performance (mainly database and CI/CD pipelines) and application resiliency on Rook/Ceph.

He has previously spoken at Cloud Native Storage day (CNS), DevConf 2020, EMC World, and the Red Hat booth at KubeCon.