With the release of Red Hat OpenStack Platform 13 (Queens), we’ve added support in Red Hat OpenStack Platform director for deploying the overcloud controllers as virtual machines in a Red Hat Virtualization cluster. This allows you to run your controllers, along with other supporting services such as Red Hat Satellite, Red Hat CloudForms, Red Hat Ansible Tower, DNS servers, monitoring servers, and of course the undercloud node (which hosts director), all within a Red Hat Virtualization cluster. This can reduce the physical server footprint of your architecture and provide an extra layer of availability.

Please note: this is not about using Red Hat Virtualization as an OpenStack hypervisor (i.e., the compute service, which is already nicely handled by nova via libvirt and KVM), nor is it about hosting the OpenStack control plane on OpenStack compute nodes.

https://www.youtube.com/watch?v=8OCaIvWvRgU

Video courtesy: Rhys Oxenham, Manager, Field & Customer Engagement

Benefits of virtualization

Red Hat Virtualization (RHV) is an open, software-defined platform built on Red Hat Enterprise Linux and the Kernel-based Virtual Machine (KVM) featuring advanced management tools. RHV gives you a stable foundation for your virtualized OpenStack control plane.

By virtualizing the control plane you gain instant benefits, such as:

- Dynamic resource allocation to the virtualized controllers: scale up and scale down as required, including CPU and memory hot-add and hot-remove to prevent downtime and allow for increased capacity as the platform grows.

- Native high availability for Red Hat OpenStack Platform director and the control plane nodes.

- Additional infrastructure services can be deployed as VMs on the same RHV cluster, minimizing the server footprint in the datacenter and making efficient use of the physical nodes.

- Ability to define more complex OpenStack control planes based on composable roles. This capability allows operators to allocate resources to specific components of the control plane; for example, an operator may decide to split out networking services (Neutron) and allocate more resources to them as required.

- Maintenance without service interruption: RHV supports VM live migration, which can be used to move the OSP control plane VMs to a different hypervisor while their original host is under maintenance.

- Integration with third party and/or custom tools engineered to work specifically with RHV, such as backup solutions.

Benefits of subscription

There are many ways to purchase Red Hat Virtualization, but many Red Hat OpenStack Platform customers already have it since it’s included in our most popular OpenStack subscription bundles, Red Hat Cloud Infrastructure and Red Hat Cloud Suite. If you have purchased OpenStack through either of these, you already own RHV subscriptions!

Logical Architecture

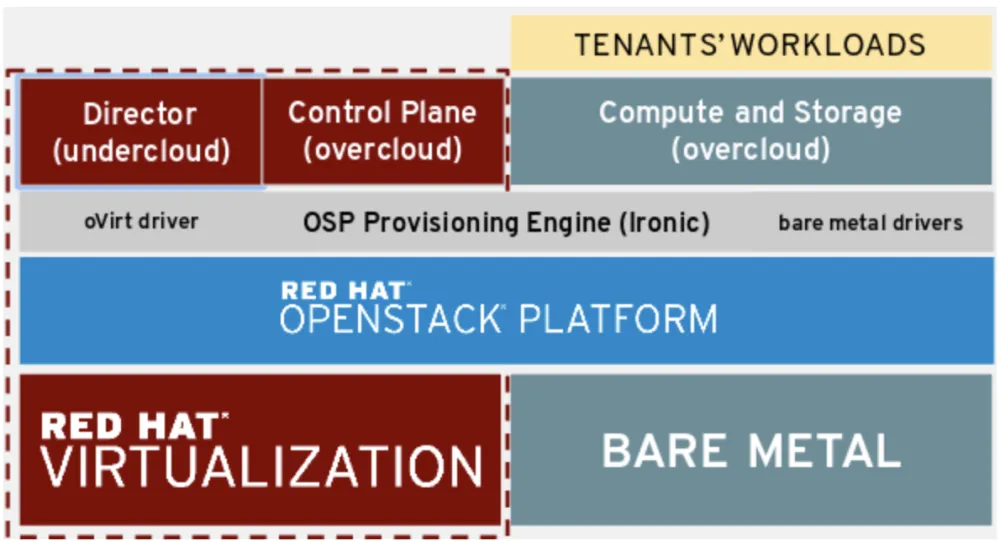

This is how the architecture looks when splitting the overcloud between Red Hat Virtualization for the control plane and utilizing bare metal for the tenants’ workloads via the compute nodes.

Installation workflow

A typical installation workflow looks like this:

Preparation of the Cluster/Host networks

In order to use multiple networks (referred to as “network isolation” in OpenStack deployments), each VLAN (Tenant, Internal, Storage, …) will be mapped to a separate logical network and allocated to the hosts’ physical NICs. Full details are in the official documentation.
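Before creating the logical networks in RHV, it can help to write the VLAN plan down and sanity-check it. The following Python sketch works on a hypothetical plan. The network names, VLAN IDs and host NIC names are illustrative assumptions, not values from the official documentation. It checks that every VLAN ID is valid and unique and that each network is assigned to a physical host NIC.

```python
from __future__ import annotations

# Hypothetical plan: isolated OpenStack network -> VLAN ID and the physical
# host NIC that carries it in the RHV cluster. All values are illustrative.
ISOLATED_NETWORKS = {
    "tenant": {"vlan": 50, "host_nic": "eth1"},
    "internal_api": {"vlan": 20, "host_nic": "eth1"},
    "storage": {"vlan": 30, "host_nic": "eth1"},
    "storage_mgmt": {"vlan": 40, "host_nic": "eth1"},
    "external": {"vlan": 10, "host_nic": "eth2"},
}


def validate_network_plan(networks: dict) -> list[str]:
    """Return a list of problems found in a VLAN-to-logical-network plan."""
    problems = []
    seen = {}  # VLAN ID -> first network that claimed it
    for name, spec in networks.items():
        vlan = spec.get("vlan")
        # 802.1Q VLAN IDs 0 and 4095 are reserved; usable IDs are 1-4094.
        if not isinstance(vlan, int) or not 1 <= vlan <= 4094:
            problems.append(f"{name}: invalid VLAN ID {vlan!r}")
            continue
        if vlan in seen:
            problems.append(f"{name}: VLAN {vlan} already used by {seen[vlan]}")
        else:
            seen[vlan] = name
        if not spec.get("host_nic"):
            problems.append(f"{name}: no physical host NIC assigned")
    return problems


if __name__ == "__main__":
    issues = validate_network_plan(ISOLATED_NETWORKS)
    print("plan OK" if not issues else "\n".join(issues))
```

The check is deliberately independent of RHV itself. It validates the plan you intend to enter in RHV Manager, not what is already configured there.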

Preparation of the VMs

The Red Hat OpenStack Platform control plane usually consists of one director node and (at least) three controller nodes. When these VMs are created in RHV, the same requirements we have for these nodes on bare metal apply.

The director VM should have a minimum of 8 cores (or vCPUs), 16 GB of RAM and 100 GB of storage. More information can be found in the official documentation.

The controllers should have at least 32 GB of RAM and 16 vCPUs. While virtualized controllers require the same amount of resources, RHV gives us the ability to better optimize how that consumption is spread across the underlying hypervisors.
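A small pre-flight check can compare planned VM sizes against the minimums quoted above. This is a sketch. The VmSpec fields and the role labels are hypothetical, and only the numbers stated in this post are encoded. This post gives no controller disk minimum, so disk is checked only for the director.

```python
from __future__ import annotations

from dataclasses import dataclass

# Minimums quoted in this post. No controller disk minimum is stated here,
# so disk is only checked for the director.
MINIMUMS = {
    "director": {"vcpus": 8, "ram_gb": 16, "disk_gb": 100},
    "controller": {"vcpus": 16, "ram_gb": 32},
}


@dataclass
class VmSpec:
    name: str
    role: str  # hypothetical role label: "director" or "controller"
    vcpus: int
    ram_gb: int
    disk_gb: int


def shortfalls(vm: VmSpec) -> dict[str, tuple[int, int]]:
    """Return {resource: (configured, required)} for each under-sized resource."""
    gaps = {}
    for resource, required in MINIMUMS[vm.role].items():
        configured = getattr(vm, resource)
        if configured < required:
            gaps[resource] = (configured, required)
    return gaps


if __name__ == "__main__":
    print(shortfalls(VmSpec("osp13-controller-1", "controller", 8, 32, 100)))
```

For example, shortfalls(VmSpec("osp13-controller-1", "controller", 8, 32, 100)) returns {"vcpus": (8, 16)}, which flags that the controller needs more vCPUs. On RHV that can be corrected by resizing the VM rather than replacing hardware.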

Red Hat Virtualization Considerations

Red Hat Virtualization needs to be configured with some specific settings to host the VMs for the controllers:

Anti-affinity for the controller VMs

We want to ensure there is only one OpenStack controller per hypervisor so that, in case of a hypervisor failure, service disruption is limited to a single controller. The different levels of high availability mechanisms already built into the system can then take care of HA. For this to work, we use RHV to configure an affinity group with "soft negative affinity," effectively giving us “anti-affinity!” Because the rule is soft rather than enforced, it also provides the flexibility to override it in case of system constraints.
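To illustrate what soft negative affinity means for placement, here is a toy Python model. It is not the RHV scheduler, and the host names and capacities are hypothetical. The model spreads the controller VMs across hypervisors when possible. When there are fewer hosts than controllers, it relaxes the rule instead of failing.

```python
from __future__ import annotations

from collections import Counter


def place_soft_anti_affinity(vms: list[str], hosts: list[str],
                             capacity: dict[str, int]) -> dict[str, str]:
    """Greedy spread of a VM group across hosts (toy soft negative affinity).

    Each VM goes to the host that currently runs the fewest group members and
    still has capacity. If every host already runs a member, the VM is placed
    anyway (the rule is relaxed) instead of the placement failing.
    """
    load: Counter = Counter()
    placement = {}
    for vm in vms:
        candidates = [h for h in hosts if load[h] < capacity.get(h, 0)]
        if not candidates:
            raise RuntimeError(f"no capacity left for {vm}")
        # min() returns the first least-loaded host, so ties follow list order.
        target = min(candidates, key=lambda h: load[h])
        placement[vm] = target
        load[target] += 1
    return placement


def violations(placement: dict[str, str]) -> list[str]:
    """Hosts running more than one group member, i.e. where the rule was relaxed."""
    counts = Counter(placement.values())
    return sorted(h for h, n in counts.items() if n > 1)


if __name__ == "__main__":
    controllers = ["osp13-controller-1", "osp13-controller-2", "osp13-controller-3"]
    p = place_soft_anti_affinity(controllers, ["rhv-host-1", "rhv-host-2"],
                                 {"rhv-host-1": 4, "rhv-host-2": 4})
    print(p, violations(p))
```

With three hypervisors, each controller lands on its own host. With only two, one host ends up running two controllers and violations() reports it. That is exactly the case the soft rule is meant to tolerate.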

VM network configuration

One vNIC per VLAN

In order to use multiple networks (referred to as “network isolation” in OpenStack deployments), each VLAN (Tenant, Internal, Storage, …) will be mapped to a separate virtual NIC (vNIC) in the controller VMs and VLAN “untagging” will be done at the hypervisor (cluster) and VM level.

Full details can be found in the official documentation.
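The one-vNIC-per-VLAN layout can be written down as a simple plan per VM. In this sketch the network list and the nicN vNIC naming are assumptions for illustration. The actual logical network names come from your RHV cluster configuration.

```python
from __future__ import annotations

# Hypothetical isolated networks, each backed by its own logical network in RHV.
NETWORKS = ["provisioning", "external", "internal_api", "storage",
            "storage_mgmt", "tenant"]


def vnic_plan(vm_names: list[str],
              networks: list[str]) -> dict[str, list[tuple[str, str]]]:
    """Give every VM one vNIC per network, named nic1, nic2, ... in order.

    VLAN untagging happens outside the guest (hypervisor and VM level), so
    each vNIC in this plan carries exactly one isolated network.
    """
    return {
        vm: [(f"nic{i}", net) for i, net in enumerate(networks, start=1)]
        for vm in vm_names
    }


if __name__ == "__main__":
    controllers = [f"osp13-controller-{n}" for n in range(1, 4)]
    for vm, vnics in vnic_plan(controllers, NETWORKS).items():
        print(vm, ", ".join(f"{nic}->{net}" for nic, net in vnics))
```

Keeping the vNIC order identical on all controllers makes it easier to write a single NIC configuration template for the controller role.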

Allow MAC Spoofing

For the virtualized controllers to pass network traffic in and out correctly, the MAC spoofing filter must be disabled on the networks that are attached to the controller VMs. To do this, we set the network filter to no_filter in the vNIC configuration of the director and controller VMs, then restart the VMs so that the MAC anti-spoofing filter is no longer applied.

Important Note: If this is not done, DHCP and PXE booting of the VMs from director won’t work.
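A single vNIC that still has the anti-spoofing filter is enough to break DHCP and PXE booting, so it may be worth auditing the inventory before introspection. The sketch below works on a hypothetical in-memory inventory that maps each VM to its vNIC profiles and each profile to a network filter name. Collecting that data from RHV-M is left out. The filter name vdsm-no-mac-spoofing in the usage example only stands in for a filter that is still active.

```python
from __future__ import annotations

# Value this post says to set on the vNICs of the director and controller VMs.
REQUIRED_FILTER = "no_filter"


def vms_needing_fix(vm_vnics: dict[str, list[tuple[str, str]]],
                    profile_filters: dict[str, str]) -> dict[str, list[str]]:
    """Return VM -> vNIC names whose profile does not use no_filter.

    vm_vnics maps a VM name to (vnic_name, vnic_profile) pairs, and
    profile_filters maps a vNIC profile to its network filter name. Both use a
    hypothetical inventory format. A profile missing from profile_filters is
    treated as still filtered, so it gets reported rather than silently passed.
    """
    result: dict[str, list[str]] = {}
    for vm, vnics in vm_vnics.items():
        bad = [nic for nic, profile in vnics
               if profile_filters.get(profile) != REQUIRED_FILTER]
        if bad:
            result[vm] = bad
    return result


if __name__ == "__main__":
    inventory = {
        "osp13-director": [("nic1", "ospd-provisioning")],
        "osp13-controller-1": [("nic1", "ospd-provisioning"), ("nic2", "ospd-tenant")],
    }
    filters = {"ospd-provisioning": "no_filter", "ospd-tenant": "vdsm-no-mac-spoofing"}
    print(vms_needing_fix(inventory, filters))
```

Remember that after changing a vNIC's filter the VM still needs a restart, as noted above.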

Implementation in director

Red Hat OpenStack Platform director (TripleO’s downstream release) uses the Ironic Bare Metal provisioning component of OpenStack to deploy the OpenStack components on physical nodes. In order to add support for deploying the controllers on Red Hat Virtualization VMs, we enabled support in Ironic with a new driver named staging-ovirt.

This new driver manages the VMs hosted in RHV much as other drivers manage physical nodes through BMCs supported by Ironic, such as iRMC, iDRAC or iLO. For RHV, this is done by interacting with the RHV Manager directly to trigger power management actions on the VMs.
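To make the idea concrete, the following Python sketch builds, but does not send, the kind of REST request a power driver could send to RHV-M to start or stop a VM. It uses the oVirt/RHV REST API v4 VM actions (a POST to .../vms/{id}/start or .../stop with an <action/> body). It is an illustration under those assumptions, not the staging-ovirt driver's actual implementation. The POWER_ACTIONS mapping and the function name are hypothetical.

```python
from __future__ import annotations

import base64
import urllib.request

# Hypothetical mapping from the power states used in this sketch to VM
# actions of the oVirt/RHV REST API v4.
POWER_ACTIONS = {"power on": "start", "power off": "stop"}


def build_power_request(engine_host: str, vm_id: str, target_state: str,
                        user: str, password: str) -> urllib.request.Request:
    """Build (without sending) a POST to .../vms/<vm_id>/<action> on RHV-M."""
    action = POWER_ACTIONS[target_state]
    url = f"https://{engine_host}/ovirt-engine/api/vms/{vm_id}/{action}"
    # HTTP basic auth with a user@domain account, e.g. admin@internal.
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        url,
        data=b"<action/>",  # VM actions take an (here empty) action element
        method="POST",
        headers={
            "Authorization": f"Basic {token}",
            "Content-Type": "application/xml",
            "Accept": "application/xml",
        },
    )


if __name__ == "__main__":
    req = build_power_request("rhvm.lab.redhat.com", "VM-ID-PLACEHOLDER",
                              "power on", "admin@internal", "secretpassword")
    print(req.get_method(), req.full_url)
    # Sending it would use urllib.request.urlopen(req, context=...) with an
    # SSL context that trusts the RHV-M CA certificate; not done here.
```

The request needs the VM's RHV ID, while instackenv.json carries the VM name (pm_vm_name). A real driver therefore has to look the VM up first, for example with a GET on /ovirt-engine/api/vms?search=name%3Dosp13-controller-1.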

Enabling the staging-ovirt driver in director

Support for the new driver must be enabled in director's Ironic service. As with any other Ironic driver, this is done by simply adding it to the enabled_hardware_types option in the undercloud.conf configuration file:

enabled_hardware_types = ipmi,redfish,ilo,idrac,staging-ovirt
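If you prefer to script this change, a sketch with Python's configparser might look like the block below. It assumes enabled_hardware_types sits in the [DEFAULT] section of undercloud.conf. When the option is absent, it starts from the list shown above, which is an assumption; adjust it to the hardware types you actually use. Also note that configparser does not preserve comments when it writes the file back.

```python
from __future__ import annotations

import configparser
import io

# Hardware types from this post's example, used only when the option is
# missing entirely (an assumption; adjust to the drivers you actually use).
FALLBACK_TYPES = "ipmi,redfish,ilo,idrac"


def enable_hardware_type(conf_text: str, hw_type: str = "staging-ovirt") -> str:
    """Return undercloud.conf text with hw_type added to enabled_hardware_types.

    Assumes the option lives in [DEFAULT]. configparser drops comments when
    writing, so review the output before overwriting a hand-edited file.
    """
    # interpolation=None so values containing '%' are read literally.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(conf_text)
    current = parser["DEFAULT"].get("enabled_hardware_types", FALLBACK_TYPES)
    types = [t.strip() for t in current.split(",") if t.strip()]
    if hw_type not in types:  # keep the edit idempotent
        types.append(hw_type)
    parser["DEFAULT"]["enabled_hardware_types"] = ",".join(types)
    out = io.StringIO()
    parser.write(out)
    return out.getvalue()


if __name__ == "__main__":
    print(enable_hardware_type(
        "[DEFAULT]\nenabled_hardware_types = ipmi,redfish,ilo,idrac\n"))
```

The change only takes effect once openstack undercloud install is run again.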

After adding the new entry and running openstack undercloud install, we can see the staging-ovirt driver listed in the output of openstack baremetal driver list:

(undercloud) [stack@undercloud-0 ~]$ openstack baremetal driver list
+---------------------+-----------------------+
| Supported driver(s) | Active host(s)        |
+---------------------+-----------------------+
| idrac               | localhost.localdomain |
| ilo                 | localhost.localdomain |
| ipmi                | localhost.localdomain |
| pxe_drac            | localhost.localdomain |
| pxe_ilo             | localhost.localdomain |
| pxe_ipmitool        | localhost.localdomain |
| redfish             | localhost.localdomain |
| staging-ovirt       | localhost.localdomain |
+---------------------+-----------------------+
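When automating the deployment, you may want to check for the driver programmatically. The sketch below parses the table output shown above and assumes that exact two-column layout. For scripts, requesting machine-readable output with the client's -f json option is usually more robust than parsing the table.

```python
from __future__ import annotations


def parse_driver_table(output: str) -> dict[str, str]:
    """Map driver name -> active host(s) from the table printed by
    'openstack baremetal driver list' (default table formatter)."""
    drivers: dict[str, str] = {}
    for raw in output.splitlines():
        line = raw.strip()
        # Data rows sit between '|' characters; '+---' borders and the shell
        # prompt line are ignored.
        if not line.startswith("|"):
            continue
        cells = [cell.strip() for cell in line.strip("|").split("|")]
        if len(cells) != 2 or cells[0] == "Supported driver(s)":
            continue  # skip the header row and anything unexpected
        drivers[cells[0]] = cells[1]
    return drivers


def has_driver(output: str, name: str = "staging-ovirt") -> bool:
    """True if the named driver appears in the table output."""
    return name in parse_driver_table(output)
```

A deployment script could run this right after the undercloud installation and stop early if has_driver() returns False, before any RHV-hosted nodes are registered.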

Register the RHV-hosted VMs with director

When defining a RHV-hosted node in director’s instackenv.json file, we simply set the power management type (pm_type) to the “staging-ovirt” driver, provide the host name of the relevant RHV Manager (RHV-M), and include the username and password of an RHV account that can control power functions for the VMs.

{
    "nodes": [
        {
            "name": "osp13-controller-1",
            "pm_type": "staging-ovirt",
            "mac": [
                "00:1a:4a:16:01:39"
            ],
            "cpu": "2",
            "memory": "4096",
            "disk": "40",
            "arch": "x86_64",
            "pm_user": "admin@internal",
            "pm_password": "secretpassword",
            "pm_addr": "rhvm.lab.redhat.com",
            "pm_vm_name": "osp13-controller-1",
            "capabilities": "profile:control,boot_option:local"
        },
        {
            "name": "osp13-controller-2",
            "pm_type": "staging-ovirt",
            "mac": [
                "00:1a:4a:16:01:3a"
            ],
            "cpu": "2",
            "memory": "4096",
            "disk": "40",
            "arch": "x86_64",
            "pm_user": "admin@internal",
            "pm_password": "secretpassword",
            "pm_addr": "rhvm.lab.redhat.com",
            "pm_vm_name": "osp13-controller-2",
            "capabilities": "profile:control,boot_option:local"
        },
        {
            "name": "osp13-controller-3",
            "pm_type": "staging-ovirt",
            "mac": [
                "00:1a:4a:16:01:3b"
            ],
            "cpu": "2",
            "memory": "4096",
            "disk": "40",
            "arch": "x86_64",
            "pm_user": "admin@internal",
            "pm_password": "secretpassword",
            "pm_addr": "rhvm.lab.redhat.com",
            "pm_vm_name": "osp13-controller-3",
            "capabilities": "profile:control,boot_option:local"
        }
    ]
}

A summary of the relevant parameters required for RHV is as follows:

- pm_user: RHV-M username.

- pm_password: RHV-M password.

- pm_addr: hostname or IP of the RHV-M server.

- pm_vm_name: Name of the virtual machine in RHV-M where the controller will be created.
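The three node entries above differ only in name, MAC address and VM name, so they can also be generated and checked with a short script. In this sketch, make_ovirt_node and check_instackenv are hypothetical helpers. The default cpu, memory and disk values simply mirror the example above. The required keys are the ones listed in this post.

```python
from __future__ import annotations

import json

# Keys this post lists as required for RHV-hosted (staging-ovirt) nodes.
REQUIRED_OVIRT_KEYS = ("pm_user", "pm_password", "pm_addr", "pm_vm_name")


def make_ovirt_node(name: str, mac: str, pm_addr: str, pm_user: str,
                    pm_password: str, vm_name: str | None = None) -> dict:
    """Hypothetical helper: one node entry shaped like the example above.

    cpu/memory/disk mirror the example's values; set them to match your VMs.
    """
    return {
        "name": name,
        "pm_type": "staging-ovirt",
        "mac": [mac],
        "cpu": "2",
        "memory": "4096",
        "disk": "40",
        "arch": "x86_64",
        "pm_user": pm_user,
        "pm_password": pm_password,
        "pm_addr": pm_addr,
        # The example uses the same string for the node name and the RHV VM name.
        "pm_vm_name": vm_name or name,
        "capabilities": "profile:control,boot_option:local",
    }


def check_instackenv(text: str) -> list[str]:
    """Hypothetical check: list staging-ovirt nodes missing RHV-specific keys."""
    problems = []
    for i, node in enumerate(json.loads(text).get("nodes", [])):
        if node.get("pm_type") != "staging-ovirt":
            continue  # other drivers have their own required keys
        name = node.get("name", f"node[{i}]")
        for key in REQUIRED_OVIRT_KEYS:
            if not node.get(key):
                problems.append(f"{name}: missing {key}")
        if not node.get("mac"):
            problems.append(f"{name}: no MAC address listed")
    return problems


if __name__ == "__main__":
    # Placeholder values copied from the example above.
    macs = ["00:1a:4a:16:01:39", "00:1a:4a:16:01:3a", "00:1a:4a:16:01:3b"]
    nodes = [make_ovirt_node(f"osp13-controller-{i}", mac, "rhvm.lab.redhat.com",
                             "admin@internal", "secretpassword")
             for i, mac in enumerate(macs, start=1)]
    doc = json.dumps({"nodes": nodes}, indent=4)
    print(check_instackenv(doc) or "instackenv.json looks complete")
```

Generating the file this way keeps the three controller entries consistent and avoids copy-and-paste mistakes in the MAC addresses and VM names.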

For more information on Red Hat OpenStack Platform and Red Hat Virtualization contact your local Red Hat office today!