It is well known that SAP landscapes, apart from being critical to how global companies function, tend towards high levels of complexity. This is not only because of the vast array of different applications that constitute the average SAP installation base (like ERP, BW, GRC, SCM, CRM), but also because these products typically interact with many third-party applications, together forming a large ecosystem.

Since the SAP landscape encompasses data related to HR, finance, supply chain, customer relationships, business warehousing and many other areas, a diverse set of applications accesses this data. The resulting heterogeneity can be very challenging for developers and architects to manage, especially when they need to define integrations between the SAP estate and the applications that connect to it.

The classic approach to creating and using these integrations has always been based on ESB (Enterprise Service Bus) or EAI (Enterprise Application Integration) patterns. Integration platforms can be built on either model, but one of their main problems is a lack of integration abstraction. This makes it very difficult to implement components that can be reused across applications, resulting in a largely ad hoc landscape that is neither portable nor easy to maintain. SAP's historic integration platform, SAP PI/PO (formerly SAP XI), follows these patterns.

Because of their nature, ESB and EAI patterns are not well suited to interacting with cloud-based applications. Since cloud-native deployment is a clear developer trend, companies need to change the way they integrate their applications with the SAP backbone. Additionally, microservices are being broadly adopted because they provide a much more modular approach: since processes are broken down into more atomic parts, the failure of one part does not bring down the entire process.

All this points toward reusability, abstraction, generalization and portability, characteristics that the API-First approach to integration encompasses. In this approach, the APIs for the functions that need to be executed are defined first, and only then is the actual code written. This model is very convenient for developers and users, because they are only presented with sets of APIs: the implementation details are abstracted away, so they only need to write the calls from their own application or code. It also enhances portability between platforms, so an integration does not have to be redefined to use it on-premise or on a different cloud from the one where it was originally created.
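To make the API-First idea concrete, here is a minimal Python sketch, assuming a hypothetical purchase order API: the contract (`PurchaseOrderAPI`) is written first, consumer code depends only on that contract, and an implementation is plugged in afterwards. All names are illustrative; in practice the contract would more likely be an OpenAPI document than a Python class.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class PurchaseOrder:
    """Hypothetical response shape agreed on as part of the API contract."""
    po_number: str
    vendor: str
    total_amount: float
    currency: str


class PurchaseOrderAPI(Protocol):
    """The contract comes first; consumers depend only on this interface."""

    def get_purchase_order(self, po_number: str) -> PurchaseOrder: ...


class InMemoryPurchaseOrderAPI:
    """One possible implementation, written after the contract existed.

    An implementation that calls SAP over RFC or OData could replace it
    without any change to the consumer code below.
    """

    def __init__(self, orders: dict[str, PurchaseOrder]) -> None:
        self._orders = orders

    def get_purchase_order(self, po_number: str) -> PurchaseOrder:
        return self._orders[po_number]


def describe_order(api: PurchaseOrderAPI, po_number: str) -> str:
    # Consumer code: only the contract is visible here, no SAP details.
    po = api.get_purchase_order(po_number)
    return f"PO {po.po_number} from {po.vendor}: {po.total_amount:.2f} {po.currency}"


if __name__ == "__main__":
    api = InMemoryPurchaseOrderAPI(
        {"4500000123": PurchaseOrder("4500000123", "ACME Corp", 1250.0, "EUR")}
    )
    print(describe_order(api, "4500000123"))
```

Swapping `InMemoryPurchaseOrderAPI` for an implementation that talks to SAP leaves `describe_order` untouched, which is the portability argument in a nutshell.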

The importance of an integration platform based on the API-First approach is even greater considering the need to migrate to SAP S/4HANA by 2027. One of S/4HANA's premises is to “keep the core clean,” meaning that no custom code is created in its core (custom code in the core is what made SAP version upgrades so cumbersome). To follow this principle, customers should move their existing custom developments out of the core and build new ones elsewhere, while still being able to connect to and exchange data with the SAP core.

Even customers that are not yet performing this migration can benefit from extracting their custom code from SAP (they will most likely find many parts of it that are not used anymore and can be eliminated), and moving it elsewhere. This can make the process of migration much faster and less complex.

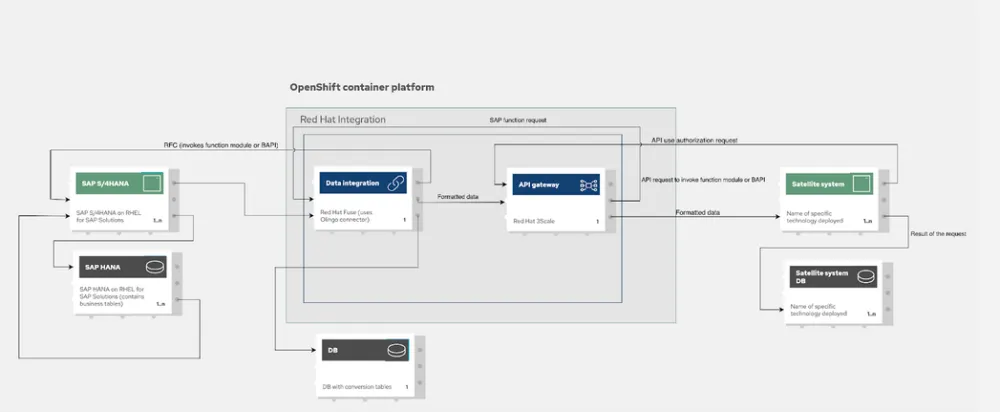

Figure 1 shows a high-level illustration of what an API management platform for SAP could look like, along with some of the main reasons for customers to create and use it.

Figure 1. API management platform for SAP

The satellite systems host the applications that consume data from SAP; these applications do not need to know any details about the structure of that data or of the SAP systems.

Implementing the solution

An integration platform based on Red Hat OpenShift also allows customers to migrate their legacy applications to OpenShift and to run all their new deployments on Red Hat OpenShift clusters. This is in line with another current trend: the need to modernize application development.

Red Hat Integration provides the set of technologies that make the integration of SAP and non-SAP applications possible. The first of the two main technologies used in this solution is Red Hat Fuse, which is based on Apache Camel and has specific components for SAP's main protocols (RFC, OData, IDocs, etc.). It exposes SAP functions and data structures as APIs and translates between the formats used by third-party applications and those used by SAP.
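The translation role described above can be pictured as a field mapping applied in both directions. The sketch below is a hypothetical illustration in plain Python, not Red Hat Fuse or Camel code: the third-party field names are invented, and `EBELN`, `LIFNR` and `BUKRS` are simply borrowed from the SAP purchasing header table EKKO as examples of SAP-side names.

```python
# Hypothetical mapping from a third-party JSON schema to SAP-style field names.
FIELD_MAP = {
    "poNumber": "EBELN",     # purchasing document number
    "vendorId": "LIFNR",     # vendor account number
    "companyCode": "BUKRS",  # company code
}
# Reverse direction, used for responses travelling back to the caller.
REVERSE_MAP = {sap: ext for ext, sap in FIELD_MAP.items()}


def to_sap(payload: dict) -> dict:
    """Translate an inbound third-party payload into SAP field names."""
    unknown = set(payload) - set(FIELD_MAP)
    if unknown:
        # Fail loudly rather than silently dropping data the caller sent.
        raise ValueError(f"unmapped fields: {sorted(unknown)}")
    return {FIELD_MAP[key]: value for key, value in payload.items()}


def from_sap(record: dict) -> dict:
    """Translate an SAP-style record back into the caller's schema.

    SAP fields that the caller's schema does not define are dropped.
    """
    return {REVERSE_MAP[key]: value for key, value in record.items() if key in REVERSE_MAP}


if __name__ == "__main__":
    print(to_sap({"poNumber": "4500000123", "vendorId": "100001"}))
    print(from_sap({"EBELN": "4500000123", "BUKRS": "1000", "WAERS": "EUR"}))
```

Keeping the mapping as data rather than code is one way to make such translations reusable across integrations; whether that suits a given landscape is a design decision, not something the platform imposes.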

The other main component is Red Hat 3Scale, which serves as the API gateway and is the single point of contact for the applications that need to interact with SAP. It maintains an inventory of all the APIs used across the IT landscape and adds role-based access control (RBAC) to ensure that only applications entitled to use an API can call it. It also tracks the usage of each API.
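The gateway responsibilities mentioned here (an API inventory, an entitlement check per application, and usage tracking) can be sketched as follows. This is a toy model under our own assumptions, with hypothetical class and method names; it is not 3Scale's API or configuration model.

```python
from collections import Counter
from typing import Callable


class ForbiddenError(Exception):
    """Raised when an application is not entitled to call an API."""


class ApiGateway:
    def __init__(self) -> None:
        # Inventory: API name -> backend (for example, an integration route).
        self._backends: dict[str, Callable[[dict], dict]] = {}
        # Entitlements: application ID -> names of APIs it may call.
        self._entitlements: dict[str, set[str]] = {}
        # Usage counts per (application ID, API name).
        self.usage: Counter = Counter()

    def register_api(self, api_name: str, backend: Callable[[dict], dict]) -> None:
        self._backends[api_name] = backend

    def grant(self, app_id: str, api_name: str) -> None:
        self._entitlements.setdefault(app_id, set()).add(api_name)

    def call(self, app_id: str, api_name: str, params: dict) -> dict:
        if api_name not in self._backends:
            raise KeyError(f"unknown API: {api_name}")
        if api_name not in self._entitlements.get(app_id, set()):
            raise ForbiddenError(f"{app_id} may not call {api_name}")
        self.usage[(app_id, api_name)] += 1  # only successful authorizations are counted
        return self._backends[api_name](params)
```

A call such as `gateway.call("crm-app", "get-po", {"po": "4500000123"})` succeeds only after `gateway.grant("crm-app", "get-po")`; any other application receives `ForbiddenError` before the backend is reached.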

Figure 2. Integration possibilities with Red Hat Fuse and Red Hat 3Scale

Some use cases of the API management platform

Let's look at some scenarios where an integration platform based on APIs has been implemented.

The first case is an application that needs to run a function in SAP, such as obtaining the details of a purchase order (PO).

The application connects to the API gateway and calls the API that retrieves the details of the PO. If the application is allowed to do so, the API gateway (Red Hat 3Scale) forwards the call, with the parameters received from the application, to the data integration component (Red Hat Fuse). Data integration converts the request into the format used by SAP and opens a remote function call (RFC) connection to the SAP system. The PO is then retrieved by executing a Business Application Programming Interface (BAPI), and the information is sent back to the calling application via the API gateway.
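A minimal sketch of the data integration step, assuming the RFC client is injected as a plain callable so the example runs without an SAP system. The function module `BAPI_PO_GETDETAIL`, its `PURCHASEORDER` parameter and the `PO_HEADER`, `PO_ITEMS` and `RETURN` result names are our assumption of a suitable BAPI for this use case and should be checked against the target system; the article itself does not name one. `alpha_input` imitates SAP's ALPHA conversion, which left-pads numeric document numbers with zeros.

```python
from typing import Any, Callable

# Assumed signature of the injected RFC client: (function_module_name, **params) -> result.
RfcCall = Callable[..., dict[str, Any]]


def alpha_input(value: str, length: int = 10) -> str:
    """Imitate SAP's ALPHA input conversion: left-pad numeric values with zeros."""
    value = value.strip()
    if len(value) > length:
        raise ValueError(f"{value!r} is longer than {length} characters")
    return value.zfill(length) if value.isdigit() else value


def get_po_details(po_number: str, rfc_call: RfcCall) -> dict[str, Any]:
    """Translate a REST-style request into a BAPI call and reshape the result."""
    result = rfc_call("BAPI_PO_GETDETAIL", PURCHASEORDER=alpha_input(po_number))
    # BAPIs report problems in a RETURN table instead of raising exceptions;
    # message types "E" (error) and "A" (abort) mean the call failed.
    errors = [m for m in result.get("RETURN", []) if m.get("TYPE") in ("E", "A")]
    if errors:
        raise RuntimeError(errors[0].get("MESSAGE", "BAPI call failed"))
    header = result.get("PO_HEADER", {})
    return {
        "poNumber": header.get("PO_NUMBER"),
        "vendor": header.get("VENDOR"),
        "itemCount": len(result.get("PO_ITEMS", [])),
    }


def fake_rfc(name: str, **params: Any) -> dict[str, Any]:
    """Hypothetical stub standing in for a real RFC connection to SAP."""
    if name != "BAPI_PO_GETDETAIL":
        raise ValueError(f"unexpected function module {name}")
    return {
        "PO_HEADER": {"PO_NUMBER": params["PURCHASEORDER"], "VENDOR": "0000100001"},
        "PO_ITEMS": [{"PO_ITEM": "00010"}, {"PO_ITEM": "00020"}],
        "RETURN": [],
    }
```

With the stub, `get_po_details("4500123", fake_rfc)` returns `{"poNumber": "0004500123", "vendor": "0000100001", "itemCount": 2}`. In a real deployment the stub would be replaced by a connection from an RFC client library (PyRFC, for example), and this logic would live in a Red Hat Fuse route rather than a standalone function.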

Figure 3. Application invoking an SAP function

The second use case is an application that needs to directly access a data structure in the SAP backend. The application connects to the API gateway and calls the required API. If the application is allowed to use the API, the call is passed on to data integration, which performs a second security check, this time verifying that the calling application has access to the SAP client from which it is trying to read data (to implement this check, an additional database with authorization tables has been deployed on Red Hat OpenShift). If it does, the query is translated into OData format and sent to SAP. Once the data is retrieved, data integration sends it back to the calling application via the API gateway.
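The second security check and the OData translation could look roughly like this. SQLite stands in for the additional database deployed on OpenShift, and the table layout, service name (`ZPO_SRV`) and entity set (`PurchaseOrderSet`) are hypothetical. `sap-client` is the URL parameter SAP Gateway uses to select the client, and `/sap/opu/odata/sap/` is the usual path prefix for its services; the sketch only builds the URL and does not send it.

```python
import sqlite3
from urllib.parse import quote, urlencode


def create_auth_db() -> sqlite3.Connection:
    """In-memory stand-in for the access-control database on OpenShift."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE client_access ("
        " app_id TEXT NOT NULL, sap_client TEXT NOT NULL,"
        " PRIMARY KEY (app_id, sap_client))"
    )
    return conn


def is_authorized(conn: sqlite3.Connection, app_id: str, sap_client: str) -> bool:
    """Second security check: may this application read from this SAP client?"""
    row = conn.execute(
        "SELECT 1 FROM client_access WHERE app_id = ? AND sap_client = ?",
        (app_id, sap_client),
    ).fetchone()
    return row is not None


def build_odata_url(base_url: str, entity_set: str, filters: dict[str, str], sap_client: str) -> str:
    """Translate simple equality filters into an OData query URL."""
    clauses = []
    for field, value in filters.items():
        escaped = value.replace("'", "''")  # OData escapes a quote in a string literal by doubling it
        clauses.append(f"{field} eq '{escaped}'")
    query = urlencode(
        {"$filter": " and ".join(clauses), "sap-client": sap_client},
        quote_via=quote,
        safe="$'",  # keep "$filter" and the quotes readable; spaces become %20
    )
    return f"{base_url.rstrip('/')}/{entity_set}?{query}"


def plan_query(conn: sqlite3.Connection, app_id: str, sap_client: str,
               base_url: str, entity_set: str, filters: dict[str, str]) -> str:
    """Run the access check first, then build the OData request URL."""
    if not is_authorized(conn, app_id, sap_client):
        raise PermissionError(f"{app_id} has no access to SAP client {sap_client}")
    return build_odata_url(base_url, entity_set, filters, sap_client)
```

For an application granted client `100`, `plan_query(conn, "bi-app", "100", "https://sap.example.com/sap/opu/odata/sap/ZPO_SRV", "PurchaseOrderSet", {"Vendor": "100001"})` yields `https://sap.example.com/sap/opu/odata/sap/ZPO_SRV/PurchaseOrderSet?$filter=Vendor%20eq%20'100001'&sap-client=100`.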

Figure 4. Application retrieving data from SAP

Another interesting use case involves applications that frequently consume business intelligence data from an SAP BW system. Since queries in this kind of system are expensive and can cause performance issues, a good solution is to cache their results to avoid processing bottlenecks in the SAP backend.

A related use case, described at the end of this section, involves file and message manipulation between source (non-SAP) and target (SAP) applications, such as the one implemented at Tokyo/TEL.

For the BW caching scenario, and to keep the implementation simple, we do not use the API gateway. Instead, the applications use the JDBC/ODBC connector that comes with Red Hat Integration, which allows direct connections to SAP systems by using the Python OData library. When the application sends a request to the connector, the connector first checks whether the requested data is already present in a PostgreSQL cache database deployed on the Red Hat OpenShift cluster. If it is, the data is sent back to the application; otherwise, the query is sent in OData format to the SAP BW system, and the result is stored in the cache database and returned to the application.
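The cache lookup described above follows the cache-aside pattern. In this sketch SQLite stands in for the PostgreSQL cache database so the code runs anywhere, `fetch` represents the expensive BW query, and the time-to-live is our own addition: the article does not say how or when cached results expire.

```python
import json
import sqlite3
import time
from typing import Callable


class QueryCache:
    """Cache-aside wrapper around an expensive query function."""

    def __init__(self, fetch: Callable[[str], list], ttl_seconds: float = 3600) -> None:
        self._fetch = fetch  # runs the query against the backend (for example, SAP BW)
        self._ttl = ttl_seconds
        self._db = sqlite3.connect(":memory:")  # stand-in for the PostgreSQL cache DB
        self._db.execute(
            "CREATE TABLE cache (query TEXT PRIMARY KEY, result TEXT, stored_at REAL)"
        )

    def get(self, query: str) -> list:
        row = self._db.execute(
            "SELECT result, stored_at FROM cache WHERE query = ?", (query,)
        ).fetchone()
        if row is not None and time.time() - row[1] < self._ttl:
            return json.loads(row[0])  # cache hit: the backend is not contacted
        result = self._fetch(query)  # cache miss or stale entry: query the backend
        self._db.execute(
            "INSERT OR REPLACE INTO cache VALUES (?, ?, ?)",
            (query, json.dumps(result), time.time()),
        )
        self._db.commit()
        return result
```

Caching by the query text is the simplest possible key; a production cache would likely normalize queries and include the SAP client or user context in the key so that results are never shared across authorization boundaries.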

Figure 5. Application using cached data from SAP BW system

Finally, another scenario worth mentioning is using Red Hat Fuse to replace legacy batch operations for file exchange, which are ad hoc and not portable at all. In this case, Red Hat Fuse performs all the necessary file manipulation so that third-party applications and SAP can send and receive files in their own formats, instead of relying on complex logic that works only for a limited set of workflows.
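As a small illustration of the kind of file manipulation involved, the sketch below converts a partner's CSV invoice file into an XML document. Both formats are hypothetical (real SAP inbound formats, such as IDocs, are considerably richer), and in the architecture described here the equivalent transformation would be a Red Hat Fuse route rather than a standalone Python function.

```python
import csv
import io
import xml.etree.ElementTree as ET
from decimal import Decimal


def csv_invoices_to_xml(csv_text: str) -> str:
    """Convert a partner's CSV invoice file into a hypothetical XML target format.

    Expected CSV columns (assumed): invoice_no, vendor, amount, currency.
    """
    root = ET.Element("Invoices")
    for row in csv.DictReader(io.StringIO(csv_text)):
        invoice = ET.SubElement(root, "Invoice", number=row["invoice_no"].strip())
        ET.SubElement(invoice, "Vendor").text = row["vendor"].strip()
        # Decimal avoids float rounding surprises; amounts are normalized to two places.
        amount = Decimal(row["amount"].strip()).quantize(Decimal("0.01"))
        currency = row["currency"].strip().upper()
        ET.SubElement(invoice, "Amount", currency=currency).text = str(amount)
    return ET.tostring(root, encoding="unicode")
```

For the input `invoice_no,vendor,amount,currency` followed by `INV-1,ACME,100.5,eur`, the function produces one `Invoice` element with number `INV-1`, vendor `ACME` and an amount of `100.50` in currency `EUR`.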

Figure 6. Example of a batch process for invoices that can be transformed using APIs in Red Hat Fuse

Summary

Integration of SAP and non-SAP applications is a very important topic both for customers that are still on legacy SAP systems and preparing to migrate to SAP S/4HANA and for those who have already completed the move. In both cases, the SAP backend needs to interact with many other applications. Because the number of these applications is growing rapidly, along with the need to be flexible and deploy in a more agile way, customers need a platform that is easy to manage and that supports a wide range of integrations.

Red Hat Integration on OpenShift covers both of these scenarios. It provides a way to create integrations between SAP and non-SAP applications that are reusable and easy to maintain because they are based on APIs. It also provides a platform to develop and run all the applications that need to connect to SAP, allowing the use of cloud-native techniques and microservices that follow DevOps methodologies. This will help companies shorten their development cycles and be much more flexible.

If you are interested in more solutions built with these and other products in Red Hat's portfolio, visit the Portfolio Architecture website.


About the author

Ricardo Garcia Cavero joined Red Hat in October 2019 as a Senior Architect focused on SAP. In this role, he developed solutions with Red Hat's portfolio to help customers in their SAP journey. Cavero now works as a Principal Portfolio Architect on the Portfolio Architecture team.