This post is a companion to the talk I gave at Cloud Native Rejekts NA ’19 in San Diego on how to work around common issues when deploying applications with the Istio service mesh in a Kubernetes cluster.

The Istio Service Mesh

The rise of microservices, powered by Kubernetes, brings new challenges. One of the biggest changes with distributed applications is the need to understand and control the network traffic these microservices generate. Service meshes have stepped in to address that need.

While a number of open-source service mesh projects are available (Tim Hockin posited in his Cloud Native Rejekts NA’19 talk that Kubernetes has its own native, if primitive, service mesh), and more continue to be developed, Istio offers perhaps the most varied feature set. It offers seamless encryption over the wire, service-to-service authentication and authorization, per-connection metrics, request tracing, and a wide array of request routing and traffic management controls. (See my blog post on Getting Started with Istio Service Mesh for more details on what Istio can do and why you may or may not want to use it.)

Even though the Istio project is now more than two years old and its development team has spent much of the past year focused on improving its operability, Istio’s complexity still makes it challenging to adopt and operate. Most basic HTTP-based services work with Istio without issue, but some common use cases still present problems, and it can be hard to know where to start debugging configurations or other operational issues. First, we will go over how to start looking for answers when Istio does not behave as expected. Then we will cover some options for applications that do not “just work” with Istio.

Troubleshooting and Debugging

It can be tricky to figure out why Istio traffic is not behaving as expected or sometimes even to realize there is an issue. Because Istio itself consists of multiple control plane components in addition to its data plane, which itself is made up of as many Envoy proxy sidecar containers as there are application pods in your mesh, tracking down and diagnosing problems takes some practice. This section will show you where to get started, but it is not intended to be a comprehensive guide.

Note that these examples and commands were tested against Istio 1.3.5, unless otherwise noted. They should work for slightly older and newer Istio releases, although the output format may differ in some cases. See my recent blog post for an overview of changes and new features in the recently-released Istio 1.4.0.

Check the Control Plane Status

- Run kubectl get pods -n istio-system to check pods. Everything should have a state of Running or Completed. If you see pods with errors, follow standard Kubernetes pod troubleshooting steps to diagnose.

- Starting with Istio 1.4.0, you can use the experimental istioctl command analyze:

      $ istioctl version
      client version: 1.4.0
      citadel version: 1.4.0
      egressgateway version: 1.4.0
      galley version: 1.4.0
      ingressgateway version: 1.4.0
      nodeagent version:
      nodeagent version:
      nodeagent version:
      pilot version: 1.4.0
      policy version: 1.4.0
      sidecar-injector version: 1.4.0
      telemetry version: 1.4.0
      data plane version: 1.4.0 (3 proxies)

      $ istioctl x analyze -k
      Warn [IST0102] (Namespace default) The namespace is not enabled for Istio injection. Run 'kubectl label namespace default istio-injection=enabled' to enable it, or 'kubectl label namespace default istio-injection=disabled' to explicitly mark it as not needing injection
      Error: Analyzer found issues.

      $ kubectl label namespace default istio-injection=enabled
      namespace/default labeled

      $ istioctl x analyze -k
      ✔ No validation issues found.

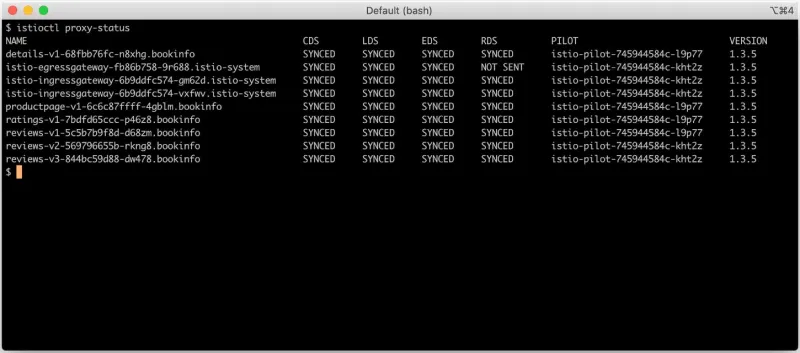

Check the Status of the Istio Proxies

- Run istioctl proxy-status (istioctl ps for short) to see the current Istio Pilot sync status of each pod’s istio-proxy container. The output includes the following fields:

  - CDS: Envoy Cluster Discovery Service. Envoy defines a “cluster” as a group of hosts that accept traffic for a given endpoint or service. Don’t confuse an Envoy cluster with a Kubernetes cluster; in a Kubernetes cluster, an Envoy “cluster” will typically, though not always, be a Kubernetes deployment or other replica set.

  - LDS: Envoy Listener Discovery Service. Envoy defines a “listener” for each configured host:port endpoint. A Kubernetes cluster will typically have an Envoy listener for each target service port in an Envoy cluster.

  - EDS: Envoy Endpoint Discovery Service. Envoy defines an “endpoint” as a member of an Envoy cluster, to which it can connect for a service. In a Kubernetes cluster, an Envoy endpoint would be a pod backing the destination service.

  - RDS: Envoy Route Discovery Service. Envoy defines a “route” as the set of Envoy rules that match a service or virtual host to an Envoy “cluster.”

- Retrieve the current Envoy xDS configuration for a given pod’s proxy sidecar with the istioctl proxy-config cluster|listener|endpoint|route commands.

- If you have applied an Istio configuration, but it does not seem to be taking effect, and istioctl proxy-status shows all proxies as synced, there may be a conflict with the rule. Check the Pilot logs with the following command:

      kubectl logs -l app=pilot -n istio-system -c discovery

  If you see a non-empty ProxyStatus block, Pilot cannot reconcile or apply configurations for the named Envoy resources.

- If Pilot doesn’t report any conflicts or other configuration issues, the proxies may be having a connection issue. You can check the log of the istio-proxy container in the source and destination pods for issues. If you don’t see anything helpful, you can increase the logging verbosity of the istio-proxy sidecar, which listens on port 15000 of the pod. (You may have to use kubectl port-forward to be able to connect to the sidecar.) Use a POST request against the proxy port to update the logging level:

      curl -s -XPOST 'http://localhost:15000/logging?level=debug'

- Istio telemetry also collects the Envoy access logs, which include the connection response flags. (See the Envoy access log documentation for the list of RESPONSE_FLAGS values.) If you’re using Mixer telemetry, use the following command to see the log entries:

      kubectl logs -l app=telemetry -n istio-system -c mixer

  If your cluster has a Prometheus instance configured to scrape Istio’s metrics, you can query that.
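In a mesh with many proxies, the full proxy-status table can get long. As a small sketch, the shell function below filters that output down to the proxies with at least one xDS column that is not SYNCED. It assumes the other status values are STALE and NOT SENT, and the function name unsynced_proxies is made up for this example.

```shell
# Read `istioctl proxy-status` output on stdin and print only the rows
# for proxies that have at least one xDS column out of sync.
# Assumption: out-of-sync columns read STALE or NOT SENT.
unsynced_proxies() {
  # NR > 1 skips the header row; the regex keeps rows with a bad status.
  awk 'NR > 1 && /STALE|NOT SENT/'
}
```

Usage would look like istioctl proxy-status | unsynced_proxies. An empty result suggests that every proxy has acknowledged its latest configuration from Pilot.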

Resources for Istio Troubleshooting

- https://istio.io/docs/reference/commands/istioctl/

- https://istio.io/docs/ops/common-problems/

- https://istio.io/docs/ops/diagnostic-tools/proxy-cmd/

Common Application Problems in Istio Meshes

Istio Proxy Start-up Latency

The Issue: The application container in a pod tries to make initial network connections at start time, but it fails to reach the network.

The Cause: The istio-proxy container in a pod intercepts all (with occasional exceptions) incoming and outgoing network traffic for the pod because of iptables rules set up in the pod’s network namespace. However, because the application container(s) in a pod may be up and running before the Istio proxy sidecar has started and pulled its initial configuration from the Pilot service, outgoing connections from the application containers will time out or get a connection refused error.

The Best Solution: Your applications should retry their initial requests automatically until they succeed. (Networks, both local-area and wide-area, can still have regular issues, even without Istio proxies.)
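Retry logic ideally lives in the application code itself. Where that is not yet possible, a rough shell-level equivalent is a wrapper that re-runs an initialization command with an increasing delay. The sketch below is illustrative only: retry and the RETRY_DELAY variable are hypothetical names, not part of Istio or Kubernetes.

```shell
# retry MAX_ATTEMPTS COMMAND [ARGS...]
# Run COMMAND until it succeeds or MAX_ATTEMPTS is reached, doubling the
# delay between attempts. RETRY_DELAY (seconds, default 1) is a
# hypothetical knob for the first delay.
retry() {
  max_attempts=$1
  shift
  attempt=1
  delay=${RETRY_DELAY:-1}
  until "$@"; do
    if [ "$attempt" -ge "$max_attempts" ]; then
      echo "giving up after $attempt attempts: $*" >&2
      return 1
    fi
    echo "attempt $attempt failed; retrying in ${delay}s" >&2
    sleep "$delay"
    attempt=$((attempt + 1))
    delay=$((delay * 2))
  done
}
```

For example, retry 5 wget -q -O /dev/null http://config-service/ready would try a (made-up) dependency URL up to five times before giving up.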

The Workaround: You can wrap the containers’ entrypoint commands in the Kubernetes PodSpec so they will wait for the proxy’s port 15000 to be live before they execute the application:

    spec:
      containers:
      - name: my-app
        command:
        - /bin/sh
        - -c
        args:
        - |
          # Poll the Envoy admin endpoint until the proxy reports LIVE.
          while ! wget -q -O - http://localhost:15000/server_info | \
                grep -q '"state": "LIVE"'; do
            echo "Waiting for proxy"
            sleep 1
          done
          echo "Istio-proxy ready"
          exec /entrypoint

You can use nc, curl, or some other simple HTTP-compatible client in place of wget. Note that having these network clients installed in your container image is generally considered a poor security practice.

Kubernetes Readiness/Liveness Probes vs. mTLS

The Issue: If containers in pods which require mTLS connections also have Kubernetes HTTP readiness or liveness probes, the probes will fail even if the containers are healthy.

The Cause: Kubernetes probes come from the kubelet process on the Kubernetes node. Because the kubelet does not generally get issued an Istio mTLS certificate, it cannot make a valid mTLS connection to the health check endpoints in the pods.

The Best Solution: Use separate container ports for the application service and for its health checks. You can then disable mTLS requirements on the health check port while leaving the application port secured. Alternatively, convert HTTP probes to Exec probes when possible, although this approach can create a performance hit.

The Workaround: Istio introduced the option to rewrite HTTP probes so that the kubelet sends them to the pilot-agent process in the pod’s Istio sidecar, which then forwards them to the application container. The effects of this solution at scale are not well known.

The probe rewrites can either be enabled globally for a mesh by passing the option --set values.sidecarInjectorWebhook.rewriteAppHTTPProbe=true to helm, or per deployment by adding the annotation sidecar.istio.io/rewriteAppHTTPProbers: "true" to the pod template’s metadata.
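As a sketch of the per-deployment option, the annotation can be added to an existing Deployment’s pod template with a merge patch. The function names below are invented for this example, and the default namespace is an assumption. Changing the pod template triggers a rollout, which the sidecar injector needs anyway in order to see the annotation.

```shell
# Print a merge patch that adds the probe-rewrite annotation to a
# workload's pod template metadata (not to the Deployment's own metadata).
probe_rewrite_patch() {
  cat <<'EOF'
{"spec":{"template":{"metadata":{"annotations":{"sidecar.istio.io/rewriteAppHTTPProbers":"true"}}}}}
EOF
}

# enable_probe_rewrite DEPLOYMENT [NAMESPACE]
# Apply the patch with kubectl; NAMESPACE defaults to "default".
enable_probe_rewrite() {
  kubectl patch deployment "$1" -n "${2:-default}" \
    --type merge -p "$(probe_rewrite_patch)"
}
```

For example, enable_probe_rewrite my-app would patch the my-app Deployment in the default namespace.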

Mixed-Protocol Ports

The Issue: If a container port accepts more than one protocol, Pilot will reject one or both configurations for the target.

The Cause: An Envoy listener can handle only one protocol, and Envoy has some very specifically defined protocols. For example, even though HTTP is an application protocol built on TCP, Envoy considers these two different protocols, and therefore it cannot support ports that can accept both HTTP and non-HTTP TCP traffic.

The Best Solution: Use different container ports for different protocols (as defined by Envoy).

The Workaround: Creative additions of non-Istio proxies (e.g., HAProxy or Nginx) can allow you to add a different endpoint for one protocol, and the proxy can still send the traffic to the original back-end port. This proxy needs to sit in the original pod if it cannot “rewrite” or otherwise make the traffic compatible with the Envoy-configured protocol for the original application port. Otherwise, for example, if it is terminating non-Istio TLS, it can run as a separate deployment plus service in the cluster.

Non-TCP Protocols

The Issue: Istio cannot see or control non-TCP network traffic.

The Cause: Envoy currently does not have full support for UDP or other non-TCP IP protocols.

The Solution: For control of UDP traffic, use Kubernetes Network Policies.
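A minimal sketch of such a policy follows, written as a shell function that prints the manifest so it can be piped to kubectl apply -f -. The function name, the app label key, and the example values are assumptions for illustration. Because selecting a pod with an Ingress policy isolates it for all ingress traffic, the sketch also adds a rule that leaves every TCP port open so Istio keeps handling TCP. Network Policies are only enforced when the cluster’s network plugin supports them.

```shell
# render_udp_policy APP NAMESPACE UDP_PORT CLIENT_APP
# Print a NetworkPolicy that lets only pods labeled app=CLIENT_APP reach
# UDP_PORT on pods labeled app=APP, while leaving all TCP ports open.
render_udp_policy() {
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: $1-udp-$3
  namespace: $2
spec:
  podSelector:
    matchLabels:
      app: $1
  policyTypes:
  - Ingress
  ingress:
  # Allow the chosen UDP port only from the named client pods.
  - from:
    - podSelector:
        matchLabels:
          app: $4
    ports:
    - protocol: UDP
      port: $3
  # Leave every TCP port open; Istio continues to manage TCP traffic.
  - ports:
    - protocol: TCP
EOF
}
```

For example, render_udp_policy statsd metrics 8125 my-app | kubectl apply -f - would restrict UDP port 8125 on the (hypothetical) statsd pods to clients labeled app=my-app.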

Pod Security Policies or Security Contexts Break istio-init

The Issue: Restrictive pod security policies (PSPs) or Security Contexts prevent the istio-init container from running iptables and exiting successfully, preventing the pod from starting properly.

The Cause: The istio-init container requires the NET_ADMIN capability to create the iptables rules in the pod’s network namespace that route traffic through the Istio proxy.

The Solution: Deploy the Istio CNI plugin in your cluster so it can manage iptables rules in place of the istio-init containers.

Services With Their Own TLS Certificates

The Issue: In clusters with global mTLS enforcement, services with their own TLS certificates not issued by Istio’s Citadel service cannot talk to other services in the mesh.

The Cause: When mTLS is enabled, the Istio proxies offer TLS certificates signed by the Citadel Certificate Authority (CA) for all connections, whether they are client or server. Certificates signed by another CA will be rejected, and the connections will be dropped or refused.

The Best Solution: Either use your application’s CA as the Istio Citadel CA (not helpful if your mesh has more than one special snowflake CA), or convert your applications to use Istio’s mTLS encryption and authentication/authorization features.

The Workaround: Set the mTLS mode to “Permissive” on affected services. This tactic is not generally recommended, because it creates risk for applications that do not manage their own client authentication and encryption reliably.
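As a rough sketch, a per-service permissive setting can be expressed with the authentication.istio.io/v1alpha1 Policy resource used by the Istio 1.3 and 1.4 releases this post covers. The function name and the example service and namespace are assumptions. The policy only covers the server side; what clients send is governed separately by DestinationRule TLS settings.

```shell
# render_permissive_policy SERVICE NAMESPACE
# Print an Istio authentication Policy that lets SERVICE accept both
# mTLS and non-mTLS traffic (assumes the v1alpha1 Policy API).
render_permissive_policy() {
  cat <<EOF
apiVersion: authentication.istio.io/v1alpha1
kind: Policy
metadata:
  name: $1-permissive
  namespace: $2
spec:
  targets:
  - name: $1
  peers:
  - mtls:
      mode: PERMISSIVE
EOF
}
```

For example, render_permissive_policy legacy-tls default | kubectl apply -f - would apply the policy to a (hypothetical) legacy-tls service.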

Headless Services

The Issue: Istio does not have good support for headless services, which do not have a ClusterIP and are generally deployed to Kubernetes as StatefulSets. They are typically used for distributed datastore applications such as Apache Zookeeper, MongoDB, and Apache Cassandra.

The Cause: The applications that are generally deployed as headless services may have identical PodSpecs, but the running pods may have specialized or fluid roles. For example, in a MongoDB cluster, one pod (Mongo host) will be the primary, capable of handling write requests. If that pod fails, the other pods will elect a new primary in its place to minimize downtime. In a Cassandra ring, each pod (Cassandra node) will have its own unique subset of the ring data, determined by the ring’s replication factor and the node’s token.

Regardless of the datastore’s architecture, all of these headless services share a common need for each individual pod to be addressed directly. This requirement does not (bad pun alert) mesh with Istio’s model of service load balancing across interchangeable backends.

The Solution: To get the benefits of Istio’s mTLS and telemetry with headless services, you will have to provide your own service discovery for the StatefulSet’s pods and their clients. You will also need to create an Istio ServiceEntry object for each pod (e.g., cassandra-0, cassandra-1, etc.). The pods can then be addressed in the standard Istio/Kubernetes notation: cassandra-0.data-namespace.svc.cluster.local, etc.

For headless services that will receive connections from outside the mesh through an Istio ingressgateway, you will also need to create an Istio VirtualService object for each ServiceEntry/pod.

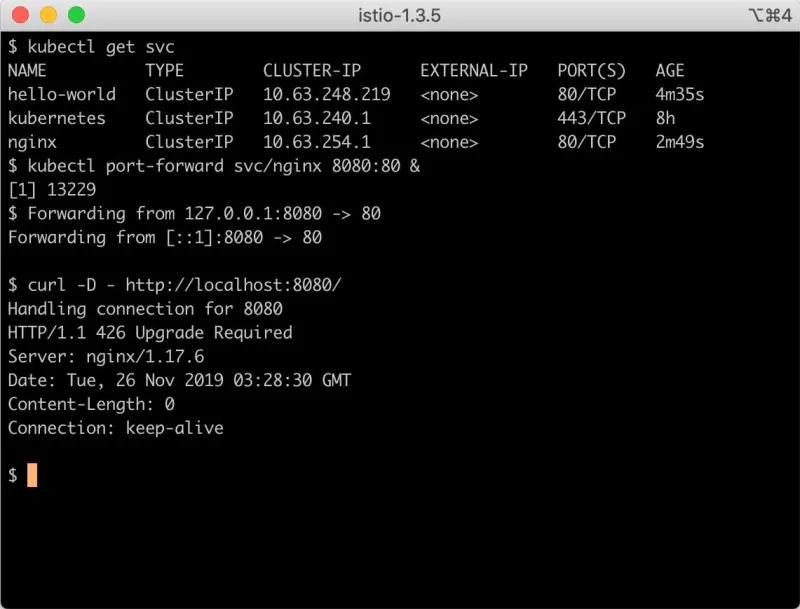

Bonus: Nginx and Upgrade Required

The Issue: Requests that get routed through an Nginx pod return the HTTP Status “426 Upgrade Required.”

The Cause: By default, Nginx still uses HTTP/1.0 for upstream connections. Envoy proxy does not support HTTP/1.0.

The Solution: Force Nginx to use HTTP/1.1 for upstream connections.

    server {
        listen 80;

        location / {
            proxy_pass http://hello-world;
            proxy_http_version 1.1; # Add me for Envoy compatibility!
        }
    }

Long-term Strategies for Success With Istio

The following practices can help you ease your organization’s transition to Istio and reap its benefits.

- Keep Istio resource manifests with all other Kubernetes manifests for each application.

- Enforce version control.

- Apply identical configurations across pre-production environments and production to prevent surprises.

- Create an internal “library” of Istio resource manifest templates for different application types.

- Leverage the metrics and details Istio collects to show value and drive stack improvements.

- Suggest features and improvements to Istio.

While Istio’s power and myriad features can be extremely beneficial in some environments, Istio still presents a number of challenges. The sections above can help you work through these. As the past year of Istio releases has shown, the community’s working groups continue to address the common pain points around operability and are very receptive to suggestions and feedback, which will help improve Istio and further drive its adoption.
