This article is the fourth in a six-part series presenting various use cases for confidential computing, a set of technologies designed to protect data in use (memory encryption, for example), and explaining what needs to be done to get the technologies’ security and trust benefits.

In this article, we focus on establishing a chain of trust and introduce a very simple pipeline called REMITS, which we can use to compare and contrast various forms of attestation against a single frame of reference.

- Part 1: Confidential computing primer

- Part 2: Attestation in confidential computing

- Part 3: Confidential computing use cases

REMITS, a simplified model for chains of trust

Some principles and techniques used to implement a chain of trust in confidential computing are general enough that they apply to all confidential computing platforms. Most of them can be traced back to the initial efforts in trusted computing, which we covered in the "Brief history of trusted computing" section of the first article in this series.

Attestation builds a chain of trust incrementally, as an unbroken sequence from a root of trust all the way to actual trust in a system, which is generally expressed by delivering secrets. We will now explore the fundamental principles of this process and propose a simplified chain of trust model to compare and contrast different implementations more easily.

To be able to compare wildly differing implementations, let us focus on a very simple chain of trust pipeline identified by the acronym REMITS, where each letter identifies one of six key steps:

- Root of trust: This is a shared, immutable piece of information (such as a private key from a chip manufacturer where the public key is known), which is used to validate the whole chain of trust. This is a single point of failure for the whole scheme. For example, if a private key is leaked, the trust in the entire chain is lost.

- Endorsement: An endorsement makes it possible to create per-device information that can reliably be traced back to the root of trust. For example, a per-chip endorsement key signed by a manufacturer’s key confirms the manufacturer’s endorsement for that chip.

- Measurement: A measurement generates data that reliably identifies an attester’s particular configuration. In the case of confidential computing, this will typically include a cryptographic hash of the software stack, including configuration parameters such as command line or security options. The measurement must be reliably traced back to an endorsed device, and from there to the root of trust. This forms the evidence provided by the attester in the remote attestation procedures (RATS) model.

- Identity: The RATS verification process proves the attester’s identity by comparing submitted evidence with known reference values. In the case of remote attestation, this identity is typically represented as some internal token in the attestation service.

- Trust: The actual trust is not built from the identity alone, but, following the RATS model, by additionally applying policies to appraise the evidence. For example, in the case of confidential containers, we may want more relaxed policies during development that grant additional rights to developers. In other words, development deployments receive a higher level of trust than regular deployments because different policies apply.

- Secrets: Manifesting trust requires sharing various secrets that will be handed to the attester, allowing it to access confidential data. Secrets typically include disk encryption keys that the workloads need to run. This ensures that workloads that are not attested cannot access or leak confidential data.

While extremely simplified, this model is widely applicable, allowing us to compare various implementations.
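To make the six steps concrete, here is a minimal Python sketch that models REMITS as an ordered pipeline in which a step can only succeed if every earlier step did. The names `Step`, `run_pipeline` and `acronym`, and the boolean checks, are illustrative assumptions and do not come from any attestation implementation.

```python
from enum import Enum


class Step(Enum):
    """The six REMITS steps, in pipeline order."""
    ROOT_OF_TRUST = "R"
    ENDORSEMENT = "E"
    MEASUREMENT = "M"
    IDENTITY = "I"
    TRUST = "T"
    SECRETS = "S"


def run_pipeline(checks):
    """Run the steps in order and stop at the first failing one.

    `checks` maps each Step to a zero-argument callable that returns True
    when that step succeeds. Returns the list of steps that completed.
    """
    completed = []
    for step in Step:  # Enum iteration follows definition order
        if not checks[step]():
            break  # a broken link invalidates everything after it
        completed.append(step)
    return completed


def acronym(steps):
    """Spell out the completed part of the pipeline, for example 'REM'."""
    return "".join(step.value for step in steps)


# Hypothetical scenario: the measurement matches no reference value,
# so no identity is established and no secrets are released.
checks = {step: (lambda: True) for step in Step}
checks[Step.IDENTITY] = lambda: False

completed = run_pipeline(checks)
print(acronym(completed))         # REM
print(Step.SECRETS in completed)  # False
```

The point of the sketch is the early exit: secrets sit at the end of the pipeline, so any failure upstream means they are never delivered.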

Attestation on physical hosts with a Trusted Platform Module

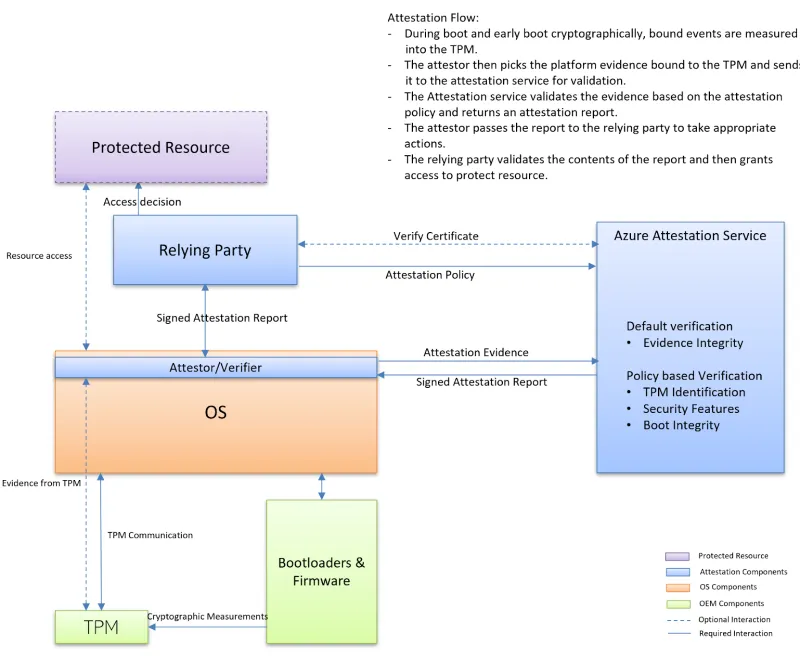

Physical host attestation typically uses a Trusted Platform Module (TPM). Microsoft has extensive documentation on this process, shown below:

Image source: Trusted Platform Module attestation

In this process, the REMITS pipeline looks like this:

Using virtual TPMs to run confidential virtual machines on cloud services

Generally speaking, cloud providers appear to be converging on exposing trust for confidential virtual machines (CVMs) through a virtual Trusted Platform Module (vTPM). There is a good reason for that: this is a known interface that was, among other things, made mandatory for secure boot on Windows 11.

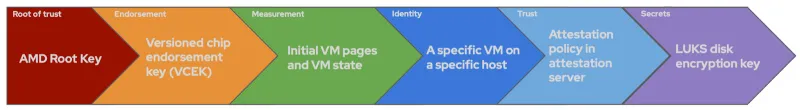

Let us illustrate this with the Microsoft Azure support for SEV-SNP instances. In this scenario, the REMITS pipeline actually runs twice: first in the new confidential computing environment, in a platform-specific way, and then a second time to expose more standard interfaces to the workload.

In the first run, hardware-provided evidence is checked against the cloud provider’s own attestation architecture (such as Microsoft Azure Attestation). If successful, this first run unlocks the secrets necessary to build a vTPM (e.g. from persistent storage). Precisely how this is done appears to rely on proprietary, non-open-source Microsoft software. The root of trust in that first run is in hardware, namely an AMD root key (ARK) in the current SEV instances.

A second run will then start with the vTPM as a root of trust, and the secrets become accessible through the standard mechanisms specified for all TPMs, which we described above. Except for the root of trust being a vTPM instead of a physical TPM, the second run is otherwise equivalent.
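The dependency between the two runs can be sketched in a few lines of Python. This is a deliberately reduced model: the `Run` class, the `boot_cvm` function and the string secrets are hypothetical, and a single `evidence_ok` flag stands in for the endorsement, measurement, identity and trust steps of each run.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Run:
    """One pass through the pipeline, reduced to its start and end points."""
    root_of_trust: str
    evidence_ok: bool  # stands in for the E, M, I and T steps
    secret: str        # what this run releases on success

    def execute(self) -> Optional[str]:
        """Release the secret only if the evidence was accepted."""
        return self.secret if self.evidence_ok else None


def boot_cvm(hardware_run: Run, workload_secret: str,
             vtpm_evidence_ok: bool = True) -> Optional[str]:
    """Chain two runs: the second starts only if the first released the vTPM state."""
    vtpm_state = hardware_run.execute()
    if vtpm_state is None:
        return None  # no vTPM, so the second run has no root of trust
    vtpm_run = Run("vTPM", vtpm_evidence_ok, workload_secret)
    return vtpm_run.execute()


first = Run("AMD root key (ARK)", evidence_ok=True, secret="vtpm-state")
print(boot_cvm(first, "disk-encryption-key"))     # disk-encryption-key

rejected = Run("AMD root key (ARK)", evidence_ok=False, secret="vtpm-state")
print(boot_cvm(rejected, "disk-encryption-key"))  # None
```

In this model the workload never sees the hardware root of trust directly; it only sees what the vTPM-rooted run releases, which matches the layering described above.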

Microsoft Azure’s infrastructure provides guidance on how to integrate their attestation service. Illustrated below, Microsoft provides multiple implementation choices based on who you decide to initially trust (i.e. what root of trust you are willing to use as the starting point for your chains of trust).

Image source: Azure Confidential VMs attestation guidance & FAQ

Attestation in confidential workloads

Confidential workloads implement remote attestation by registering, in a database, the workload's launch measurement together with a secret passphrase that unlocks the encrypted disk. This passphrase is typically delivered to the Linux Unified Key Setup (LUKS), the most commonly used scheme for full-disk encryption on Linux:

```json
{
  "workload_id": "my_workload",
  "launch_measurement": "hex-encoded-measurement",
  "tee_config": "{\"flags\":{\"bits\":63},\"minfw\":{\"major\":0,\"minor\":0}}",
  "passphrase": "my_secret_passphrase"
}
```

Confidential workloads use the same key broker service (KBS) protocol as confidential containers (see below). In fact, the confidential workload project delivered the first implementation of that protocol, called reference-kbs. The blog article documenting workload attestation shows what the protocol looks like internally, which is essentially the same for all variants:

- The Key Broker Client (KBC) initiates the exchange with a request that provides the protocol version number, the type of trusted execution environment (TEE), which is AMD’s SEV-SNP in this example, and additional parameters, which are unused in the case of SNP.

```json
{
  "version": "0.0.0",  // Ignored.
  "tee": "snp",        // AMD SEV-SNP.
  "extra-params": ""   // No extra parameters.
}
```

- The KBS responds with a challenge that includes a nonce to prevent replay attacks:

```json
{
  "nonce": "vo4jefw854jh5x8ff39f8fh47hf4908fc38u",
  "extra-params": ""  // Also unused in SEV-SNP.
}
```

- The KBC responds to the challenge with a structure that includes the TEE public key, which the attestation server can use to encrypt the secrets it sends back, together with the attestation report and a “freshness hash” built from the nonce:

{

"tee-pubkey": TeePubKey {

"alg": "RSA",

"k_mod": "base64-encoded-rsa-public-modulus",

"k_exp": "base64-encoded-rsa-public-exponent",

},

"tee-evidence": {

"report": "hex-encoded-attestation-report",

"cert_chain": "[]", // Attestation server's responsibility

"gen": "milan"

},

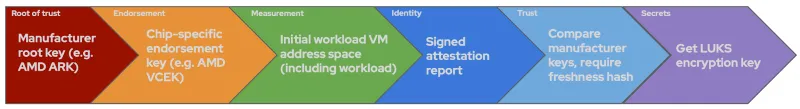

}- The KBS will validate this response, checking the freshness hash built from the nonce and whether the AMD-provided keys match the ones found on AMD servers (which have separate certificates for Naples, Rome, Milan and Genoa generations). The KBS will extract the versioned chip endorsement key (VCEK) and validate the entire certificate chain before comparing the measurements to what was registered with the server.
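The exchange above can be simulated end to end with the Python standard library. The sketch below is not the reference-kbs implementation: the `REGISTERED` table, the function names, and in particular the way the freshness hash is computed (SHA-512 over the nonce and the public key fields) are assumptions made for illustration. A plain dictionary stands in for the signed attestation report, and both the certificate chain validation and the encryption of the secret with the TEE public key are left out.

```python
import hashlib
import hmac
import secrets

# Hypothetical registration database, keyed by workload id.
REGISTERED = {
    "my_workload": {
        "launch_measurement": "ab" * 48,
        "passphrase": "my_secret_passphrase",
    }
}


def freshness_hash(nonce: str, k_mod: str, k_exp: str) -> str:
    """Bind the nonce to the TEE public key (assumed construction)."""
    return hashlib.sha512(f"{nonce}|{k_mod}|{k_exp}".encode()).hexdigest()


def kbs_challenge() -> dict:
    """KBS side: issue a fresh, unpredictable nonce."""
    return {"nonce": secrets.token_urlsafe(27), "extra-params": ""}


def kbc_attest(nonce: str, measurement: str) -> dict:
    """KBC side: answer the challenge. A dict stands in for the signed report."""
    pubkey = {"alg": "RSA", "k_mod": "bW9k", "k_exp": "AQAB"}
    report = {
        "measurement": measurement,
        "report_data": freshness_hash(nonce, pubkey["k_mod"], pubkey["k_exp"]),
    }
    return {"tee-pubkey": pubkey, "tee-evidence": {"report": report, "gen": "milan"}}


def kbs_validate(workload_id: str, nonce: str, attestation: dict):
    """KBS side: check freshness, then the measurement; return the secret or None."""
    pubkey = attestation["tee-pubkey"]
    report = attestation["tee-evidence"]["report"]
    expected = freshness_hash(nonce, pubkey["k_mod"], pubkey["k_exp"])
    if not hmac.compare_digest(expected, report["report_data"]):
        return None  # stale or replayed response
    entry = REGISTERED.get(workload_id)
    if entry is None or not hmac.compare_digest(
        entry["launch_measurement"], report["measurement"]
    ):
        return None  # unknown workload or unexpected software stack
    return entry["passphrase"]


challenge = kbs_challenge()
good = kbc_attest(challenge["nonce"], "ab" * 48)
print(kbs_validate("my_workload", challenge["nonce"], good))        # my_secret_passphrase

# Replaying the same response against a new challenge fails the freshness check.
print(kbs_validate("my_workload", kbs_challenge()["nonce"], good))  # None
```

The second call shows why the nonce matters: a captured response is useless once the KBS has issued a different challenge.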

The REMITS pipeline for confidential workloads looks like this:

Confidential containers attestation architecture

The remote attestation architecture for the confidential containers project is described extensively in this blog article. The project evolved significantly over time, with the objective to become more generic while improving performance and reliability. The project’s documentation on GitHub describes the attestation service, the key broker service, and the underlying hardware-independent protocol.

The diagram below gives an overview of confidential containers attestation:

In this diagram, the blue components are owned by the host, the red components are part of the confidential platform, and the green components form the trusted domain. The blue/green shade indicates data that is stored on the host, but is encrypted, remaining inaccessible to the host administrators.

Attestation starts with the attestation agent, which collects a measurement from the hardware through a kernel/firmware interface. As with a CVM, the root of trust and the endorsements are backed by confidential computing hardware (e.g. an AMD root key and chip endorsement key).

From the measurement, the attestation agent builds a quote, presented as evidence and proof of identity to the attestation service. The attestation service then validates that quote according to its own policies, affirming or denying trust. If attestation is successful, then the attestation service will authorize a KBS to release secrets. The attestation agent in the virtual machine uses these secrets to decrypt the container image layers (including encrypted layers).
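The split between establishing identity, appraising trust and releasing secrets can be sketched as follows. Everything here is hypothetical: the `Quote` fields, the two policies, the reference value table and the secret store are stand-ins chosen for illustration, and they do not reflect the confidential containers code base.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Quote:
    """Evidence built by the attestation agent (reduced to two claims)."""
    measurement: str     # hash of the software stack
    debug_enabled: bool  # example of a claim that a policy may care about


# Hypothetical reference values published when the image was built.
REFERENCE_VALUES = {"sha384:aaaa": "app-image-v1"}


def production_policy(quote: Quote) -> bool:
    return not quote.debug_enabled


def development_policy(quote: Quote) -> bool:
    return True  # relaxed: debugging is acceptable during development


def attestation_service(quote: Quote, policy: Callable[[Quote], bool]) -> Optional[str]:
    """Return an identity token if the quote is known and the policy accepts it."""
    identity = REFERENCE_VALUES.get(quote.measurement)
    if identity is None:
        return None  # identity step failed: unknown measurement
    if not policy(quote):
        return None  # trust step failed: policy rejected the evidence
    return identity


def key_broker(token: Optional[str], store: dict) -> Optional[str]:
    """Release a secret only for a token issued by the attestation service."""
    if token is None:
        return None
    return store.get(token)


quote = Quote("sha384:aaaa", debug_enabled=True)
store = {"app-image-v1": "image-layer-key"}
print(key_broker(attestation_service(quote, production_policy), store))   # None
print(key_broker(attestation_service(quote, development_policy), store))  # image-layer-key
```

The same quote yields different outcomes under the two policies, which is the distinction REMITS draws between identity and trust.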

This attestation architecture is currently being revisited so that more code can be shared across platforms. The diagram below shows what is currently being implemented:

On the left side of the above graphic, the attestation agent runs inside a CVM. In this design, it talks to the KBS, which will relay evidence provided by the agent to the attestation service. The reason for the agent to talk to the KBS rather than, say, the attestation service (AS) is mostly to simplify the network data path. The diagram also shows important additional aspects from a management point of view, like how owners can set or update policy definitions. Furthermore, the image building process must become part of a larger supply chain, in order to publish reference values whenever new container images are built.

A previous design already allowed multiple key broker services and key broker clients to coexist. However, the only parts that are really expected to change from platform to platform are two sets of platform-specific drivers. On the agent side, attester drivers collect measurements. On the attestation service side, verifier drivers validate the measurements embedded in the quote.
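One way to picture this driver split is a pair of small interfaces with one implementation per platform. The sketch below is an assumption about the shape of such a design, not the project's actual API: the class names, the `"sample"` platform, the evidence fields and the `VERIFIERS` registry are all invented for illustration.

```python
import hashlib
from abc import ABC, abstractmethod


class Attester(ABC):
    """Agent-side driver: collects platform-specific evidence."""
    tee: str

    @abstractmethod
    def collect_evidence(self, nonce: bytes) -> dict: ...


class Verifier(ABC):
    """Service-side driver: validates evidence for one platform."""

    @abstractmethod
    def verify(self, evidence: dict, nonce: bytes) -> bool: ...


class SampleAttester(Attester):
    """Software-only stand-in for a hardware-backed attester."""
    tee = "sample"

    def collect_evidence(self, nonce: bytes) -> dict:
        return {
            "tee": self.tee,
            "measurement": "00" * 32,
            "nonce_hash": hashlib.sha256(nonce).hexdigest(),
        }


class SampleVerifier(Verifier):
    def verify(self, evidence: dict, nonce: bytes) -> bool:
        return evidence["nonce_hash"] == hashlib.sha256(nonce).hexdigest()


# The attestation service picks a verifier from the TEE type in the evidence.
VERIFIERS = {"sample": SampleVerifier()}


def appraise(evidence: dict, nonce: bytes) -> bool:
    verifier = VERIFIERS.get(evidence["tee"])
    if verifier is None:
        raise ValueError(f"no verifier driver for TEE {evidence['tee']!r}")
    return verifier.verify(evidence, nonce)


evidence = SampleAttester().collect_evidence(b"nonce-1")
print(appraise(evidence, b"nonce-1"))  # True
print(appraise(evidence, b"nonce-2"))  # False
```

Supporting a new platform in this model means adding one attester and one verifier; the code that moves evidence between them stays unchanged.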

The REMITS pipeline for confidential containers therefore looks like this:

Confidential clusters

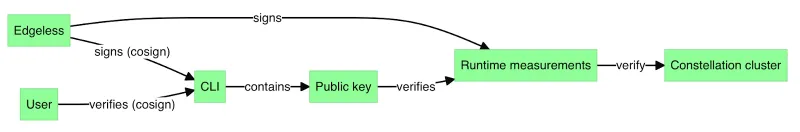

Attestation for confidential clusters is well documented for Edgeless Constellation. Edgeless Systems illustrate the chain of trust using the following diagram:

In the Edgeless Constellation case, runtime measurements combine infrastructure measurements—like those provided by the confidential VMs—with measurements produced by the bootloader and boot chain.

For this particular chain of trust, the REMITS pipeline looks like this:

The Constellation design also includes a service dedicated to providing secrets, notably storage encryption keys. As an alternative, they can also use an external key management system (KMS) like those from a cloud provider.

However, confidential clusters are one of the scenarios where multiple chains of trust need to be considered, with different personas consuming the resulting trust assessment. Notably, Edgeless has drawn attention to the need for such clusters to distinguish between cluster-facing attestation (keeping non-confidential nodes from joining a cluster, which could leak in-use data out), and user-facing attestation (letting users verify the identity and integrity of a cluster before deploying workloads on it).

User-facing verification lets users verify a cluster. That result could also be consumed by a tool. The user collects the signed measurements from the configured image. Edgeless offers a public registry for measurements associated with images they release. The measurements are signed using Edgeless Systems’ own key. This verification can be done either during cluster configuration, or at a later point in time.

The resulting attestation is in a clear format. The user is responsible for analyzing the measurements and matching them against the expected values, which are provided by Edgeless Systems. Since the user is doing the validation, there is no secret delivery in that particular case.
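The matching step that falls to the user can be sketched as a simple comparison. The function name, the register indices and the hash values below are hypothetical, and verifying the signature on the published reference values is assumed to have happened beforehand.

```python
import hmac


def compare_measurements(expected: dict, reported: dict) -> list:
    """Return the sorted register indices that are missing or differ."""
    mismatches = []
    for index, value in expected.items():
        got = reported.get(index)
        if got is None or not hmac.compare_digest(value, got):
            mismatches.append(index)
    return sorted(mismatches)


# Hypothetical reference values for an image, keyed by register index.
expected = {4: "aa" * 32, 8: "bb" * 32, 9: "cc" * 32}

# Hypothetical values reported by the cluster: one differs, one is missing.
reported = {4: "aa" * 32, 8: "ff" * 32}

print(compare_measurements(expected, expected))  # []
print(compare_measurements(expected, reported))  # [8, 9]
```

An empty list means every expected measurement was reported with the expected value; what to do about a mismatch is a decision left to the user, since no secret is released in this flow.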

Conclusion

The simple REMITS pipeline gives us a common way to present the differing attestation models behind the use cases we outlined in earlier articles. The differences between hardware platforms, which are a big source of variation, will be the topic of the next article in this series.
