OpenShift Container Platform has provided CI/CD services since its early days, allowing developers to easily build and deploy their applications from code to containers.

As CI/CD in the Kubernetes space evolved, OpenShift stayed at the cutting edge by providing services such as OpenShift Pipelines (based on the upstream Tekton project), a more Kubernetes-native approach to continuous delivery.

To further empower developers who want to embrace Tekton on OpenShift, we have added a new feature (currently in Dev Preview) called “Pipelines-as-code”. It builds on the Tekton building blocks while bootstrapping much of the plumbing needed to make them work, and it applies GitOps principles to pipelines.

What is this new feature called “Pipelines-as-code” in OpenShift?

Pipelines-as-code (hereafter PAC) allows developers to ship their CI/CD pipelines in the same Git repository as their application, making it easier to keep the two in sync across releases.

By providing the pipeline as code (YAML) in a specific folder of the application repository, the developer can have OpenShift trigger that pipeline automatically, without worrying about setting up the webhooks, EventListeners, and other resources that Tekton normally requires.
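To make this concrete, here is a minimal sketch of what such a file might contain, generated from Python so that its structure can be checked. It assumes the PAC convention of a Tekton `PipelineRun` carrying the `pipelinesascode.tekton.dev/on-event` and `pipelinesascode.tekton.dev/on-target-branch` annotations, stored in a `.tekton` folder at the repository root. The helper names, the run name, the branch, the container image, and the single `echo` step are hypothetical placeholders, and the exact schema your PAC version expects may differ. Because JSON is valid YAML, the manifest is written with the standard `json` module instead of a third-party YAML library.

```python
from __future__ import annotations

import json
from pathlib import Path


def scaffold_pipelinerun(name: str, events: list[str], target_branch: str) -> dict:
    """Build a minimal Tekton PipelineRun manifest with PAC trigger annotations (hypothetical helper)."""
    return {
        "apiVersion": "tekton.dev/v1beta1",
        "kind": "PipelineRun",
        "metadata": {
            "name": name,
            "annotations": {
                # Git events that should trigger this run, e.g. "[pull_request]" or "[push]".
                "pipelinesascode.tekton.dev/on-event": "[" + ", ".join(events) + "]",
                # Branch the event must target for the run to start.
                "pipelinesascode.tekton.dev/on-target-branch": f"[{target_branch}]",
            },
        },
        "spec": {
            # Inline pipeline definition; a real pipeline would add build/test/deploy tasks.
            "pipelineSpec": {
                "tasks": [
                    {
                        "name": "hello",  # hypothetical placeholder task
                        "taskSpec": {
                            "steps": [
                                {
                                    "name": "echo",
                                    "image": "registry.access.redhat.com/ubi8/ubi-minimal",
                                    "script": "echo Hello from Pipelines-as-code",
                                }
                            ]
                        },
                    }
                ]
            }
        },
    }


def write_manifest(repo_root: Path, manifest: dict) -> Path:
    """Write the manifest to <repo_root>/.tekton/<name>.yaml, creating the folder if needed."""
    tekton_dir = repo_root / ".tekton"
    tekton_dir.mkdir(parents=True, exist_ok=True)
    path = tekton_dir / f"{manifest['metadata']['name']}.yaml"
    path.write_text(json.dumps(manifest, indent=2) + "\n")
    return path


if __name__ == "__main__":
    # Print the manifest instead of writing it, so running the sketch has no side effects.
    print(json.dumps(scaffold_pipelinerun("pull-request", ["pull_request"], "main"), indent=2))
```

Calling `write_manifest(Path("."), manifest)` from the root of a clone would place the file where PAC is assumed to look for it; committing and pushing that file is what puts the pipeline under version control alongside the code.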

Figure 1: Pipelines-as-code overview

Here is the general workflow for using the feature, assuming it has been enabled on the cluster:

- The developer initializes PAC for their project using the tkn-pac CLI.

- The developer chooses which Git events trigger the pipeline (currently commits and pull requests) and scaffolds a sample pipeline file matching the selected events.

- They then add a .tekton folder at the root of the application’s Git repository, containing the pipeline YAML file.

- They fill in the pipeline YAML file with the required steps.

- When the targeted event (a pull request or a commit) occurs in the Git repository, PAC on OpenShift intercepts it, then creates and runs the pipeline in the desired namespace.

- When running with GitHub, the bidirectional integration lets users follow the pipeline’s execution status directly in the GitHub UI, with links to OpenShift for more details.
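The matching step in this workflow, where an incoming pull request or commit is mapped to the right pipeline, can be modeled roughly as follows. This is a simplified, hypothetical model rather than PAC's actual implementation: it assumes pipelines declare their triggers through the `pipelinesascode.tekton.dev/on-event` and `pipelinesascode.tekton.dev/on-target-branch` annotations, compares only the event type and target branch, and ignores any other matching options a real PAC installation may support. The `GitEvent` class and helper functions are illustrative names.

```python
from __future__ import annotations

from dataclasses import dataclass

ON_EVENT = "pipelinesascode.tekton.dev/on-event"
ON_TARGET_BRANCH = "pipelinesascode.tekton.dev/on-target-branch"


@dataclass
class GitEvent:
    kind: str           # "pull_request" or "push"
    target_branch: str  # branch a PR targets, or the branch that was pushed to


def parse_list(value: str) -> list[str]:
    """Turn an annotation value like "[pull_request, push]" into ["pull_request", "push"]."""
    inner = value.strip().strip("[]")
    return [item.strip() for item in inner.split(",") if item.strip()]


def matching_runs(event: GitEvent, pipelineruns: list[dict]) -> list[str]:
    """Return the names of the PipelineRuns whose trigger annotations match the event."""
    names = []
    for run in pipelineruns:
        annotations = run.get("metadata", {}).get("annotations", {})
        events = parse_list(annotations.get(ON_EVENT, ""))
        branches = parse_list(annotations.get(ON_TARGET_BRANCH, ""))
        if event.kind in events and event.target_branch in branches:
            names.append(run["metadata"]["name"])
    return names


if __name__ == "__main__":
    runs = [
        {"metadata": {"name": "pull-request",
                      "annotations": {ON_EVENT: "[pull_request]", ON_TARGET_BRANCH: "[main]"}}},
        {"metadata": {"name": "push",
                      "annotations": {ON_EVENT: "[push]", ON_TARGET_BRANCH: "[main]"}}},
    ]
    print(matching_runs(GitEvent("pull_request", "main"), runs))  # ['pull-request']
```

Keeping the trigger conditions on the pipeline file itself is what lets the repository, rather than cluster-side configuration, decide when each pipeline runs.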

What’s new with this feature, compared to “just using” OpenShift Pipelines (Tekton) on OpenShift?

If you have already used Tekton on Kubernetes, you probably know that the learning curve can be steep: the project lays the foundations of Kubernetes-native CI/CD, but the syntactic sugar needed to make it truly user-friendly is still in the making.

With OpenShift Pipelines, we already make Tekton much easier to use by providing several user-friendly capabilities, such as a UI to create pipelines, a visual representation to follow their execution, and tools to help troubleshoot them when issues arise.

Nonetheless, the developer still had to do some preparation before pipelines could be triggered. The pipelines were also not tracked in a Git repository; instead, they were instantiated directly as “static” Kubernetes resources that had to be updated manually whenever the pipeline changed, for example for a new release.

Ship code and pipelines, all in the same repository

Now, by letting developers ship the pipeline with the application’s code and letting OpenShift take care of updating and running it, we help developers focus on what matters: writing useful code, both for the application and for its CI/CD pipeline.

Figure 2: Pipelines can be stored within the .tekton folder in the application’s repository

UI sweetness, and user happiness

In addition, native integrations with GitHub and Bitbucket (with GitLab coming soon) let the developer track the pipeline’s execution history directly in the GitHub UI, with direct links to the execution logs in OpenShift.
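Reporting a pipeline status back to GitHub ultimately comes down to calls to the GitHub REST API. The sketch below builds, but does not send, a request to GitHub's commit status endpoint, `POST /repos/{owner}/{repo}/statuses/{sha}`, whose `target_url` field is what produces a clickable link from GitHub back to the run in OpenShift. It is an illustration under assumptions, not how PAC is implemented: PAC's GitHub App integration may report through GitHub's Checks API instead, and the owner, repository, commit SHA, token, `context` label, and console URL below are all hypothetical placeholders.

```python
import json
import urllib.request

GITHUB_API = "https://api.github.com"
VALID_STATES = {"error", "failure", "pending", "success"}  # states accepted by the commit status API


def build_status_request(owner: str, repo: str, sha: str, state: str,
                         target_url: str, description: str, token: str,
                         context: str = "pipelines-as-code/demo") -> urllib.request.Request:
    """Build (without sending) a GitHub commit status request linking back to a pipeline run."""
    if state not in VALID_STATES:
        raise ValueError(f"unsupported state: {state}")
    payload = {
        "state": state,
        "target_url": target_url,    # e.g. the PipelineRun page in the OpenShift console
        "description": description,
        "context": context,          # label shown next to the status in the GitHub UI
    }
    return urllib.request.Request(
        f"{GITHUB_API}/repos/{owner}/{repo}/statuses/{sha}",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_status_request("octo-org", "demo-app", "abc123", "pending",
                               "https://console.example.com/pipelineruns/demo",
                               "Pipeline running", "TOKEN")
    # Sending it would be urllib.request.urlopen(req); we only inspect it here.
    print(req.get_method(), req.full_url)
```

A run would typically report `pending` when it starts and `success` or `failure` when it finishes, updating the same `context` so GitHub shows a single, evolving status line.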

Figure 3: OpenShift updates GitHub with the pipeline execution status

Modern GitHub workflows

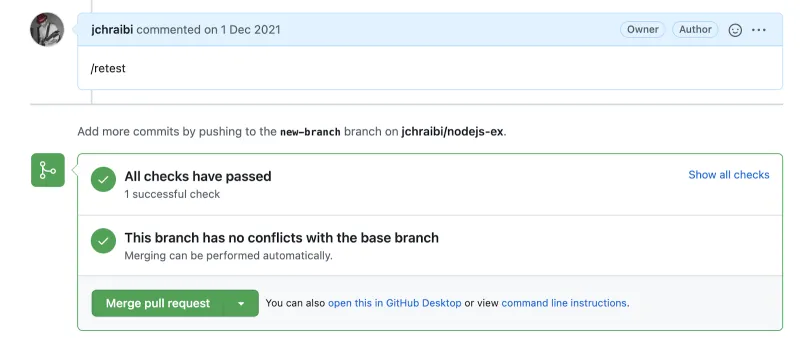

When a developer working on a pull request (PR) needs the pipeline to run again without pushing a new commit, a simple “/retest” comment on the GitHub PR triggers a new pipeline execution and captures the results.
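Conceptually, this works like a small command parser applied to each new PR comment. The sketch below is hypothetical and deliberately minimal: it recognizes a line consisting of `/retest`, optionally followed by the name of a single pipeline run, and ignores everything else. The optional run name, the `RetestRequest` type, and the parsing rules are assumptions for illustration; the exact commands and syntax PAC accepts may differ.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class RetestRequest:
    pipelinerun: Optional[str]  # None means "re-run every pipeline matching the PR"


def parse_retest(comment_body: str) -> Optional[RetestRequest]:
    """Return a RetestRequest if any line of the comment is a /retest command, else None."""
    for line in comment_body.splitlines():
        words = line.strip().split()
        # The command must start the line; "please /retest" is treated as ordinary text.
        if not words or words[0] != "/retest":
            continue
        if len(words) == 1:
            return RetestRequest(pipelinerun=None)
        if len(words) == 2:
            return RetestRequest(pipelinerun=words[1])
    return None


if __name__ == "__main__":
    print(parse_retest("Looks like a flaky test, trying again\n/retest"))
    # RetestRequest(pipelinerun=None)
```

Requiring the command to stand on its own line keeps casual mentions of “/retest” in a review discussion from accidentally starting new runs.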

Figure 4: Triggering a pipeline run on OpenShift with a “/retest” command in GitHub

That’s great! Now let’s see it in action: demo

The following demonstration shows the “Pipelines-as-code” feature in action:

The Level Up Hour (E53) | Pipeline as code

