Introduction

Red Hat Ansible Automation Platform has seen wide-scale adoption in a variety of automation domains. However, as edge use cases become more mainstream, the thinking around automation must shift from “complete a task immediately” to running automation both now and later, and to responding to incoming automation requests from devices that are not yet managed.

Automation in a hybrid cloud environment

In today’s hybrid cloud environment, automation exists in a tightly controlled and predictable space, meaning it’s easy to determine what endpoints are reachable and available for connection. In practice, this manifests as inventory syncs from our various management planes (think AWS/Azure/GCP/VMware) and then targeting the devices brought into Controller via those inventory syncs with automation. Cross connectivity shouldn’t be an issue: If we can see the device in a management plane, we can contact and automate against it. In addition, if there are exceptions to the “connectivity everywhere” model, Red Hat Ansible Automation Platform has features and functionality to help address more complex connectivity circumstances.

We can even take this automation approach one step further by pulling those management planes under the management of our automation, giving us the ability to really automate end-to-end. For example, if we want to automate against an endpoint that doesn’t yet exist, we can instruct the appropriate management plane and related systems to bring that endpoint into existence, then perform our automation against it.

What this boils down to is: We have significant control over whether our automation works, because we control both the endpoints and what controls the endpoints. Our automation exists in a proactive paradigm, able to automate and coordinate against all layers of the technology stack.

This approach, while very powerful, doesn’t hold up at the edge for a simple reason: We don’t necessarily control all the layers of the stack. The endpoints are run without a unified management plane and exist on different platforms, in different locations, and are subject to different rules around connectivity. Thus, the paradigm shift: In this environment we must still automate, but we need to balance both a reactive and proactive approach.

Automation at the edge

Without direct control over the various management planes and layers of the technology stack, our automation strategy must become both reactive and proactive: it must respond in real time to changes in the environment, and it must also run on a schedule to enforce our desired configurations and workloads across a geographically distributed footprint of edge endpoints.

Reactive automation

First, our automation strategy must be reactive, responding to changes in the environment in real time. One of the most common events at the edge is the onboarding or re-imaging of systems, initiated by a process or person outside of the automation ecosystem. In reality, handling this is less a matter of the automation itself and more a function of the automation platform, whose main job is to provide appropriate access to its resources at any time, from any allowed location, without requiring manual intervention. From there, the platform also facilitates a form of event-driven automation, triggering work as new devices come online.

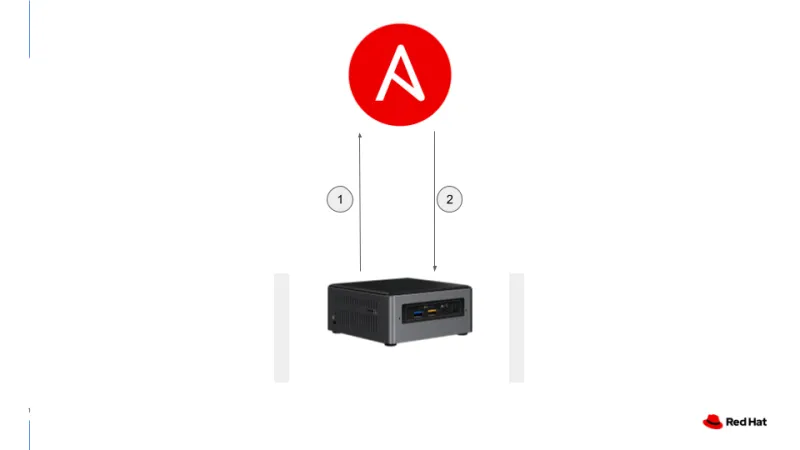

Caption: Here, the device initiates the process, as opposed to the automation platform initiating it.

In practice, this is typically addressed by adding a call home function to the deployment methodology. The devices themselves initiate interaction with the automation platform according to their own availability and schedule, rather than relying on a centralized attempt to reach them. This call home functionality typically sends along two key pieces of information: a unique identifier for the device, and a way to contact it to begin automating against it.

The following is an example of a kickstart %post section that leverages systemd to perform call home functionality via an Ansible Playbook run locally upon first boot. The playbook itself determines a unique identifier for the system (based on its MAC address in this example), adds the device to an inventory, and sets Ansible’s ansible_host connection variable so automation runs know how to contact the system:

%post

# create playbook for controller registration
cat > /var/tmp/aap-auto-registration.yml <<EOF
---
- name: register a r4e system to ansible controller
  hosts:
    - localhost
  vars:
    ansible_connection: local
    controller_url: https://ansible-controller.company.local/api/v2
    controller_inventory: Edge Systems
    provisioning_template: Post-Install Edge System
  # credentials applied to every ansible.builtin.uri task below
  module_defaults:
    ansible.builtin.uri:
      user: edge-callhome-user
      password: edge-callhome-password
      force_basic_auth: yes
      validate_certs: no
  tasks:
    - name: find the id of {{ controller_inventory }}
      ansible.builtin.uri:
        url: "{{ controller_url }}/inventories?name={{ controller_inventory | regex_replace(' ', '%20') }}"
      register: controller_inventory_lookup

    - name: set inventory id fact
      ansible.builtin.set_fact:
        controller_inventory_id: "{{ controller_inventory_lookup.json.results[0].id }}"

    # the host name is derived from the MAC address; ansible_host tells
    # the controller how to reach the device
    - name: create host in inventory {{ controller_inventory }}
      ansible.builtin.uri:
        url: "{{ controller_url }}/inventories/{{ controller_inventory_id }}/hosts/"
        method: POST
        body_format: json
        body:
          name: "edge-{{ ansible_default_ipv4.macaddress | replace(':','') }}"
          variables:
            'ansible_host: {{ ansible_default_ipv4.address }}'
      register: create_host
      changed_when:
        - create_host.status | int == 201
      # a host that already exists is not treated as a failure
      failed_when:
        - create_host.status | int != 201
        - "'already exists' not in create_host.content"

    - name: find the id of {{ provisioning_template }}
      ansible.builtin.uri:
        url: "{{ controller_url }}/workflow_job_templates?name={{ provisioning_template | regex_replace(' ', '%20') }}"
      register: job_template_lookup

    - name: set the id of {{ provisioning_template }}
      ansible.builtin.set_fact:
        job_template_id: "{{ job_template_lookup.json.results[0].id }}"

    # launch the workflow, limited to the host registered above
    - name: trigger {{ provisioning_template }}
      ansible.builtin.uri:
        url: "{{ controller_url }}/workflow_job_templates/{{ job_template_id }}/launch/"
        method: POST
        status_code:
          - 201
        body_format: json
        body:
          limit: "edge-{{ ansible_default_ipv4.macaddress | replace(':','') }}"
EOF

# create systemd runonce file to trigger playbook
cat > /etc/systemd/system/aap-auto-registration.service <<EOF
[Unit]
Description=Ansible Automation Platform Auto-Registration
After=local-fs.target
After=network.target
ConditionPathExists=!/var/tmp/post-installed

[Service]
ExecStartPre=/usr/bin/sleep 20
ExecStart=/usr/bin/ansible-playbook /var/tmp/aap-auto-registration.yml
ExecStartPost=/usr/bin/touch /var/tmp/post-installed
User=root
RemainAfterExit=true
Type=oneshot

[Install]
WantedBy=multi-user.target
EOF

# Enable the service
systemctl enable aap-auto-registration.service

%end

In addition to adding the device to an inventory in the automation controller, this playbook also triggers a workflow run, limited to just the device itself. This becomes a kind of event-driven automation, where the event is a new device being installed, and the automation being triggered is the standard configuration and deployment of a workload to the device.

Proactive automation

This is closer to our hybrid cloud automation paradigm above, where we either have some knowledge of the endpoints (which is being handled by our reactive automation approach) or know where we can get information before attempting to run automation. At the edge, we want to keep our devices compliant with various configuration and security policies to ensure they’re functional, performant, and secure. However, we’re not always confident we’ll be able to contact them immediately, due to connectivity disruptions or site issues.

There are three tools in the automation tool belt to help us here: automation mesh to help address connectivity challenges (as in the hybrid cloud case above), schedules in the automation controller, and playbooks modified to include some built-in error handling via blocks and the wait_for_connection module. Schedules allow the automation controller to run our automation repeatedly, hitting the devices available at the time and enforcing the desired configuration and security settings. If a device is unavailable for whatever reason, whether a connectivity disruption or conflicting or scheduled maintenance, the automation controller will record the failure and try again at the next scheduled time, allowing for repeated enforcement against devices as they become available.
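As a rough sketch of the schedule idea, the playbook below attaches a recurring schedule to a workflow through the same controller API used in the registration example. It is an illustration under stated assumptions rather than a recommended setup: the workflow name "Enforce Edge Configuration", the edge-schedule-user account, the start date, and the four-hour interval are all hypothetical, and only the controller URL is reused from the earlier example.

```yaml
---
- name: schedule recurring enforcement for edge devices
  hosts:
    - localhost
  vars:
    ansible_connection: local
    controller_url: https://ansible-controller.company.local/api/v2
    # hypothetical workflow name, not part of the original example
    enforcement_template: Enforce Edge Configuration
  module_defaults:
    ansible.builtin.uri:
      # hypothetical account with permission to create schedules
      url_username: edge-schedule-user
      url_password: edge-schedule-password
      force_basic_auth: yes
  tasks:
    - name: find the id of {{ enforcement_template }}
      ansible.builtin.uri:
        url: "{{ controller_url }}/workflow_job_templates/?name={{ enforcement_template | urlencode }}"
      register: template_lookup

    # the rrule is an iCal recurrence rule: start date plus "every 4 hours"
    - name: create a schedule that runs every four hours
      ansible.builtin.uri:
        url: "{{ controller_url }}/workflow_job_templates/{{ template_lookup.json.results[0].id }}/schedules/"
        method: POST
        status_code:
          - 201
        body_format: json
        body:
          name: "{{ enforcement_template }} - every 4 hours"
          rrule: "DTSTART:20240101T000000Z RRULE:FREQ=HOURLY;INTERVAL=4"
```

This sketch is not idempotent: running it a second time would likely be rejected because a schedule with that name already exists, so a real version would check for the schedule first.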

Finally, playbooks can be written to allow for interruptions in connectivity, or to wait for a device to become available before attempting to continue with other tasks. These constructs, such as the rescue functionality of a block, or the wait_for_connection module, help reduce errors caused by connectivity interruptions or poor network connectivity. Since these are deployed at the playbook level, they can wrap around specific long-running or troublesome tasks that involve moving data across a very slow network.
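A minimal sketch of these constructs might look like the following. The download URL, destination path, and timeout values are hypothetical and chosen only for illustration. Note that a rescue section runs when a task in the block fails (for example, a transfer that times out); a host that Ansible marks as unreachable does not trigger it, which is one reason to pair it with wait_for_connection.

```yaml
---
- name: apply an update to edge devices over an unreliable link
  hosts: all
  # skip fact gathering so the play does not fail before we can wait
  gather_facts: no
  vars:
    # hypothetical update location and destination path
    bundle_url: https://updates.company.local/edge/update-bundle.tar.gz
    bundle_dest: /var/tmp/update-bundle.tar.gz
  tasks:
    - name: wait up to ten minutes for the device to become reachable
      ansible.builtin.wait_for_connection:
        delay: 5
        timeout: 600

    - name: download the bundle, recovering from a failed transfer
      block:
        - name: download update bundle
          ansible.builtin.get_url:
            url: "{{ bundle_url }}"
            dest: "{{ bundle_dest }}"
            timeout: 60
      rescue:
        - name: make sure the device is still reachable before retrying
          ansible.builtin.wait_for_connection:
            timeout: 300

        - name: retry the download a few times with a pause between attempts
          ansible.builtin.get_url:
            url: "{{ bundle_url }}"
            dest: "{{ bundle_dest }}"
            timeout: 120
          register: bundle_download
          until: bundle_download is succeeded
          retries: 3
          delay: 30
```

Wrapping only the slow transfer in the block keeps the error handling local to the task most likely to suffer from a poor link, while the rest of the playbook runs as usual.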

Conclusion

The edge is driving a paradigm shift in automation, from a primarily proactive approach to a combination of proactive and reactive. This shift does change how we approach automation, but it also reinforces the need for automation and allows for interoperability between centralized and decentralized systems.

***This blog was co-authored by Josh Swanson
