The demand to extend applications to the edge has never been greater. From retail shops to industrial and manufacturing sites, there's a need to create, consume, and store data at the edge. Deploying applications at the edge comes with a set of physical constraints, but also with the need to deliver a truly cost-efficient and resilient architecture. When building applications at the edge, you must consider the needs of the individual site as well as the cost to deploy, manage, and maintain applications across multiple edge locations.

The good news is that Red Hat OpenShift is evolving to meet this demand head-on. With the introduction of the two-node OpenShift with arbiter topology, Red Hat and partners like Portworx by Pure Storage are delivering a cost-efficient and resilient architecture designed specifically for the edge.

The edge dilemma: High availability vs. cost optimization

The primary motivation behind the two-node OpenShift with arbiter (TNA) initiative is simple: cost for large-scale edge deployments. For mission-critical edge sites, high availability is non-negotiable. Should a node fail, applications must continue running without disruption. To prevent split-brain scenarios and maintain consistency, the control plane requires a quorum, which typically means three or more nodes (a trade-off described by the CAP theorem). Multiplied across many edge sites, three full-sized nodes per site quickly become expensive.

Two-node OpenShift with arbiter, now generally available (GA) with OpenShift 4.20, solves this by dramatically reducing the hardware footprint while preserving three-node consistency and availability.

Two-node OpenShift with arbiter explained

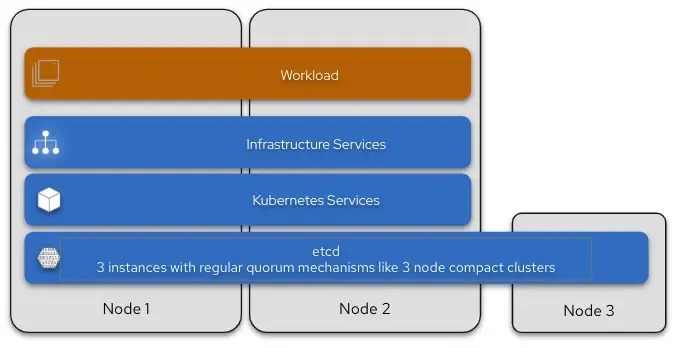

The two-node OpenShift with arbiter architecture is a specialized, cost-sensitive solution that is technically a three-node cluster. Here's how it works:

- The main nodes: Two full-sized nodes run the OpenShift control plane, all worker workloads, and the bulk of the infrastructure services.

- The arbiter node: A small, third node dedicated solely to running the third etcd instance necessary to maintain quorum for the control plane. By removing all other responsibilities, the arbiter node can be tiny.

This approach achieves single-node outage tolerance without impacting your workload, while significantly reducing the bill of materials compared to three full nodes.
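To see why the third etcd member matters, it helps to look at the majority rule that quorum-based systems such as etcd rely on. The following Python sketch is illustrative only: the function names are hypothetical and are not part of any OpenShift or etcd API.

```python
def quorum_size(members: int) -> int:
    """Smallest majority of a cluster with `members` voting members."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can fail while a majority is still reachable."""
    return members - quorum_size(members)


for members in (2, 3):
    print(
        f"{members} etcd members: quorum={quorum_size(members)}, "
        f"tolerated failures={tolerated_failures(members)}"
    )
# 2 etcd members: quorum=2, tolerated failures=0
# 3 etcd members: quorum=2, tolerated failures=1
```

With only two members, losing either one loses the majority. Adding the arbiter as a third voting member is what allows one node to fail while quorum is preserved.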

The arbiter node requires only minimal system resources: 2 vCPU, 8 GB of RAM, and a 50 GB SSD or similar disk. It is generally recommended to place the arbiter close to the other nodes, ideally at the same location or site but in a different failure domain. However, the arbiter can also be placed at a greater distance (for example, in the cloud or in the datacenter).

In this case, "end-to-end" latency includes both the network round trip and disk I/O, and it refers to the maximum latency, not the average. Note that a distant arbiter requires the etcd slow profile to be configured, and that the cluster will respond more slowly, because every etcd write transaction must be synced and committed with the arbiter. If additional components run on the arbiter (a software-defined storage solution, for example), the requirements of those components must be added to the arbiter's sizing. See below for an example.

The architecture supports both x86 and Arm architectures on bare metal hardware certified for Red Hat Enterprise Linux (RHEL) 9 and OpenShift. This means platform=none or platform=baremetal, which allows the arbiter to be installed as a virtual machine on any hypervisor on which RHEL is supported (including KVM, Hyper-V, and VMware).

To install a cluster, the usual OpenShift installation methods are supported: UPI, IPI, Agent Based, and Assisted Service.

What's the high-availability stance of TNA?

The high-availability stance of TNA is essentially the same as that of a regular three-node compact cluster, but capacity planning is more sensitive. If no performance degradation is acceptable during the loss of a node, each of the two main nodes can be utilized at no more than 50%, so that the surviving node has enough capacity to absorb the load of the failed one. The load that fails over is 50% of the total, not just 33% as in a three-node compact cluster. Plan capacity accordingly.
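The 50% and 33% figures follow from simple arithmetic. The Python sketch below works them out under two stated assumptions: load is spread evenly across the nodes that carry workloads, and exactly one node fails. The function names are hypothetical and exist only for this illustration.

```python
from fractions import Fraction


def max_safe_utilization(workload_nodes: int) -> Fraction:
    """Highest steady-state utilization per node that still lets the
    surviving nodes absorb the full load of one failed node."""
    if workload_nodes < 2:
        raise ValueError("need at least two workload nodes to fail over")
    return Fraction(workload_nodes - 1, workload_nodes)


def failover_share(workload_nodes: int) -> Fraction:
    """Fraction of the total load that moves when one node fails,
    assuming the load is spread evenly."""
    return Fraction(1, workload_nodes)


# TNA has two workload-carrying nodes; a compact cluster has three.
for name, nodes in (("two-node with arbiter", 2), ("three-node compact", 3)):
    print(
        f"{name}: max utilization {float(max_safe_utilization(nodes)):.0%}, "
        f"failover share {float(failover_share(nodes)):.0%}"
    )
# two-node with arbiter: max utilization 50%, failover share 50%
# three-node compact: max utilization 67%, failover share 33%
```

The arbiter does not appear in the calculation because it runs no regular workloads, so it contributes no failover capacity.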

Is the arbiter node a regular node?

Yes, the arbiter node is a regular node. It runs Red Hat CoreOS, the kubelet, networking, and so on, but it has a special role ("arbiter"). This prevents regular workloads and components from being scheduled to it:

    $ oc get nodes
    NAME        STATUS   ROLES
    arbiter-0   Ready    arbiter
    master-0    Ready    control-plane,master,worker
    master-1    Ready    control-plane,master,worker

This also means that pods can be explicitly scheduled to it when necessary, by adding a corresponding toleration to the deployment:

    tolerations:
    - effect: NoSchedule
      key: node-role.kubernetes.io/master
    - effect: NoSchedule
      key: node-role.kubernetes.io/arbiter

This is used by infrastructure components that also require three nodes to establish quorum to provide consistency. A good example of this is a software-defined hyperconverged storage solution.
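The scheduling behavior described above can be sketched in a few lines of Python. This is a simplified model of how Kubernetes matches tolerations against taints, not the scheduler's actual code. It assumes the arbiter node carries a NoSchedule taint with the key node-role.kubernetes.io/arbiter, which is inferred from the toleration shown above. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Taint:
    key: str
    effect: str
    value: str = ""


@dataclass(frozen=True)
class Toleration:
    key: str = ""
    effect: str = ""         # an empty effect matches every effect
    operator: str = "Equal"  # the Kubernetes default when omitted
    value: str = ""


def tolerates(toleration: Toleration, taint: Taint) -> bool:
    """Return True if this toleration matches the given taint."""
    if toleration.effect and toleration.effect != taint.effect:
        return False
    if toleration.key and toleration.key != taint.key:
        return False
    if toleration.operator == "Exists":
        return True
    return toleration.operator == "Equal" and toleration.value == taint.value


def schedulable(tolerations: list, taints: list) -> bool:
    """A pod may land on a node only if every NoSchedule taint is tolerated."""
    return all(
        any(tolerates(tol, taint) for tol in tolerations)
        for taint in taints
        if taint.effect == "NoSchedule"
    )


# Assumption: the taint the arbiter node carries (inferred, see lead-in).
ARBITER_TAINTS = [Taint("node-role.kubernetes.io/arbiter", "NoSchedule")]

regular_pod = []  # no tolerations at all
storage_pod = [
    Toleration(key="node-role.kubernetes.io/master", effect="NoSchedule"),
    Toleration(key="node-role.kubernetes.io/arbiter", effect="NoSchedule"),
]

print(schedulable(regular_pod, ARBITER_TAINTS))  # False
print(schedulable(storage_pod, ARBITER_TAINTS))  # True
```

Keep in mind that a toleration only permits scheduling on the arbiter; it does not force it. Pinning a pod to the arbiter would additionally need a node selector or node affinity.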

Unified data services at the edge

For many edge workloads, especially those leveraging virtualized infrastructure, true high availability requires a robust software-defined storage (SDS) layer that can persist and protect data regardless of which node is running the application. This is where Portworx by Pure Storage comes in, providing essential services like synchronous disaster recovery (DR) and automated data management.

The integration of OpenShift with OpenShift Virtualization and Portworx delivers a powerful, unified platform for virtual machines (VMs) and containers from a single control plane. This hyperconverged Kubernetes-native storage platform brings high availability, disaster recovery, and performance to edge environments, all without dedicated SAN or external storage arrays.

Key benefits of using two-node OpenShift with arbiter and Portworx include:

- Dual-quorum maintenance: The small-footprint appliance that serves as the arbiter maintains quorum for both the OpenShift and Portworx control planes. This helps ensure platform and storage resilience with minimal hardware. Portworx requires only an additional 4 vCPU (x86) and 4 GB of RAM on each node, including the arbiter.

- Data resiliency: Portworx uses a replication factor of 2 across the two main worker nodes. Portworx provides persistent storage for containerized apps and virtual machines managed by OpenShift Virtualization, ensuring data integrity for both types of workloads.

- Data services: Portworx provides unified VM and container data management benefits to the edge, helping organizations:

- Architect data resiliency with zero recovery point objective (RPO) disaster recovery

- Automate development and operations with self-service storage and automated capacity management

- Protect application data with container-granular and VM file-level backups, and ransomware protection
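As a sizing illustration, the Portworx overhead can be added to the minimal arbiter footprint given earlier (2 vCPU and 8 GB of RAM). The Python sketch below assumes the two sets of figures are simply additive, based on the statement that the requirements of extra components must be added to the arbiter. Disk sizing for Portworx is not covered here, so it is left out. The Resources type is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    vcpu: int
    ram_gb: int

    def __add__(self, other: "Resources") -> "Resources":
        return Resources(self.vcpu + other.vcpu, self.ram_gb + other.ram_gb)


# Figures quoted in this article; additivity is an assumption.
ARBITER_BASE = Resources(vcpu=2, ram_gb=8)
PORTWORX_OVERHEAD = Resources(vcpu=4, ram_gb=4)

arbiter_with_portworx = ARBITER_BASE + PORTWORX_OVERHEAD
print(arbiter_with_portworx)
# Resources(vcpu=6, ram_gb=12)
```

Under these assumptions, an arbiter that also participates in Portworx quorum would need roughly 6 vCPU and 12 GB of RAM, which is still far smaller than a full-sized node.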

By handling data for VMs, disks, and containers simultaneously, Portworx helps organizations modernize at the edge while preserving investments in existing virtualized applications. Portworx helps you maintain the Day 0, Day 1, and Day 2 storage and data management capabilities you expect (synchronous DR, automated capacity management, seamless backup and restore).

Portworx 3.5.0 supports two-node OpenShift with arbiter as part of OpenShift 4.20, making this resilient, cost-optimized edge solution available for production deployments.

Cost efficiency and resilience for the open hybrid cloud

The combination of two-node OpenShift with arbiter and Portworx by Pure Storage directly addresses the edge dilemma by reducing node count without sacrificing data integrity or workload availability. It provides a powerful foundation for running stateful, mission-critical applications at scale, ensuring consistent storage management, and achieving near-zero RPO (recovery point objective) for business continuity. The future of edge infrastructure is lean, resilient, and ready for modern containerized and virtualized workloads.

To learn more about how Red Hat OpenShift and Portworx can scale and protect your stateful applications at the edge, explore these OpenShift edge resources. You can also contact the Red Hat OpenShift team to inquire about two-node OpenShift with arbiter.

