Red Hat OpenShift sandboxed containers, built on Kata Containers, now provide the additional capability to run confidential containers (CoCo). Confidential Containers are containers deployed within an isolated hardware enclave protecting data and code from privileged users such as cloud or cluster administrators. The CNCF Confidential Containers project is the foundation for the OpenShift CoCo solution. You can read more about the CNCF CoCo project in our previous blog What is the Confidential Containers project?

Confidential Containers are available from OpenShift sandboxed containers release version 1.7.0 as a tech preview on Azure cloud for both Intel TDX and AMD SEV-SNP. The tech preview also includes support for confidential containers on IBM Z and LinuxONE using Secure Execution for Linux (IBM SEL). Future releases will support bare metal deployments and additional public clouds.

Note that CoCo is an additional feature provided by OpenShift sandboxed containers, and consequently, it's available through the OpenShift sandboxed containers operator.

We are also introducing a new operator, the confidential compute attestation operator, which can verify the trustworthiness of TEEs remotely. Later in the blog, we will describe more details about this operator and how CoCo uses it.

An overview of OpenShift confidential containers

Why would you need confidential containers?

OpenShift sandboxed containers (OSC) provide additional isolation for OpenShift workloads (pods):

- Isolation between workloads: This makes sure workloads cannot interfere with each other even when granted elevated privileges, as CI/CD workloads often require. You may also hear the term pod-sandboxing to describe this capability.

- Isolating the cluster from the workload: This makes sure the workload can’t perform any operations on the actual cluster such as accessing the OpenShift nodes.

Confidential Containers (CoCo) extend OSC to address a new type of isolation:

- Isolate the workload from the cluster: This makes sure that the cluster admin and the infra admin cannot see or tamper with the workload and its data. You get data in use protection for your workloads.

Why does this matter?

Today there are existing tools to protect your data at rest (encrypting your disk) and data in transit (securing your connection). However, there is a gap in protecting your workload when it’s running (data in use), such as running an AI model that is your secret sauce or sending your customer’s private data to your LLM for inferencing. Confidential containers solve this problem by protecting your data in use.

When you deploy your workload on infrastructure owned by someone else, CoCo significantly reduces the risk of unauthorized entities (such as infrastructure admins, infrastructure providers, or privileged programs) accessing your workload data, extracting your secrets or intellectual property (IP), or tampering with your application code.

The following image shows the different types of isolation provided by OSC and its new CoCo functionality. The OpenShift sandboxed containers operator is not included in the diagram to keep it simple:

Confidential containers are based on Confidential Computing

Confidential Computing helps protect your data in use by leveraging dedicated hardware-based solutions. Using hardware, you can create isolated environments which are owned by you and help protect against unauthorized access or changes to your workload's data while it’s being executed (data in use). This is especially important when handling sensitive information or operating in regulated industries.

The hardware used for creating confidential environments includes Intel TDX, AMD SEV-SNP, IBM SEL on IBM Z and LinuxONE and more. The problem is that these technologies are complicated and require a deep understanding to use and deploy.

Confidential containers aim to simplify things by providing cloud-native solutions for these technologies.

Confidential containers enable cloud-native confidential computing using a number of hardware platforms and supporting technologies. CoCo aims to standardize confidential computing at the pod level and simplify its consumption in Kubernetes environments. By doing so, Kubernetes users can deploy CoCo workloads using their familiar workflows and tools without needing a deep understanding of the underlying confidential containers technologies.

Using CoCo you can deploy workloads on shared infrastructure while reducing the risk of unauthorized access to your workload and data.

So how does this magic happen in practice?

CoCo integrates Trusted Execution Environment (TEE) infrastructure with the cloud-native world. A TEE is at the heart of a confidential computing solution. TEEs are isolated environments with enhanced security (e.g. runtime memory encryption, integrity protection), provided by confidential computing-capable hardware. A special virtual machine (VM) called a confidential virtual machine (CVM), which executes inside the TEE, is the foundation for the OpenShift CoCo solution.

Now, let's connect this to OSC. OSC sandboxes workloads (pods) using VMs and when using CVMs, OSC now provides confidential container capabilities for your workloads. When you create a CoCo workload, OSC creates a CVM which executes inside the TEE provided by confidential compute-capable hardware, and deploys the workload inside the CVM. The CVM prevents anyone who isn’t the workload's rightful owner from accessing or even viewing what happens inside it.
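To make the "familiar workflows" point concrete, here is a minimal sketch of how a CoCo workload could be requested: an ordinary pod manifest whose runtime class selects the VM-backed runtime. The runtime class name `kata-remote`, the pod name, and the image URL are assumptions for illustration only; check the runtime classes actually installed on your cluster.

```python
import json


def coco_pod_manifest(name: str, image: str, runtime_class: str = "kata-remote") -> dict:
    """Build a pod manifest that asks for a sandboxed (VM-backed) runtime.

    The default runtime class name is an assumption, not a guaranteed value.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # The runtime class is the only CoCo-specific part of this sketch;
            # everything else is a regular Kubernetes pod definition.
            "runtimeClassName": runtime_class,
            "containers": [{"name": name, "image": image}],
        },
    }


if __name__ == "__main__":
    # Hypothetical pod name and image, used only for illustration.
    manifest = coco_pod_manifest("coco-demo", "registry.example.com/demo:latest")
    print(json.dumps(manifest, indent=2))
```

Because the result is a plain pod definition, it can be applied with the usual tooling. The rest of the application manifest does not need to know about the underlying TEE.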

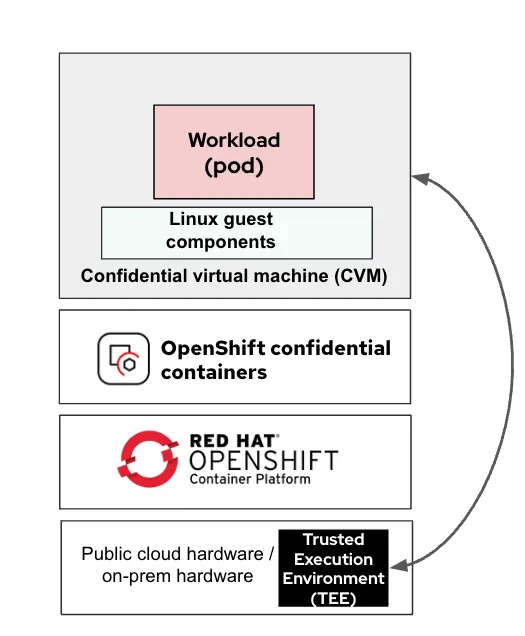

The following image shows the connection between a workload (pod) deployed on a CVM that runs inside the TEE provided in the public cloud hardware or on-prem hardware:

For additional information on CVMs we recommend reading our series, Learn about Red Hat Confidential Virtual Machines.

Confidential containers rely on attestation

An essential aspect of the confidential containers solution—especially in the context of the zero trust security model—is attestation. Before deploying your workload as a confidential container, you need a method to ensure the TEE is trusted. Attestation is the process used to verify that a TEE, where the workload will run (e.g. in a specific public cloud) or where you want to send confidential information, is indeed trusted.

The combination of TEEs and attestation capability enables the CoCo solution to provide a trusted environment to run workloads and technically enforce the protection of code and data from unauthorized access by privileged entities.

In the CoCo solution, the Trustee project (part of the CNCF Confidential Containers project) provides the capability of attestation. It’s responsible for performing the attestation operations and delivering secrets after successful attestation. For additional information on Trustee we recommend reading our previous article, Introducing Confidential Containers Trustee: Attestation Services Solution Overview and Use Cases.

Trustee contains, among others, the following key components:

- Trustee agents: These components run inside the CVM. This includes the Attestation Agent (AA), which is responsible for sending the evidence (claims) from the TEE to prove the environment's trustworthiness.

- Key Broker Service (KBS): This service is the entrypoint for remote attestation. It forwards the evidence (claims) from AA to the Attestation Service (AS) for verification and, upon successful verification, enables the delivery of secrets to the TEE.

- Attestation Service (AS): This service validates the TEE evidence.
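The division of labor between these three components can be sketched as a toy model. This is not the Trustee API: the class names, the `Evidence` fields, and the nonce challenge are hypothetical, and real evidence is signed by the TEE hardware, which this sketch omits. It only illustrates the flow described above: the AA collects evidence, the KBS forwards it to the AS, and a secret is released only after successful verification.

```python
import hashlib
import secrets
from dataclasses import dataclass


@dataclass(frozen=True)
class Evidence:
    """Claims sent by the Attestation Agent from inside the CVM (toy model)."""
    tee_type: str     # illustrative label such as "tdx" or "snp"
    measurement: str  # digest standing in for the TEE launch measurement
    nonce: str        # freshness challenge issued by the KBS


class AttestationService:
    """AS: validates evidence against known-good reference values."""

    def __init__(self, reference_measurements):
        self._reference = set(reference_measurements)

    def verify(self, evidence: Evidence) -> bool:
        return evidence.measurement in self._reference


class KeyBrokerService:
    """KBS: entry point that forwards evidence to the AS and releases secrets."""

    def __init__(self, attestation_service, secret_store):
        self._as = attestation_service
        self._secrets = dict(secret_store)
        self._nonces = set()

    def challenge(self) -> str:
        nonce = secrets.token_hex(16)
        self._nonces.add(nonce)
        return nonce

    def request_secret(self, evidence: Evidence, name: str) -> str:
        # Each nonce is single-use, so replayed evidence is rejected.
        if evidence.nonce not in self._nonces:
            raise PermissionError("unknown or reused nonce")
        self._nonces.discard(evidence.nonce)
        if not self._as.verify(evidence):
            raise PermissionError("attestation failed")
        return self._secrets[name]


class AttestationAgent:
    """AA: runs inside the CVM and gathers evidence about its environment."""

    def __init__(self, tee_type: str, guest_image: bytes):
        self._tee_type = tee_type
        self._measurement = hashlib.sha256(guest_image).hexdigest()

    def collect_evidence(self, nonce: str) -> Evidence:
        return Evidence(self._tee_type, self._measurement, nonce)


if __name__ == "__main__":
    trusted_image = b"trusted-guest-image"  # hypothetical reference guest
    reference = {hashlib.sha256(trusted_image).hexdigest()}
    kbs = KeyBrokerService(AttestationService(reference), {"db-password": "s3cret"})
    agent = AttestationAgent("tdx", trusted_image)
    print(kbs.request_secret(agent.collect_evidence(kbs.challenge()), "db-password"))
```

An agent started from a different guest image produces a different measurement, so `request_secret` raises `PermissionError` and the secret never leaves the KBS.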

The following diagram shows how Trustee components interact in the OpenShift CoCo solution:

Note the following:

- The TEE is in the untrusted environment where your workload runs. It extends the trust from your trusted environment to the untrusted environment.

- You must deploy the Trustee services in an environment that you fully trust, whether on-premise or in a dedicated cloud environment.

The confidential compute attestation operator

This new confidential compute attestation operator is part of the OpenShift CoCo solution. It helps to deploy and manage Trustee services in an OpenShift cluster.

It provides a custom resource called KbsConfig to configure the required Trustee services, such as KBS, AS, etc. Additionally, it simplifies management of secrets for confidential containers.

The following diagram shows how this operator connects Trustee to OpenShift:

As illustrated in the diagram, the confidential compute attestation operator needs to run in a trusted environment to safeguard the integrity and security of critical services, such as the AS and KBS. These are important for verifying and maintaining the trustworthiness of the TEE.

We recommend the following when deploying this operator:

- Deploy in an OpenShift cluster running in a trusted environment: Use an existing, secured cluster, such as your secure software supply chain environment, to provide a trusted foundation.

- Integrate existing key management systems and connect them to the KBS, e.g. via the External Secrets Operator or Secrets Store CSI driver.

Bringing it all together

The following diagram shows a typical deployment of the OSC CoCo solution in an OpenShift cluster running on Azure while the confidential compute attestation operator is deployed in a separate trusted environment:

As shown in the diagram, OpenShift confidential containers use a CVM that executes inside a TEE as their foundation. The containers run inside the CVM to protect data confidentiality and integrity.

Note the following:

- OpenShift confidential containers use a CVM that executes inside a TEE to protect data confidentiality and integrity

- The Linux guest components are responsible for downloading workload (pod) images and running them inside the CVM

- The containerized workload (pod) running in the CVM benefits from encrypted memory and integrity guarantees provided by the TEE

- Trustee agents run in the CVM, performing attestation and obtaining required secrets

- The cluster where the pod runs is an untrusted environment; the only trusted components are the CVM and the components running inside it

- The confidential compute attestation operator runs in a trusted environment which is different from the one where the workload (pod) is running. This is your trust anchor to verify the trustworthiness of the TEE.

- Attestation verifies the trustworthiness of the TEE before the workload runs and gains access to the secrets

Summary

OpenShift confidential containers add a robust additional layer of security, helping make sure your data remains safe even while it’s being used. This protection of data in use means that not even privileged users, like cluster or infrastructure admins, can access your data without permission. This solution leverages hardware-based TEEs combined with attestation and key management through Trustee services, all orchestrated by the OpenShift confidential compute attestation operator.

Deploying the OpenShift CoCo solution requires a trusted environment running the confidential compute attestation operator to provide the attestation and key management services.

OpenShift confidential containers are also designed to integrate seamlessly with other OpenShift solutions such as OpenShift AI and OpenShift Pipelines.

In our next article we will explore the ecosystem around the confidential compute attestation operator and look at practical use cases that highlight the advantages of OpenShift confidential containers.

About the authors

Pradipta is working in the area of confidential containers to enhance the privacy and security of container workloads running in the public cloud. He is one of the project maintainers of the CNCF confidential containers project.

Jens Freimann is a Software Engineering Manager at Red Hat with a focus on OpenShift sandboxed containers and Confidential Containers. He has been with Red Hat for more than six years, during which he has made contributions to low-level virtualization features in QEMU, KVM and virtio(-net). Freimann is passionate about Confidential Computing and has a keen interest in helping organizations implement the technology. Freimann has over 15 years of experience in the tech industry and has held various technical roles throughout his career.
