Kubeflow is an open source machine learning toolkit for Kubernetes. It bundles popular ML/DL frameworks such as TensorFlow, MXNet, and PyTorch, along with tools such as the Katib hyperparameter tuner, with a single deployment binary. By running Kubeflow on Red Hat OpenShift Container Platform, you can quickly operationalize a robust machine learning pipeline. However, the software stack is only part of the picture. You also need high-performance servers, storage, and accelerators to deliver the stack’s full capability. To that end, Dell EMC and Red Hat’s Artificial Intelligence Center of Excellence recently collaborated on two whitepapers about sizing hardware for Kubeflow on OpenShift.

The first whitepaper is called “Machine Learning Using the Dell EMC Ready Architecture for Red Hat OpenShift Container Platform.” It describes how to deploy Kubeflow 0.5 and OpenShift Container Platform 3.11 on Dell PowerEdge servers. The paper builds on Dell’s Ready Architecture for OpenShift Container Platform 3.11, a prescriptive architecture for running OpenShift Container Platform on Dell hardware. The ready architecture includes a bill of materials for ordering the exact servers, storage, and switches it uses. The machine learning whitepaper extends the ready architecture with workload-specific recommendations and settings. It also includes instructions for configuring OpenShift and validating Kubeflow with a distributed TensorFlow training job.
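As a rough illustration of what such a validation job can look like, the sketch below builds a TFJob manifest as a plain Python dictionary and prints it as JSON. It is not taken from the whitepaper. The job name, the container image, the replica counts, and the default `api_version` string are all assumptions made for this example, so check which TFJob API version your Kubeflow release actually serves before using it.

```python
import json


def build_tfjob(name, image, workers=2, ps=1, api_version="kubeflow.org/v1beta2"):
    """Return a TFJob manifest as a plain dict.

    api_version is an assumption; it depends on the installed Kubeflow release.
    """

    def replica(count):
        # One replica group (parameter servers or workers) sharing the same image.
        return {
            "replicas": count,
            "restartPolicy": "OnFailure",
            "template": {
                "spec": {
                    "containers": [
                        # The TFJob operator expects the training container
                        # to be named "tensorflow".
                        {"name": "tensorflow", "image": image}
                    ]
                }
            },
        }

    return {
        "apiVersion": api_version,
        "kind": "TFJob",
        "metadata": {"name": name},
        "spec": {
            "tfReplicaSpecs": {
                "PS": replica(ps),
                "Worker": replica(workers),
            }
        },
    }


if __name__ == "__main__":
    # Hypothetical job name and image, used only for illustration.
    manifest = build_tfjob("tf-smoke-test", "example.com/tf-benchmarks:latest")
    print(json.dumps(manifest, indent=2))
```

Because the API server accepts JSON as well as YAML, the printed manifest can be saved to a file and submitted with `oc apply -f`.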

Kubeflow is developed on upstream Kubernetes, which lacks many of the security features enabled in OpenShift Container Platform by default. Several of OpenShift Container Platform’s default security controls are relaxed in this whitepaper to get Kubeflow up and running. Additional steps might be required to meet your organization’s security standards for running Kubeflow on OpenShift Container Platform in production. These steps may include defining cluster roles with appropriate permissions for the Kubeflow services, adding finalizers to Kubeflow resources for reconciliation, or creating liveness probes for Kubeflow pods.
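To make two of these hardening steps concrete, here is a minimal sketch that generates a narrowly scoped ClusterRole and a liveness probe fragment as Python dictionaries. It does not come from the whitepaper. The role name, the choice of `tfjobs` as the resource, the `/healthz` path, and port 8080 are hypothetical and would need to match the services you actually run.

```python
import json


def cluster_role(name, api_group, resources, verbs):
    """Return a ClusterRole manifest granting only the listed verbs."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRole",
        "metadata": {"name": name},
        "rules": [
            {
                "apiGroups": [api_group],
                "resources": list(resources),
                "verbs": list(verbs),
            }
        ],
    }


def http_liveness_probe(path, port, initial_delay=30, period=10):
    """Return a livenessProbe fragment to merge into a container spec."""
    return {
        "livenessProbe": {
            "httpGet": {"path": path, "port": port},
            "initialDelaySeconds": initial_delay,
            "periodSeconds": period,
        }
    }


if __name__ == "__main__":
    # Hypothetical read-only role for TFJob resources.
    role = cluster_role("tfjob-viewer", "kubeflow.org", ["tfjobs"], ["get", "list", "watch"])
    # Hypothetical health endpoint; the real path and port depend on the pod.
    probe = http_liveness_probe("/healthz", 8080)
    print(json.dumps(role, indent=2))
    print(json.dumps(probe, indent=2))
```

Granting only `get`, `list`, and `watch` keeps the example role read-only; a service that creates or deletes jobs would need additional verbs.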

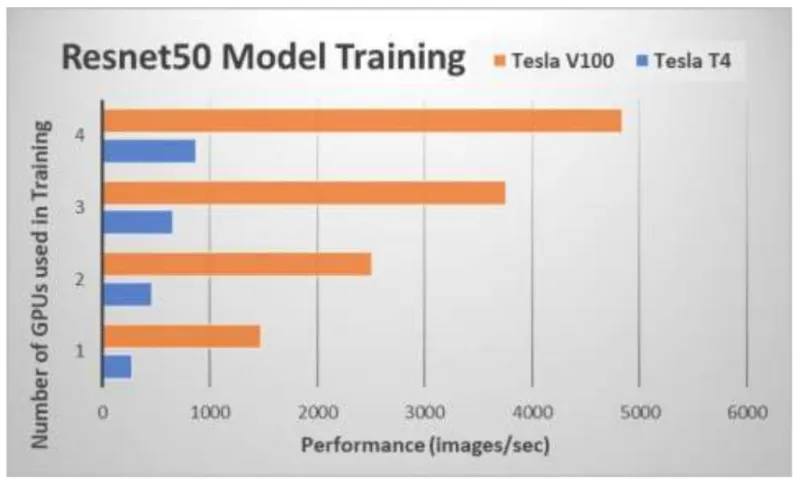

The second whitepaper is called “Executing ML/DL Workloads Using Red Hat OpenShift Container Platform v3.11.” It explains how to leverage NVIDIA GPUs with Kubeflow for best performance on inferencing and training jobs. The hardware profile used in this whitepaper is similar to the ready architecture used in the first paper, except that the servers are outfitted with NVIDIA Tesla GPUs. The architecture uses two GPU models. The OpenShift worker nodes have NVIDIA Tesla T4 GPUs. Based on the Turing architecture, the T4s deliver excellent inference performance in a 70-watt power profile. The storage nodes have NVIDIA Tesla V100 GPUs. The V100 is a state-of-the-art data center GPU. Based on the Volta architecture, the V100s are deep learning workhorses for both training and inference.

The researchers compared the two GPU models by training the ResNet-50 TensorFlow benchmark on each; the results are shown in the figure above. Not surprisingly, the Tesla V100s outperformed the T4s when training. They have double the compute capability (both in terms of FP64 and Tensor Cores) along with higher memory bandwidth due to the HBM2 memory subsystem. But the T4s should give better performance per watt than the V100s when running less floating-point-intensive tasks, particularly inferencing in mixed precision.
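The performance-per-watt trade-off can be reasoned about with simple arithmetic. The sketch below is only an illustration: the 70 W figure for the T4 comes from the text above, while the 250 W board power used for the V100 is an assumption (it varies by card variant) and no throughput numbers from the whitepaper are used.

```python
def perf_per_watt(throughput, watts):
    """Throughput (for example, images per second) delivered per watt."""
    if watts <= 0:
        raise ValueError("watts must be positive")
    return throughput / watts


def break_even_speedup(low_power_watts, high_power_watts):
    """Speedup the higher-power GPU needs to match the lower-power GPU's efficiency."""
    if low_power_watts <= 0 or high_power_watts <= 0:
        raise ValueError("power values must be positive")
    return high_power_watts / low_power_watts


if __name__ == "__main__":
    t4_watts = 70.0     # from the article
    v100_watts = 250.0  # assumption: depends on the V100 variant installed
    ratio = break_even_speedup(t4_watts, v100_watts)
    print(f"V100 must be at least {ratio:.2f}x faster to match T4 efficiency")
```

Under these assumed power figures, a workload on which the V100 is less than roughly 3.6 times faster than the T4 would favor the T4 on efficiency, which is consistent with the expectation that lighter inference tasks suit the T4.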

These whitepapers make it easier for you to select hardware for running Kubeflow on premises. Dell and Red Hat are continuing to collaborate on updating these documents for the latest version of Kubeflow and for OpenShift Container Platform 4.

About the author

Red Hatter since 2018, technology historian and founder of The Museum of Art and Digital Entertainment. Two decades of journalism mixed with technology expertise, storytelling and oodles of computing experience from inception to ewaste recycling. I have taught or had my work used in classes at USF, SFSU, AAU, UC Law Hastings and Harvard Law.

I have worked with the EFF, Stanford, MIT, and Archive.org to brief the US Copyright Office and change US copyright law. We won multiple exemptions to the DMCA, accepted and implemented by the Librarian of Congress. My writings have appeared in Wired, Bloomberg, Make Magazine, SD Times, The Austin American Statesman, The Atlanta Journal Constitution and many other outlets.

I have been written about by the Wall Street Journal, The Washington Post, Wired and The Atlantic. I have been called "The Gertrude Stein of Video Games," an honor I accept, as I live less than a mile from her childhood home in Oakland, CA. I was project lead on the first successful institutional preservation and rebooting of the first massively multiplayer game, Habitat, for the C64, from 1986: https://neohabitat.org . I've consulted and collaborated with the NY MOMA, the Oakland Museum of California, Cisco, Semtech, Twilio, Game Developers Conference, NGNX, the Anti-Defamation League, the Library of Congress and the Oakland Public Library System on projects, contracts, and exhibitions.
