Red Hat Enterprise Linux (RHEL) system administrators and developers have long relied on a specific set of tools to diagnose issues, combined with years of accumulated intuition and experience. But as environments grow more complex, the cognitive load required to effectively decipher logs and troubleshoot issues has been increasing.

Today, we are excited to announce the developer preview of a new Model Context Protocol (MCP) server for RHEL. This new MCP server is designed to bridge the gap between RHEL and Large Language Models (LLMs), enabling a new era of smarter troubleshooting.

What is the MCP server for RHEL?

MCP is an open standard that allows AI models to interact with external data and systems. It was originally released by Anthropic and, in December 2025, donated to the Linux Foundation's Agentic AI Foundation. The new MCP server for RHEL, now in developer preview, uses this protocol to give AI applications that support MCP, such as Claude Desktop or goose, direct, context-aware access to RHEL.
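
MCP messages are JSON-RPC 2.0 requests and responses: a client discovers a server's tools with a `tools/list` request and invokes one with `tools/call`. Clients such as goose build these messages for you, so the sketch below only illustrates their shape. The tool name `get_system_info` and the helper `make_request` are hypothetical; the tool names actually exposed by the MCP server for RHEL are listed in its documentation.

```python
import json

def make_request(request_id, method, params=None):
    """Build a JSON-RPC 2.0 request, the message format MCP uses."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)

# Ask the server which tools it offers.
list_tools = make_request(1, "tools/list")

# Invoke one tool by name; "get_system_info" is a hypothetical tool name.
call_tool = make_request(2, "tools/call", {"name": "get_system_info", "arguments": {}})

print(list_tools)
print(call_tool)
```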

We have previously released MCP servers for Red Hat Lightspeed and Red Hat Satellite that enable a number of exciting use cases. This new MCP server builds on those use cases and is purpose-built for deep-dive troubleshooting on RHEL systems.

Enabling smarter troubleshooting

Connecting your LLM to RHEL with the new MCP server enables use cases such as:

- Intelligent log analysis: Sifting through log data is tedious. The MCP server allows LLMs to ingest and analyze RHEL system logs. This capability enables AI-driven root cause analysis and anomaly detection, helping you to turn raw log data into actionable intelligence.

- Performance analysis: The MCP server can access the number of CPUs, the load average, memory information, and the CPU and memory usage of running processes. With this data, an LLM can analyze the current state of the system, identify potential performance bottlenecks, and make other performance-related recommendations.
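
The sketch below illustrates the kind of raw data these two use cases draw on; it is not code from the MCP server, which may collect this information differently. It assumes a Linux host, log entries in the one-JSON-object-per-line format printed by `journalctl -o json`, and an error threshold of syslog priority 3 (`err`). The function names are hypothetical.

```python
import json
import os
from collections import Counter

def count_errors_by_unit(journal_lines):
    """Count entries at syslog priority err (3) or more severe, grouped by systemd unit.

    Expects one JSON object per line, as printed by `journalctl -o json`.
    """
    counts = Counter()
    for line in journal_lines:
        if not line.strip():
            continue  # skip blank lines
        entry = json.loads(line)
        # journalctl emits PRIORITY as a string: 0=emerg, 3=err, 6=info, 7=debug.
        if int(entry.get("PRIORITY", 6)) <= 3:
            counts[entry.get("_SYSTEMD_UNIT", "unknown")] += 1
    return counts

def parse_meminfo(text):
    """Parse /proc/meminfo text into {field: first numeric value} (kB for most fields)."""
    info = {}
    for line in text.splitlines():
        key, _, rest = line.partition(":")
        fields = rest.split()
        if fields:
            info[key] = int(fields[0])
    return info

def snapshot():
    """Collect CPU count, load average, and memory use from the local Linux host."""
    with open("/proc/meminfo") as f:
        mem = parse_meminfo(f.read())
    return {
        "cpus": os.cpu_count(),
        "load_avg": os.getloadavg(),  # 1-, 5-, and 15-minute averages
        "mem_used_pct": 100 * (1 - mem["MemAvailable"] / mem["MemTotal"]),
    }
```

For example, feeding the lines printed by `journalctl -o json --since today` into `count_errors_by_unit` yields per-unit error counts, the kind of signal an LLM can then correlate with load and memory figures.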

To help you explore these new capabilities more safely, this developer preview focuses on read-only MCP enablement. The MCP server lets an LLM inspect and recommend, and it uses standard SSH keys for authentication. It can also be configured with an allow list for log file access and log level access. The MCP server does not provide open shell access to your RHEL system, because the commands it runs are pre-vetted.
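
A read-only design like this can be approximated with two checks: a fixed table of vetted commands that are run as argument lists rather than through a shell, and an allow list that resolves a requested log path before comparing it against permitted directories, so `..` segments and symlinks cannot escape it. This is a minimal sketch under those assumptions; the tool names, the `/var/log` allow list, and the helper functions are hypothetical and do not reflect the server's actual configuration format.

```python
from pathlib import Path

# Hypothetical allow list; resolve it too so symlinked parents (e.g. /var) compare correctly.
ALLOWED_LOG_DIRS = [Path("/var/log").resolve()]

# Hypothetical vetted, read-only commands keyed by tool name. Each is a fixed
# argument list meant to be run without a shell (e.g. subprocess.run(argv)),
# so a caller cannot append extra commands.
VETTED_COMMANDS = {
    "uptime": ["uptime"],
    "disk_usage": ["df", "-h"],
    "failed_units": ["systemctl", "--failed", "--no-pager"],
}

def is_log_path_allowed(path: str) -> bool:
    """True only if path, after resolving '..' and symlinks, lies inside an allowed dir."""
    resolved = Path(path).resolve()
    return any(resolved.is_relative_to(d) for d in ALLOWED_LOG_DIRS)

def command_for(tool: str) -> list[str]:
    """Return a copy of the vetted argv for tool; reject anything not in the table."""
    if tool not in VETTED_COMMANDS:
        raise PermissionError(f"tool {tool!r} is not allowed")
    return list(VETTED_COMMANDS[tool])
```

Keeping the command table closed means new capabilities require a deliberate code change rather than a cleverly worded prompt.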

Example use cases

In these examples, I'm using the goose AI agent, along with the MCP server to work with one of my RHEL 10 systems named rhel10.example.com. Goose supports a number of LLM providers, including hosted online providers as well as locally hosted providers. I'm using a locally hosted model.

I've installed goose and the MCP server on my Fedora workstation, with SSH key authentication set up with rhel10.example.com.
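
Key-based SSH access is typically set up with `ssh-keygen` and `ssh-copy-id`. A program that runs commands over that connection can pass `-o BatchMode=yes` so that ssh fails instead of prompting for a password when key authentication is not working. The sketch below assumes that approach; `build_ssh_argv` and `run_remote` are hypothetical helpers, not part of the MCP server. Because ssh hands the remote command to the remote user's shell, the arguments are quoted with `shlex.join`.

```python
import shlex
import subprocess

def build_ssh_argv(host: str, remote_argv: list[str]) -> list[str]:
    """Build an ssh command line that relies only on key-based authentication."""
    return [
        "ssh",
        "-o", "BatchMode=yes",      # never prompt for a password; fail if key auth is not set up
        "-o", "ConnectTimeout=10",  # give up quickly on unreachable hosts
        host,
        shlex.join(remote_argv),    # ssh hands this string to the remote shell, so quote it
    ]

def run_remote(host: str, remote_argv: list[str]) -> str:
    """Run a command on host over ssh and return its standard output."""
    result = subprocess.run(
        build_ssh_argv(host, remote_argv),
        capture_output=True, text=True, timeout=30, check=True,
    )
    return result.stdout
```

With a working key for the host, `run_remote("rhel10.example.com", ["uptime"])` returns the remote `uptime` output; without one, it raises `subprocess.CalledProcessError` instead of hanging on a password prompt.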

I'll start with a prompt asking the LLM to help me check the health of the rhel10.example.com system:

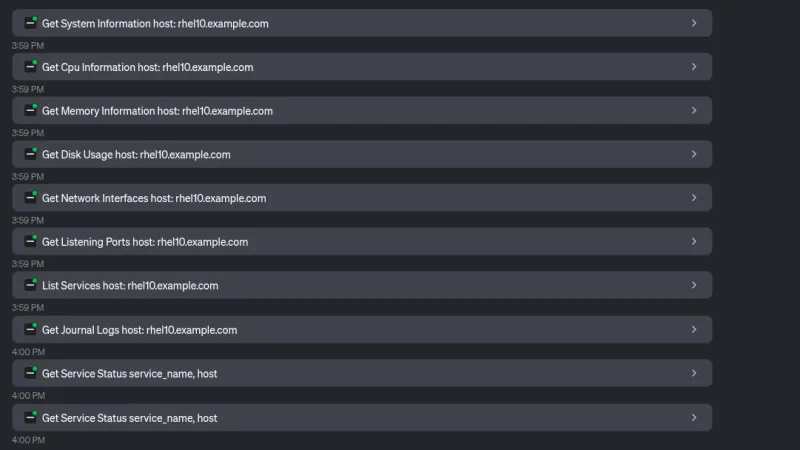

The LLM utilizes a number of tools provided by the MCP server to collect system information:

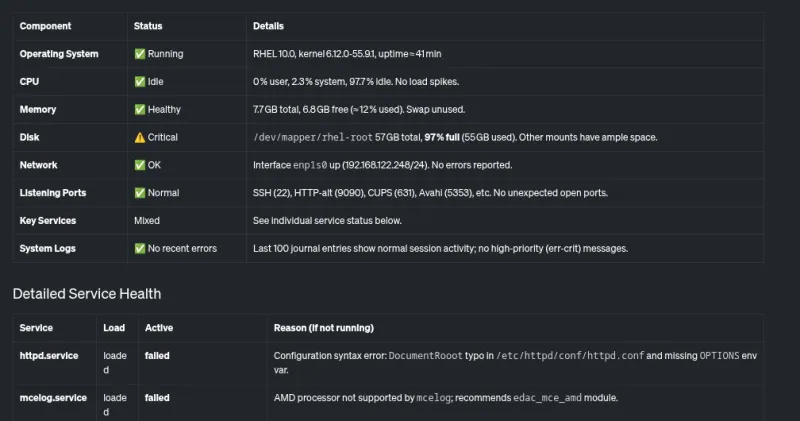

Based on this, the LLM provides an overview of the system and its health, including a summary table.

In addition, it provides a summary noting critical issues that need to be addressed, such as the nearly full root filesystem and a couple of services that are failing on the system.
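
A check for a nearly full filesystem reduces to comparing used space against a threshold. This sketch uses Python's `shutil.disk_usage`; the 90 percent cutoff and the helper names are assumptions. Because `df` calculates `Use%` as used / (used + available), excluding blocks reserved for root, its figure can read slightly higher than the one computed here.

```python
import shutil

def usage_percent(path: str = "/") -> float:
    """Percentage of the filesystem containing path that is in use."""
    usage = shutil.disk_usage(path)  # named tuple (total, used, free), in bytes
    return 100 * usage.used / usage.total

def is_nearly_full(path: str = "/", threshold: float = 90.0) -> bool:
    """Flag a filesystem at or above threshold percent used (90 is an assumed cutoff)."""
    return usage_percent(path) >= threshold
```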

Let's examine the issues identified. I'll ask the LLM to help me determine why disk usage is so high.

The LLM uses tools provided by the MCP server to determine what's using the most disk space.

Based on this, the LLM determines that the /home/brian/virtual-machines directory contains a 25 GB file and that the /home/brian/.local directory is using 24 GB of space.
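
Finding what consumes disk space amounts to summing file sizes per directory, much as `du` does. The sketch below ranks the immediate subdirectories of a path by total size. `largest_subdirs` is a hypothetical helper, and it sums apparent file sizes, so sparse files such as some virtual machine disk images can appear larger than the blocks they actually occupy, which is what `du` reports by default.

```python
import os

def largest_subdirs(root: str, top_n: int = 5) -> list[tuple[str, int]]:
    """Rank the immediate subdirectories of root by total apparent file size in bytes."""
    totals = {}
    for entry in os.scandir(root):
        if not entry.is_dir(follow_symlinks=False):
            continue  # skip regular files and symlinked directories
        size = 0
        for dirpath, _dirnames, filenames in os.walk(entry.path):  # does not follow symlinks
            for name in filenames:
                file_path = os.path.join(dirpath, name)
                if os.path.islink(file_path):
                    continue  # skip symlinks so their targets are not double-counted
                try:
                    size += os.path.getsize(file_path)
                except OSError:
                    pass  # file vanished or is unreadable; skip it
        totals[entry.path] = size
    return sorted(totals.items(), key=lambda kv: kv[1], reverse=True)[:top_n]
```

Calling `largest_subdirs("/home/brian")` returns (path, bytes) pairs, largest first, and includes hidden directories such as `.local`.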

Finally, I'll ask the LLM to help with httpd.service, which was previously reported as failing.
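
Outside the LLM workflow, a unit's state can be checked with `systemctl show`, which prints `KEY=VALUE` lines. The sketch below parses that output; the helper names are hypothetical, and `unit_state` queries the local host, so for a remote system like rhel10.example.com the same `systemctl` command would run over SSH instead.

```python
import subprocess

def parse_show(text: str) -> dict[str, str]:
    """Parse `systemctl show` KEY=VALUE lines; values may themselves contain '='."""
    props = {}
    for line in text.splitlines():
        key, sep, value = line.partition("=")
        if sep:
            props[key] = value
    return props

def unit_state(unit: str) -> dict[str, str]:
    """Query a unit's state on the local host."""
    out = subprocess.run(
        ["systemctl", "show", unit, "--property=ActiveState,SubState,Result", "--no-pager"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_show(out)

def is_failed(props: dict[str, str]) -> bool:
    """True when systemd reports the unit as failed."""
    return props.get("ActiveState") == "failed"
```

If `is_failed(unit_state("httpd.service"))` is true, `journalctl -u httpd.service` shows the unit's recent log messages, which is the kind of evidence the LLM works from below.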

The LLM uses the Read File tool provided by the MCP server:

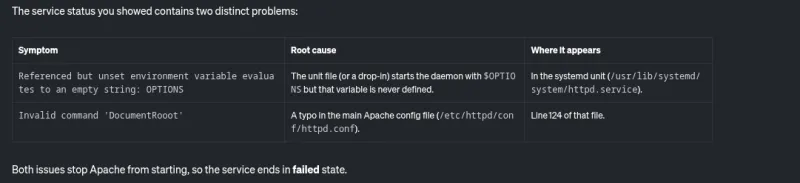

Based on this, the LLM reports potential causes of the httpd.service failure:

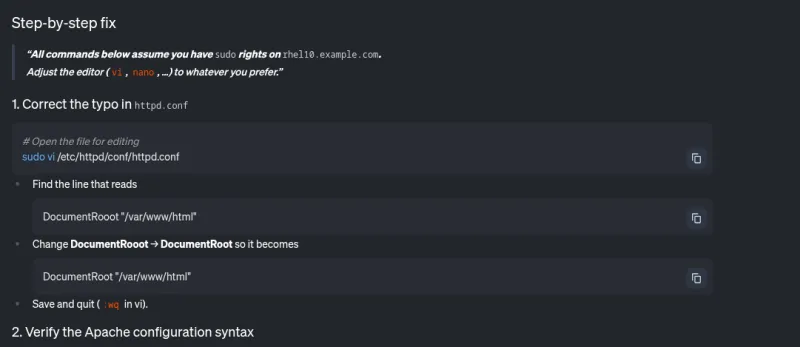

It also provides step-by-step instructions for correcting the problem.

The MCP server for RHEL enabled me to easily identify and troubleshoot potential issues on this system related to an almost full filesystem and a failing httpd service.

What's next?

While we are starting with read-only analysis, our roadmap includes expanding to additional use cases. To follow the development process, keep an eye on our upstream GitHub repository. We welcome upstream contributions! We are also very interested in your feedback, such as enhancement requests and bug reports. You can reach the team on GitHub or through the Fedora AI/ML Special Interest Group (SIG).

Are you ready to experience smarter troubleshooting?

The MCP server for RHEL is available now in developer preview. Connect your LLM client application and see how context-aware AI can change the way you manage RHEL. To get started, refer to the Red Hat documentation and the upstream documentation.


About the authors

Brian Smith is a product manager at Red Hat focused on RHEL automation and management. He has been at Red Hat since 2018, previously working with public sector customers as a technical account manager (TAM).

Máirín Duffy is a Red Hat Distinguished Engineer who leads the Red Hat Enterprise Linux Lightspeed Incubation team at Red Hat and is a passionate advocate for human-centered AI and open source. A recipient of the O’Reilly Open Source Award, Máirín first joined Red Hat as an intern in 2004 and has spent two decades in open source communities, focusing on user experience to expand the reach of open source. A sought-after speaker and author, Máirín holds 19 patents and has authored six open source coloring books, including The SELinux Coloring Book.
