This post was written by: Swati Sehgal, Alexey Perevalov, Killian Muldoon & Francesco Romani

How do you get the most out of your bare-metal hardware? Believe it or not, the physical layout of the resources a workload uses inside a computer, from memory and CPU to storage and I/O, can have a dramatic impact on performance. Until recently, Kubernetes users had no direct way to influence this key interaction between hardware and software, commonly called Resource Topology.

This blog post series describes Topology Aware Scheduling, a feature being rolled out in Kubernetes in 2021. Topology Aware Scheduling enables the Kubernetes control plane to respect Resource Topology constraints when placing Pods on Nodes. This approach complements Topology Manager, the node-level Resource Topology enforcer in the kubelet, which was first introduced as an alpha feature in Kubernetes 1.16, but more on that later.

Why does resource topology matter?

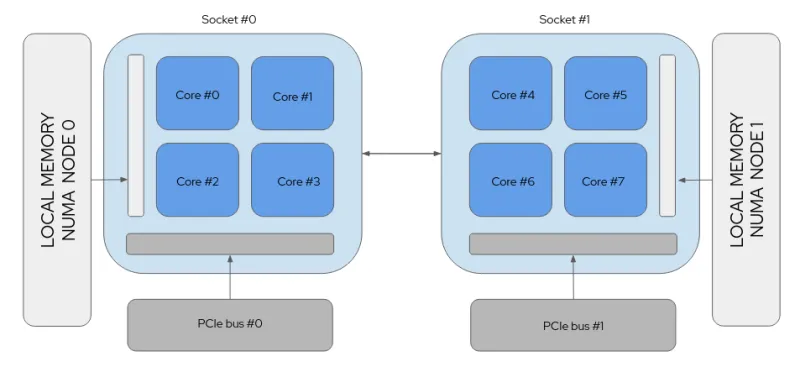

Non-Uniform Memory Access (NUMA) is a compute platform architecture in which different CPUs access different regions of memory at different speeds. The relative locations of CPUs, memory, and PCI devices are what we’re talking about when we say Resource Topology.

This architecture has a major advantage: any CPU core can potentially access all memory on a system. It also has some performance pitfalls. For example, in FIGURE 1, memory closer to CPU core 1 will be quicker to access by CPU core 1 than memory close to CPU core 7.

FIGURE 1: A Non-uniform Memory Access (NUMA) system
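To make the cost difference concrete, here is a minimal Python sketch of a two-zone NUMA system. Everything in it is an assumption for illustration: the eight-core layout (cores 0-3 in zone 0, cores 4-7 in zone 1), the names `CORE_TO_ZONE`, `ZONE_DISTANCE`, and `access_cost`, and the distance values, which only mimic the style of a NUMA distance table where the local value is the baseline.

```python
# Hypothetical layout: 8 cores split evenly across two NUMA zones.
CORE_TO_ZONE = {core: 0 if core < 4 else 1 for core in range(8)}

# Relative access cost between zones. 10 stands for local access;
# the remote value 21 is an invented example, not a measurement.
ZONE_DISTANCE = [
    [10, 21],
    [21, 10],
]


def access_cost(core: int, memory_zone: int) -> int:
    """Return the relative cost for `core` to reach memory in `memory_zone`."""
    return ZONE_DISTANCE[CORE_TO_ZONE[core]][memory_zone]


# Core 1 reaches its own zone's memory more cheaply than core 7's zone.
print(access_cost(1, 0), access_cost(1, 1))
```

On a real Linux host, the equivalent information comes from the platform itself rather than from a hard-coded table.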

It’s straightforward so far, and the underlying operating system will manage most of this, even in a Kubernetes cluster. When you’re trying to squeeze low-latency performance from bare metal, though, you need to dedicate isolated resources to specific applications. As we add new kinds of resources, things get increasingly complicated.

For I/O-constrained workloads, the network interface on a distant NUMA zone slows down how quickly information can reach the application. High-performance workloads, like those running the 5G network, can’t operate to spec under these conditions.

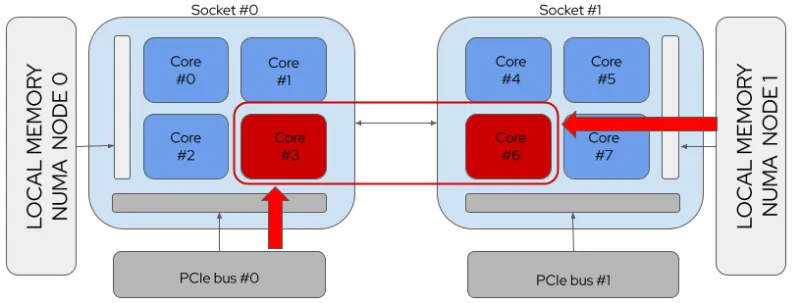

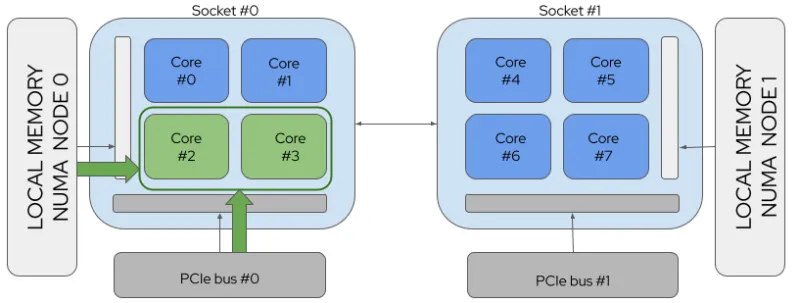

Consider a pod that requests 2 CPUs and a PCI device. FIGURE 2 shows a scenario where those resources are not NUMA aligned, whereas FIGURE 3 shows a scenario where they are:

FIGURE 2: A NUMA System with no Resource Alignment

FIGURE 3: A NUMA System with Resource Alignment
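The difference between the two figures can be expressed as a simple check. The sketch below is illustrative only: the `Allocation` type and `is_numa_aligned` function are hypothetical names, and the zone numbers in the two examples are assumptions, not values read from the figures.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Allocation:
    """Resources handed to one pod (hypothetical model)."""
    cpu_zones: Tuple[int, ...]  # NUMA zone of each CPU assigned to the pod
    device_zone: int            # NUMA zone the PCI device is attached to


def is_numa_aligned(alloc: Allocation) -> bool:
    """True when every assigned CPU sits in the same zone as the device."""
    return set(alloc.cpu_zones) == {alloc.device_zone}


# 2 CPUs in zone 0, device in zone 1: not aligned (the FIGURE 2 situation).
unaligned = Allocation(cpu_zones=(0, 0), device_zone=1)
# 2 CPUs and the device all in zone 1: aligned (the FIGURE 3 situation).
aligned = Allocation(cpu_zones=(1, 1), device_zone=1)

print(is_numa_aligned(unaligned), is_numa_aligned(aligned))
```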

Without handling Resource Topology, Kubernetes as it exists in 1.20 can’t meet the needs of these sorts of applications. End users can find (and have found!) ways around this by adding constraints to their clusters. One option is to replace bare-metal deployments with VMs, while another is to limit the pod configs available to developers.

Does the Kubernetes default scheduler consider Resource Topology when assigning pods to nodes?

Kubernetes Topology Manager allows workloads to run in an environment optimized for low latency. Performance-critical workloads in industries like telecommunications, High Performance Computing (HPC), and Internet of Things (IoT) need topology information so that they can use co-located CPU cores and devices, but the current native scheduler does not select a node based on its topology. Because the scheduler has no knowledge of Resource Topology, application performance can be unpredictable. In general, this means underperformance. In the worst case, resource requests and kubelet policies are completely mismatched: the scheduler places a pod on a node where it is destined to fail, potentially entering a failure loop.
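The mismatch is easier to see with numbers. The following Python sketch contrasts a node-total check, which is roughly what a topology-unaware scheduler sees, with a per-zone check of the kind a strict kubelet policy such as `single-numa-node` would effectively apply. It is a simplified model, not the scheduler's or the kubelet's actual code; the function names, the free-resource figures, and the `example.com/nic` resource name are all hypothetical.

```python
from typing import Dict, List

Resources = Dict[str, int]


def fits_node_totals(zones: List[Resources], request: Resources) -> bool:
    """Topology-unaware check: compare the request with node-wide totals."""
    return all(
        sum(zone.get(name, 0) for zone in zones) >= amount
        for name, amount in request.items()
    )


def fits_single_zone(zones: List[Resources], request: Resources) -> bool:
    """Topology-aware check: one zone alone must satisfy the whole request."""
    return any(
        all(zone.get(name, 0) >= amount for name, amount in request.items())
        for zone in zones
    )


# Free resources per NUMA zone on one node (invented values).
node_free = [
    {"cpu": 1, "example.com/nic": 1},  # zone 0
    {"cpu": 3, "example.com/nic": 0},  # zone 1
]
pod_request = {"cpu": 2, "example.com/nic": 1}

print(fits_node_totals(node_free, pod_request))  # node looks suitable
print(fits_single_zone(node_free, pod_request))  # no zone can host the pod
```

In this model the node passes the first check and fails the second, which is the situation where a pod is sent to a node that will then refuse to admit it.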

Exposing cluster-level topology to the scheduler empowers it to make intelligent, NUMA-aware placement decisions that optimize the cluster-wide performance of workloads.

What is the business case for enabling Topology Aware Scheduling in Kubernetes?

A company could build a business by providing a public cloud or by selling a cloud solution to third parties (for example, to telecom operators for NFV use cases). In the public cloud case, the provider's end-user agreement or public offer can restrict customers to tariffs with a fixed number of resources. Resource alignment is then solved at the IaaS level: the resource counts (NICs, GPUs) offered in each tariff are aligned with the counts available per NUMA zone.

The other case is a company that sells cloud solutions to clients who demand more flexibility. For these clients, flexibility means the ability to run on bare metal and to request any number and kind of resources. A solution that makes the Kubernetes scheduler topology aware is therefore of interest to companies that sell cloud solutions to third parties.

In the next part of this blog post series, we talk about Topology Manager and explain the design of Topology Aware Scheduling in more detail.
