For Telcos considering OpenStack, network performance is often a major area of focus. The discussion usually begins with throughput numbers, expressed in millions of packets per second (Mpps) across gigabit-per-second (Gbps) hardware, but throughput is only the tip of the performance iceberg. The most common requirement is stable, deterministic network performance (both Mpps and latency) rather than the fastest possible throughput. With that in mind, many applications in the Telco space require low latency and cannot tolerate any packet loss.
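To put Mpps and Gbps side by side, here is a minimal sketch of the standard Ethernet line-rate arithmetic. It assumes 20 bytes of on-wire overhead per frame (preamble, start-of-frame delimiter and inter-frame gap); the function names are illustrative and not taken from any Red Hat tool.

```python
# Ethernet adds 20 bytes of per-frame overhead on the wire:
# 7-byte preamble + 1-byte start-of-frame delimiter + 12-byte inter-frame gap.
WIRE_OVERHEAD_BYTES = 20


def line_rate_mpps(link_gbps: float, frame_bytes: int) -> float:
    """Theoretical maximum packet rate, in Mpps, for a given frame size."""
    bits_per_frame = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return link_gbps * 1e9 / bits_per_frame / 1e6


def per_packet_budget_ns(link_gbps: float, frame_bytes: int) -> float:
    """Time available to handle one packet at line rate, in nanoseconds."""
    return 1e3 / line_rate_mpps(link_gbps, frame_bytes)


if __name__ == "__main__":
    for size in (64, 512, 1518):
        print(f"{size:>5} B: {line_rate_mpps(10, size):6.2f} Mpps, "
              f"{per_packet_budget_ns(10, size):7.1f} ns per packet")
```

With 64-byte frames on a 10 Gbps link this works out to roughly 14.88 Mpps, or about 67 ns per packet, which is why even a brief interruption on a packet-processing core can translate into dropped packets.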

In this “Operationalizing OpenStack” blog post, Federico Iezzi, EMEA Cloud Architect with Red Hat, discusses some of the real-world deep tuning and process required to make zero packet loss a reality!

Packet loss is bad for business ...

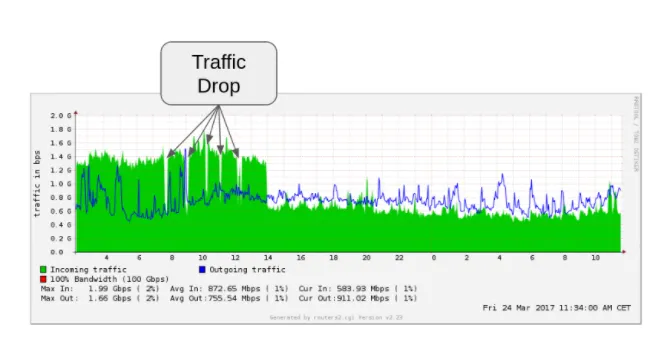

Packet loss can be defined as occurring “when one or more packets of data travelling across a computer network fail to reach their destination [1].” Packet loss results in protocol latency because a lost TCP packet must be retransmitted, which takes time. What’s worse, protocol latency manifests itself externally as “application delay.” And, of course, “application delay” is nothing more than a fancy term for something that all Telcos want to avoid: a fault. So, as network performance degrades and packets drop, retransmission occurs at higher and higher rates. The more retransmission there is, the more latency is experienced and the slower the system gets. The extra packets generated by retransmission also increase congestion, slowing the system even further.

Tune in now for better performance …

So how do we prepare OpenStack for Telco?

Photo CC0-licensed (Alan Levine)


It's easy! Tuning!

Red Hat OpenStack Platform is supported by a detailed Network Functions Virtualization (NFV) Reference Architecture that covers deep tuning across multiple technologies, ranging from Red Hat Enterprise Linux to the Data Plane Development Kit (DPDK) from Intel. A great place to start is the Red Hat Network Functions Virtualization (NFV) Product Guide. It covers tuning for the following components:

- Red Hat Enterprise Linux version 7.3
- Red Hat OpenStack Platform version 10 or greater
- Data plane tuning
  - Open vSwitch with DPDK at least version 2.6
  - SR-IOV VF or PF
- System Partitioning through Tuned using profile cpu-partitioning at least version 2.8
- Non-uniform memory access (NUMA) and virtual non-uniform memory access (vNUMA)
- General OpenStack configuration

Hardware notes and prep …

It's worth mentioning that the hardware used to achieve zero packet loss often needs to be the latest generation. Hardware decisions around network interface cards and vendors can often affect packet loss and tuning success. For hardware, be sure to consult your vendor’s documentation prior to purchase to ensure the best possible outcomes. Ultimately, regardless of hardware, some setup should be done in the hardware BIOS/UEFI to keep the CPU frequency stable and to remove power-saving features.

Photo CC0-licensed (PC Gehäuse)

| Setting | Value |
| --- | --- |
| MLC Streamer | Enabled |
| MLC Spatial Prefetcher | Enabled |
| Memory RAS and Performance Config | Maximum Performance |
| NUMA optimized | Enabled |
| DCU Data Prefetcher | Enabled |
| DCA | Enabled |
| CPU Power and Performance | Performance |
| C6 Power State | Disabled |
| C3 Power State | Disabled |
| CPU C-State | Disabled |
| C1E Autopromote | Disabled |
| Cluster-on-Die | Disabled |
| Patrol Scrub | Disabled |
| Demand Scrub | Disabled |
| Correctable Error | 10 |
| Intel(R) Hyper-Threading | Disabled or Enabled |
| Active Processor Cores | All |
| Execute Disable Bit | Enabled |
| Intel(R) Virtualization Technology | Enabled |
| Intel(R) TXT | Disabled |
| Enhanced Error Containment Mode | Disabled |
| USB Controller | Enabled |
| USB 3.0 Controller | Auto |
| Legacy USB Support | Disabled |
| Port 60/64 Emulation | Disabled |

BIOS Settings from:

Divide and Conquer ...

Properly enforcing resource partitioning is essential to achieving zero packet loss, and to do this you need to partition resources between the host and the guest correctly. System partitioning ensures that software resources running on the host are always given access to dedicated hardware. However, partitioning goes further than just access to hardware, as it can also be used to ensure that resources use the closest possible memory addresses across all the processors. When a CPU retrieves data from a memory address, it first looks in the cache local to that processor core. Proper partitioning, via tuning, ensures that requests are answered from the closest cache (L1, L2 or L3) as well as from local memory, minimizing transaction times and the use of a point-to-point processor interconnect such as QPI (Intel QuickPath Interconnect). This way of accessing and dividing memory is known as a NUMA (non-uniform memory access) design.
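As a minimal sketch of what "closest possible" means in practice, the snippet below checks whether a set of pinned CPUs sits on the same NUMA node as a NIC. The topology and CPU numbers are hypothetical; on a real Linux host the per-node CPU lists can be read from `/sys/devices/system/node/node<N>/cpulist` and a PCI NIC's node from `/sys/class/net/<iface>/device/numa_node`.

```python
from __future__ import annotations


def parse_cpu_list(spec: str) -> set[int]:
    """Expand a Linux-style CPU list such as '0-3,8-11' into a set of CPU IDs."""
    cpus: set[int] = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            first, last = part.split("-")
            cpus.update(range(int(first), int(last) + 1))
        else:
            cpus.add(int(part))
    return cpus


def remote_cpus(pinned: set[int], node_cpus: dict[int, set[int]],
                local_node: int) -> set[int]:
    """Return the pinned CPUs that are NOT on the given NUMA node."""
    return pinned - node_cpus[local_node]


# Hypothetical two-socket host with hyper-threading enabled.
NODE_CPUS = {
    0: parse_cpu_list("0-9,20-29"),
    1: parse_cpu_list("10-19,30-39"),
}

if __name__ == "__main__":
    nic_node = 0  # assumed NUMA node of the DPDK NIC
    for spec in ("2-3,22-23", "2,12"):
        crossing = remote_cpus(parse_cpu_list(spec), NODE_CPUS, nic_node)
        print(f"{spec}: remote CPUs = {sorted(crossing)}")
```

Any CPU reported as remote would have to reach the NIC's memory across the processor interconnect, which is exactly the traffic partitioning tries to avoid.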

Tuned in …

System partitioning involves a lot of complex, low-level tuning. So how does one do this easily?

You’ll need to use the tuned daemon along with the accompanying cpu-partitioning profile. Tuned is a daemon that monitors the use of system components and dynamically tunes system settings based on that monitoring information. Tuned is distributed with a number of predefined profiles for common use cases. For all this to work, you’ll need the newest tuned features. This requires the latest version of tuned (i.e. 2.8 or later) as well as the latest tuned cpu-partitioning profile (i.e. 2.8 or later). Both are publicly available via the Red Hat Enterprise Linux 7.4 beta release, or you can grab the daemon and profiles directly from their upstream projects.
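As a rough illustration of how the profile is fed, the cpu-partitioning profile takes the CPUs to isolate from an `isolated_cores` variable, which on Red Hat Enterprise Linux typically lives in `/etc/tuned/cpu-partitioning-variables.conf`; the profile is then activated with `tuned-adm profile cpu-partitioning`. The sketch below only renders that one line. The helper names and the core numbers are hypothetical, and the output should be checked against the documentation for your tuned version before use.

```python
def format_cpu_list(cpus):
    """Compress CPU IDs into a Linux-style list, e.g. {2, 3, 4, 8} -> '2-4,8'."""
    ranges = []
    for cpu in sorted(set(cpus)):
        if ranges and cpu == ranges[-1][1] + 1:
            ranges[-1][1] = cpu          # extend the current run
        else:
            ranges.append([cpu, cpu])    # start a new run
    return ",".join(str(a) if a == b else f"{a}-{b}" for a, b in ranges)


def render_variables(isolated_cpus):
    """Return the text of a cpu-partitioning variables file."""
    cpu_list = format_cpu_list(isolated_cpus)
    if not cpu_list:
        raise ValueError("at least one CPU must be isolated")
    return f"isolated_cores={cpu_list}\n"


if __name__ == "__main__":
    # Hypothetical 20-thread host: threads 0-1 stay with the operating system.
    print(render_variables(range(2, 20)), end="")
```

Generating the line from a set of CPU IDs, rather than typing it by hand, makes it easier to keep the tuned configuration consistent with whatever partitioning plan you settle on.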


However, before any tuning can begin, you must first decide how the system should be partitioned.

Based on Red Hat experience with customer deployments, we usually find it necessary to define how the system should be partitioned for every specific compute model. In the example pictured above, the total number of PMD cores (one CPU core is two CPU threads) had to be carefully calculated from the overall required Mpps as well as the total number of DPDK interfaces, both physical and vPort. A number of PMD cores that is unbalanced against the number of DPDK ports will result in lower performance and in interrupts, which will generate packet loss. The rest of the tuning was for the VNF threads, excluding at least one core per NUMA node for the operating system.
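The split described here can be sketched as follows. This is an illustrative model only: the topology, the `partition_cpus` helper and the PMD core counts are assumptions, and the number of PMD cores per NUMA node is treated as an input because it depends on your required Mpps and DPDK port count.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Partition:
    host: list[int]  # threads left to the operating system
    pmd: list[int]   # threads for DPDK poll mode driver (PMD) cores
    vnf: list[int]   # threads available to VNF guests


def partition_cpus(topology, host_cores_per_node=1, pmd_cores_per_node=None):
    """Split hardware threads into host, PMD and VNF groups.

    topology maps NUMA node -> list of physical cores, each core given as a
    tuple of its sibling thread IDs. Whole cores are assigned, so sibling
    threads never end up in different groups.
    """
    pmd_cores_per_node = pmd_cores_per_node or {}
    part = Partition([], [], [])
    for node, cores in sorted(topology.items()):
        n_pmd = pmd_cores_per_node.get(node, 0)
        if host_cores_per_node + n_pmd > len(cores):
            raise ValueError(f"NUMA node {node} has too few cores")
        host = cores[:host_cores_per_node]
        pmd = cores[host_cores_per_node:host_cores_per_node + n_pmd]
        vnf = cores[host_cores_per_node + n_pmd:]
        part.host += [t for core in host for t in core]
        part.pmd += [t for core in pmd for t in core]
        part.vnf += [t for core in vnf for t in core]
    for group in (part.host, part.pmd, part.vnf):
        group.sort()
    return part


# Hypothetical host: 2 NUMA nodes, 4 cores each, 2 threads per core.
TOPOLOGY = {
    0: [(0, 8), (1, 9), (2, 10), (3, 11)],
    1: [(4, 12), (5, 13), (6, 14), (7, 15)],
}

if __name__ == "__main__":
    print(partition_cpus(TOPOLOGY, pmd_cores_per_node={0: 2, 1: 1}))
```

Assigning whole cores keeps both sibling threads of a core in the same group, so a PMD thread never shares a physical core with host or guest work.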


Looking at the upstream templates, as well as at the tuned cpu-partitioning profile, there is a lot to understand about the specific settings that are executed on each core per NUMA node.

So, just what needs to be tuned? Find out more in Part 2 where you’ll get a thorough and detailed breakdown of many specific tuning parameters to help achieve zero packet loss!

About the author

Federico Iezzi is an open-source evangelist who has witnessed the Telco NFV transformation. Over his career, Iezzi has achieved a number of international firsts in the public cloud space and has about a decade of experience with OpenStack. He has been following the Telco NFV transformation since 2014. At Red Hat, Federico is a member of the EMEA Telco practice as a Principal Architect.
