With the release of Red Hat Enterprise Linux (RHEL) 8.3, Red Hat announced an rpm-ostree version of RHEL targeted for Edge use cases called RHEL for Edge.

One of the unique features of rpm-ostree is that when you update the operating system, a new deployment is created, and the previous deployment is also retained. This means that if there are issues on the updated version of the operating system, you can roll back to the previous deployment with a single rpm-ostree command, or by selecting the previous deployment in the GRUB boot loader.
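For reference, the manual path looks roughly like the sketch below. The `rpm-ostree rollback` command and its `--reboot` option are the real CLI. The `rollback_to_previous` wrapper and the `RPM_OSTREE` override variable are hypothetical names, added only so the example can be dry-run without touching a real system.

```shell
#!/bin/bash
# Manual rollback sketch. RPM_OSTREE is a hypothetical override that lets the
# function be exercised with a stand-in command; it defaults to the real CLI.
RPM_OSTREE="${RPM_OSTREE:-rpm-ostree}"

# Make the previous deployment the default for the next boot.
# Pass "reboot" as the first argument to restart into it immediately.
rollback_to_previous() {
    if [ "${1:-}" = "reboot" ]; then
        "$RPM_OSTREE" rollback --reboot
    else
        "$RPM_OSTREE" rollback
    fi
}
```

Calling `rollback_to_previous` as root only changes the default deployment, while `rollback_to_previous reboot` also restarts the system.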

While this ability to roll back manually is very useful, it still requires human intervention. Edge deployments can span tens or hundreds of thousands of nodes, and at that scale automation is critical. These systems might also be spread across the country or the world, so accessing a console on each one when an updated image misbehaves might not be practical.

This is why RHEL for Edge includes GreenBoot, which can automate RHEL for Edge operating system rollbacks.

This post will cover an overview of how to get started with GreenBoot and will walk through an example of using GreenBoot.

Prerequisites

Before you can use GreenBoot, you need to have a RHEL for Edge environment set up, and have an updated image that you would like to upgrade to. For more information on how to get started with RHEL for Edge, see the RHEL for Edge overview video series, and refer to the documentation.

Overview of GreenBoot

When RHEL for Edge boots off an updated OS image, GreenBoot must make a determination between these two possibilities:

- The system is working properly and should remain running on the updated image.
- There is an issue and GreenBoot should roll back the system to the previous image.

GreenBoot relies on “health check” scripts to make the above determination. These health check scripts must be created by the System Administrator, and will vary depending on the workload running on the system.

For example, the health check script could verify network connectivity, verify the applications started on the system, etc.
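A health check of that kind might look like the following sketch. The helper function names are hypothetical, and the unit name and address in the final comment (`myapp.service`, `192.0.2.1`) are placeholders rather than values from this post. Only `systemctl is-active --quiet` and `ping -c/-W` are real commands.

```shell
#!/bin/bash
# Hypothetical health check helpers, not part of GreenBoot itself.

# Succeeds only if the given systemd unit is currently active.
service_is_active() {
    systemctl is-active --quiet "$1"
}

# Succeeds if the host answers a single ping within two seconds.
host_is_reachable() {
    ping -c 1 -W 2 "$1" > /dev/null 2>&1
}

# Run both checks; report each failure and return non-zero if any check fails.
run_health_checks() {
    local failed=0
    service_is_active "$1" || { echo "service $1 is not active"; failed=1; }
    host_is_reachable "$2" || { echo "host $2 is unreachable"; failed=1; }
    return "$failed"
}

# In a real health check script, finish with something like:
#   run_health_checks myapp.service 192.0.2.1 || exit 1
```

Running every check before returning, instead of stopping at the first failure, means the log shows all problems from a single boot.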

GreenBoot supports both shell scripts and systemd services for the health checks. This post focuses on using shell script health checks.

GreenBoot directory structure

There are four directories used in conjunction with GreenBoot scripts:

- /etc/greenboot/check/required.d contains the health checks that must not fail. If they do, GreenBoot will initiate a rollback.
- /etc/greenboot/check/wanted.d contains health checks that may fail. GreenBoot will log that the script failed, but it will not roll back.
- /etc/greenboot/green.d contains scripts that should be run after GreenBoot has declared the boot successful.
- /etc/greenboot/red.d contains scripts that should be run after GreenBoot has declared the boot as failed.

When booting from an updated operating system, GreenBoot will run the scripts in the required.d and wanted.d directories. If any of the scripts fail in the required.d directory, GreenBoot will run any scripts in the red.d directory, then reboot.

However, since this failure might have been a temporary issue, or because a script in the red.d directory might have been able to make a corrective action to fix the issue, GreenBoot will make two more boot attempts on the updated operating system (for a total of three boot attempts). If on the 3rd boot the scripts in required.d are still failing, it will run the red.d scripts one last time, then reboot the system from the original rpm-ostree deployment.
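The flow described above can be modeled in a few lines of shell. This is a simplified illustration, not GreenBoot's actual implementation: the function names are hypothetical, the `*.sh` filter is an assumption, and the reboots are reduced to loop iterations.

```shell
#!/bin/bash
# Simplified model of the flow described above; this is not GreenBoot's code.

# Run every executable *.sh script in a directory, in name order.
# Script output goes to stderr. Returns non-zero if any script fails.
run_scripts_in() {
    local dir="$1" script failed=0
    for script in "$dir"/*.sh; do
        [ -x "$script" ] || continue
        "$script" >&2 || failed=1
    done
    return "$failed"
}

# Evaluate one boot: required.d decides the result, wanted.d is only logged.
# Runs the matching green.d or red.d scripts, then prints "green" or "red".
evaluate_boot() {
    local root="$1" status=green
    run_scripts_in "$root/check/required.d" || status=red
    run_scripts_in "$root/check/wanted.d" || echo "a wanted check failed" >&2
    run_scripts_in "$root/${status}.d" || true
    echo "$status"
}

# Model the retry policy: up to three boots on the updated deployment,
# then fall back to the previous one.
simulate_update() {
    local root="$1" attempt
    for attempt in 1 2 3; do
        if [ "$(evaluate_boot "$root")" = "green" ]; then
            echo "stay on updated deployment (boot attempt $attempt)"
            return 0
        fi
    done
    echo "roll back to previous deployment"
    return 1
}
```

Pointing `simulate_update` at a scratch copy of a directory tree laid out like /etc/greenboot is a quick way to see how a set of checks would behave before deploying them.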

Rolling back an edge deployment

In this example scenario, we have a RHEL for Edge system that requires the Korn shell (ksh) to be present on the system for the application to function. If the Korn shell is not available for any reason, GreenBoot should roll the system back to the previous deployment.

To start, I’ll create a health check script at /etc/greenboot/check/required.d/ksh.sh. This basic health check script simply verifies that the /usr/bin/ksh file is present and executable. If so, it exits with a return code of zero (success); otherwise, it exits with a return code of one (any non-zero value counts as a failure).

Here are the ksh.sh script contents:

    #!/bin/bash
    if [ -x /usr/bin/ksh ]; then
        echo "ksh shell found, check passed!"
        exit 0
    else
        echo "ksh shell not found, check failed!"
        exit 1
    fi

I’ll also create a script at /etc/greenboot/red.d/bootfail.sh to be run in the event that GreenBoot declares a boot unsuccessful. This example script will just log some information to the /var/roothome/greenboot.log file; however, a good use case for red.d scripts would be attempting to make corrective action that might resolve the issue on the next reboot.

Here are the bootfail.sh contents:

    #!/bin/bash
    echo "greenboot detected a boot failure" >> /var/roothome/greenboot.log
    date >> /var/roothome/greenboot.log
    grub2-editenv list | grep boot_counter >> /var/roothome/greenboot.log
    echo "----------------" >> /var/roothome/greenboot.log
    echo "" >> /var/roothome/greenboot.log

Also note that I’m referring to the GRUB boot_counter variable. On the first failed boot, this value will be 2, and will be decremented each failed boot until it reaches 0 (zero), at which point GreenBoot rolls the system back to the previous deployment image.
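If a red.d script needs to act on the counter rather than just log it, the value can be pulled out of the `grub2-editenv list` output. The sketch below shows one way to do that. The two function names are hypothetical, and the messages simply restate the countdown described above.

```shell
#!/bin/bash
# Extract boot_counter from "grub2-editenv list" style output on stdin.
# Prints nothing and returns non-zero if the variable is not present.
read_boot_counter() {
    local line
    while IFS= read -r line; do
        case "$line" in
            boot_counter=*)
                echo "${line#boot_counter=}"
                return 0
                ;;
        esac
    done
    return 1
}

# Turn the counter value into a short human-readable message.
describe_boot_counter() {
    case "$1" in
        0)      echo "no boot attempts left; rolling back to the previous deployment" ;;
        [1-9]*) echo "$1 boot attempt(s) left on the updated image" ;;
        *)      echo "boot_counter is not set" ;;
    esac
}

# On a real system (as root):
#   describe_boot_counter "$(grub2-editenv list | read_boot_counter)"
```

A corrective red.d script could, for example, try a light fix while attempts remain and collect extra diagnostics once the counter reaches 0.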

Testing GreenBoot

GreenBoot is now configured to verify that the Korn shell is available after the system boots from an updated image. To validate that GreenBoot is actually working, I’ve created an updated RHEL for Edge image that removes the Korn shell and adds the Z shell. If I configured the GreenBoot health check script properly, GreenBoot should detect this issue, make three boot attempts on the updated image, and automatically roll back to the previous image.

I’ll first run rpm-ostree update --preview to validate an update is available:

    # rpm-ostree update --preview
    1 metadata, 0 content objects fetched; 196 B transferred in 0 seconds; 0 bytes content written
    AvailableUpdate:
       Timestamp: 2020-11-06T14:26:16Z
          Commit: 807c5a040419e7e6be4b6a0c2b0a442fad64a6e58b6c52fa090c36ea99228e5d
         Removed: ksh-20120801-254.el8.x86_64
           Added: zsh-5.5.1-6.el8_1.2.x86_64

The output shows there is an available update and, in this update, the ksh package is removed and the zsh package is added.

I’ll go ahead and install the update with an rpm-ostree update command:

    # rpm-ostree update
    ⠁ Receiving objects: 49% (691/1402) 2.7 MB/s 13.3 MB
    Receiving objects: 49% (691/1402) 2.7 MB/s 13.3 MB... done
    Staging deployment... done
    Removed:
      ksh-20120801-254.el8.x86_64
    Added:
      zsh-5.5.1-6.el8_1.2.x86_64
    Run "systemctl reboot" to start a reboot

Next, I’ll run the rpm-ostree status command:

    # rpm-ostree status
    State: idle
    Deployments:
      ostree://rhel:rhel/8/x86_64/edge
        Timestamp: 2020-11-06T14:26:16Z
           Commit: 807c5a040419e7e6be4b6a0c2b0a442fad64a6e58b6c52fa090c36ea99228e5d
             Diff: 1 removed, 1 added

    * ostree://rhel:rhel/8/x86_64/edge
        Timestamp: 2020-11-04T19:06:03Z
           Commit: cbf8ac14651a72be3dd1ed0754b9dd69a8464d37bdc3a2b571c2382509c7a3e2

The original image is the one with the commit starting with cbf, and the updated image, where ksh was removed, is the commit starting with 807. Note that the asterisk on the left of the commit starting with cbf indicates this is the currently booted deployment. Also note the deployment listed at the top is the one that will be booted by default by the bootloader, which means the updated commit starting with 807 will be the deployment booted by default at the next reboot.
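When managing many systems, the same reading of the status output can be scripted. The sketch below parses the text format shown in this post, where an asterisk marks the booted deployment. The function names are hypothetical, and other rpm-ostree versions may format the output differently, so treat the parsing rules as assumptions.

```shell
#!/bin/bash
# Helpers that read "rpm-ostree status" text in the layout shown in this post.

# Print the commit of the booted deployment (the one marked with an asterisk).
booted_commit() {
    awk '
        /^[ \t]*\*/       { booted = 1; next }  # asterisk marks the booted deployment
        /^[ \t]*ostree:/  { booted = 0 }        # header of any other deployment
        booted && $1 == "Commit:" { print $2; exit }
    '
}

# Print the commit of the first listed deployment, which is the boot default.
default_commit() {
    awk '$1 == "Commit:" { print $2; exit }'
}

# Succeeds when the next reboot would switch to a different deployment.
reboot_switches_deployment() {
    local status="$1"
    [ "$(printf '%s\n' "$status" | default_commit)" != \
      "$(printf '%s\n' "$status" | booted_commit)" ]
}

# On a real system: reboot_switches_deployment "$(rpm-ostree status)"
```

For anything beyond a quick check, the machine-readable `rpm-ostree status --json` output is likely a sturdier input than the human-readable text.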

I’ll go ahead and run systemctl reboot to reboot the system off the updated image starting with commit 807.

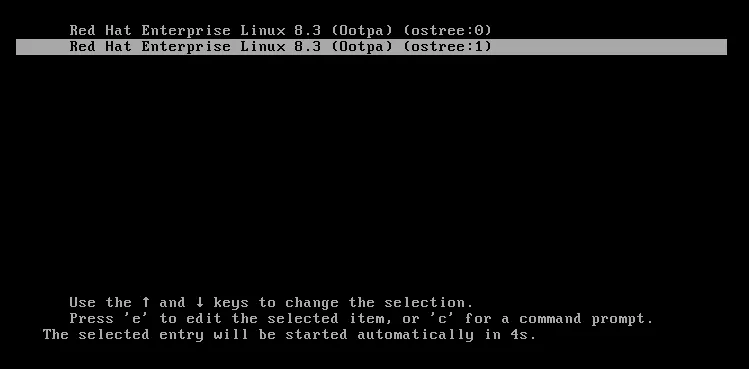

When the system reboots, the GRUB boot loader will default to the ostree:0 entry, which is the 807 commit.

The system will boot up on the updated image and automatically run the GreenBoot health check script /etc/greenboot/check/required.d/ksh.sh, which will fail because the Korn shell is not present in the updated image. GreenBoot will then run the /etc/greenboot/red.d/bootfail.sh script and reboot the system two additional times, repeating this process each time.

After the third failed boot on the updated image, the system will automatically reboot again. This time, however, the GRUB boot loader will default to the ostree:1 entry, which is the original rpm-ostree deployment (with the commit starting with cbf).

At this point, the system will successfully boot up on the original deployment. I’ll log back in and run a few commands to check the state of the system.

First I’ll run systemctl status greenboot-status.service:

    # systemctl status greenboot-status.service
    ● greenboot-status.service - greenboot MotD Generator
       Loaded: loaded (/usr/lib/systemd/system/greenboot-status.service; enabled; vendor preset: disabled)
       Active: active (exited) since Mon 2021-02-22 22:50:26 UTC; 6min ago
      Process: 905 ExecStart=/usr/libexec/greenboot/greenboot-status (code=exited, status=0/SUCCESS)
     Main PID: 905 (code=exited, status=0/SUCCESS)
        Tasks: 0 (limit: 23498)
       Memory: 0B
       CGroup: /system.slice/greenboot-status.service

    Feb 22 22:50:26 localhost.localdomain systemd[1]: Starting greenboot MotD Generator...
    Feb 22 22:50:26 localhost.localdomain greenboot-status[905]: Boot Status is GREEN - Health Check SUCCESS
    Feb 22 22:50:26 localhost.localdomain greenboot-status[905]: FALLBACK BOOT DETECTED! Default rpm-ostree deployment has been rolled back.
    Feb 22 22:50:26 localhost.localdomain systemd[1]: Started greenboot MotD Generator.

Note that it logged FALLBACK BOOT DETECTED.

I’ll also run rpm-ostree status:

    # rpm-ostree status
    State: idle
    Deployments:
    * ostree://rhel:rhel/8/x86_64/edge
        Timestamp: 2020-11-04T19:06:03Z
           Commit: cbf8ac14651a72be3dd1ed0754b9dd69a8464d37bdc3a2b571c2382509c7a3e2

      ostree://rhel:rhel/8/x86_64/edge
        Timestamp: 2020-11-06T14:26:16Z
           Commit: 807c5a040419e7e6be4b6a0c2b0a442fad64a6e58b6c52fa090c36ea99228e5d

Note that the commit starting with cbf, which was our original deployment prior to the upgrade, is the currently booted deployment (as indicated by the asterisk). It is also at the top of the list, meaning it will be the default boot option if the system is rebooted again.

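Finally, I’ll display the /root/greenboot.log file, which is the file written by the /etc/greenboot/red.d/bootfail.sh script at each of the three failed boots. On rpm-ostree systems, /root is a symbolic link to /var/roothome, so this is the same file the script wrote to.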

    # cat /root/greenboot.log
    greenboot detected a boot failure
    Mon Feb 22 22:49:34 UTC 2021
    boot_counter=2
    ----------------

    greenboot detected a boot failure
    Mon Feb 22 22:49:51 UTC 2021
    boot_counter=1
    ----------------

    greenboot detected a boot failure
    Mon Feb 22 22:50:06 UTC 2021
    boot_counter=0
    ----------------

Note that the GRUB boot_counter variable counted down to zero, at which point, the system was rolled back to the previous deployment.

Successful update with GreenBoot

The previous section covered what happens if a required health check script fails. In the event that all of the required health check scripts are successful on the first boot after an update, the system will stay on the updated deployment.

In this scenario, when systemctl status greenboot-status is run after booting from the updated deployment, it will report:

    # systemctl status greenboot-status
    ● greenboot-status.service - greenboot MotD Generator
       Loaded: loaded (/usr/lib/systemd/system/greenboot-status.service; enabled; vendor preset: disabled)
       Active: active (exited) since Mon 2021-03-01 17:29:15 UTC; 4min 18s ago
      Process: 818 ExecStart=/usr/libexec/greenboot/greenboot-status (code=exited, status=0/SUCCESS)
     Main PID: 818 (code=exited, status=0/SUCCESS)
        Tasks: 0 (limit: 23499)
       Memory: 0B
       CGroup: /system.slice/greenboot-status.service

    Mar 01 17:29:15 localhost.localdomain systemd[1]: Starting greenboot MotD Generator...
    Mar 01 17:29:15 localhost.localdomain greenboot-status[818]: Boot Status is GREEN - Health Check SUCCESS
    Mar 01 17:29:15 localhost.localdomain systemd[1]: Started greenboot MotD Generator.

As you can see, GreenBoot logged Boot Status is GREEN - Health Check SUCCESS.
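Across a fleet, the two outcomes shown in this post can be told apart by scanning the greenboot-status log for the messages quoted above. The sketch below does that. The function name and the result labels are hypothetical, and the matched strings are taken from the log output in this post, so they may differ in other GreenBoot versions.

```shell
#!/bin/bash
# Classify greenboot-status log text read from stdin.
# Prints one of: rolled-back, green, unknown.
classify_greenboot_status() {
    local log
    log="$(cat)"
    case "$log" in
        # Check for the fallback message first: a rolled-back boot
        # also logs the GREEN line for the deployment it fell back to.
        *"FALLBACK BOOT DETECTED"*) echo "rolled-back" ;;
        *"Boot Status is GREEN"*)   echo "green" ;;
        *)                          echo "unknown" ;;
    esac
}

# On a real system:
#   journalctl -b -u greenboot-status.service | classify_greenboot_status
```

The order of the patterns matters, because a fallback boot reports both messages.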

Learning more about GreenBoot and RHEL for Edge

Operating system updates can be scary. Although unlikely, an update could cause issues on the server that lead to an outage. The rpm-ostree technology in RHEL for Edge provides the ability to roll back to a previous deployment, but by default this requires manual intervention. GreenBoot allows the entire process to be automated, and because you define your own health check scripts, it is highly customizable. For a video overview of using GreenBoot, refer to the RHEL for Edge Part 5: Automating Image Roll Back with GreenBoot video, which is part of the RHEL for Edge overview video series.

About the author

Brian Smith is a product manager at Red Hat focused on RHEL automation and management. He has been at Red Hat since 2018, previously working with public sector customers as a technical account manager (TAM).
