With software-defined networking (SDN) and network functions virtualization (NFV) gaining traction, more cloud service providers are looking for open solutions based on standardized hardware platforms and open source software. In particular, communication service providers (CSPs) are undergoing a major shift from specialized hardware-based network elements to a software-based provisioning paradigm where virtualized network functions (VNFs) are deployed in the private or hybrid clouds of network operators. Increasingly, OpenStack is seen as the virtual infrastructure platform of choice for NFV, with many of the world’s largest communications companies implementing solutions with OpenStack today.

While software-based solutions can enable faster delivery of new services, operators still need to support the quality and experience users are looking for, with performance being a key requirement. Depending on the application, VNFs may require different performance characteristics, such as throughput, latency, and jitter, that sometimes must be competitive with existing bare-metal equivalents. The software networking stack ends up needing a significant amount of time to process each packet. However, at the current speed of network devices we are not normally given that much time. On a 10 Gbps link, for example, packets with the smallest Ethernet frame size can arrive at a rate of 14.88 Mpps. At that rate we have just 67.2 ns per packet.
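The figures above can be reproduced with a few lines of arithmetic. The sketch below assumes a 64-byte minimum frame plus 20 bytes of per-frame overhead on the wire (preamble, start-of-frame delimiter, and inter-frame gap); the function and constant names are illustrative only.

```python
# Per-packet time budget at line rate for minimum-size Ethernet frames.
LINK_BPS = 10_000_000_000   # 10 Gbps link
MIN_FRAME_BYTES = 64        # smallest Ethernet frame, including the FCS
WIRE_OVERHEAD_BYTES = 20    # 7 preamble + 1 start-of-frame delimiter + 12 inter-frame gap


def packets_per_second(link_bps: int, frame_bytes: int) -> float:
    """Maximum frame rate on the wire for a given frame size."""
    bits_on_wire = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return link_bps / bits_on_wire


def ns_per_packet(link_bps: int, frame_bytes: int) -> float:
    """Time available to process one frame, in nanoseconds."""
    return 1e9 / packets_per_second(link_bps, frame_bytes)


if __name__ == "__main__":
    pps = packets_per_second(LINK_BPS, MIN_FRAME_BYTES)
    budget = ns_per_packet(LINK_BPS, MIN_FRAME_BYTES)
    print(f"{pps / 1e6:.2f} Mpps, {budget:.1f} ns per packet")
```

Larger frames relax the budget considerably: the same calculation with 1518-byte frames gives well under 1 Mpps, which is why small-packet workloads are the hard case.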

OpenStack, as the virtual infrastructure platform, is responsible for providing network connectivity to the VNFs, implemented as virtual machine instances, so that their performance can meet service needs. Red Hat continues to work with the upstream communities including Linux, QEMU, KVM, Open vSwitch, DPDK, libvirt, OpenStack, and OPNFV to help accelerate NFV deployments and is committed to achieving improved infrastructure performance through open, standard solutions.

VNFs as applications

Network elements that are widely deployed today are mostly treated as “black boxes”. They are built from customized hardware and software, and the network applications they run are tuned for optimal performance on that device, the result of years of engineering effort. With the introduction of NFV, the industry is poised to go through a massive transformation as more networking vendors start to implement their traditional devices as VNFs. While the majority of them are looking into virtual machines (VMs), some are also looking at a container-based approach, hoping to offer more efficiency and agility. This is going to be a tough and complicated process, and different vendors are at different stages of this journey today.

We believe that OpenStack and the underlying infrastructure components should offer a flexible, feature-rich layer for VNFs to connect to. As we see it, the OpenStack-based solution needs to be rich and flexible for two primary reasons:

- Application readiness

As mentioned before, network vendors are currently in the process of transforming their devices into VNFs. We see VNFs at different maturity levels in the market; common barriers to readiness include enabling RESTful interfaces in their APIs, evolving their data models to become stateless, and providing automated management operations. Some VNFs are already optimized for virtualized environments and some are not, some are architected for elastic scale and some are not, and so on. We believe that OpenStack should provide a common platform for all.

- Broad use-cases

VNFs offer a broad range of applications that serve different use-cases. For example, Virtual Customer Premise Equipment (vCPE) aims at providing a number of network functions such as routing, firewall, VPN, and NAT at customer premises. Virtual Evolved Packet Core (vEPC) is a cloud architecture that provides a cost-effective platform for the core components of Long-Term Evolution (LTE) networks, allowing dynamic provisioning of gateways and mobile endpoints to sustain the increased volumes of data traffic from smartphones and other devices.

These use-cases, by nature, are implemented using different network applications and protocols, and require different connectivity, isolation, and performance characteristics from the infrastructure. It is also common to separate control plane interfaces and protocols from the actual forwarding plane. One VNF might have some network interfaces dedicated to control plane operations (e.g., signaling and session maintenance with protocols like SIP, Diameter, or RTCP), and other interfaces dedicated to actual forwarding (e.g., transport of the media itself over protocols like RTP or SCTP). Therefore, it makes sense that OpenStack should be flexible enough to offer different datapath connectivity options.

NFV datapath: a plethora of solutions

In principle, there are two common approaches for providing data plane connectivity to VMs:

- Direct hardware access

The first approach is to bypass any software layer and pass through direct hardware access to the physical Network Interface Card (NIC) or top-of-rack (ToR) switch using technologies such as PCI Passthrough or single root I/O virtualization (SR-IOV).

- Using a virtual switch

The second approach is to use a virtual software switch (vSwitch) in the Compute node (hypervisor) hosting the VMs. VMs are connected to the vSwitch using virtual interfaces (vNICs), and the vSwitch is capable of forwarding traffic between VMs as well as between VMs and the physical network.

Let’s dive into some of the common datapath options we see today:

SR-IOV

SR-IOV is a standard that makes a single PCI hardware device appear as multiple virtual PCI devices. SR-IOV works by introducing the idea of physical functions (PFs) and virtual functions (VFs). PFs are the full-featured PCIe functions and represent the physical hardware ports; VFs are lightweight functions that can be assigned to VMs. A suitable VF driver must reside within the VM, which sees the VF as a regular NIC that communicates directly with the hardware. The number of virtual instances that can be presented depends on the network adapter card and the device driver. For example, a single card with two ports might have two PFs, each exposing 128 VFs. These are, of course, theoretical limits.
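On a Linux host, the number of VFs a PF can expose and the number currently enabled are published through the `sriov_totalvfs` and `sriov_numvfs` sysfs attributes of the PCI device. The following is a minimal sketch for reading them; the `vf_counts` helper and the `eth0` interface name are hypothetical and used for illustration only.

```python
from pathlib import Path
from typing import Tuple


def vf_counts(iface: str, sysfs_root: str = "/sys/class/net") -> Tuple[int, int]:
    """Return (enabled VFs, maximum VFs) for the PF behind a network interface.

    Raises FileNotFoundError if the device does not expose SR-IOV attributes.
    """
    device = Path(sysfs_root) / iface / "device"
    enabled = int((device / "sriov_numvfs").read_text().strip())
    maximum = int((device / "sriov_totalvfs").read_text().strip())
    return enabled, maximum


if __name__ == "__main__":
    import sys

    # "eth0" is only a placeholder; pass the real PF interface name as an argument.
    iface = sys.argv[1] if len(sys.argv) > 1 else "eth0"
    try:
        enabled, maximum = vf_counts(iface)
        print(f"{iface}: {enabled} of {maximum} VFs enabled")
    except FileNotFoundError:
        print(f"{iface}: no SR-IOV attributes found")
```

The maximum reported by the driver is often lower than the theoretical limit of the card, which is why it is worth checking on the actual Compute node.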

SR-IOV for network devices is supported starting with RHEL OpenStack Platform 6, and is described in more detail in this two-part blog post series.

While direct hardware access can provide near line-rate performance to the VM, this approach limits the flexibility of the deployment as it breaks the software abstraction. VMs must be launched on Compute nodes where SR-IOV capable cards are installed, and the features offered to the VM depend on the capabilities of the specific NIC hardware.

Steering traffic to/from the VM (e.g., based on MAC addresses and/or 802.1Q VLAN IDs) is done by the NIC hardware and is not visible to the Compute layer. Even in the simple case where two VMs are placed on the same Compute node and want to communicate with each other (i.e., intra-host communication), traffic that leaves the source VM must hit the physical network adapter on the host before it is switched back to the destination VM. Because of that, features such as firewall filtering (OpenStack security groups) or live migration are currently not available when using SR-IOV with OpenStack. Therefore, SR-IOV is a good fit for self-contained appliances, where minimal policy is expected to be enforced by OpenStack and the virtualization layer.

Drawing 1: Direct hardware access using SR-IOV

Open vSwitch

Open vSwitch (OVS) is an open source software switch designed to be used as a vSwitch within virtualized server environments. OVS supports many of the capabilities you would expect from a traditional switch, but also offers support for “SDN ready” interfaces and protocols such as OpenFlow and OVSDB. Red Hat recommends Open vSwitch for RHEL OpenStack Platform deployments, and offers out of the box OpenStack Networking (Neutron) integration with OVS.

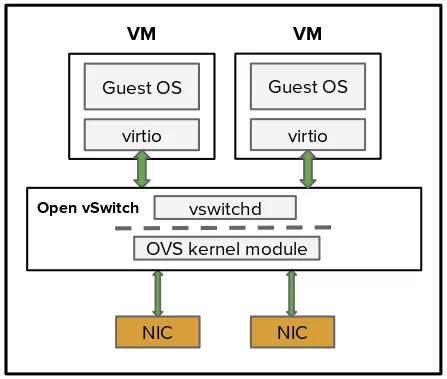

Standard OVS is built out of three main components:

- ovs-vswitchd - a user-space daemon that implements the switch logic

- kernel module (fast path) - processes received frames based on a lookup table

- ovsdb-server - a database server that ovs-vswitchd queries to obtain its configuration. External clients can talk to ovsdb-server using OVSDB protocol

When a frame is received, the fast path (kernel space) uses match fields from the frame header to determine the flow table entry and the set of actions to execute. If the frame does not match any entry in the lookup table it is sent to the user-space daemon (vswitchd) which requires more CPU processing. The user-space daemon then determines how to handle frames of this type and sets the right entries in the fast path lookup tables.
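The fast-path and slow-path split can be pictured as a cache in front of a slower decision function. The toy model below is only a sketch of that idea, not OVS code: the `FastPath` class, the simplified match fields, and the `example_policy` function are all assumptions made for illustration.

```python
from typing import Callable, Dict, Tuple

FlowKey = Tuple[str, str, int]  # (src MAC, dst MAC, VLAN ID): simplified match fields
Action = str                    # e.g. "output:2" or "drop"


class FastPath:
    """Toy model of a fast-path lookup table backed by a slower decision function."""

    def __init__(self, slow_path: Callable[[FlowKey], Action]) -> None:
        self.flow_table: Dict[FlowKey, Action] = {}
        self.slow_path = slow_path
        self.misses = 0

    def receive(self, key: FlowKey) -> Action:
        action = self.flow_table.get(key)
        if action is None:
            # Miss: hand the frame to the user-space logic, then cache its decision.
            self.misses += 1
            action = self.slow_path(key)
            self.flow_table[key] = action
        return action


def example_policy(key: FlowKey) -> Action:
    """Hypothetical switch logic: forward VLAN 100, drop everything else."""
    _src, _dst, vlan = key
    return "output:2" if vlan == 100 else "drop"


if __name__ == "__main__":
    fp = FastPath(example_policy)
    frame = ("52:54:00:aa:00:01", "52:54:00:aa:00:02", 100)
    print(fp.receive(frame), fp.misses)  # first frame of the flow takes the slow path
    print(fp.receive(frame), fp.misses)  # later frames hit the cached entry
```

Only the first frame of a flow pays the cost of the slow path; the miss counter stays at one for every later frame with the same match fields.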

OVS has several ports: outbound ports which are connected to the physical NICs on the host using kernel device drivers, and inbound ports which are connected to VMs. The VM guest operating system (OS) is presented with vNICs using the well-known VirtIO paravirtualized network driver.

Drawing 2: standard OVS architecture; user-space and kernel space layers

While most users can get decent performance numbers with the standard OVS, it was never designed with NFV in mind and does not meet some of the requirements we are starting to see from VNFs. Thus Red Hat, Intel, and others have contributed to enhance OVS by utilizing the Data Plane Development Kit (DPDK), boosting its performance to meet NFV demands.

DPDK

The Data Plane Development Kit (DPDK) consists of a set of libraries and drivers for fast packet processing. It is designed to run mostly in user-space, enabling applications to perform their own packet processing directly from/to the NIC. This reduces latency and allows more packets to be processed.

One of the key concepts of DPDK, compared to the kernel networking stack, is the use of Poll Mode Drivers (PMDs). Instead of relying on interrupts, or on context switches between kernel space and user space, which disturb the CPU every time a new frame arrives, PMDs use a polling approach: they continuously scan the NIC to see whether frames have arrived.
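To make the polling idea concrete, here is a small simulation. It is not DPDK code: the receive ring is modeled as a Python `deque`, and `rx_burst`, `poll_loop`, and `BURST_SIZE` are hypothetical names chosen for this sketch.

```python
from collections import deque
from typing import Deque, List

BURST_SIZE = 32  # assumed maximum number of frames taken per poll


def rx_burst(rx_ring: Deque[bytes], max_frames: int = BURST_SIZE) -> List[bytes]:
    """Take up to max_frames from the ring without blocking; may return an empty list."""
    burst: List[bytes] = []
    while rx_ring and len(burst) < max_frames:
        burst.append(rx_ring.popleft())
    return burst


def poll_loop(rx_ring: Deque[bytes], iterations: int) -> int:
    """Poll the ring a fixed number of times and return how many frames were handled.

    A real poll mode driver typically spins indefinitely on a dedicated core;
    the iteration bound here only keeps the sketch terminating.
    """
    handled = 0
    for _ in range(iterations):
        for _frame in rx_burst(rx_ring):
            handled += 1  # stand-in for real packet processing
    return handled


if __name__ == "__main__":
    ring = deque(b"frame-%d" % i for i in range(100))
    print(poll_loop(ring, iterations=5))
```

Note that the loop keeps running even when the ring is empty. That is the trade-off of polling: no interrupt or wake-up latency, at the cost of a CPU core that stays busy regardless of load.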

DPDK applications no longer communicate with the Linux kernel networking stack. Instead, all ports that are to be used by a DPDK application must be bound to the PMD before the application is run. DPDK helps to bypass the kernel network stack in order to deliver high performance. It is not intended to be a direct replacement for all the rich packet processing capabilities already found in the kernel, such as L3 forwarding, firewalling, or QoS, and in most cases these features are not currently available to DPDK applications. However, DPDK does provide useful libraries to help develop such features and appears to be evolving in this direction.

DPDK has followed a fairly typical open source project route. It was originally an Intel project, developed and maintained in-house by Intel. As it grew in popularity, it reached a point where it was clear that it needed a real, open, development community. This is where 6WIND took on the challenge and established an upstream development community hosted at dpdk.org.

These days, DPDK is becoming a critical component for NFV in general and for VNF applications in particular. We expect to see continued growth and interest by the development community and by customers and partners, and more and more VNFs written for DPDK and consuming the DPDK library. This is the reason that DPDK is already available with Red Hat Enterprise Linux 7 via the “Extras” channel.

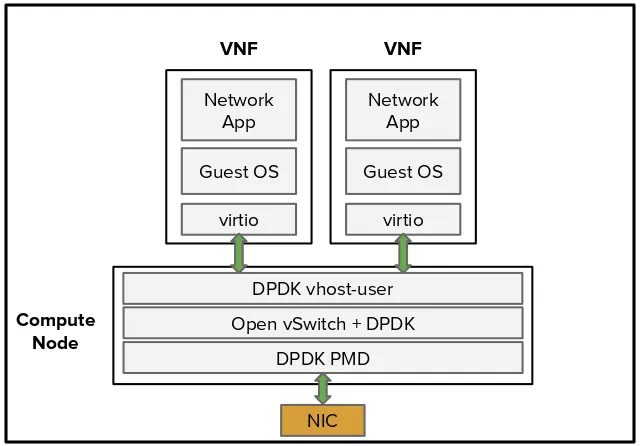

DPDK-accelerated Open vSwitch

Open vSwitch can be bundled with DPDK for better performance, resulting in a DPDK-accelerated OVS (OVS+DPDK). At a high level, the idea is to replace the standard OVS kernel datapath with a DPDK-based datapath, creating a user-space vSwitch on the host that uses DPDK internally for its packet forwarding. The nice thing about this architecture is that it is mostly transparent to users, as the basic OVS features and the interfaces it exposes (such as OpenFlow, OVSDB, the command line, etc.) remain mostly the same.

The development of OVS+DPDK is now part of the OVS project, and the code is maintained under openvswitch.org. The fact that DPDK has established an upstream community of its own was key for that, so we now have the two communities - OVS and DPDK - talking to each other in the open, and the codebase for DPDK-accelerated OVS available in the open source community.

Starting with the upcoming RHEL OpenStack Platform 8 release, DPDK-accelerated Open vSwitch is expected to be available for customers and partners as a Technology Preview feature based on the work done in upstream OVS 2.4. This should include tight integration with the Compute and Networking layers of OpenStack via enhancements made to the OVS Neutron plug-in and agent. The implementation is also expected to include support for dpdkvhostuser ports (using QEMU vhost-user) so that VMs can still use the standard VirtIO networking driver when communicating with OVS on the host.

Red Hat sees the main advantage of OVS+DPDK as the flexibility it offers. SR-IOV, as previously described, is tightly coupled to the physical NIC, resulting in a lack of software abstraction on the hypervisor side. DPDK-accelerated OVS promises to fix that by offering the “best of both worlds”: performance on one hand, and flexibility and programmability through the virtualization layer on the other.

Drawing 3: standard OVS versus user-space OVS accelerated with DPDK

DPDK with RHEL OpenStack Platform

Generally, we see two main use-cases for using DPDK with Red Hat and RHEL OpenStack Platform. To avoid confusion, I wanted to summarize them both here:

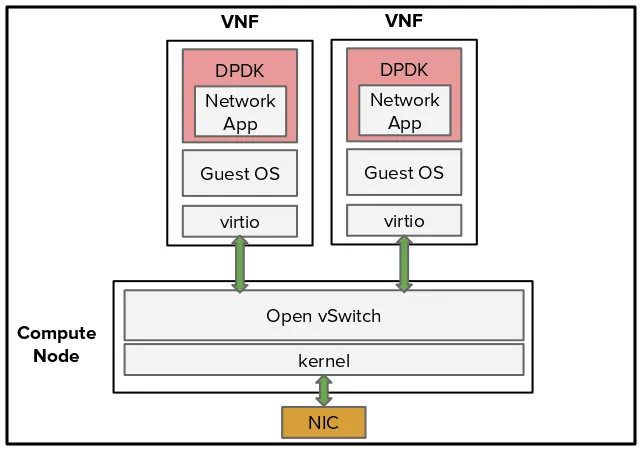

- DPDK-enabled applications, or VNFs, written on top of RHEL as a guest operating system. Here we are talking about network functions that take advantage of DPDK, as opposed to the standard kernel networking stack, for enhanced performance.

- DPDK-accelerated Open vSwitch, running within RHEL OpenStack Platform Compute nodes (the hypervisors). Here it is all about boosting the performance of OVS and allowing for faster connectivity between VNFs.

I would like to highlight that if you want to run DPDK-accelerated OVS in the Compute node, you do not necessarily have to run DPDK-enabled applications in the VNFs that plug into it. Doing so can be seen as another layer of optimization, but these are two fundamentally different use-cases.

Drawing 4: standard OVS with DPDK-enabled VNFs

Drawing 5: DPDK-accelerated OVS with standard VNFs

Drawing 6: DPDK-accelerated OVS with DPDK-enabled VNFs

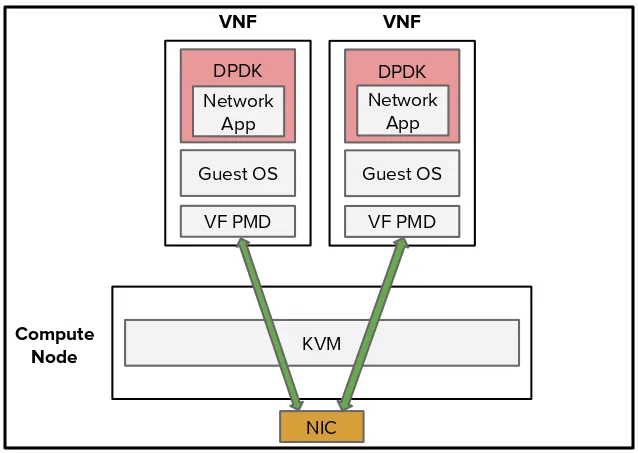

Additionally, it is possible to run DPDK-enabled VNFs without using OVS or OVS+DPDK on the Compute node, and utilize SR-IOV instead. This configuration requires a VF Poll Mode Driver (PMD) within the VM itself.

Drawing 7: SR-IOV with DPDK-enabled VNFs

And the winner is…?

I am often asked “what is the best datapath solution for VNFs running on top of OpenStack?” My answer is, well... it depends. We came to understand that a “one size fits all” approach falls short of addressing the unique trends in the NFV space. It is important to remember that different network applications, or VNFs, might have different requirements. Some require specific performance numbers or strict QoS, some might need live migration or certain high-availability features, and so on.

RHEL OpenStack Platform, being the infrastructure manager, focuses on offering maximum flexibility and choice between different datapath options. This should allow customers to use Open vSwitch or the DPDK-accelerated Open vSwitch when they need it, as well as SR-IOV where applicable. Similarly, VNF providers can write their applications on top of base RHEL or on top of the DPDK library within a RHEL guest VM.

Red Hat remains committed to investments in open source contribution and to collaborating with the community, as well as our customers and partners, to help drive deployments of NFV and enhance RHEL OpenStack Platform to support a variety of data plane options.
