We all know the importance of setting limits, whether with our kids, our diet, our physical activities and so on. But when it comes to the resources dedicated to our applications, we may think, “Why limit ourselves? If a resource is available, then give it to me.” Well, it turns out that this approach may not be such a good idea, even in the seemingly unlimited world of cloud resources.

To validate the recommended reservation of resources for system tasks in Red Hat OpenShift clusters, we deployed a “resource hungry” application on OpenShift clusters of different sizes. Specifically, we are interested in the behavior of clusters where system and application workloads share resources, such as Single Node OpenShift (SNO) clusters and Compact Clusters (three nodes serving both as masters and workers).

The results show that there are cases where no matter how many resources are reserved for system tasks, letting an application go limitless may cause the control plane to become unresponsive to the point where the entire cluster—limitless application included—becomes non-functional.

The moral of the story: As a cluster administrator, you could ask nicely that the developers deploying applications on the cluster(s) under your control set up limits in their application manifests. But it’s much safer to enforce the use of resource limitations by using the Kubernetes mechanism designed for this purpose, i.e., setting resource quotas per namespace through the creation of ResourceQuota objects.

Motivation

Capacity planning is essential to achieve optimal utilization of your resources. However, it is not an easy task, and very often we end up with a solution that is either under or over capacity. As we work with our customers and partners on the design of OpenShift clusters to host their applications, it is our responsibility to deliver an optimal allocation of resources to system tasks to keep the system running smoothly while leaving the vast majority of available resources to application workloads.

On one particular engagement with a major partner in the telco industry, we were asked to validate our recommendations regarding the allocation of reserved cores to system workloads (four virtual cores in an SNO). Our goal in this experiment was to measure the utilization of cores by the system when running resource-hungry applications.

Fractals on demand

To emulate a scenario that resembles a typical web application with varying needs of server resources, we deployed a simple web application that generates fractals of differing sizes. This application was selected because its CPU, memory and network utilization can be controlled easily through parameters in the URL query string (Figure 1).

From left to right, Figure 1 shows the results of requesting 200 x 200 pixel, 400 x 400 pixel and 600 x 600 pixel fractals. As the requested image gets bigger, the need for resources increases. The computation uses double-precision floating point matrices, so large fractals require a lot of resources. The fractal generator code was wrapped as an HTTP server with all the configuration space exposed as parameters in the URL query string. The resulting code is available here.

Figure 1: Fractals on demand

Not quite on demand…

To stress our web application and measure CPU and memory utilization in the cluster, we developed a very simple web client that, given a URL and a number n, creates n threads that simultaneously request the URL. This “parallel getter” is then used to access the web application simultaneously and continuously in an infinite loop from 1500 clients, with 1000 requesting small images (512 x 512 pixel) and the remaining 500 requesting large images (4096 x 4096 pixel).
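The client itself is not reproduced in this article. A minimal sketch of such a “parallel getter” in Python, using only the standard library, might look like the following. The function names (`fetch`, `parallel_get`), the timeout value and the list-of-booleans result format are assumptions made for illustration and are not taken from the original tool.

```python
import http.client
import threading
import urllib.error
import urllib.request


def fetch(url: str, index: int, results: list, timeout: float) -> None:
    """Request the URL once and record True on an HTTP 200, False otherwise."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            response.read()  # download the whole body (the image)
            results[index] = response.status == 200
    except (urllib.error.URLError, http.client.HTTPException, OSError, ValueError):
        # Refused connections, timeouts, HTTP errors and malformed URLs
        # all count as a failed request.
        results[index] = False


def parallel_get(url: str, n: int, timeout: float = 30.0) -> list:
    """Start n threads that request the same URL at roughly the same time."""
    results = [False] * n
    threads = [
        threading.Thread(target=fetch, args=(url, i, results, timeout))
        for i in range(n)
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results
```

Calling `parallel_get` with 1000 threads for a small-image URL and 500 threads for a large-image URL inside an endless loop would approximate the load described above. The exact query-string parameters depend on the fractal server and are not shown here.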

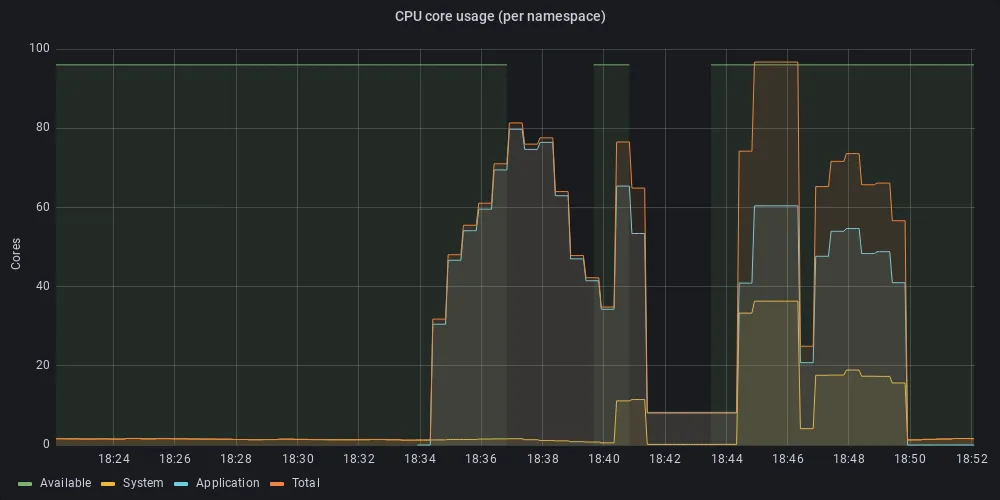

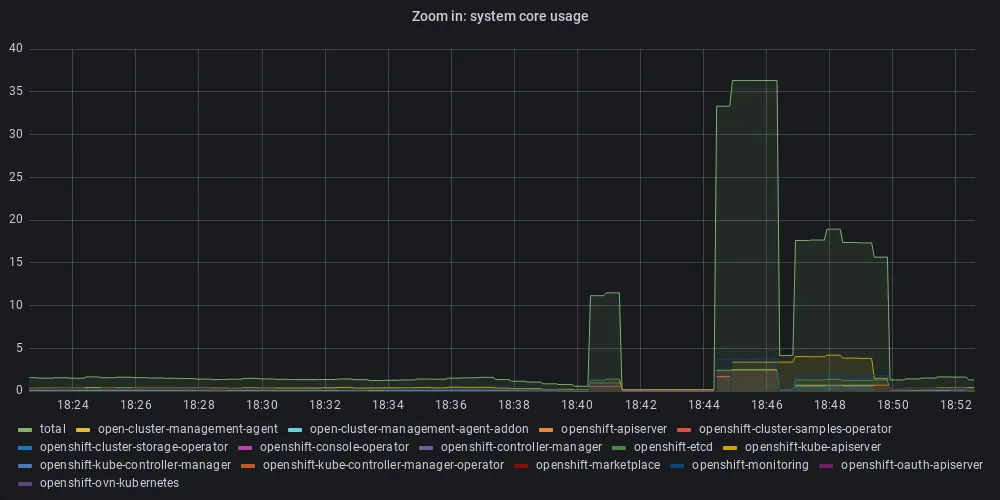

To our surprise, the “on-demand” fractal generator very quickly stops working, and the cluster becomes unresponsive until the infinite loop of parallel web clients is stopped. At that point the CPU utilization of system workloads unexpectedly rises well above the recommended values. As seen in Figure 2, the system becomes unresponsive a little after 18:36 (Figure 2a, utilization for all workloads), but the spike in CPU utilization by system workloads happens after 18:40 (Figure 2b, utilization for system workloads only).

Figure 2: CPU utilization generated by multiple clients requesting fractal generation

Figure 2a: CPU utilization for all workloads

Figure 2b: CPU utilization for system workloads only

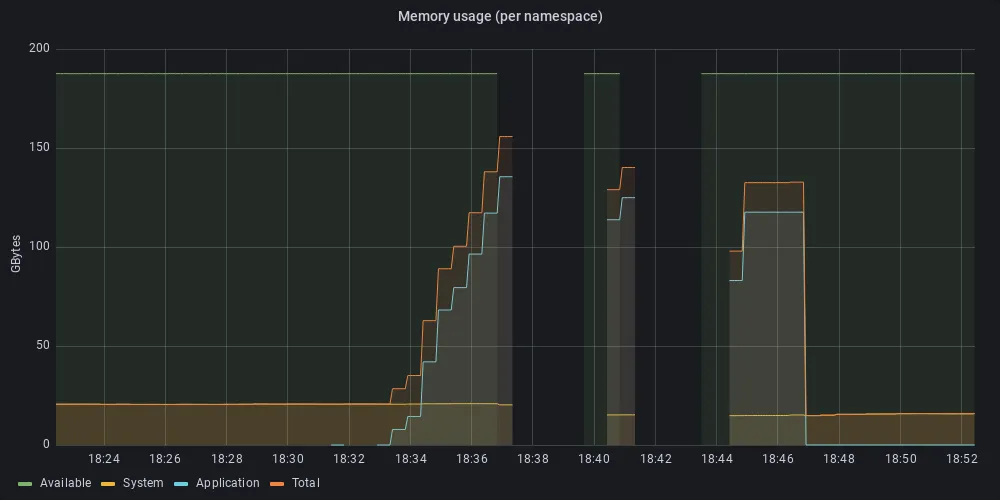

Looking at CPU utilization alone, it is not clear what is causing this spike, but memory utilization offers some insight. At the “breaking point,” the application workloads are consuming almost all the memory available to applications (Figure 3).

Figure 3: Memory utilization for the same period
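A back-of-the-envelope estimate helps explain why memory is the resource that runs out. The sketch below assumes that each in-flight request holds at least one double-precision matrix with one element per pixel. The real generator may allocate more (intermediate arrays, the encoded image), so this is a rough lower bound under that assumption, not a measurement.

```python
BYTES_PER_DOUBLE = 8  # size of an IEEE 754 double-precision value


def matrix_bytes(side: int) -> int:
    """Bytes needed for one side x side matrix of doubles."""
    return side * side * BYTES_PER_DOUBLE


# Client mix from the stress test: 1000 small and 500 large requests.
small_clients, small_side = 1000, 512
large_clients, large_side = 500, 4096

total_bytes = (small_clients * matrix_bytes(small_side)
               + large_clients * matrix_bytes(large_side))

print(f"one small matrix: {matrix_bytes(small_side) / 2**20:.0f} MiB")
print(f"one large matrix: {matrix_bytes(large_side) / 2**20:.0f} MiB")
print(f"all requests in flight: {total_bytes / 2**30:.1f} GiB")
```

Under this assumption a single large request needs 128 MiB for one matrix, and 1500 concurrent requests need roughly 64 GiB before counting any intermediate data, so an application without a memory limit can plausibly grow until it fills the node.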

Resource quotas to the rescue

Clearly, we don’t want our clusters to become unresponsive, no matter what applications are running on top of them. In particular, we need special care for SNOs and compact clusters, where the control plane shares resources with the applications. In our experiment, the problem is caused by a memory-hungry application that knows no bounds—it takes whatever is available. In Kubernetes, a well-behaved application should specify both minimum (resources.requests) and maximum (resources.limits) resource requirements per pod (in the spec.containers section). By default, however, these parameters are optional and it is up to application developers to set limits, which leaves the cluster open to abuse.
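Because these fields are optional, it can be useful to audit manifests for containers that omit them. The following is a minimal sketch, assuming the pod spec has already been parsed into a Python dictionary (for example, from YAML). The helper name `containers_missing` and the sample container names and images are hypothetical.

```python
def containers_missing(pod_spec: dict, kind: str, resource: str) -> list:
    """Return names of containers that do not declare resources.<kind>.<resource>."""
    missing = []
    for container in pod_spec.get("containers", []):
        resources = container.get("resources") or {}
        declared = resources.get(kind) or {}
        if resource not in declared:
            missing.append(container.get("name", "<unnamed>"))
    return missing


# Hypothetical pod spec: one bounded container and one without any limits.
pod_spec = {
    "containers": [
        {
            "name": "bounded",
            "image": "example.com/app:latest",
            "resources": {
                "requests": {"cpu": "1000m"},
                "limits": {"memory": "20Gi"},
            },
        },
        {"name": "unbounded", "image": "example.com/app:latest"},
    ]
}

print(containers_missing(pod_spec, "limits", "memory"))
print(containers_missing(pod_spec, "requests", "cpu"))
```

Both calls report only the `unbounded` container, which is the kind of workload that can take whatever memory the node has.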

Fortunately, there is a mechanism that system administrators can use to force developers to set limits: the ResourceQuota object, which the official OpenShift documentation defines as follows:

“A resource quota, defined by a ResourceQuota object, provides constraints that limit aggregate resource consumption per project. It can limit the quantity of objects that can be created in a project by type, as well as the total amount of compute resources and storage that might be consumed by resources in that project.”

Once a ResourceQuota has been created for a project, applications cannot be deployed unless the limits have been defined and are in range. Note that the ResourceQuota object constrains the resources that can be consumed per project, so to be effective in ensuring cluster stability, the total resources allocated across all projects must not exceed the available resources. In our case, this is: Available memory = Total memory - Memory used by the system.

To be on the safe side (in case the system suddenly needs more memory), it is also recommended to limit consumption to no more than 90% of the available memory.

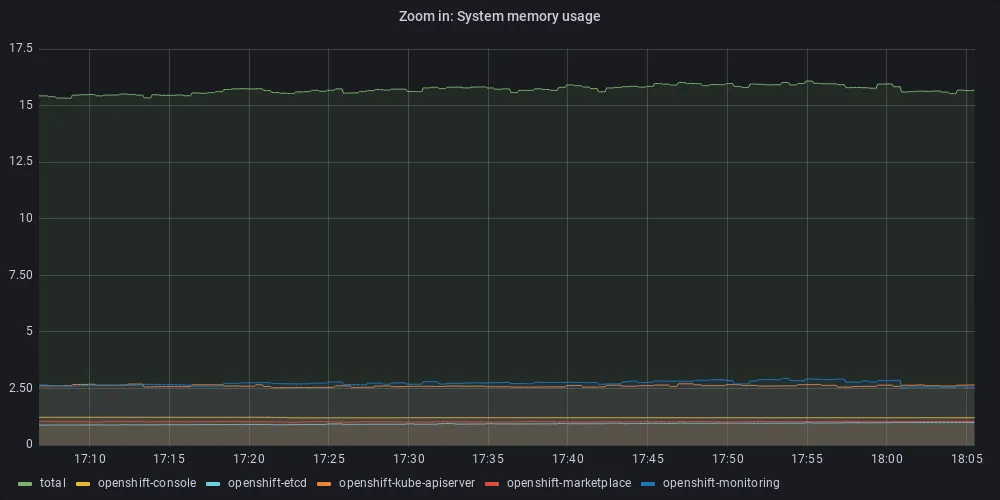

On the test SNO we have 200 GBytes total memory, and the system uses around 15 GBytes (see Figure 4), so in this cluster, allowing all applications to collectively use up to 185 GBytes should keep the cluster running without problems.

Figure 4: Memory used by system workloads
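The arithmetic behind these numbers can be written down explicitly. This sketch uses the figures quoted above (200 total, about 15 used by the system) and treats the 90% guideline as an optional safety percentage. The function name is an assumption made for illustration.

```python
def allocatable_memory(total: float, system: float, safety_percent: int = 100) -> float:
    """Memory that can be handed to applications, in the same unit as the inputs."""
    available = total - system
    return available * safety_percent / 100


print(allocatable_memory(200, 15))      # all available memory
print(allocatable_memory(200, 15, 90))  # with the 90% guideline applied
```

The first call gives the 185 used in this experiment. The second gives 166.5, which is what the more conservative 90% guideline would suggest for the same node.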

With this in mind we create a resource quota as follows:

    apiVersion: v1
    kind: ResourceQuota
    metadata:
      name: memory-quota
    spec:
      hard:
        requests.memory: 185Gi

Accordingly, assuming (as is the case in our tests) that we have a single application project in this cluster and that the application will not grow above nine pods, we add the following memory limitation to the application manifest:

    resources:
      limits:
        memory: 20Gi
      requests:
        cpu: 1000m
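The nine-pod figure follows from the quota. By default in Kubernetes, a container that sets a memory limit but no memory request is given a request equal to that limit, so each pod using the manifest above counts 20Gi against the `requests.memory` quota (assuming one such container per pod). A quick check, with a hypothetical helper name:

```python
def max_pods(quota_gi: int, per_pod_gi: int) -> int:
    """Largest number of pods whose combined memory requests fit in the quota."""
    return quota_gi // per_pod_gi


quota_gi = 185    # requests.memory in the ResourceQuota
per_pod_gi = 20   # memory limit per pod, assumed to also be its request

pods = max_pods(quota_gi, per_pod_gi)
print(pods, "pods use", pods * per_pod_gi, "Gi of", quota_gi, "Gi")
```

Nine pods request 180Gi in total, which fits in the quota. A tenth pod would bring the total to 200Gi and be rejected.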

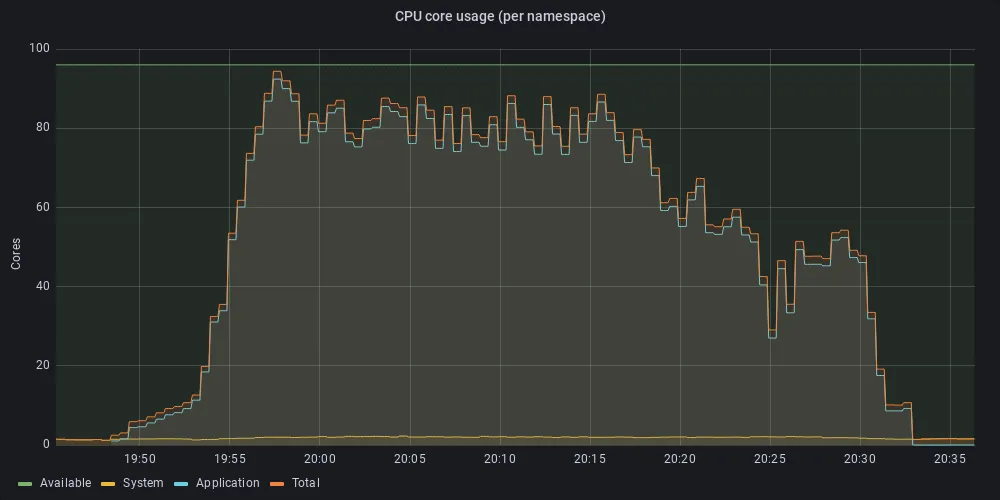

With these limitations in place, we again initiated the infinite loop of 1500 simultaneous web clients (as above). Now, as can be seen in Figure 5, the system remains responsive throughout the test (more than 40 minutes), and the CPU utilization by the system workloads remains below the recommended allocation.

Figure 5: CPU and memory utilization under stress, when the application is running with memory limits

Figure 5a: CPU utilization under stress

Figure 5b: CPU utilization under stress by system workloads

Figure 5c: Memory utilization under stress

So, with ResourceQuota we managed to enforce setting limits in application manifests, which in turn led to a stable cluster. But what about the now limited applications? As shown in the screenshot in Figure 6, the application is still functional, as indicated by the “Thread ### finished …:” lines, but some requests do fail.

This is expected and it means that within the allocated resources, the application has reached its maximum capacity. To fix it without breaking the cluster—which in turn would lead to the application failing—the cluster needs more resources.

Figure 6: Screenshot of the multi-threaded web client requesting images from our fractal generator under stress. As expected, some threads succeed, while others fail.

Summary

Capacity planning is crucial both for building a stable cluster and for the performance and reliability of the applications running on top of it. By default, OpenShift clusters give a lot of power to application developers and do not impose limits. However, with great power comes great responsibility: without proper care, an application may bring down the entire cluster, particularly in restricted environments such as SNOs or Compact Clusters.

The main takeaway from this experiment is this: If you are a cluster administrator, it is always a good idea to enforce limits by creating resource quotas on every OpenShift project. In restricted environments, such as an SNO, it is not only a good idea; it is a must if you want to keep the cluster stable.

About the author

Benny Rochwerger has been a Principal Software Engineer in Red Hat’s Telco Engagement team since 2021. Prior to joining Red Hat, Rochwerger was a senior architect at Radware's CTO office, where he led the group on cloud-native security solutions for 5G networks, and before that he was a Senior Technical Staff Member at the IBM Haifa Research Lab, where he led research projects in the areas of network virtualization and cloud computing. His interests include software defined networking, networking virtualization, cloud computing, distributed systems and cyber-security. Rochwerger holds an MS in Computer Science from the University of Massachusetts Amherst and a B.Sc. in Computer Engineering from the Israel Institute of Technology (Technion).
