This post is the sequel to the object storage performance testing we did two years ago on Red Hat Ceph Storage 2.0 with the FileStore OSD backend and the Civetweb RGW frontend. Here we compare the performance of the latest Ceph Storage release available at the time of writing, version 3.3 (BlueStore OSD backend and Beast RGW frontend), with Ceph Storage 2.0 (mid-2017; FileStore OSD backend and Civetweb RGW frontend).

We are aware that the results of these two performance studies are not scientifically comparable. However, we believe that comparing them should still provide significant performance insights and enable you to make an informed decision when architecting your Ceph storage clusters.

As expected, Ceph Storage 3.3 outperformed Ceph Storage 2.0 for all the workloads we tested. We attribute the Ceph Storage 3.3 improvements to a combination of factors: the BlueStore OSD backend, the Beast web frontend for RGW, the use of Intel Optane SSDs for the BlueStore WAL and block.db, and the latest-generation Intel Cascade Lake processors.

Let’s take a closer look at the performance comparison of the two Ceph Storage versions.

Test Lab Configuration

Here's a quick rundown of our lab environment. Testing was performed in a Dell EMC lab using Dell EMC hardware along with hardware contributed by Intel.

Ceph Storage 3.3 Environment

Table 1: Ceph Storage 3.3 Test Lab Configurations

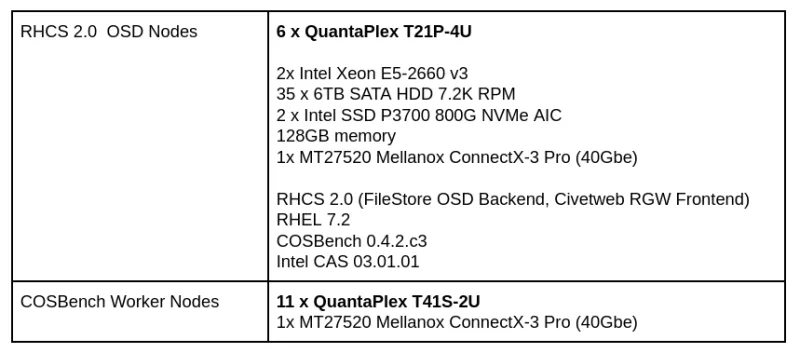

Ceph Storage 2.0 Environment

Table 2: Ceph Storage 2.0 Test Lab Configurations

Small Object Performance

In this section we'll take a look at small object performance.

Small Object 100% write workload

Chart 1 compares small object 100% write performance for Ceph Storage 3.3 and Ceph Storage 2.0. As shown in the chart, Ceph Storage 3.3 consistently delivered substantially higher operations per second (Ops) than Ceph Storage 2.0; we observed over 500% higher Ops with Ceph Storage 3.3.

You might be wondering what caused the two sharp performance drops in the Ceph Storage 3.3 line. They were most likely caused by RGW dynamic bucket resharding events, which were explained in depth in the previous post.
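As a reading aid for figures quoted as "N% higher" versus "Nx", here is a minimal Python sketch of the conversion between the two. The function names are ours, introduced only for illustration; the only number taken from this post is the 500% figure.

```python
def percent_higher_to_multiplier(percent_higher: float) -> float:
    """Convert an 'N% higher' figure into a throughput multiplier.

    Example: 100% higher means twice the baseline, i.e. a 2.0x multiplier.
    """
    return 1.0 + percent_higher / 100.0


def multiplier_to_percent_higher(multiplier: float) -> float:
    """Convert a throughput multiplier back into an 'N% higher' figure."""
    return (multiplier - 1.0) * 100.0


if __name__ == "__main__":
    # "over 500% higher Ops" corresponds to more than this multiple of baseline.
    print(percent_higher_to_multiplier(500.0))
    # For comparison, a 5.0x multiple of baseline expressed as "% higher".
    print(multiplier_to_percent_higher(5.0))
```

Keeping the two notations distinct matters when comparing results across posts, since "500% higher" and "5x" are not the same quantity.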

Chart 1: Small Object Size 100% Write Performance

Small Object 100% read workload

The 100% read workload for Ceph Storage 3.3 showed near-perfectly deterministic performance, whereas Ceph Storage 2.0 performance fell off a cliff at around the 18th hour of testing. For Ceph Storage 2.0, this decline in read Ops was caused by an increase in the time taken for filesystem metadata lookups as the cluster object count grew.

When the cluster held fewer objects, a greater percentage of filesystem metadata was cached by the kernel in memory. However, when the cluster grew to millions of objects, a smaller percentage of metadata was cached. Disks were then forced to perform I/O operations specifically for metadata lookups, adding disk seeks and resulting in lower read Ops.

The BlueStore OSD backend that powers Ceph Storage 3.3 and later does not rely on the Linux page cache for read operations. Instead, BlueStore OSDs do their own memory management, which helps deliver deterministic read performance, as shown in Chart 2.
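The caching effect described above can be illustrated with a deliberately simplified model. This is a sketch under stated assumptions, not a description of how the kernel actually manages its caches: it assumes a fixed metadata footprint per object, a fixed amount of memory available for caching, and uniformly random lookups. The per-object size and cache size used below are hypothetical values, not measurements from our tests.

```python
def cached_metadata_fraction(object_count: int,
                             metadata_bytes_per_object: int,
                             cache_bytes: int) -> float:
    """Fraction of filesystem metadata that fits in memory (toy model)."""
    if object_count <= 0:
        return 1.0  # nothing to cache, so every lookup is trivially a hit
    working_set = object_count * metadata_bytes_per_object
    return min(1.0, cache_bytes / working_set)


def expected_disk_reads_per_lookup(hit_fraction: float,
                                   reads_per_miss: float = 1.0) -> float:
    """Average extra disk reads per metadata lookup, assuming uniform access."""
    return (1.0 - hit_fraction) * reads_per_miss


if __name__ == "__main__":
    CACHE_BYTES = 16 * 2**30      # hypothetical: 16 GiB available for caching
    METADATA_BYTES = 512          # hypothetical: metadata footprint per object
    for objects in (10_000_000, 100_000_000, 500_000_000):
        hit = cached_metadata_fraction(objects, METADATA_BYTES, CACHE_BYTES)
        extra = expected_disk_reads_per_lookup(hit)
        print(f"{objects:>11,d} objects: {hit:.0%} cached, "
              f"{extra:.2f} extra disk reads per lookup")
```

In this model the cached fraction stays at 100% until the metadata working set exceeds the available memory, then falls in proportion to object count, which matches the shape of the decline described above.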

Chart 2: Small Object Size 100% Read Performance

Large Object Performance

Next we'll turn our attention to large object performance benchmarks.

Large Object 100% write workload

For the large object 100% write test, Ceph Storage 3.3 delivered a sub-linear performance improvement as RGWs were added, while Ceph Storage 2.0 showed retrograde performance, as depicted in Chart 3. During the Ceph Storage 2.0 test we had no additional RGW nodes, and containerized storage daemons were not yet an option, so we were unable to test beyond 4 RGWs for Ceph Storage 2.0.

Unless limited by sub-system resource saturation, Ceph Storage 3.3 delivered ~5.5 GBps of write bandwidth. Ceph Storage 2.0, on the other hand, showed declining performance.
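To make the terms "sub-linear" and "retrograde" concrete, here is a small Python sketch that classifies a scaling curve from (RGW count, throughput) pairs. The function name, the 5% tolerance, and all sample numbers are hypothetical and chosen for illustration only; they are not the measurements behind Chart 3.

```python
def classify_scaling(points, tolerance=0.05):
    """Classify how throughput scales with RGW count.

    points: iterable of (rgw_count, throughput) pairs.
    Returns "linear", "sub-linear", or "retrograde".
    """
    points = sorted(points)
    if len(points) < 2:
        raise ValueError("need at least two measurements")
    (n_first, t_first), (n_last, t_last) = points[0], points[-1]
    peak = max(throughput for _, throughput in points)
    # Retrograde: adding RGWs left throughput clearly below an earlier peak.
    if t_last < peak * (1.0 - tolerance):
        return "retrograde"
    # Efficiency: achieved speedup divided by the ideal (linear) speedup.
    efficiency = (t_last / t_first) / (n_last / n_first)
    return "linear" if efficiency >= 1.0 - tolerance else "sub-linear"


if __name__ == "__main__":
    # Hypothetical throughput values in GBps, for illustration only.
    print(classify_scaling([(1, 1.0), (2, 2.0), (4, 4.0)]))
    print(classify_scaling([(1, 1.0), (2, 1.8), (4, 3.0), (7, 4.5)]))
    print(classify_scaling([(1, 1.0), (2, 1.6), (4, 1.2)]))
```

The tolerance only keeps measurement noise from flipping the label; any threshold of that kind is a judgment call.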

Chart 3: Large Object Size 100% Write Performance

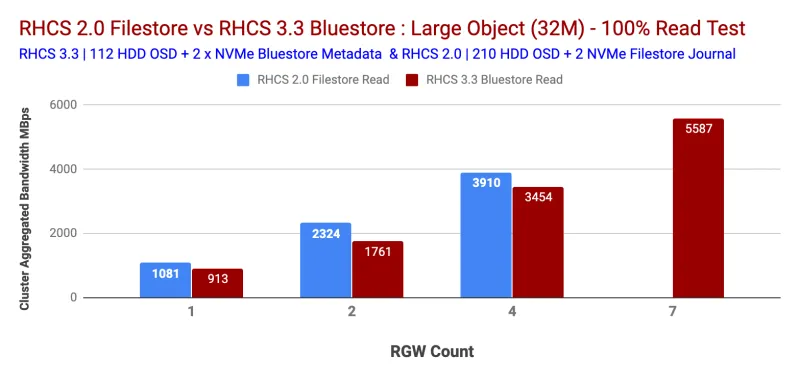

Large Object 100% read workload

The large object 100% read workload showed sub-linear performance for both the Ceph Storage 2.0 and 3.3 tests. As explained in the previous section, at the time of the Ceph Storage 2.0 test we did not have additional physical nodes for RGWs, so we were limited to 4 RGWs and could not scale further.

Ceph Storage 3.3 showed ~5.5 GBps of 100% read bandwidth with 7 RGWs, with no bottlenecks observed. Our hypothesis is that Ceph Storage 3.3 could have delivered more bandwidth had we added more RGWs. Chart 4 compares the performance of Ceph Storage 3.3 and Ceph Storage 2.0 for the large object 100% read workload.

Chart 4: Large Object Size 100% Read Performance

Summary and up next

We acknowledge that the performance comparison shown above is not entirely "apples-to-apples." However, we believe that this study should give you key performance insights for different Ceph Storage versions and help you make informed decisions.

We tried our best to find common ground across the two studies. Interestingly, with roughly half the number of spindles (110 in the Ceph Storage 3.3 test vs. 210 in the Ceph Storage 2.0 test), Ceph Storage 3.3 with the BlueStore OSD backend and the Beast RGW frontend delivered better than 5x higher Ops for small objects and better than 2x higher bandwidth for the large object 100% write workload. In the next post, we will uncover the performance of storing 1 billion-plus objects in a Ceph cluster. Stay tuned.
