OpenShift Virtualization is a great solution for non-containerized applications, but it introduces some challenges compared with legacy virtualization solutions and bare-metal systems. One of those challenges is interacting with virtual machines (VMs). OpenShift is geared toward containerized applications, which usually do not need incoming connections to be configured and managed (at least not the type of connections a VM needs for management or use).

In this post, we discuss the various methods for accessing VMs running in an OpenShift Virtualization environment. Here is a brief summary of those methods:

OpenShift user interface (UI)

OpenShift Virtualization provides VNC connections through its UI, giving direct access to a VM's console. Serial console connections are also available in the UI and need no configuration when using images provided by Red Hat. These connection methods are useful for troubleshooting VMs.

virtctl command

The virtctl command uses WebSockets to connect to a VM. It provides VNC console, serial console, and SSH access to the VM. VNC and serial console access are provided by OpenShift Virtualization, as in the UI. VNC console access requires a VNC client on the machine that runs the virtctl command. Serial console access requires the same VM configuration as serial console access through the UI. SSH access requires the VM's operating system to be configured for SSH; see the documentation for the VM image for its SSH requirements.

Pod network

Exposing a port using a service allows network connections into a VM. Any port on a VM can be exposed using a service. The most commonly used ports are 22 (SSH), 5900+ (VNC), and 3389 (RDP). This post covers three types of services:

ClusterIP

A ClusterIP service exposes a VM's port inside the cluster. This allows VMs to communicate with each other, but it does not accept connections from outside the cluster.

NodePort

A NodePort service exposes a VM's port outside the cluster through the cluster nodes. The VM's port is mapped to a port on the nodes. The port on the nodes is usually not the same as the port on the VM. The VM is reached by connecting to a node's IP and the appropriate port number.

LoadBalancer (LB)

An LB service provides a pool of IP addresses for VMs to use. This service type exposes a VM's port outside the cluster. An obtained or specified IP address from the pool is used to connect to the VM.

Layer 2 (L2) interface

A network interface on the cluster nodes can be configured as a bridge to allow L2 connectivity to a VM. The VM's interface attaches to the bridge using a NetworkAttachmentDefinition object. This bypasses the cluster's network stack and exposes the VM's interface directly to the bridged network. By bypassing the cluster's network stack, this method also bypasses the cluster's built-in security. The VM must be secured in the same way as a physical server connected to the network.

A bit about the cluster

The cluster used in this post is named wd and is in the example.org domain. It consists of three bare-metal control plane nodes (wcp-0, wcp-1, and wcp-2) and three bare-metal worker nodes (wwk-0, wwk-1, and wwk-2). These nodes are on the cluster's primary network (10.19.3.0/24).

| Node | Role | IP | FQDN |
|---|---|---|---|
| wcp-0 | control plane | 10.19.3.95 | wcp-0.wd.example.org |
| wcp-1 | control plane | 10.19.3.94 | wcp-1.wd.example.org |
| wcp-2 | control plane | 10.19.3.93 | wcp-2.wd.example.org |
| wwk-0 | worker | 10.19.3.92 | wwk-0.wd.example.org |
| wwk-1 | worker | 10.19.3.91 | wwk-1.wd.example.org |
| wwk-2 | worker | 10.19.3.90 | wwk-2.wd.example.org |

MetalLB is configured to provide four IP addresses (10.19.3.112 to 10.19.3.115) to VMs from this network.
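The post does not show the MetalLB configuration itself. As a rough sketch, an address pool like the one described above could be declared as follows. The resource names vm-pool and vm-l2 are hypothetical, and the sketch assumes MetalLB runs in layer 2 mode in the metallb-system namespace, which the post does not state.

```shell
#!/bin/sh
# Print a MetalLB address pool manifest for the four addresses used in this post.
# Assumptions (not from the post): layer 2 mode, the metallb-system namespace,
# and the hypothetical resource names vm-pool and vm-l2.
metallb_pool_manifest() {
    cat <<'EOF'
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: vm-pool
  namespace: metallb-system
spec:
  addresses:
    - 10.19.3.112-10.19.3.115
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: vm-l2
  namespace: metallb-system
spec:
  ipAddressPools:
    - vm-pool
EOF
}

# Print the manifest for review.
metallb_pool_manifest
```

On a cluster with the MetalLB Operator installed, the manifest could be applied with `metallb_pool_manifest | oc apply -f -`.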

The cluster nodes have a secondary network interface on the 10.19.136.0/24 network. This secondary network has a DHCP server that hands out IP addresses.

The cluster has the following operators installed. All of them are provided by Red Hat, Inc.

| Operator | Why it is installed |
|---|---|
| Kubernetes NMState Operator | Used to configure the second interface on the nodes |
| OpenShift Virtualization | Provides the mechanisms to run VMs |
| Local Storage | Needed by the OpenShift Data Foundation Operator when local HDDs are used |
| MetalLB Operator | Provides the LoadBalancer service used in this post |
| OpenShift Data Foundation | Provides storage for the cluster. The storage is created using a second HDD on the nodes. |

There are a few VMs running in the cluster's blog namespace:

- A Fedora 38 VM named fedora
- A Red Hat Enterprise Linux 9 (RHEL9) VM named rhel9
- A Windows 11 VM named win11

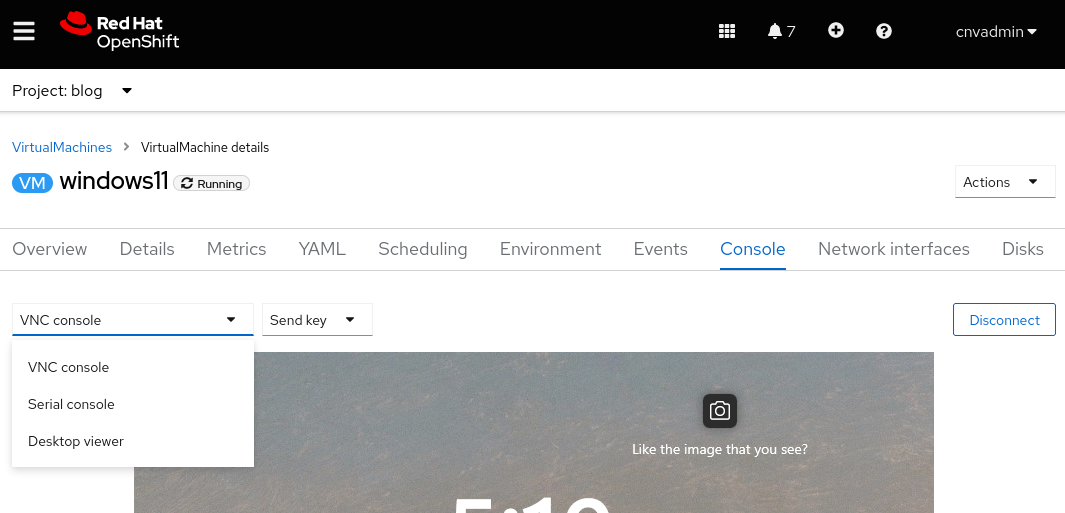

Connecting through the UI

When viewing a VM in the UI, you will find several tabs. They all provide ways to view or configure various aspects of the VM. One tab worth noting is the Console tab. It offers three methods to connect to the VM: "VNC console", "Serial console", and "Desktop viewer" (RDP). The RDP method is shown only for VMs running Microsoft Windows.

VNC console

The "VNC console" method is always available for any VM. The VNC service is provided by OpenShift Virtualization and requires no configuration of the VM's operating system. It just works.

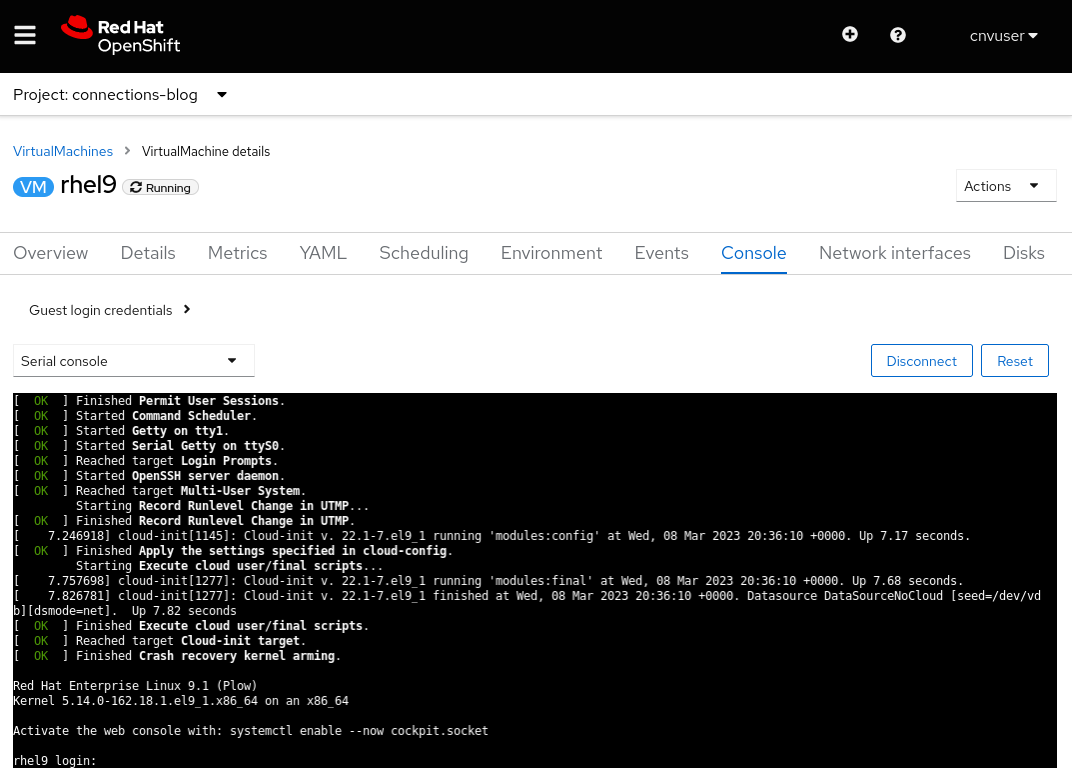

Serial console

The "Serial console" method requires configuration within the VM's operating system. If the operating system is not configured to send output to the VM's serial port, this connection method does not work. The VM images provided by Red Hat are configured to send boot information to the serial port and to show a login prompt once the VM has finished booting.

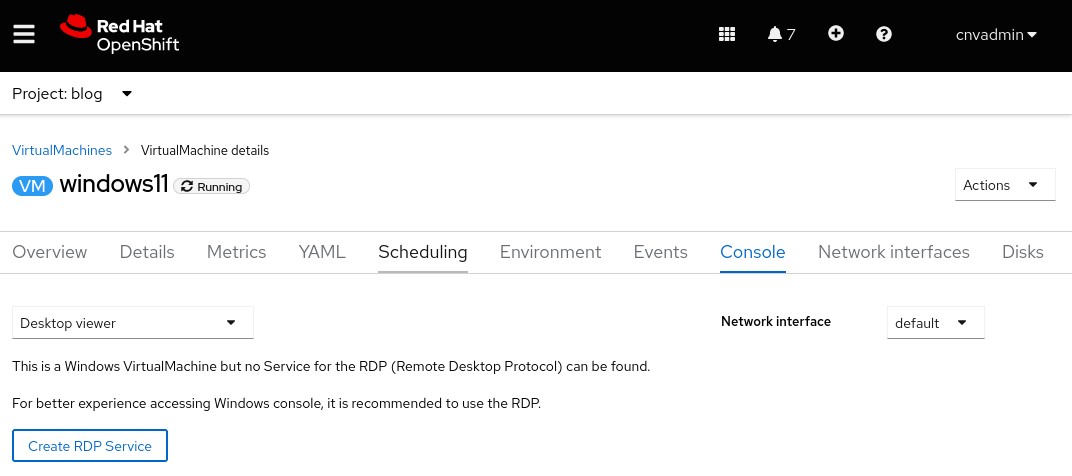

Desktop viewer

This connection method requires a remote desktop (RDP) service to be installed and running on the VM. When you choose to connect using RDP in the "Console" tab, the system indicates that there is no RDP service for the VM and offers an option to create one. Selecting that option opens a pop-up window with an "Expose RDP Service" checkbox. Check the box to create a service for the VM that allows RDP connections.

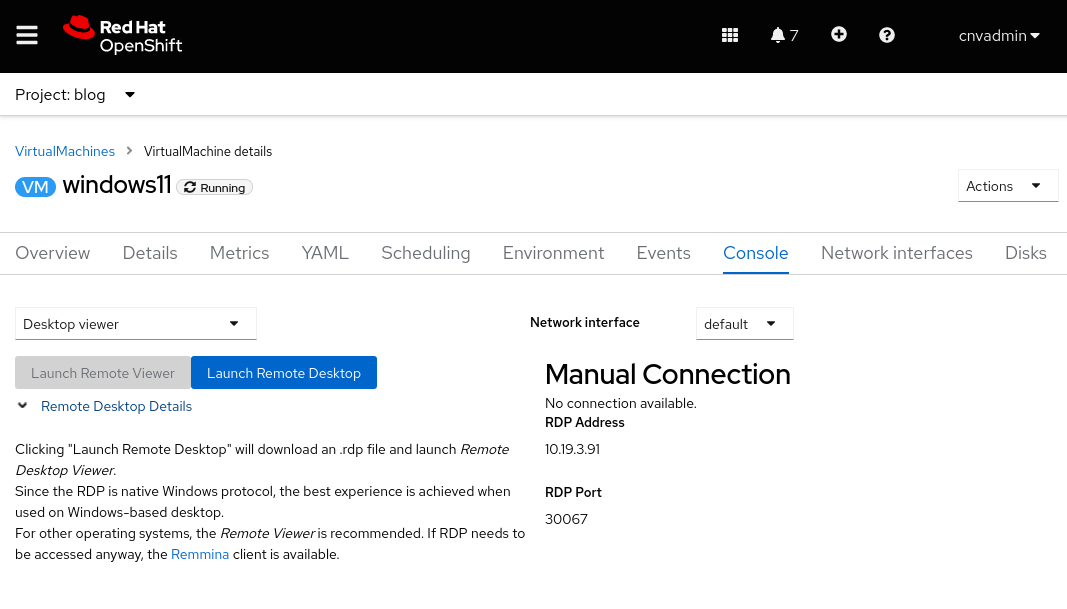

After the service is created, the "Console" tab shows the information for the RDP connection.

A "Launch Remote Desktop" button is also displayed. Clicking it downloads a file named console.rdp. If your browser is configured to open .rdp files, console.rdp opens in an RDP client.

Connecting with the virtctl command

The virtctl command provides VNC console, serial console, and SSH access to the VM using a network tunnel over the WebSocket protocol.

- To run the virtctl command, the user must be logged in to the cluster on the command line.
- If the user is not in the same namespace as the VM, the --namespace option must be specified.

The correct version of virtctl and other clients can be downloaded from your cluster using a URL such as https://console-openshift-console.apps.NOMEDOCLUSTER.DOMINIODOCLUSTER/command-line-tools, where NOMEDOCLUSTER and DOMINIODOCLUSTER stand for your cluster name and cluster domain. Another option is to click the question mark icon at the top of the UI and select Command line tools.

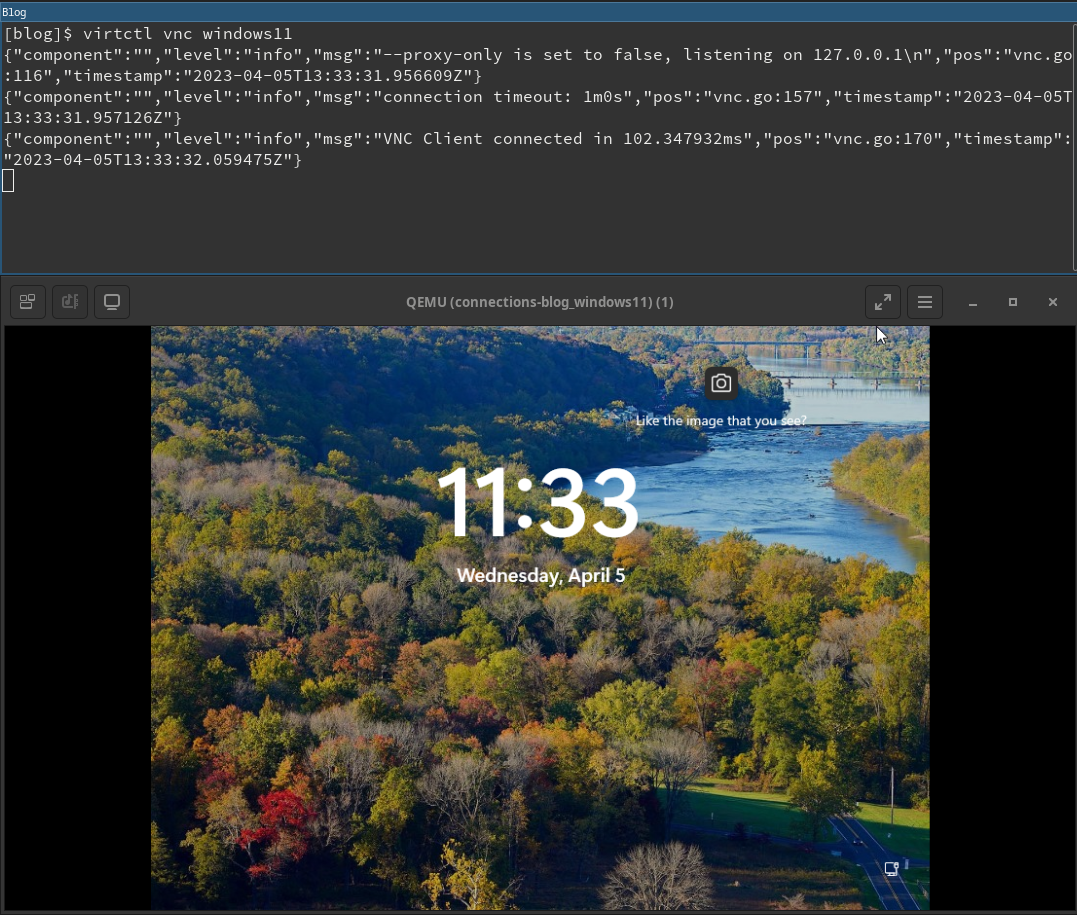

VNC console

The virtctl command connects to the VNC server provided by OpenShift Virtualization. The system running the command needs both virtctl and a VNC client installed.

To open a VNC connection, simply run the virtctl vnc command. Connection information is shown in the terminal, and a new VNC console session opens.

Serial console

To connect to the serial console using virtctl, run virtctl console. If the VM is configured to send output to its serial port, as discussed earlier, the output of the boot process or a login prompt is displayed.

$ virtctl console rhel9

Successfully connected to rhel9 console. The escape sequence is ^]

[ 8.463919] cloud-init[1145]: Cloud-init v. 22.1-7.el9_1 running 'modules:config' at Wed, 05 Apr 2023 19:05:38 +0000. Up 8.41 seconds.

[ OK ] Finished Apply the settings specified in cloud-config.

Starting Execute cloud user/final scripts...

[ 8.898813] cloud-init[1228]: Cloud-init v. 22.1-7.el9_1 running 'modules:final' at Wed, 05 Apr 2023 19:05:38 +0000. Up 8.82 seconds.

[ 8.960342] cloud-init[1228]: Cloud-init v. 22.1-7.el9_1 finished at Wed, 05 Apr 2023 19:05:38 +0000. Datasource DataSourceNoCloud [seed=/dev/vdb][dsmode=net]. Up 8.95 seconds

[ OK ] Finished Execute cloud user/final scripts.

[ OK ] Reached target Cloud-init target.

[ OK ] Finished Crash recovery kernel arming.

Red Hat Enterprise Linux 9.1 (Plow)

Kernel 5.14.0-162.18.1.el9_1.x86_64 on an x86_64

Activate the web console with: systemctl enable --now cockpit.socket

rhel9 login: cloud-user

Password:

Last login: Wed Apr 5 15:05:15 on ttyS0

[cloud-user@rhel9 ~]$

SSH

To invoke the SSH client, use the virtctl ssh command. Its -i option lets the user specify a private key to use.

$ virtctl ssh cloud-user@rhel9-one -i ~/.ssh/id_rsa_cloud-user

Last login: Wed May 3 16:06:41 2023

[cloud-user@rhel9-one ~]$

You can also use the virtctl scp command to transfer files to a VM. It is mentioned here because it works similarly to virtctl ssh.

Port forwarding

The virtctl command can also forward traffic from local ports on the user's machine to a port on the VM. See the OpenShift documentation for more information on how this works.

One use is to forward your local OpenSSH client to the VM, because it is more robust than the SSH client built into the virtctl command. See the KubeVirt documentation for an example.

Another use is to connect to a service on a VM when you do not want to create an OpenShift service to expose the port.

For example, I have a VM named fedora-proxy with the NGINX web server installed. A custom script on the VM writes some statistics to a file named process-status.out. I am the only person interested in the file's contents, but I would like to view it throughout the day. I can use the virtctl port-forward command to forward a local port on my laptop or desktop to port 80 of the VM, and write a short script to collect the data whenever I want.

#!/bin/bash
# Create a tunnel in the background
virtctl port-forward vm/fedora-proxy 22080:80 &
# Need to give a little time for the tunnel to come up
sleep 1
# Get the data
curl http://localhost:22080/process-status.out
# Stop the tunnel by killing this script's child processes
pkill -P $$

Running the script gets the data I want and cleans up afterward.

$ gather_stats.sh

{"component":"","level":"info","msg":"forwarding tcp 127.0.0.1:22080 to 80","pos":"portforwarder.go:23","timestamp":"2023-05-04T14:27:54.670662Z"}

{"component":"","level":"info","msg":"opening new tcp tunnel to 80","pos":"tcp.go:34","timestamp":"2023-05-04T14:27:55.659832Z"}

{"component":"","level":"info","msg":"handling tcp connection for 22080","pos":"tcp.go:47","timestamp":"2023-05-04T14:27:55.689706Z"}

Test Process One Status: Bad

Test Process One Misses: 125

Test Process Two Status: Good

Test Process Two Misses: 23
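The local OpenSSH approach mentioned earlier in this section can be sketched along the same lines. This is a minimal sketch, assuming your virtctl version supports the --stdio flag of port-forward and that the VM is named rhel9 in the blog namespace. The helper names vm_proxy_command and ssh_via_virtctl are made up for illustration.

```shell
#!/bin/sh
# Build a ProxyCommand string that tunnels SSH through virtctl port-forward.
# Usage: vm_proxy_command <vm-name> <namespace> [port]
# Assumption: the installed virtctl supports "port-forward --stdio=true".
vm_proxy_command() {
    printf 'virtctl port-forward --stdio=true --namespace %s vm/%s %s\n' \
        "$2" "$1" "${3:-22}"
}

# Connect with the local OpenSSH client (needs virtctl and cluster access).
# Usage: ssh_via_virtctl <user> <vm-name> <namespace>
ssh_via_virtctl() {
    ssh -o "ProxyCommand=$(vm_proxy_command "$2" "$3")" "$1@$2"
}

# Show the command OpenSSH would run as its proxy.
vm_proxy_command rhel9 blog
```

With this in place, `ssh_via_virtctl cloud-user rhel9 blog` would hand the connection to the regular ssh client, so options such as agent forwarding or entries in ~/.ssh/config apply as usual.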

Connecting using a port exposed on the pod network (services)

Services

Services in OpenShift are used to expose a VM's ports to incoming traffic, which can come from other VMs and pods or from a source outside the cluster.

This post shows how to create three types of services: ClusterIP, NodePort, and LoadBalancer. The ClusterIP service type does not allow external access to VMs. All three types provide internal access between VMs and pods and are the preferred method for VMs inside the cluster to communicate with each other. The following table lists the three service types with their scopes of accessibility.

| Type | Internal scope (cluster internal DNS) | External scope |
|---|---|---|
| ClusterIP | <service-name>.<namespace>.svc.cluster.local | None |
| NodePort | <service-name>.<namespace>.svc.cluster.local | IP address of a cluster node |
| LoadBalancer | <service-name>.<namespace>.svc.cluster.local | External IP address from the LoadBalancer's ipAddressPools |
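The internal DNS name in the table follows a fixed pattern, so it can be built from the service name and namespace. The helper below is only an illustration (service_fqdn is a made-up name), and it assumes the default cluster.local cluster domain shown in the table.

```shell
#!/bin/sh
# Build the cluster-internal DNS name of a service.
# Usage: service_fqdn <service-name> <namespace>
# Assumption: the cluster uses the default cluster.local domain.
service_fqdn() {
    printf '%s.%s.svc.cluster.local\n' "$1" "$2"
}

# Example: a service named fedora-internal-ssh in the blog namespace.
service_fqdn fedora-internal-ssh blog
```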

These services can be created using the virtctl expose command or by defining them in YAML. A service defined in YAML can be created from either the command line or the UI.

First, let's define a service using the virtctl command.

Creating a service with the virtctl command

The user must be logged in to the cluster to use the virtctl command. If the user is not in the same namespace as the VM, the --namespace option must be used to specify the VM's namespace.

The virtctl expose vm command creates a service that exposes a port of the VM. The following options are the ones most commonly used with virtctl expose when creating a service.

| Option | Description |
|---|---|
| --name | Name of the service to create. |
| --type | The type of service to create: ClusterIP, NodePort, or LoadBalancer. |
| --port | The port number on which the service listens for traffic. |
| --target-port | Optional. The VM port to expose. If not specified, it is the same as --port. |
| --protocol | Optional. The protocol the service should listen for. The default is TCP. |

The following command creates a service for SSH access to a VM named rhel9.

$ virtctl expose vm rhel9 --name rhel9-ssh --type NodePort --port 22

View the service to determine the port to use to access the VM from outside the cluster.

$ oc get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

rhel9-ssh NodePort 172.30.244.228 <none> 22:32317/TCP 3s
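In the PORT(S) column, the number before the colon is the service port and the number after it is the node port. A small sketch of pulling the node port out, either from that column or straight from the API (the function names are made up for illustration):

```shell
#!/bin/sh
# Extract the node port from a PORT(S) entry such as "22:32317/TCP".
node_port_from_entry() {
    entry=${1#*:}                  # drop the service port and colon -> "32317/TCP"
    printf '%s\n' "${entry%%/*}"   # drop the protocol suffix        -> "32317"
}

# Ask the API server for the first port's nodePort instead of parsing the table.
# Needs a logged-in oc session, so it is only defined here, not called.
service_node_port() {
    oc get service "$1" -o jsonpath='{.spec.ports[0].nodePort}'
}

node_port_from_entry "22:32317/TCP"
```

The jsonpath variant is usually the better choice in scripts, since it does not depend on the table layout.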

Let's delete the service for now.

$ oc delete service rhel9-ssh

service "rhel9-ssh" deleted

Creating a service in YAML

A service can be created from YAML on the command line using oc create -f or in an editor in the UI. Both methods work, and each has its own advantages. The command line is easier to script, but the UI provides help with the schema used to define the service.

First, let's discuss the YAML file, since it is the same for both methods.

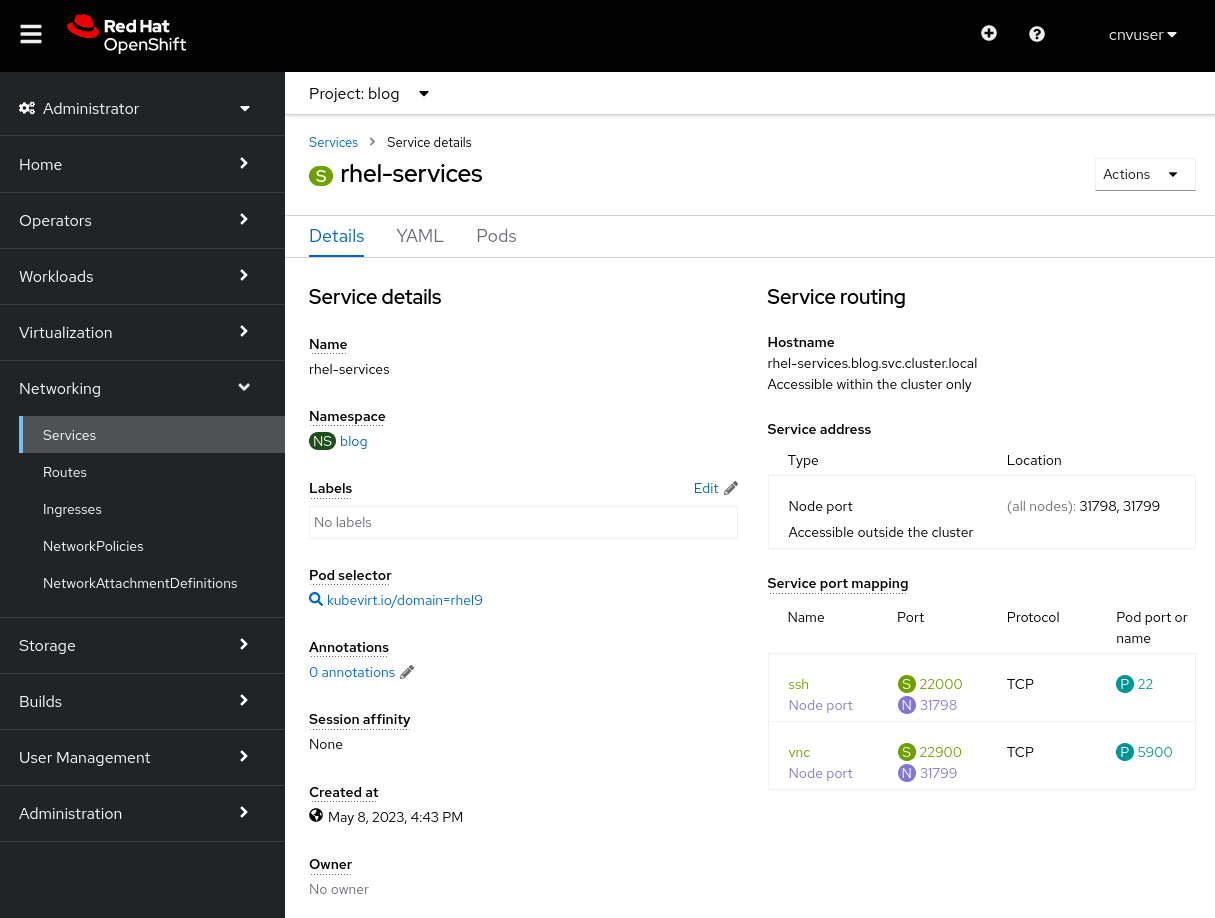

A single service definition can expose one or more ports. The YAML below is an example service definition that exposes two ports, one for SSH traffic and one for VNC traffic. The ports are exposed as a NodePort. Explanations of the key items follow the YAML.

apiVersion: v1
kind: Service
metadata:
  name: rhel-services
  namespace: blog
spec:
  ports:
    - name: ssh
      protocol: TCP
      nodePort: 31798
      port: 22000
      targetPort: 22
    - name: vnc
      protocol: TCP
      nodePort: 31799
      port: 22900
      targetPort: 5900
  type: NodePort
  selector:
    kubevirt.io/domain: rhel9

Here are some settings to note in the file:

| Setting | Description |
|---|---|
| metadata.name | The name of the service, which is unique within its namespace. |
| metadata.namespace | The namespace the service is in. |
| spec.ports.name | A name for the port being defined. |
| spec.ports.protocol | The network traffic protocol (TCP or UDP). |
| spec.ports.nodePort | The port exposed outside the cluster. It must be unique within the cluster. |
| spec.ports.port | A port used internally on the cluster network. |
| spec.ports.targetPort | The port exposed by the VM. Multiple VMs can expose the same port. |
| spec.type | The type of service to create. This example uses NodePort. |
| spec.selector | The selector used to bind the service to the VM. In this example, it binds to a VM named rhel9. |
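One detail worth checking before choosing a nodePort value: Kubernetes only accepts values from the node port range, which is 30000-32767 by default. The sketch below assumes the cluster keeps that default range; valid_node_port is a made-up helper name.

```shell
#!/bin/sh
# Check that a nodePort value lies in the default Kubernetes NodePort range.
# Assumption: the cluster keeps the default range 30000-32767.
valid_node_port() {
    [ "$1" -ge 30000 ] 2>/dev/null && [ "$1" -le 32767 ]
}

# 31798 and 31799 are the nodePort values from the YAML above;
# 22000 is its internal service port, shown here as a counterexample.
for port in 31798 31799 22000; do
    if valid_node_port "$port"; then
        echo "$port: usable as nodePort"
    else
        echo "$port: outside the default nodePort range"
    fi
done
```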

Creating a service on the command line

Let's create the two-port service from the YAML file using the command line. The command is oc create -f.

$ oc create -f service-two.yaml

service/rhel-services created

$ oc get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

rhel-services NodePort 172.30.11.97 <none> 22000:31798/TCP,22900:31799/TCP 4s

We can see that two ports are exposed in a single service. Now, let's remove the service using the oc delete service command.

$ oc delete service rhel-services

service "rhel-services" deleted

Creating a service in the UI

Let's create the same service using the UI. Go to "Networking -> Services" and select "Create Service". An editor opens with a prefilled service definition and a reference to the schema. Paste the YAML from above into the editor and click Create to create the service.

After you click Create, the details of the service are shown.

The services attached to a VM can also be seen on the VM's "Details" tab or from the command line with oc get service, as before. We remove the service as we did above.

$ oc get services

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

rhel-services NodePort 172.30.11.97 <none> 22000:31798/TCP,22900:31799/TCP 4s

$ oc delete service rhel-services

service "rhel-services" deleted

Creating SSH and RDP services the easy way

The UI provides simple point-and-click methods to create SSH and RDP services on VMs.

To enable SSH the easy way, use the SSH service type drop-down on the VM's "Details" tab. The drop-down makes it easy to create either a NodePort or a LoadBalancer service.

Once the service type is selected, the service is created. The UI displays a command that can be used to connect to the service, as well as the service it created.

To enable the RDP service, go to the VM's "Console" tab. If the VM is Windows-based, Desktop viewer appears as an option in the console drop-down.

Once it is selected, a Create RDP Service option appears.

Selecting the option opens a pop-up window with an Expose RDP Service checkbox.

After the service is created, the "Console" tab shows the connection information.

Example connection using a ClusterIP service

ClusterIP services allow VMs to connect to each other inside the cluster. This is useful when one VM provides a service to other VMs, such as a database instance. Instead of configuring a database on a VM, let's just expose SSH on the fedora VM using a ClusterIP.

Let's write a YAML file that creates a service exposing the SSH port of the fedora VM inside the cluster.

apiVersion: v1
kind: Service
metadata:
  name: fedora-internal-ssh
  namespace: blog
spec:
  ports:
    - protocol: TCP
      port: 22
  selector:
    kubevirt.io/domain: fedora
  type: ClusterIP

Let's apply the configuration.

$ oc create -f service-fedora-ssh-clusterip.yaml

service/fedora-internal-ssh created

$ oc get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

fedora-internal-ssh ClusterIP 172.30.202.64 <none> 22/TCP 7s

From the rhel9 VM, we can see that it is possible to connect to the fedora VM over SSH.

$ virtctl console rhel9

Successfully connected to rhel9 console. The escape sequence is ^]

rhel9 login: cloud-user

Password:

Last login: Wed May 10 10:20:23 on ttyS0

[cloud-user@rhel9 ~]$ ssh fedora@fedora-internal-ssh.blog.svc.cluster.local

The authenticity of host 'fedora-internal-ssh.blog.svc.cluster.local (172.30.202.64)' can't be established.

ED25519 key fingerprint is SHA256:ianF/CVuQ4kxg6kYyS0ITGqGfh6Vik5ikoqhCPrIlqM.

This key is not known by any other names

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added 'fedora-internal-ssh.blog.svc.cluster.local' (ED25519) to the list of known hosts.

Last login: Wed May 10 14:25:15 2023

[fedora@fedora ~]$

Example connection using a NodePort service

In this example, we expose RDP on the win11 VM using a NodePort service to get a better desktop connection experience than the "Console" tab offers. This connection is intended for trusted users who know the IPs of the cluster nodes.

A note about OVN-Kubernetes:

The latest version of the OpenShift installer defaults to the OVN-Kubernetes network stack. If a NodePort service is used on a cluster running OVN-Kubernetes, egress traffic from VMs does not work until routingViaHost is enabled.

A simple patch to the cluster enables egress traffic when using a NodePort service.

$ oc patch network.operator cluster -p '{"spec": {"defaultNetwork": {"ovnKubernetesConfig": {"gatewayConfig": {"routingViaHost": true}}}}}' --type merge

$ oc get network.operator cluster -o yaml
apiVersion: operator.openshift.io/v1
kind: Network
spec:
  defaultNetwork:
    ovnKubernetesConfig:
      gatewayConfig:
        routingViaHost: true
...

This patch is not needed if the cluster uses the OpenShiftSDN network stack or if a MetalLB service is used.

NodePort service example

To create the NodePort service, first define it in a YAML file.

apiVersion: v1
kind: Service
metadata:
  name: win11-rdp-np
  namespace: blog
spec:
  ports:
    - name: rdp
      protocol: TCP
      nodePort: 32389
      port: 22389
      targetPort: 3389
  type: NodePort
  selector:
    kubevirt.io/domain: windows11

Create the service.

$ oc create -f service-windows11-rdp-nodeport.yaml

service/win11-rdp-np created

$ oc get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

win11-rdp-np NodePort 172.30.245.211 <none> 22389:32389/TCP 5s

Because this is a NodePort service, we can connect to it using the IP of any node. The oc get nodes command shows the nodes' IP addresses.

$ oc get nodes -o=custom-columns=Name:.metadata.name,IP:status.addresses[0].address

Name IP

wcp-0 10.19.3.95

wcp-1 10.19.3.94

wcp-2 10.19.3.93

wwk-0 10.19.3.92

wwk-1 10.19.3.91

wwk-2 10.19.3.90

xfreerdp is a client program that can be used for RDP connections. We tell it to connect to the wcp-0 node using the RDP port exposed on the cluster.

$ xfreerdp /v:10.19.3.95:32389 /u:cnvuser /p:hiddenpass

[14:32:43:813] [19743:19744] [WARN][com.freerdp.crypto] - Certificate verification failure 'self-signed certificate (18)' at stack position 0

[14:32:43:813] [19743:19744] [WARN][com.freerdp.crypto] - CN = DESKTOP-FCUALC4

[14:32:44:118] [19743:19744] [INFO][com.freerdp.gdi] - Local framebuffer format PIXEL_FORMAT_BGRX32

[14:32:44:118] [19743:19744] [INFO][com.freerdp.gdi] - Remote framebuffer format PIXEL_FORMAT_BGRA32

[14:32:44:130] [19743:19744] [INFO][com.freerdp.channels.rdpsnd.client] - [static] Loaded fake backend for rdpsnd

[14:32:44:130] [19743:19744] [INFO][com.freerdp.channels.drdynvc.client] - Loading Dynamic Virtual Channel rdpgfx

[14:32:45:209] [19743:19744] [WARN][com.freerdp.core.rdp] - pduType PDU_TYPE_DATA not properly parsed, 562 bytes remaining unhandled. Skipping.

We have a connection to the VM.

Example connection using a LoadBalancer service

To create the LoadBalancer service, first define it in a YAML file. We use the win11 VM and expose RDP.

apiVersion: v1
kind: Service
metadata:
  name: win11-rdp-lb
  namespace: blog
spec:
  ports:
    - name: rdp
      protocol: TCP
      port: 3389
      targetPort: 3389
  type: LoadBalancer
  selector:
    kubevirt.io/domain: windows11

Create the service. We can see that it obtains an IP address automatically.

$ oc create -f service-windows11-rdp-loadbalancer.yaml

service/win11-rdp-lb created

$ oc get service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

win11-rdp-lb LoadBalancer 172.30.125.205 10.19.3.112 3389:31258/TCP 3s

We connect to the EXTERNAL-IP of the service on the standard RDP port, 3389, which the service exposes. The output of the xfreerdp command shows that the connection succeeded.

$ xfreerdp /v:10.19.3.112 /u:cnvuser /p:changeme

[15:51:21:333] [25201:25202] [WARN][com.freerdp.crypto] - Certificate verification failure 'self-signed certificate (18)' at stack position 0

[15:51:21:333] [25201:25202] [WARN][com.freerdp.crypto] - CN = DESKTOP-FCUALC4

[15:51:23:739] [25201:25202] [INFO][com.freerdp.gdi] - Local framebuffer format PIXEL_FORMAT_BGRX32

[15:51:23:739] [25201:25202] [INFO][com.freerdp.gdi] - Remote framebuffer format PIXEL_FORMAT_BGRA32

[15:51:23:752] [25201:25202] [INFO][com.freerdp.channels.rdpsnd.client] - [static] Loaded fake backend for rdpsnd

[15:51:23:752] [25201:25202] [INFO][com.freerdp.channels.drdynvc.client] - Loading Dynamic Virtual Channel rdpgfx

[15:51:24:922] [25201:25202] [WARN][com.freerdp.core.rdp] - pduType PDU_TYPE_DATA not properly parsed, 562 bytes remaining unhandled. Skipping.

No screenshot is included here because it is the same as the one above.

Connecting using the layer 2 interface

If the VM's interface will be used only internally and does not need to be exposed publicly, connecting using a NetworkAttachmentDefinition and a bridged interface on the nodes can be a good choice. This method bypasses the cluster's network stack, so the stack does not have to process every packet, which can improve network traffic performance.

This method has some drawbacks, however. VMs are exposed directly to a network and are not protected by any of the cluster's security mechanisms. If a VM is compromised, an intruder could gain access to the networks the VM is connected to. When using this method, take care to provide appropriate security within the VM's operating system.

NMState

The NMState operator provided by Red Hat can be used to configure physical interfaces on the nodes after the cluster is deployed. Various configurations can be applied, including bridges, VLANs, bonds, and more. We use it to configure a bridge on an unused interface on each node in the cluster. See the OpenShift documentation for more information on using the NMState operator.

Let's configure a simple bridge on an unused interface on the nodes. The interface is attached to a network that provides DHCP and hands out addresses on the 10.19.142.0 network. The following YAML creates a bridge named brint on the ens5f1 network interface.

---
apiVersion: nmstate.io/v1
kind: NodeNetworkConfigurationPolicy
metadata:
  name: brint-ens5f1
spec:
  nodeSelector:
    node-role.kubernetes.io/worker: ""
  desiredState:
    interfaces:
      - name: brint
        description: Internal Network Bridge
        type: linux-bridge
        state: up
        ipv4:
          enabled: false
        bridge:
          options:
            stp:
              enabled: false
          port:
            - name: ens5f1

Apply the YAML file to create the bridge on the worker nodes.

$ oc create -f brint.yaml

nodenetworkconfigurationpolicy.nmstate.io/brint-ens5f1 created

Use the oc get nncp command to view the state of the NodeNetworkConfigurationPolicy. Use the oc get nnce command to view the state of the configuration on individual nodes. Once the configuration has been applied, STATUS shows Available and REASON shows SuccessfullyConfigured in the output of both commands. The output below was captured while the rollout was still in progress.

$ oc get nncp

NAME STATUS REASON

brint-ens5f1 Progressing ConfigurationProgressing

$ oc get nnce

NAME STATUS REASON

wwk-0.brint-ens5f1 Pending MaxUnavailableLimitReached

wwk-1.brint-ens5f1 Available SuccessfullyConfigured

wwk-2.brint-ens5f1 Progressing ConfigurationProgressing
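Rather than rerunning the commands by hand, a script can check whether every node has finished. The following is a minimal sketch that reads `oc get nnce` output on standard input; all_nnce_available is a made-up helper name, and the sample input is the output shown above.

```shell
#!/bin/sh
# Succeed only if every enactment row read from stdin has STATUS "Available".
# The header row and blank lines are ignored.
all_nnce_available() {
    awk 'NF && $1 != "NAME" && $2 != "Available" { bad = 1 } END { exit bad }'
}

# Sample input taken from this post; on a cluster, use: oc get nnce | all_nnce_available
if all_nnce_available <<'EOF'
NAME STATUS REASON
wwk-0.brint-ens5f1 Pending MaxUnavailableLimitReached
wwk-1.brint-ens5f1 Available SuccessfullyConfigured
wwk-2.brint-ens5f1 Progressing ConfigurationProgressing
EOF
then
    echo "all nodes configured"
else
    echo "still rolling out"
fi
```

Another option, assuming the policy reports an Available condition as kubernetes-nmstate policies normally do, is to block until it is ready with `oc wait nncp brint-ens5f1 --for=condition=Available --timeout=5m`.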

NetworkAttachmentDefinition

VMs cannot attach directly to the bridge we created; they attach to it through a NetworkAttachmentDefinition (NAD). The following creates a NAD named nad-brint that attaches to the brint bridge created on the nodes. See the OpenShift documentation for an explanation of how to create a NAD.

apiVersion: "k8s.cni.cncf.io/v1"
kind: NetworkAttachmentDefinition
metadata:
  name: nad-brint
  annotations:
    k8s.v1.cni.cncf.io/resourceName: bridge.network.kubevirt.io/brint
spec:
  config: '{
    "cniVersion": "0.3.1",
    "name": "nad-brint",
    "type": "cnv-bridge",
    "bridge": "brint",
    "macspoofchk": true
  }'

After applying the YAML, the NAD can be viewed using the oc get network-attachment-definition command.

$ oc create -f brint-nad.yaml

networkattachmentdefinition.k8s.cni.cncf.io/nad-brint created

$ oc get network-attachment-definition

NAME AGE

nad-brint 19s

The NAD can also be created in the UI under "Networking -> NetworkAttachmentDefinitions".

Example connection using the layer 2 interface

With the NAD created, a network interface can be added to the VM, or an existing interface can be modified to use it. To add a new interface, go to the VM's details and select the "Network interfaces" tab, then choose Add network interface. To modify an existing interface, select the three-dot menu next to it.

After restarting the VM, the "Overview" tab in the VM's details shows the IP address received from DHCP.

We can now connect to the VM using the IP address it acquired from the DHCP server on the bridged interface.

$ ssh cloud-user@10.19.142.213 -i ~/.ssh/id_rsa_cloud-user

The authenticity of host '10.19.142.213 (10.19.142.213)' can't be established.

ECDSA key fingerprint is SHA256:0YNVhGjHmqOTL02mURjleMtk9lW5cfviJ3ubTc5j0Dg.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '10.19.142.213' (ECDSA) to the list of known hosts.

Last login: Wed May 17 11:12:37 2023

[cloud-user@rhel9 ~]$

The SSH traffic passes from the VM through the bridge and out the physical network interface. The traffic bypasses the pod network and appears to be on the network where the bridged interface resides. The VM is not protected by any firewall when connected this way, and all of its ports are accessible, including those used for SSH and VNC.

Conclusion

We have looked at several methods of connecting to VMs running on OpenShift Virtualization. These methods provide ways to troubleshoot VMs that are not working properly, as well as to connect to them for day-to-day use. VMs can interact with each other locally within the cluster, and systems outside the cluster can reach them either directly or through the cluster's pod network. All of this eases the transition when migrating physical systems and VMs on other platforms to OpenShift Virtualization.
