Tekton grew out of the Knative project and was later endorsed by Red Hat as the future of pipelines on Red Hat OpenShift. When I first heard about Tekton in 2018, my initial reaction was: "What problem are we trying to solve?" After all, I know Jenkins and I like it. Why go through the effort of learning a new technology when the one I use now works well?

Whenever I asked why Tekton is better than Jenkins, the most common answer was "Tekton is cloud-native," usually followed by silence or a quick change of subject. So I went looking for a clear definition of "cloud-native," hoping for an epiphany.

In 2018, the Cloud Native Computing Foundation (CNCF) published this definition: "Cloud native technologies empower organizations to build and run scalable applications in modern, dynamic environments such as public, private, and hybrid clouds."

Nothing out of the ordinary so far. On top of that, I have an irrational attachment to tools that work well and that I have used for years. To set aside my trusty Jenkins, I wanted to be far more convinced that the grass really is greener on the other side of the fence. Tekton had to offer me something well beyond what I already had with Jenkins.

In the end, I concluded that Tekton integrates better with the OpenShift/k8s space and opens up opportunities that Jenkins does not necessarily offer.

The rest of this article explains why. If you are asking yourself the same questions, I hope you find some of the answers you are looking for.

My experience with Jenkins

Let's be honest: Jenkins is an old institution. It appeared around 2005 and has not changed much since. Its greatest strength is its huge selection of plug-ins, which provide a simple interface for practically any scenario. But that is also a weakness, because the plug-ins have an indeterminate software lifecycle. If one of them has a problem, you usually have to look for an alternative.

Jenkins is Java-based and demands a lot of memory and CPU because it is always running. As the cost of compute resources adds up, this can start to look like a problem.

In the pre-container era, there was usually one "big" Jenkins: a single server for the entire development department. That server was the bottleneck. Struggling under the heavy load placed on it, Jenkins would often tie itself in knots and need a restart, which took us back to square one.

Then came containers, which let each team have its own Jenkins server and configure it however it likes. But that created another problem: Jenkins sprawl. That is, many Jenkins servers running, doing very little most of the time, and consuming capacity. Not to mention the different kinds of pipeline code that each team propagates.

So it would be good to have a solution with a minimal footprint that could be decentralized to each team while encouraging all pipelines to look alike. Keep this in mind, because we will come back to it later.

About process sequencing models

In a software system, we need to arrange the sequence of service calls and process phases to produce a result. There are two recognized ways of doing this.

The first is orchestration, typified by the Process Manager pattern.

Source (this file is licensed under the Creative Commons Attribution-Share Alike 4.0 International license.)

The Process Manager acts like a conductor leading an orchestra, hence the name orchestration.

Jenkins is an example of this pattern. It behaves as a process manager, the conductor. The process is defined and coded in the Jenkinsfile. Everything Jenkins handles through its various plug-in-based interfaces reports back to the process manager as complete, allowing the next step of the process to start. In most cases, this behavior appears to be synchronous.

The Process Manager pattern is described in Refactoring to Patterns (Kerievsky 2004) as "inherently brittle." That is because when someone decides to restart the process manager, the tasks running at that moment are interrupted. The design therefore does not work well for long-running processes.

Now let's look at the second sequencing pattern. The following image shows a crowd doing the wave in a stadium.

Source. (This file is licensed under the Creative Commons Attribution-Share Alike 2.0 Generic license.)

Each member of the crowd knows when to stand up and raise their hands without anyone directing them. In a large stadium, orchestration could not handle everything happening at once. This is an example of the other sequencing pattern, called choreography.

Orchestration is the initial design choice for most developers. Choreography, although a less obvious choice, often turns out to be the improved design that emerges from a rewrite, and it works very well with events.

This is very relevant for microservices, where hundreds of services may need to be sequenced. Likewise, in scenarios with long-running pipeline activities such as deployment, testing, and teardown, choreography is a much more robust and practical model.

Tekton pipelines run in separate containers that are sequenced using internal Kubernetes events on the Kubernetes API server. They are an example of event-driven choreography. There is no single process manager that can be restarted, hang, hog resources, or otherwise fail. Tekton lets each pod be instantiated when needed to run the pipeline step it is responsible for. When the step completes, the pod terminates, freeing the resources it used for another task.
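
To make the choreography idea concrete, here is a minimal in-memory sketch. It is not how Tekton itself is implemented: the `EventBus` class, the `make_step` helper, and the event names are all hypothetical stand-ins for the cluster's event stream and for short-lived pods. The point is only that each step subscribes to the event that should wake it up, and no central loop dictates the order.

```python
from collections import defaultdict


class EventBus:
    """Hypothetical in-memory stand-in for a cluster event stream."""

    def __init__(self):
        self._handlers = defaultdict(list)
        self.log = []  # every event published, in order

    def subscribe(self, event, handler):
        self._handlers[event].append(handler)

    def publish(self, event):
        self.log.append(event)
        for handler in list(self._handlers[event]):
            handler()


def make_step(bus, name, starts_on):
    """Register a step that starts itself when `starts_on` is published."""

    def run():
        # A real step would do its work in a short-lived pod and then exit.
        bus.publish(f"{name}.done")

    bus.subscribe(starts_on, run)


def run_choreography():
    bus = EventBus()
    # Each step only knows which event it reacts to, not the whole sequence.
    make_step(bus, "build", starts_on="pipeline.started")
    make_step(bus, "test", starts_on="build.done")
    make_step(bus, "deploy", starts_on="test.done")
    bus.publish("pipeline.started")
    return bus.log
```

Calling `run_choreography()` returns the events in the order they fired. Adding or removing a step means changing one subscription, not editing a central script.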

The ideal of loose coupling and cadence through reuse

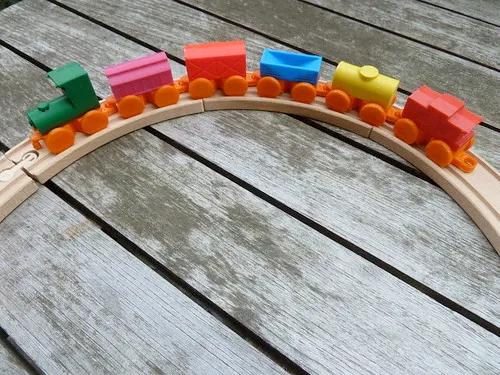

Tekton also exhibits what software architecture calls loose coupling. Consider the following image of a child's toy train track:

"Toy train for wood tracks" by Ultra-lab, licensed under CC BY-SA 2.0.

Because the connection between the engine and the carriages is magnetic, the child can play with the engine alone or with all the carriages attached, among other combinations.

The child can also change the order of the carriages, for example swapping the light green and yellow ones as desired. With a second, similar set, all of its carriages could be added to the first to make a "super train."

This shows why loose coupling is the ideal architecture for software design. It inherently promotes reuse, which is a significant part of the Tekton philosophy. Once created, components can be shared between projects very easily.

To take the concept further, let's explore tight coupling, the opposite of what we have just seen. Consider another toy:

"09506_Pull-Along Caterpillar" by PINTOY®, licensed under CC BY-SA 2.0.

The caterpillar also has several sections, but they are fixed and cannot be reordered. You cannot have fewer segments or add more to make a super caterpillar. A child who wanted fewer segments would have to persuade their parents to buy a different toy.

In this example, we see that tight coupling does not promote reuse; it forces duplication.

Yes, I agree that Jenkins pipelines can be loosely coupled and that their code can be shared between projects. But that is not a given, and it depends heavily on how the pipelines were designed.

The alternative is to use one Jenkins pipeline for all projects. That is restrictive, though, and you soon meet a project with needs that the one-size-fits-all approach does not cover. At that point, the integrity of the single-pipeline design is broken.

Tekton is declarative by nature, and its pipelines closely resemble the wooden train example. You can picture Tekton's top-level object, the pipeline, as the engine. The pipeline contains a set of tasks, represented by the carriages. Different pipelines can contain the same tasks, reused with new parameters. With loose coupling, parameterized tasks are chained together to form pipelines. Tekton strongly promotes loose coupling, creating direct opportunities for reuse. In other words, work done for one project can be put to use elsewhere.
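
The train analogy can be sketched in a few lines. The example below builds two pipeline definitions as plain Python dictionaries that reuse the same tasks with different parameters. The field names (`taskRef`, `params`, `runAfter`) follow the Tekton Pipeline resource as I understand it, so check them against the Tekton documentation for your version. The task names (`build-image`, `run-tests`, `scan-image`), the parameter names, and the two helper functions are hypothetical and exist only for illustration.

```python
import json


def task_ref(step_name, task_name, params, run_after=None):
    """One 'carriage': a reference to a shared task plus its parameters."""
    entry = {
        "name": step_name,
        "taskRef": {"name": task_name},
        "params": [{"name": k, "value": v} for k, v in params.items()],
    }
    if run_after:
        entry["runAfter"] = list(run_after)
    return entry


def pipeline(name, tasks):
    """The 'engine': a pipeline that chains task references together."""
    return {
        "apiVersion": "tekton.dev/v1",
        "kind": "Pipeline",
        "metadata": {"name": name},
        "spec": {"tasks": tasks},
    }


def build_pipelines():
    # Both pipelines reuse the same hypothetical tasks with new parameters.
    web = pipeline("web-app", [
        task_ref("build", "build-image", {"context": "web/"}),
        task_ref("test", "run-tests", {"suite": "unit"}, run_after=["build"]),
    ])
    api = pipeline("api-service", [
        task_ref("build", "build-image", {"context": "api/"}),
        task_ref("test", "run-tests", {"suite": "integration"},
                 run_after=["build"]),
        task_ref("scan", "scan-image", {"severity": "high"},
                 run_after=["test"]),
    ])
    return web, api


if __name__ == "__main__":
    for manifest in build_pipelines():
        print(json.dumps(manifest, indent=2))
```

The second pipeline adds a carriage (`scan`) without touching the tasks it shares with the first, which is the kind of reuse the wooden train illustrates.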

In addition, Tekton is implemented as additional Kubernetes object definitions, so it fits very easily into an everything-as-code and GitOps approach. Pipeline code can easily be applied to the cluster alongside other cluster and namespace configuration.

Why does all this matter?

Because the concept of formal development, test, and UAT environments is largely outdated. Fixed environments date back to the need to purchase physical servers and assign a use to each one.

That is how it used to be. In a world of OpenShift and everything-as-code, with different styles of server management (such as "pets versus cattle"), there is no reason these environments cannot be instantiated dynamically by pipelines, with the tests then run against them. Once the tests complete, the environments can be torn down again to make way for other tests, and so on.
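
The create, test, and tear down cycle described above can be sketched with a Python context manager. Nothing here talks to a real cluster: `ephemeral_environment` and `run_tests_in` are hypothetical helpers that only record what a pipeline would do, and the environment and test names are placeholders.

```python
from contextlib import contextmanager


@contextmanager
def ephemeral_environment(name, events):
    """Hypothetical helper: create an environment, always tear it down."""
    events.append(f"create {name}")
    try:
        yield name
    finally:
        # Runs even if a test raises, so the capacity is always released.
        events.append(f"destroy {name}")


def run_tests_in(name, tests):
    events = []
    with ephemeral_environment(name, events) as env:
        for test in tests:
            events.append(f"run {test} in {env}")
    return events
```

The `finally` clause is the design choice that matters: the environment is released whether the tests pass or fail, so the next run starts from a clean slate.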

This is definitely not yet the case in most companies, perhaps because the tools available until now were not ideal.

Conclusion

The world is still catching up with all the opportunities that technologies like OpenShift offer and that were not possible in the past.

With so many compute resources sitting idle for at least most of the time, the opportunities to automate testing and deliver better software are endless. I have been discussing these ideas for a long time, but I never felt that Jenkins was the right tool for the job. When I approached Tekton with an open mind, I quickly realized it was the perfect solution.

Tekton integrates fully with the Kubernetes API and security model, and it encourages loose coupling and reuse. It is event-driven and follows the choreography model, making it ideal for controlling long-running test processes. Pipeline artifacts are just additional Kubernetes resources (pod definitions, service accounts, secrets, and so on) that fit easily into everything-as-code, in line with the rest of the k8s ecosystem.

Each application team can have its own pipeline code, which sits dormant when it is not running. This gives the benefits of distributed Jenkins without all the idle load. Apache Maven quickly became a success because it was revolutionary. It offered an organized way to lay out a Java project, letting developers easily understand the build configuration across projects. Tekton does the same for pipelines, making task reuse simple and obvious.

CruiseControl was the first CI tool, back in the early 2000s, and, speaking from experience, it was hard to use. At the time, Jenkins seemed to offer a radical improvement over what came before. In the OpenShift/k8s world, Tekton looks like the future of pipeline technology.
