This blog is based on a support case in which we observed a steady frame loss rate of 0.01% on Data Plane Development Kit (DPDK) interfaces on Red Hat OpenStack Platform 10.

Frame loss occurred at the DPDK NIC receive (RX) level. Traffic would run cleanly for a few seconds or minutes, then a burst of packets would be lost, and then traffic would be stable again until the next burst. From the following statistics, we can confirm that packets are being lost in the RX queue but not on transmit (TX).

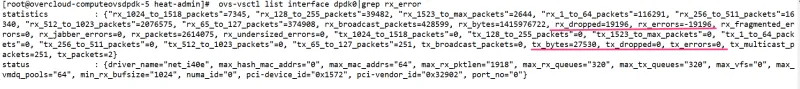

As the screenshot shows, there are no TX errors, but quite a few RX packets are dropped on ports 1 and 2.

Figure: NFV DPDK architecture (Data Plane Development Kit)

Figure 1 shows the high-level architecture of OVS-DPDK. Poll Mode Drivers (PMDs) enable direct transfer of packets between user space and the physical interface, bypassing the kernel network stack. DPDK traffic performance depends on the PMDs, core isolation, and tuning, so the OVS-DPDK troubleshooting methodology covers the following factors:

Open vSwitch

Figure: An example of OVS-DPDK configured for a dual NUMA system

PMD threads Affinity

If the PMD thread doesn't consume the traffic quickly enough, the hardware NIC queue overflows and packets are dropped in batches. The OS cannot do much about this, because the queue lives on the NIC itself. The pmd-cpu-mask is a core bitmask that sets which cores are used by OVS-DPDK for datapath packet processing.

After pmd-cpu-mask is set, OVS-DPDK will poll DPDK interfaces with a processor core that is on the same NUMA node as the interface. The pmd-cpu-mask is used directly in OVS-DPDK and it can be set at any time, even when traffic is running.
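Because the mask is a bitmask, bit N selects core N. As a minimal sketch, the mask for a list of core IDs can be derived with shell arithmetic. The helper name `cores_to_mask` is hypothetical and is not part of Open vSwitch.

```shell
# Build a hexadecimal core bitmask from a list of core IDs.
# Bit N of the mask selects core N. Only core IDs below 63 are handled,
# because the helper relies on the shell's signed 64-bit arithmetic.
cores_to_mask() {
    mask=0
    for core in "$@"; do
        mask=$(( mask | (1 << core) ))
    done
    printf '%x\n' "$mask"
}

# Cores 0 and 12 selected:
cores_to_mask 0 12    # prints 1001
```

The printed value can be passed as the pmd-cpu-mask value.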

Set pmd-cpu-mask to pin the PMD threads to the cores selected by the mask.

# ovs-vsctl set Open_vSwitch . \
    other_config:pmd-cpu-mask=<cpu core mask>

For example, in a 24-core system where cores 0-11 are located on NUMA node 0 and cores 12-23 are located on NUMA node 1, set the following mask to enable one core on each node.

# ovs-vsctl set Open_vSwitch . other_config:pmd-cpu-mask=1001

dpdk-socket-mem

The dpdk-socket-mem variable defines how hugepage memory is allocated across NUMA nodes. It is important to allocate hugepage memory to all NUMA nodes that will have DPDK interfaces associated with them. If no memory is allocated to a NUMA node that is associated with a physical NIC or VM, that NIC or VM cannot be used. The value in the following command is a comma-separated list of the memory, in MB, to pre-allocate from hugepages on each socket.

# ovs-vsctl set Open_vSwitch . \
    other_config:dpdk-socket-mem="1024,1024"

Multiple Poll Mode Driver Threads

With PMD multi-threading support, OVS creates one PMD thread for each NUMA node by default, if there is at least one DPDK interface from that NUMA node added to OVS. However, when there are multiple ports/RX queues producing traffic, performance can be improved by creating multiple PMD threads running on separate cores. These PMD threads can share the workload by each being responsible for different ports/rxq's. Assignment of ports/rxq's to PMD threads is done automatically.

Enable HyperThreading

With HyperThreading, also known as simultaneous multithreading (SMT), enabled, a physical core appears as two logical cores. SMT can be utilized to spawn worker threads on logical cores of the same physical core thereby saving additional cores.

With DPDK, when pinning PMD threads to logical cores, care must be taken to set the correct bits of the pmd-cpu-mask to ensure that the PMD threads are pinned to SMT siblings.
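The kernel reports the SMT siblings of a logical CPU as a CPU list such as `2,30` or `0-1`. The sketch below expands such a list and turns it into mask bits. The helper names `expand_cpu_list` and `list_to_mask` are hypothetical, and the sibling pair `2,30` is an assumed value that will differ between systems.

```shell
# Expand a kernel CPU list such as "2,30" or "0-3,8" into space-separated IDs.
expand_cpu_list() {
    printf '%s\n' "$1" | awk -F, '{
        out = ""
        for (i = 1; i <= NF; i++) {
            n = split($i, r, "-")
            lo = r[1]; hi = (n > 1) ? r[2] : r[1]
            for (c = lo + 0; c <= hi + 0; c++)
                out = out (out == "" ? "" : " ") c
        }
        print out
    }'
}

# Turn a CPU list into a hexadecimal mask (core IDs below 63 only).
list_to_mask() {
    mask=0
    for core in $(expand_cpu_list "$1"); do
        mask=$(( mask | (1 << core) ))
    done
    printf '%x\n' "$mask"
}

list_to_mask "2,30"    # prints 40000004
```

On a live host, the argument would be the contents of the `thread_siblings_list` file of the logical CPU in question.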

To find the siblings of a given logical CPU, run this command:

$ cat /sys/devices/system/cpu/cpuN/topology/thread_siblings_list

DPDK Physical Port RX Queues

The following command sets the number of RX queues for a DPDK physical interface. The RX queues are assigned to PMD threads on the same NUMA node in a round-robin fashion.

# ovs-vsctl set Interface <DPDK interface> options:n_rxq=<integer>

DPDK Physical Port Queue Sizes

If the VM isn't fast enough to consume the packets, the VirtIO ring buffer runs out of space and the vhost-user (DPDK) drops packets. We can try to increase the size of those rings.

Different n_rxq_desc and n_txq_desc configurations yield different benefits in terms of throughput and latency for different scenarios. Generally, smaller queue sizes can have a positive impact for latency at the expense of throughput. The opposite is often true for larger queue sizes.

# ovs-vsctl set Interface dpdk0 options:n_rxq_desc=<integer>

# ovs-vsctl set Interface dpdk0 options:n_txq_desc=<integer>

The above commands set the number of RX/TX descriptors that the NIC associated with dpdk0 will be initialized with.
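To get a feel for what a queue size buys, it can help to estimate how long a ring can absorb traffic if nothing drains it. The sketch below is a back-of-the-envelope calculation only. The helper name `ring_absorb_us` is hypothetical, and the packet rate of 14,880,000 packets per second (roughly 10GbE line rate with 64-byte frames) is an assumption, not a figure from the support case.

```shell
# Microseconds a ring of <descriptors> entries can absorb <pps> packets
# per second before it overflows, assuming nothing drains it meanwhile.
ring_absorb_us() {
    LC_ALL=C awk -v d="$1" -v pps="$2" \
        'BEGIN { printf "%.1f\n", d / pps * 1000000 }'
}

ring_absorb_us 2048 14880000    # prints 137.6
ring_absorb_us 4096 14880000    # prints 275.3
```

Under these assumptions, doubling the ring doubles the stall a PMD thread can survive without loss, at the cost of packets potentially waiting longer in the queue.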

Linux Tuning

Isolated/tuned cores for PMD/VM

If the VM isn't fast enough to consume the packets, the virtio ring runs out of buffers and the vhost-user port drops packets. Isolated cores can be dedicated to running the VMs and the PMD threads. CPU pinning is the ability to run a PMD thread or a VM on a specific physical CPU on a given host. vCPU pinning provides advantages similar to task pinning on bare-metal systems. Since virtual machines run as user space tasks on the host operating system, pinning increases cache efficiency.

Configure hugepage for OVS/VM

Choosing a page size is a trade-off between providing faster access times by using larger pages and ensuring maximum memory utilization by using smaller pages. Note that using hugepages to back the memory of your guest will also inhibit the overcommit savings from KSM (Kernel Same-page Merging). Learn more about KSM in our Product Documentation.

Understand DPDK Port statistics

Let's start with how to get port statistics. We're going to use the ovs-vsctl utility, which is part of Open vSwitch.
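`ovs-vsctl get Interface <name> statistics` prints the counters of a port as a map of key=value pairs. As a minimal sketch, a single counter can be pulled out of that map with standard text tools. The helper name `stat_value` is hypothetical, and the sample map is illustrative only; its numbers are not from the support case.

```shell
# Print the value of one counter from an OVS statistics map read on stdin.
# The input looks like: {rx_bytes=100, rx_dropped=5, ...}
stat_value() {
    tr -d '{} ' | tr ',' '\n' | awk -F= -v key="$1" '$1 == key { print $2 }'
}

# Illustrative input only.
sample='{rx_bytes=1200, rx_dropped=7, rx_errors=0, tx_errors=0}'
printf '%s\n' "$sample" | stat_value rx_dropped    # prints 7
```

On a live system the input would come from a pipeline such as `ovs-vsctl get Interface dpdk0 statistics | stat_value rx_dropped`.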

From the OSP 10 source code, the statistics are computed as follows:

- rx_errors: "Total number of erroneous received packets" minus "Total of RX packets dropped by the HW"

- tx_errors: "Total number of failed transmitted packets"

- rx_dropped: "Total number of RX mbuf allocation failures" plus "Total of RX packets dropped by the HW"
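The arithmetic behind these definitions can be sketched as follows. The argument names `ierrors`, `imissed`, and `rx_nombuf` are the fields of DPDK's `rte_eth_stats` whose descriptions match the quoted text; that mapping is an assumption on our part, the helper name `derive_rx_counters` is hypothetical, and the input values are made up.

```shell
# Derive the OVS counters from the underlying DPDK counters.
# Arguments: ierrors imissed rx_nombuf
derive_rx_counters() {
    echo "rx_errors=$(( $1 - $2 )) rx_dropped=$(( $3 + $2 ))"
}

# No erroneous packets, 1500 packets dropped by the hardware,
# no mbuf allocation failures (made-up values):
derive_rx_counters 0 1500 0    # prints rx_errors=-1500 rx_dropped=1500
```

With this formula, rx_errors goes negative whenever the hardware drops more packets than it reports as erroneous.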

The following is an example of how to troubleshoot a DPDK packet drop issue. First, we dump the statistics of the DPDK port using the ovs-vsctl utility.

The statistics show packet drops and errors on the RX side. Packet drops can be caused by performance problems or by hardware. Many things affect DPDK performance, such as too few forwarding cores, not using multiqueue, slow VM response, MTU settings, or a bad queue-to-core assignment. Here are some steps to help in troubleshooting.

Check Open vSwitch Configuration

# ovs-vsctl get open_vswitch . other_config

{dpdk-extra="-n 4", dpdk-init="true", dpdk-lcore-mask="f000000f",

dpdk-socket-mem="4096,4096", pmd-cpu-mask="f000000f0"}

The customer uses 4 cores for PMD threads, and socket memory is 4096 MB on each NUMA node. There should be enough cores for the PMD threads and the DPDK lcore threads to sustain DPDK performance. We can add more cores for the PMD threads:

# ovs-vsctl --no-wait set Open_vSwitch . \
    other_config:pmd-cpu-mask="fc00000fc"

# ovs-vsctl --no-wait set Open_vSwitch . \
    other_config:dpdk-lcore-mask="30000003"
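Hexadecimal masks are hard to read at a glance, so it can be worth decoding them into core IDs and checking that the PMD mask and the lcore mask do not overlap. A minimal sketch, with a hypothetical helper named `mask_to_cores`:

```shell
# List the core IDs selected by a hexadecimal mask (with or without 0x).
# Handles masks of up to 63 bits, the limit of shell arithmetic.
mask_to_cores() {
    mask=$(( 0x${1#0x} ))
    core=0
    cores=""
    while [ "$mask" -ne 0 ]; do
        if [ $(( mask & 1 )) -eq 1 ]; then
            cores="$cores${cores:+ }$core"
        fi
        mask=$(( mask >> 1 ))
        core=$(( core + 1 ))
    done
    echo "$cores"
}

mask_to_cores fc00000fc    # prints 2 3 4 5 6 7 30 31 32 33 34 35
mask_to_cores 30000003     # prints 0 1 28 29
```

For the two masks set above, the decoded core lists are disjoint.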

Check PMD Threads Polling Cycles

# ovs-appctl dpif-netdev/pmd-rxq-show

pmd thread numa_id 0 core_id 2:

isolated : true

port: dpdk0 queue-id: 0

pmd thread numa_id 0 core_id 30:

isolated : true

port: dpdk0 queue-id: 1

pmd thread numa_id 0 core_id 34:

isolated : false

port: vhu4d717b90-39 queue-id: 0

port: vhu066565d2-14 queue-id: 0

pmd thread numa_id 0 core_id 6:

isolated : false

port: vhu9ba1d7e7-2b queue-id: 0

pmd thread numa_id 0 core_id 4:

isolated : true

port: dpdk1 queue-id: 0

pmd thread numa_id 0 core_id 32:

isolated : true

port: dpdk1 queue-id: 1

# ovs-appctl dpif-netdev/pmd-stats-show|grep -A9 -E "core_id (2|4|30|32):"

pmd thread numa_id 0 core_id 2:

emc hits:6971154401

megaflow hits:18787

avg. subtable lookups per hit:1.58

miss:375

lost:0

polling cycles:2726409361980 (25.74%)

processing cycles:7865260979784 (74.26%)

avg cycles per packet: 1519.35 (10591670341764/6971203155)

avg processing cycles per packet: 1128.25 (7865260979784/6971203155)

--

pmd thread numa_id 0 core_id 30:

emc hits:6971365714

megaflow hits:10

avg. subtable lookups per hit:1.00

miss:404

lost:0

polling cycles:2785958014653 (26.32%)

processing cycles:7799614633899 (73.68%)

avg cycles per packet: 1518.44 (10585572648552/6971366138)

avg processing cycles per packet: 1118.81 (7799614633899/6971366138)

--

pmd thread numa_id 0 core_id 4:

emc hits:52213

megaflow hits:18823

avg. subtable lookups per hit:1.00

miss:650

lost:0

polling cycles:7167842875131 (99.98%)

processing cycles:1346919081 (0.02%)

avg cycles per packet: 79208814.43 (7169189794212/90510)

avg processing cycles per packet: 14881.44 (1346919081/90510)

--

pmd thread numa_id 0 core_id 32:

emc hits:29149

megaflow hits:3

avg. subtable lookups per hit:1.00

miss:238

lost:0

polling cycles:7157381605371 (99.98%)

processing cycles:1257975648 (0.02%)

avg cycles per packet: 243549130.10 (7158639581019/29393)

avg processing cycles per packet: 42798.48 (1257975648/29393)
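In the output above, the threads on cores 2 and 30 spend about 74% of their cycles processing packets, while the threads on cores 4 and 32 are almost idle. When there are many PMD threads, that comparison is easier to make from a condensed view. The sketch below reduces the output to one line per core; the helper name `pmd_busy` is hypothetical, and it assumes the line format shown above.

```shell
# Print "<core_id> <processing %>" for each PMD thread found in the output
# of `ovs-appctl dpif-netdev/pmd-stats-show` supplied on stdin.
pmd_busy() {
    awk '
        /^main thread/       { core = "main" }
        /^pmd thread/        { core = $NF; sub(/:$/, "", core) }
        /processing cycles:/ { pct = $NF; gsub(/[()%]/, "", pct); print core, pct }
    '
}

# Lines taken from the output above:
pmd_busy <<'EOF'
pmd thread numa_id 0 core_id 2:
polling cycles:2726409361980 (25.74%)
processing cycles:7865260979784 (74.26%)
pmd thread numa_id 0 core_id 4:
polling cycles:7167842875131 (99.98%)
processing cycles:1346919081 (0.02%)
EOF
# prints:
# 2 74.26
# 4 0.02
```

On a live system the input would come from `ovs-appctl dpif-netdev/pmd-stats-show | pmd_busy`.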

As the pmd-rxq-show output shows, dpdk1 is polled by one physical core running two PMD threads (logical cores 4 and 32), so one CPU core (two PMD threads) is isolated for each physical NIC. Each physical NIC has two queues, even though only one queue shows the packet drop issue. We tried assigning more cores to the RX queues to confirm that the packet drops are not caused by insufficient forwarding cores.

# ovs-vsctl set Interface dpdk1 options:n_rxq=4

The above command sets the number of RX queues on the DPDK physical port to 4, so the port is polled by 2 cores (4 PMD threads). However, packet loss did not improve.

Tuning compute node

The compute nodes are tuned with Tuned using the cpu-partitioning profile. List all of the cores you want to isolate in /etc/tuned/cpu-partitioning-variables.conf. The remaining CPU cores are dedicated to guest VMs. Additionally, isolcpus and hugepages should be configured on the kernel boot command line. Refer to our solution on access.redhat.com.

Confirm that the cores for the VMs and the PMD threads are isolated from the host.

Check VM CPU affinity

The PMD cores should not be included in the VM's CPU affinity, and the VM's CPUs should be isolated from the host.

[root@overcloud-computeovsdpdk-6 ~]# virsh emulatorpin 24

emulator: CPU Affinity

----------------------------------

*: 9,15,17,19,21,25,29,35,45,51,53,55,57,61,65,71
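Comparing two long CPU lists by eye is error-prone, so a small helper can report any CPU that appears in both the VM affinity list and the PMD core list. This is a minimal sketch: the helper name `shared_cpus` is hypothetical, and the lists in the example are made up for illustration.

```shell
# Print every CPU ID that appears in both comma-separated lists.
# Ranges such as "2-7" are not expanded; pass explicit IDs.
shared_cpus() {
    for a in $(printf '%s' "$1" | tr ',' ' '); do
        for b in $(printf '%s' "$2" | tr ',' ' '); do
            [ "$a" = "$b" ] && echo "$a"
        done
    done
    return 0
}

# Made-up lists for illustration:
shared_cpus "9,15,17,19" "2,4,6,30"    # prints nothing: the lists are disjoint
shared_cpus "9,15,17,19" "4,6,15"      # prints 15
```

Any output means a CPU is shared between the VM and a PMD thread and the pinning should be reviewed.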

Even with all of the tuning above, frames were still being dropped. We then observed that rx_errors was negative. From the OSP 10 source code we see this:

rx_errors: "Total number of erroneous received packets" minus "Total of RX packets dropped by the HW"

So the packet drops come from "Total of RX packets dropped by the HW". We can now confirm that a lack of resources is not the root cause; rather, it is a hardware issue.

To sum up, here are a number of things to review when troubleshooting:

- Check the DPDK Port statistics to confirm what happened.

- Check the configuration and tuning.

On your Linux systems:

- Isolate and tune the cores for the PMD threads

- Bind the vfio-pci driver to the DPDK NIC

- Configure hugepages for OVS and the VMs

With Open vSwitch:

- Configure the cores for the PMD threads

- Configure the number of memory channels

Additional information is available in the Red Hat Customer Portal for Configuration, Tuning and Troubleshooting of NFV technologies in Red Hat OpenStack Platform. Please note that this content requires a subscription to access. You may also wish to reference Kevin Traynor's post OVS-DPDK Parameters: Dealing with multi-NUMA
