
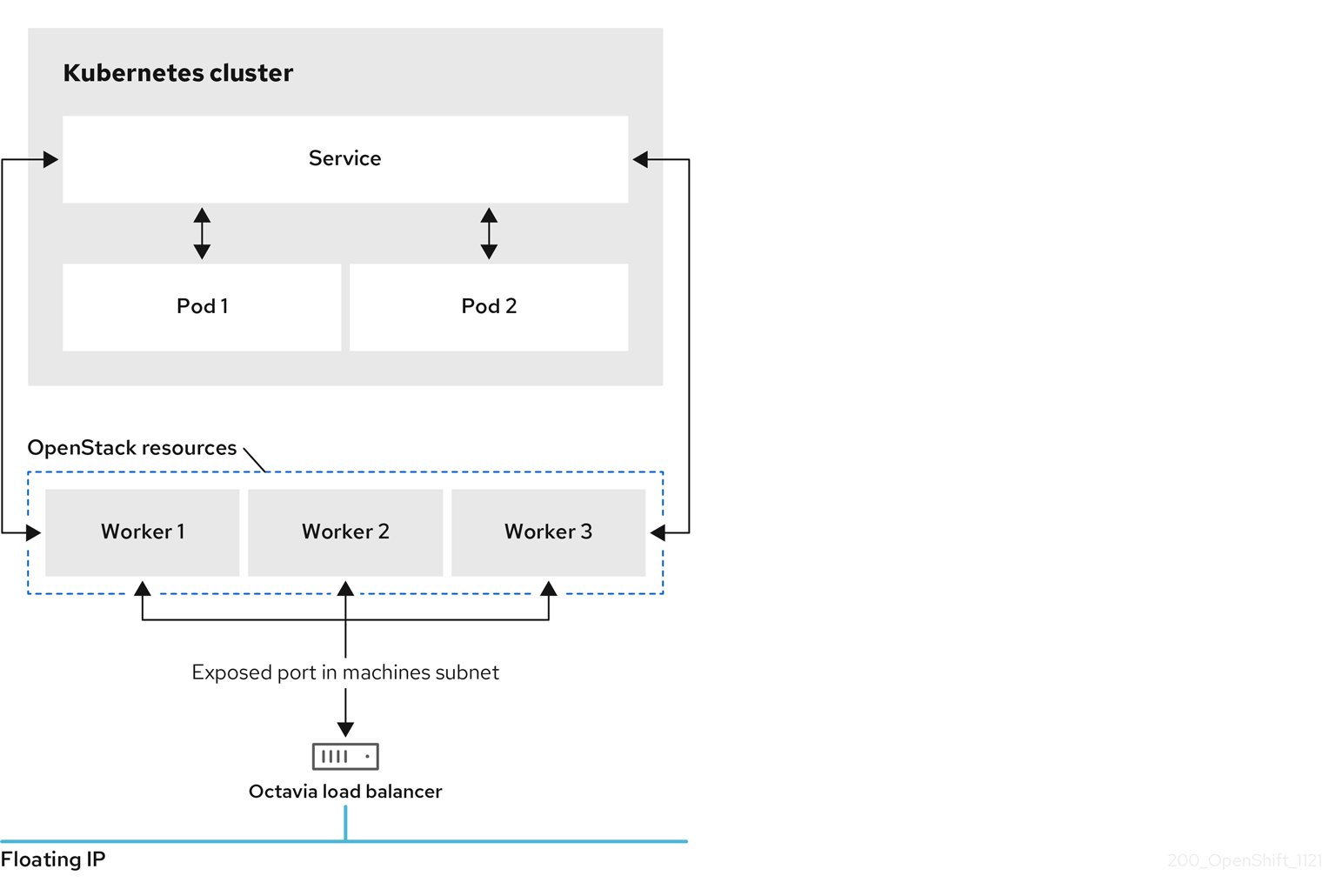

One of the most common ways to expose OpenShift applications to traffic from outside the cluster is to use a load balancer service. In Red Hat OpenStack Platform 16, Amphora is both the reference and the default provider driver for Octavia, the OpenStack load balancing service.

In this post, we introduce you to the OVN Octavia driver, the alternative driver that is offered in Red Hat OpenStack Platform 16. It is lightweight, fast to provision, and less resource-heavy than Amphora.

What is a load balancer service?

Before load balancer services, users relied on node ports to expose their applications to external traffic. However, node ports are limited and require the user to take manual steps to serve their application to external users.

When you use node ports to expose an application, the ports that your application's entry points listen on are exposed on the cluster network that your compute nodes are attached to in OpenStack.

In the OpenShift installer, the cluster network and subnet are commonly referred to as the machines network and subnet, so we use that terminology here as well. In most deployments, the machines network is not external, which means that it is up to the user to figure out how best to expose these ports to external traffic.

Load balancer services go a step further: they create a load balancer in your cloud platform’s Load Balancing-as-a-Service (LBaaS) that is reachable by external traffic and forwards that traffic to the node ports of your application. They give users a fully automated, highly available (HA) way to expose their applications to external traffic without having to manage the HA infrastructure themselves.
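To make this concrete, a load balancer service is a regular Kubernetes Service whose type is LoadBalancer. The minimal sketch below builds such a manifest as a Python dictionary and prints it as JSON, a format that oc apply -f also accepts. The service name, pod label, and port numbers are hypothetical placeholders. Kubernetes still allocates a node port for a Service of this type, and the cloud provider points the load balancer at it.

```python
import json


def load_balancer_service(name: str, app_label: str, port: int, target_port: int) -> dict:
    """Build a minimal Kubernetes Service of type LoadBalancer.

    All names, labels, and port numbers are hypothetical placeholders.
    """
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            # Ask the cloud provider (Octavia on OpenStack) for an
            # externally reachable load balancer.
            "type": "LoadBalancer",
            # Traffic is sent to pods that carry this label.
            "selector": {"app": app_label},
            "ports": [
                {
                    "protocol": "TCP",
                    "port": port,  # port exposed on the load balancer
                    "targetPort": target_port,  # port the pods listen on
                }
            ],
        },
    }


# Kubernetes also accepts JSON manifests, for example with `oc apply -f service.json`.
print(json.dumps(load_balancer_service("my-app", "my-app", 80, 8080), indent=2))
```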

Choosing an Octavia driver

If you're running OpenShift on Red Hat OpenStack Platform 16, the LBaaS is called Octavia. Its default provider is Amphora, which implements each load balancer as an amphora: in most cases, a virtual machine (VM) that runs HAProxy.

This means that each load balancer created with the Amphora driver requires a compute instance, and can require additional instances when it runs in active/passive high availability failover mode. As a consequence, these load balancers can take a long time to spin up and can be expensive at scale.
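A back-of-the-envelope calculation shows how quickly this adds up. The sketch assumes one amphora per load balancer in Octavia's SINGLE topology and two (one active, one standby) in the ACTIVE_STANDBY topology. The function name and the 200-service workload are hypothetical.

```python
def amphora_instances(load_balancers: int, active_standby: bool = False) -> int:
    """Back-of-the-envelope count of amphora VMs.

    Assumes one amphora per load balancer in Octavia's SINGLE topology and
    two (one active, one standby) in the ACTIVE_STANDBY topology.
    """
    per_load_balancer = 2 if active_standby else 1
    return load_balancers * per_load_balancer


# Hypothetical workload with 200 LoadBalancer services.
print(amphora_instances(200))                       # 200
print(amphora_instances(200, active_standby=True))  # 400
```

With the OVN driver, the same workload needs no dedicated load balancer instances, because the load balancers run in OVN on the existing nodes.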

The OVN Octavia driver—the alternative driver offered in Red Hat OpenStack Platform 16—leverages OVN, which runs on every node in OpenStack, to run the load balancers instead of spinning up a separate VM for them. It is also distributed and highly available by default, and cheaper to scale.

All this makes OVN a perfect choice for an OpenShift environment that is expected to create a high number of Octavia load balancers, for example when your workload creates many LoadBalancer services or when you use Kuryr-Kubernetes as your OpenShift CNI plug-in. With Kuryr, even internal services get a load balancer in Octavia, so scalability is crucial.

That being said, there are a few drawbacks:

- To use the OVN load balancer driver, Neutron must also be configured to use OVN.
- It does not support health monitors yet, but they are planned for a future release of OpenStack.
- It supports only the TCP and UDP protocols; SCTP support is planned for future OSP releases.
- It does not fully support IPv6 use cases yet.

The current limitations are documented in the provider driver documentation. Despite its limitations, we recommend OVN over Amphora if it suits your use case.
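One way to decide whether OVN suits your use case is to walk through the drawbacks listed above as a checklist. The following sketch encodes them in a hypothetical helper function; it does not query OpenStack and reflects only the limitations described in this post, so check the provider driver documentation for your release.

```python
from typing import Iterable, List


def ovn_driver_blockers(
    protocols: Iterable[str],
    needs_health_monitors: bool = False,
    needs_ipv6: bool = False,
    neutron_uses_ovn: bool = True,
) -> List[str]:
    """Return the reasons, if any, why the OVN driver may not fit a workload.

    Hypothetical checklist that mirrors the drawbacks listed in this post;
    it does not query OpenStack.
    """
    blockers = []
    if not neutron_uses_ovn:
        blockers.append("Neutron is not configured to use OVN")
    # Only TCP and UDP are supported by the OVN provider driver.
    unsupported = sorted({p.upper() for p in protocols} - {"TCP", "UDP"})
    if unsupported:
        blockers.append("unsupported protocols: " + ", ".join(unsupported))
    if needs_health_monitors:
        blockers.append("health monitors are not supported yet")
    if needs_ipv6:
        blockers.append("IPv6 is not fully supported yet")
    return blockers


print(ovn_driver_blockers(["TCP", "UDP"]))   # []
print(ovn_driver_blockers(["TCP", "SCTP"]))  # ['unsupported protocols: SCTP']
```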

Enabling Octavia support in OpenShift

Kuryr has excellent documentation on how to enable Octavia or verify that your OpenStack cluster supports it, and we recommend that you follow it before installing OpenShift.

Once your OpenStack cluster has Octavia, you can configure the OpenShift cloud provider to use the load balancer integration either before installation or as a Day 2 operation.
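As a rough illustration, the load balancer integration is controlled by the [LoadBalancer] section of the cloud provider config. The sketch below renders that section with Python's configparser. It assumes the legacy in-tree provider's INI format and its use-octavia, lb-provider, and lb-method options. Because the OVN provider implements only the SOURCE_IP_PORT balancing algorithm, the sketch sets lb-method accordingly. In OpenShift, this config is typically kept in the cloud-provider-config ConfigMap in the openshift-config namespace; verify the option names against the documentation for your OpenShift version before applying them.

```python
import configparser
import io


def load_balancer_section(provider: str = "ovn") -> str:
    """Render a [LoadBalancer] section for the in-tree OpenStack provider.

    Assumption: option names follow the legacy in-tree cloud.conf format.
    """
    config = configparser.ConfigParser()
    # Use Octavia (rather than the old Neutron LBaaS) for LoadBalancer services.
    config["LoadBalancer"] = {"use-octavia": "True"}
    if provider == "ovn":
        section = config["LoadBalancer"]
        # Ask Octavia for the OVN provider driver instead of Amphora.
        section["lb-provider"] = "ovn"
        # The OVN provider only implements the SOURCE_IP_PORT algorithm.
        section["lb-method"] = "SOURCE_IP_PORT"
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()


print(load_balancer_section("ovn"))
```

Leaving lb-provider unset keeps Octavia's default provider, Amphora.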

Supported behavior

Red Hat OpenShift 4.6 through 4.9 still use the legacy in-tree openstack-cloud-provider. This means that there are several limitations:

- Only TCP traffic is supported right now.
- The loadBalancerSourceRanges field is not supported when you create a load balancer service in Kubernetes.
- Setting manage-security-groups in your cloud provider config does not work for clusters that run with non-admin OpenStack credentials.
- The floating IPs attached to your Octavia load balancers are not deleted when you delete your cluster (this is fixed in 4.9).
- The loadBalancerIP property for load balancer services is not supported.

These limitations are also discussed in the feature documentation. Because the in-tree cloud provider no longer accepts code changes, these known issues are unlikely to be fixed in older releases.
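Before creating a load balancer service on OpenShift 4.6 through 4.9, you could check its manifest against the limitations listed above. The following is a hypothetical pre-flight check, not an OpenShift admission control or an official tool. It flags non-TCP ports and the unsupported loadBalancerSourceRanges and loadBalancerIP fields. The example DNS service and its address (from the 192.0.2.0/24 documentation range) are made up.

```python
from typing import Any, Dict, List


def in_tree_lb_issues(service: Dict[str, Any]) -> List[str]:
    """List Service settings that the in-tree provider (OpenShift 4.6-4.9)
    does not support, based only on the limitations listed in this post."""
    spec = service.get("spec", {})
    if spec.get("type") != "LoadBalancer":
        return []  # the limitations only concern LoadBalancer services
    issues = []
    for port in spec.get("ports", []):
        protocol = port.get("protocol", "TCP")  # Kubernetes defaults to TCP
        if protocol != "TCP":
            issues.append(f"port {port.get('port')}: only TCP is supported, got {protocol}")
    if spec.get("loadBalancerSourceRanges"):
        issues.append("loadBalancerSourceRanges is not supported")
    if spec.get("loadBalancerIP"):
        issues.append("loadBalancerIP is not supported")
    return issues


# Hypothetical UDP DNS service that requests a fixed IP address.
dns_service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "dns"},
    "spec": {
        "type": "LoadBalancer",
        "loadBalancerIP": "192.0.2.10",
        "ports": [{"protocol": "UDP", "port": 53}],
    },
}
for issue in in_tree_lb_issues(dns_service):
    print(issue)
```

The manage-security-groups and floating IP limitations depend on cluster credentials and lifecycle rather than on the Service manifest, so a manifest check like this one cannot catch them.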

These issues are expected to be solved in the external cloud provider, which is a Technology Preview in Red Hat OpenShift 4.10 and is expected to reach general availability (GA) in 4.11.

Note that if your OpenShift cluster runs with the Kuryr CNI plug-in, you should keep the load balancer integration disabled, because Kuryr manages Services of type LoadBalancer on its own.

Roadmap

We have a number of bug fixes, features, and integration improvements planned in upcoming releases of Red Hat OpenShift. In Red Hat OpenShift 4.9, we automated the configuration of your cloud provider config at install time and updated the security group settings so you don't have to.

This means that, unless you intend to switch your provider driver to OVN, you do not need any Day 2 operations to start using load balancer services backed by Octavia in this release. We also made sure that floating IP addresses don’t get orphaned during cluster deletion.

Once we switch to the out-of-tree cloud provider in future OpenShift releases, expect more features and quality of life fixes to come. Our wish list includes support for the UDP, SCTP, GTP, QUIC, and HTTP over UDP with TLS protocols.

We encourage you to test the feature in the OpenShift version of your choice! If you want to learn more, check out the OpenShift on OpenStack documentation.

Thanks to Emilio Garcia for contributing.

About the author

Michal Dulko is a Senior Software Engineer at Red Hat.
