In part 2 of the vDPA kernel framework series, we discussed the design and implementation of the vhost-vDPA bus driver and the virtio-vDPA bus driver. Both drivers are based on the vDPA bus, which is explained in part 1 of the series. In this post we will cover the use cases for those two bus drivers and how they can be put to use for bare metal, containers and VMs.

This post is intended for developers and architects who want to understand how vDPA is integrated with the existing software stacks such as QEMU, traditional kernel subsystems (networking and block), DPDK applications etc.

The post is composed of two sections: the first part focuses on the typical use cases for the vhost-vDPA bus driver. The second part focuses on several typical use cases for the virtio-vDPA bus driver.

It should be noted that the examples provided in this post are for vDPA devices using a platform IOMMU. Those examples can be easily extended to vDPA devices that use on-chip IOMMU since the difference is only how to set up the DMA mapping on the host platform.

Use Case for vhost-vDPA

Using vhost-vDPA as a network backend for containers and bare metal

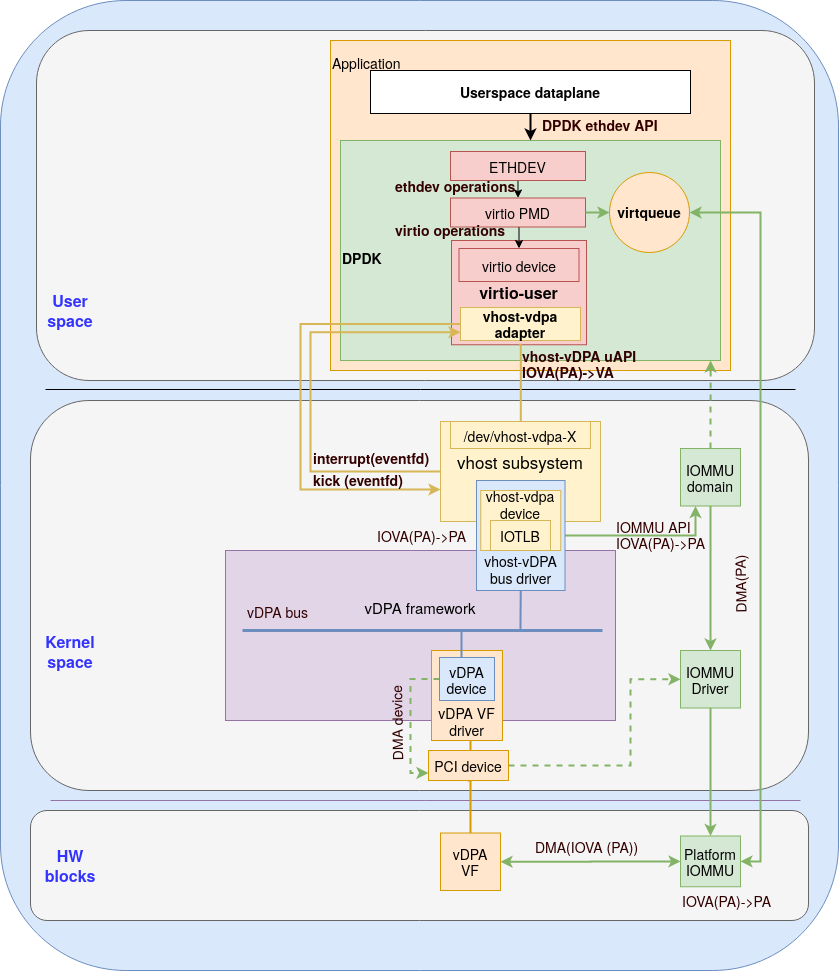

The vhost-vDPA bus driver could be used as a high performance userspace I/O path similar to what VFIO (Virtual Function I/O) can provide. High speed userspace dataplane can use the vhost-vDPA bus driver to set up a direct I/O path to userspace. For example, DPDK virtio-user supports the vhost-net datapath. This datapath can be extended to support the vhost-vDPA device as illustrated in the following figure:

Figure 1: Direct I/O to userspace via vhost-vDPA and DPDK virtio-user (containers and bare metal)

The “application” box in the diagram can represent either a container or an application running on bare metal.

A new adapter using the vhost-vDPA device will be introduced for virtio-user (see the “vhost-vdpa adapter” yellow box inside the “virtio-user” pink box). The userspace dataplane will see the ethernet device (ETHDEV) and use the DPDK ethernet API to send and receive packets (marked as “DPDK ethdev API” in the diagram). DPDK will convert the ethernet API to internal ethdev operations implemented by the virtio PMD (poll mode driver), which will populate the virtqueues directly.

In order to set up the vDPA device, the virtio PMD needs to talk with the virtio device virtualized by virtio-user. virtio-user will implement the virtio device operations through the vhost-vDPA uAPI.

To ease the IOVA allocation (the differences among addresses were explained in vDPA kernel framework part 2: vDPA bus drivers for kernel subsystem), DPDK uses the physical address (PA) of the hugepages as the IOVA, in an attempt to make sure the IOVA can be accessed through the IOMMU. Since some IOMMUs limit the valid IOVA address range, this doesn’t always succeed (in which case you can’t DMA to that part of the memory).

The mappings passed from DPDK to the vhost-vDPA device are actually IOVA(PA)->VA mappings (in IOVA(PA) the PA is used as the IO virtual address). The VA will be converted to PA and stored in the IOTLB of the vhost-vDPA device. The vhost-vDPA bus driver builds the IOVA(PA)->PA mapping in the platform IOMMU through IOMMU APIs. Then the vDPA device can use IOVA(PA) to perform direct I/O to userspace. The vhost-vDPA device isolates the DMA by dedicating the IOMMU domain to the userspace memory managed by DPDK.
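The address handling described above can be modeled in a few lines. The following is a simplified sketch, not the kernel implementation: `ToyVhostVdpa`, `page_table` and `iommu` are hypothetical names, the addresses are made up, and plain dictionaries stand in for both the process page table and the platform IOMMU.

```python
PAGE_SIZE = 4096


class ToyVhostVdpa:
    """Toy model of the mapping path; all names here are hypothetical."""

    def __init__(self, page_table):
        # page_table: userspace VA page -> PA page (stands in for page pinning)
        self.page_table = page_table
        # iommu: IOVA page -> PA page (stands in for the platform IOMMU domain)
        self.iommu = {}

    def map(self, iova, va, size):
        """Accept an IOVA->VA mapping from userspace and install IOVA->PA."""
        for off in range(0, size, PAGE_SIZE):
            pa = self.page_table[va + off]  # VA -> PA conversion
            self.iommu[iova + off] = pa     # IOVA -> PA entry in the IOMMU

    def dma_translate(self, iova):
        """What the IOMMU does when the device issues DMA to `iova`."""
        page = iova & ~(PAGE_SIZE - 1)
        if page not in self.iommu:
            raise PermissionError(f"DMA to unmapped IOVA {iova:#x} blocked")
        return self.iommu[page] + (iova - page)


# DPDK uses the hugepage PA as the IOVA, so IOVA == PA for mapped memory.
page_table = {0x7F0000000000: 0x100000, 0x7F0000001000: 0x101000}
dev = ToyVhostVdpa(page_table)
dev.map(iova=0x100000, va=0x7F0000000000, size=2 * PAGE_SIZE)
```

In this model, DMA to any IOVA that userspace did not map raises an error, which mirrors the isolation provided by the dedicated IOMMU domain.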

Interrupts and virtqueue kicks are relayed via eventfd (two yellow arrows going to and from the “vhost subsystem” box). The vhost-vDPA adapter sets up eventfds via VHOST_SET_VRING_KICK (for virtqueue kick) and VHOST_SET_VRING_CALL (for virtqueue interrupt). When vhost-vDPA adapter needs to kick a specific virtqueue, it will write to that eventfd. The vhost-vDPA device will be notified and forward the kick request to the vDPA VF driver (which is one example of a vDPA device driver) via vDPA bus operations. When an interrupt is raised by a specific virtqueue, the vhost-vDPA device writes to the eventfd and wakes up the vhost-vDPA adapter in the userspace.

The userspace application can benefit from the hardware native performance by setting up direct userspace I/O provided by the vhost-vDPA bus driver through a DPDK library without any changes in the userspace dataplane implementation.

Using vhost-vDPA as a network backend for VM with guest kernel virtio-net driver

The vhost-vDPA device can be used as a network backend for VMs with the help of QEMU.

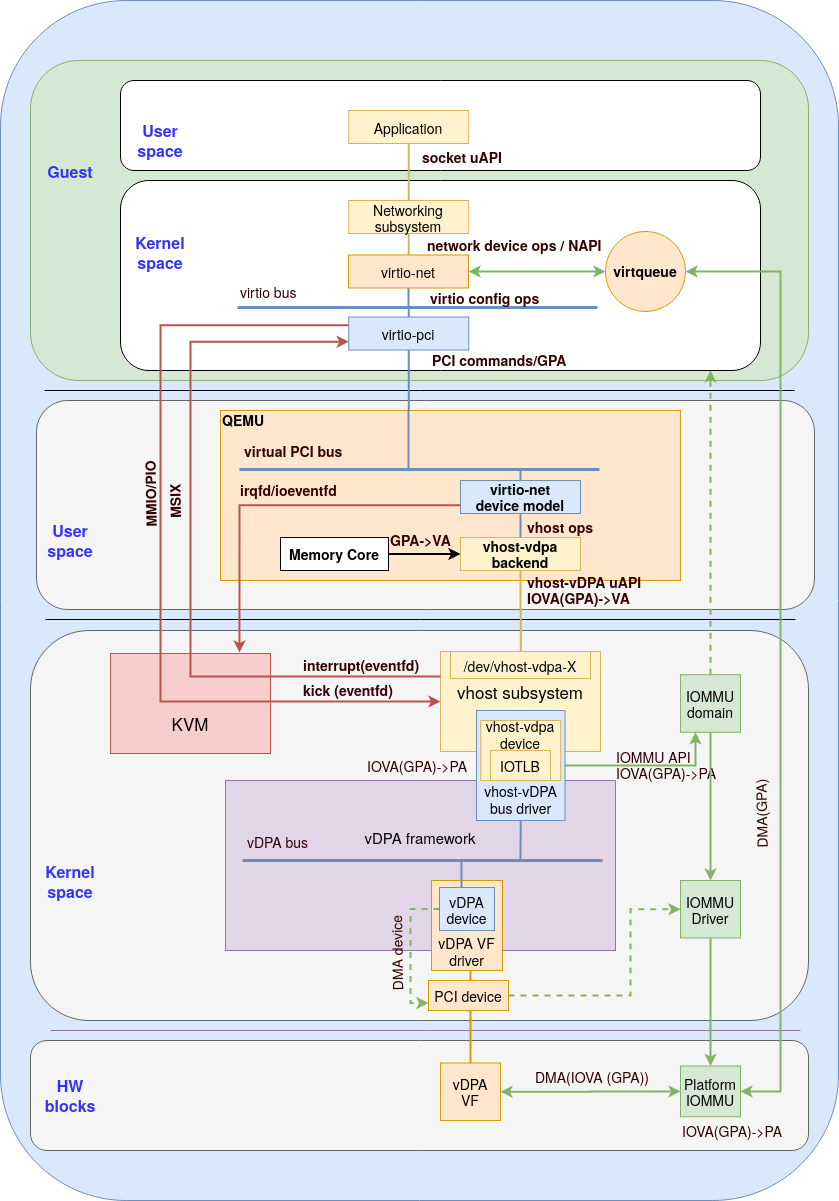

In the first use case we show how to connect the host to the guest kernel driver as illustrated in figure 2:

Figure 2: Using the vhost-vDPA device as a backend for the guest kernel

The above figure describes how the vDPA device is used by the guest kernel virtio-net driver. This is done by using QEMU to present a virtio device to the guest by mediating between the emulated virtio device and the vhost-vDPA device:

Modeling virtio-net device (“virtio-net device model” blue box) - Deciding how to provide a virtio emulated device. We can split this task into two major parts:

Bus specific operations - virtio devices are commonly implemented as PCI devices. QEMU traps all PCI commands on the emulated PCI bus. The PCI bus emulation module will then process the generic PCI emulation (a command emulation box not drawn in the diagram) or forward the virtio general operations to the virtio-net device model.

Virtio general operations - the operations defined by the virtio specification to configure virtio devices will be processed by the virtio-net device model.

Converting virtio commands to vhost-vDPA commands - A new vhost-vDPA networking backend is introduced (yellow box inside QEMU). This vhost-vDPA backend accepts requests translated from virtio general operations by the virtio-net device model through vhost ops (which is the abstraction of the common vhost operations). The vhost-vDPA backend converts those requests to vhost-vDPA uAPIs.

Setting up DMA mapping - when vIOMMU is not enabled in the VM, GPA (Guest Physical Address) is used by the guest kernel drivers. The vhost-vDPA backend will listen to the mappings of GPA->VA maintained by QEMU memory core, and update them to the vhost-vDPA device. The vhost-vDPA device (yellow box inside the “vhost-vDPA bus driver” blue box) isolates the DMA by dedicating the IOMMU domain to the Guest (GPA->VA mapping). Platform IOMMU will be requested for setting up the mapping. The vDPA device (vDPA VF) uses GPA as IOVA, and the platform IOMMU will validate and translate GPA->PA for the DMA to the buffer pointed in the virtqueue.

Setting up KVM ioeventfd and irqfd (inside the QEMU block) - Two eventfds are set up per virtqueue for relaying the virtqueue kick and the interrupt:

Ioeventfd - which is the eventfd that is used for relaying the doorbell (virtqueue notification). For each virtqueue, QEMU creates an ioeventfd and maps it to the virtqueue’s doorbell MMIO/PIO area via a KVM ioctl. The vhost-vDPA device will poll the fd and trigger the virtqueue kick through a vDPA bus operation.

Irqfd - which is the eventfd that is used for relaying interrupt from the vhost-vDPA device to the guest. For each virtqueue, QEMU will create an eventfd and map it to a specific MSIX vector via KVM ioctl. This eventfd will also be passed to the vhost-vDPA device so vhost-vDPA can trigger the guest interrupt by simply writing to that eventfd.

In the guest, the virtqueue belongs to the kernel so the population is done by the virtio-net driver. The device configuration however is done by a specific bus implementation (PCI in the above figure) and not by the virtio-net driver since multiple busses are supported. The virtio-pci module (blue box inside the guest kernel space) implements virtio commands via the emulated PCI bus. The virtio-net driver will register itself as a networking device in order to be used by a kernel networking subsystem in the guest. A set of netdevice operations are implemented for the kernel networking subsystem to send and receive packets. The userspace application is connected with the socket uAPI.

The guest application uses the sendmsg() syscall for sending a packet. Then the kernel networking subsystem will copy the userspace buffer into the kernel buffer and ask the virtio-net driver to send the packet. The virtio-net driver will add the buffer to the virtqueue and kick the virtqueue via virtio-pci through an MMIO write.

This MMIO write will be forwarded to the vhost-vDPA driver by KVM via ioeventfd. The vhost-vDPA driver will use a dedicated vDPA bus operation to touch the doorbell of the real hardware through the vDPA VF driver. The vDPA device (vDPA VF in the above figure) will start the datapath and send the packet to the wire through DMA from the buffer pointed to in the virtqueue.

When the vDPA device receives a packet, it needs to DMA the packet to the buffer specified in the virtqueue. When the DMA finishes, it may raise an interrupt and the interrupt will be handled by the vDPA VF driver. The vDPA VF driver will trigger the virtqueue interrupt callback set by the vhost-vDPA bus driver.

In the callback, the vhost-vDPA driver simply writes to the irqfd, which is polled by KVM. KVM will map the eventfd to an MSI-X interrupt and inject a virtual interrupt into the guest. In the guest, this interrupt will be handled by the guest virtio-net driver, which will populate the virtqueue and queue the buffer into the socket receive queue. Guest applications will be woken up and use recvmsg() to receive the packet.

With the cooperation between QEMU and vhost-vDPA, we accelerate the virtio datapath in a transparent, unified and safe way:

Native speed of the hardware - The vDPA device can DMA to or from guest memory directly.

A unified and transparent virtio interface - Guest virtio drivers may use vDPA devices transparently.

Minimum device exposure - The hardware implementations are hidden by the vhost-vDPA bus driver, so there’s no direct access to the hardware registers from guests.

Using vhost-vDPA as a network backend for VM with guest user space virtio-PMD

In this use case we now move from connecting the host and the guest kernel to connecting the host and the guest userspace DPDK application.

With the help of vIOMMU, the vDPA device can be controlled by a userspace virtio driver (such as a virtio PMD) running in the guest as illustrated in figure 3:

Figure 3: Using vIOMMU with vhost-vDPA

The userspace DPDK virtio PMD is used instead of the kernel virtio-net driver. The Virtio PMD allocates the virtqueues and buffers in the userspace. The DPDK userspace virtio-pci driver will cooperate with the guest kernel VFIO module for achieving the following:

Setting up DMA mapping - An IOMMU domain will be allocated by VFIO for a virtio-net device. The virtio PMD accesses the virtqueues and buffers through GVA (Guest Virtual Address). The GPA of the guest hugepages is used as IOVA. The IOVA(GPA)->GVA mapping is maintained and passed to VFIO. VFIO will convert GVA to GPA and lock the pages in memory.

With the help of the IOMMU domain, the guest IOMMU drivers finally set up the IOVA(GPA)->GPA mapping in the vIOMMU. QEMU will intercept the new mapping and notify the vhost-vDPA backend. The vhost-vDPA backend will convert GPA->VA and forward the IOVA(GPA)->VA mapping to the vhost-vDPA device. VA will be converted to PA and the host IOMMU driver will set up the IOVA(GPA)->PA mapping in the IOMMU hardware.

Accessing the emulated virtio-net device - The guest virtio PMD can access the emulated virtio-net device with the help of the guest VFIO PCI module through PCI commands. Those commands will be intercepted by QEMU and forwarded to the PCI bus emulation (blue box on the host inside QEMU) or the virtio-net device model (blue box on the host inside QEMU) and finally passed to the vhost-vDPA device.
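The chain of translations in this use case is easier to follow when written out. The sketch below composes the three stages with plain dictionaries at page granularity. The addresses and the names `viommu`, `qemu_memory` and `host_page_table` are made up for illustration and do not correspond to QEMU or kernel data structures.

```python
# Stage 1: the guest IOMMU driver programs IOVA(GPA) -> GPA in the vIOMMU.
# The guest uses the GPA of its hugepages as the IOVA, so this is an identity map.
viommu = {0x40000000: 0x40000000}

# Stage 2: QEMU knows where guest memory lives in its own address space (GPA -> VA).
qemu_memory = {0x40000000: 0x7F2000000000}

# Stage 3: the host kernel converts the QEMU VA to a host physical address (VA -> PA).
host_page_table = {0x7F2000000000: 0x2_8000_0000}


def build_host_iommu(viommu, qemu_memory, host_page_table):
    """Compose the three stages into the IOVA(GPA) -> PA table for the host IOMMU."""
    host_iommu = {}
    for iova, gpa in viommu.items():
        va = qemu_memory[gpa]      # GPA -> VA, done by QEMU's vhost-vDPA backend
        pa = host_page_table[va]   # VA -> PA, done on the host kernel side
        host_iommu[iova] = pa      # entry programmed by the host IOMMU driver
    return host_iommu


host_iommu = build_host_iommu(viommu, qemu_memory, host_page_table)
```

The device only ever sees the final table: it issues DMA with IOVA(GPA) and the host IOMMU resolves it to a host physical address, while the intermediate GPA and VA steps stay in software.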

With the help of vIOMMU and guest VFIO module a direct I/O path to userspace applications running inside the guest is provided. The vDPA device (VF) can use IOVA(GPA) to perform direct I/O into guest virtio PMD. DMA isolation is done with the implicit cooperation of host IOMMU and vIOMMU through the help of the vhost-vDPA device and QEMU’s vhost-vDPA backend. Virtio commands are relayed via QEMU’s vhost-vDPA backend and vhost-vDPA device to the vDPA device driver.

Use Case for virtio-vDPA

Using virtio-vDPA for container or bare metal applications

We can use the virtio-vDPA bus driver to enable vDPA devices to interact with additional kernel subsystems instead of the vhost subsystem described in the previous use cases. This is done by bridging between the vDPA bus and the virtio bus thus presenting a kernel visible virtio interface to other kernel subsystems.

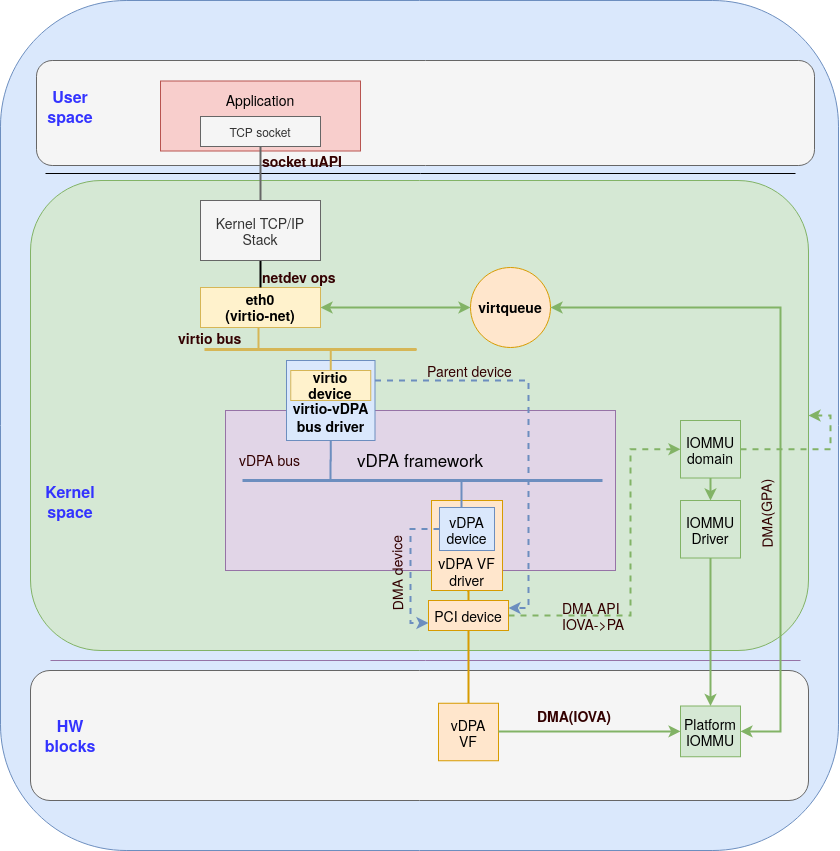

Figure 4 presents this approach:

Figure 4: Using Virtio-vDPA bus driver for container application

The figure demonstrates how a userspace application using the standard socket based API (such as a TCP socket) can send and receive packets from a vDPA device. In this case, the virtqueue belongs to the kernel and the kernel will allocate a dedicated DMA domain for protecting its memory. To clarify, in the use cases we presented with the vhost subsystem the virtqueues were allocated and populated by the userspace application, thus the kernel had no awareness of them.

Let’s now split the discussion into the TX flow (application to vDPA device) and the RX flow (vDPA device to application).

For the TX direction the application needs to use the sendmsg() system call with a pointer to a userspace buffer for sending packets. The kernel TCP/IP stack then copies the userspace buffer to the kernel buffer and determines that eth0 is the interface that is used for packet transmission. The virtio-net driver’s transmission routine will be called in order to perform the following tasks:

DMA mapping for the kernel buffer - allocate an IOVA for this buffer and then invoke DMA APIs to set up the IOVA->PA mapping in the platform IOMMU.

Setting up virtio descriptors - using IOVA as the address of the buffer.

Populating the virtqueues - this is done by making the descriptor available for the virtio device to consume.

Kick the virtqueue on the virtio bus - notify the device on the new available buffer.
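The four tasks above can be sketched with a toy model. This is not the virtio-net driver or the real split virtqueue layout: `ToyIommu`, `ToyVirtqueue`, `xmit` and `device_notify` are hypothetical names, each buffer is assumed to fit in one page, and descriptors are never recycled.

```python
from dataclasses import dataclass, field
from typing import Callable


class ToyIommu:
    """Stand-in for the platform IOMMU plus the kernel DMA API."""

    def __init__(self):
        self.table = {}          # IOVA -> PA
        self.next_iova = 0x1000

    def dma_map(self, pa, length):
        iova = self.next_iova    # allocate an IOVA for the buffer
        self.next_iova += 0x1000
        self.table[iova] = pa    # set up the IOVA -> PA mapping
        return iova


@dataclass
class ToyVirtqueue:
    """Heavily simplified virtqueue; field names are illustrative only."""
    size: int
    notify: Callable[["ToyVirtqueue"], None]
    desc: list = field(default_factory=list)   # descriptor table: (iova, length)
    avail: list = field(default_factory=list)  # descriptor indexes for the device

    def add_buf(self, iova, length):
        if len(self.desc) >= self.size:
            raise BufferError("virtqueue full")
        self.desc.append((iova, length))       # set up the descriptor with the IOVA
        self.avail.append(len(self.desc) - 1)  # make it available to the device

    def kick(self):
        self.notify(self)                      # notify the device


def xmit(vq, iommu, pa, length):
    iova = iommu.dma_map(pa, length)  # task 1: DMA mapping
    vq.add_buf(iova, length)          # tasks 2 and 3: descriptor + available
    vq.kick()                         # task 4: kick


iommu = ToyIommu()
sent = []  # (PA, length) pairs the toy device read via DMA


def device_notify(vq):
    # The device consumes available descriptors and DMAs through the IOMMU.
    while vq.avail:
        iova, length = vq.desc[vq.avail.pop(0)]
        sent.append((iommu.table[iova], length))


vq = ToyVirtqueue(size=256, notify=device_notify)
xmit(vq, iommu, pa=0x5000_0000, length=64)
```

Note that the descriptor carries the IOVA, never the physical address. The device can only reach the kernel buffer through the mapping that was installed for it.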

The virtio device (the yellow box inside virtio-vDPA bus driver) is presented by virtio-vDPA bus which converts the kick to vDPA bus operations. The vDPA VF driver will kick the hardware virtqueue in a vendor specific way. The vDPA hardware will process the new available descriptor and send the packet by performing DMA from the kernel buffer using IOVA.

When the hardware finishes the transmission, the TX completion interrupt will be handled by the vDPA VF driver. A virtio-net virtqueue callback set by the virtio-vDPA bus driver will be triggered. Then the virtio-net driver will perform post transmission tasks such as notifying the TCP/IP stack and reclaiming the memory.

For the RX direction, the vDPA hardware will DMA the packet into the buffer allocated by the kernel virtio-net driver (the eth0 yellow box on top of the virtio bus) via IOVA. Then the RX interrupt will be converted to a virtio-net RX virtqueue callback and NAPI (the kernel polling routine for networking devices) will be triggered. The packets will be passed to the TCP/IP stack and queued in the socket receive queue. The application can then use recvmsg() to receive the packet, which usually involves a copy from the kernel buffer to the userspace buffer.

With the help of the virtio-vDPA driver, vDPA devices can be used by various existing kernel subsystems (networking, I/O etc). Applications can make use of vDPA devices without any modification.
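From the application's point of view nothing vDPA specific is visible: it keeps using sendmsg() and recvmsg(). The minimal sketch below shows that application-facing API with Python's `socket` module. It uses a local `socketpair` so that it runs anywhere, which means the traffic does not pass through virtio-net or a vDPA device. A real application would use an ordinary network socket whose traffic is routed over the interface backed by the vDPA device.

```python
import socket

# A connected pair of datagram sockets stands in for two network endpoints.
tx, rx = socket.socketpair(socket.AF_UNIX, socket.SOCK_DGRAM)

# sendmsg() takes a list of buffers; the kernel copies them out of userspace.
sent = tx.sendmsg([b"hello ", b"vDPA"])

# recvmsg() copies the packet from the kernel buffer back into userspace.
data, ancdata, flags, addr = rx.recvmsg(1024)

tx.close()
rx.close()
```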

Summary

This is the third and final part of the vDPA kernel framework series.

The first post focused on the building blocks for this solution including vDPA bus, the vDPA bus driver and the vDPA device driver.

The second post focused on the vDPA bus drivers including the vhost-vDPA bus driver and the virtio-vDPA bus driver.

This post focused on the actual use cases of the vDPA kernel framework, showing how everything comes together for containers, bare metal and VMs (both guest kernel and guest userspace).

We mainly focused on use cases for the vhost-vDPA subsystem (the vhost-vDPA bus driver), but also provided a use case for the virtio-vDPA bus driver.

Looking forward, the vDPA kernel framework will be enhanced with features such as shared memory support (both scalable IOV (SIOV) and sub functions), multi queue support, interrupt offloading etc.

It should be noted that the community engaged in vDPA is open to suggestions for new features, directions and use cases (send us an email at virtio-networking@redhat.com).

In the next posts we will move on to providing hands on examples for using and developing drivers with the vDPA kernel framework.

About the author

Experienced Senior Software Engineer working for Red Hat with a demonstrated history of working in the computer software industry. Maintainer of qemu networking subsystem. Co-maintainer of Linux virtio, vhost and vdpa driver.
