You can’t scroll very far without seeing buzzwords like artificial intelligence (AI), machine learning (ML), and edge computing. But what happens when we combine these concepts to create AI at the edge? And should we combine them? The benefits of running AI in distributed edge computing environments can be enormous for use cases such as manufacturing, retail, transportation, logistics, smart cities, and more. The list is endless! In this blog post, we explore a few reasons why you should be using AI at the edge.

What is data at the edge?

Data at the edge includes IoT-generated data, user-generated data, and data handled by models running locally on edge-based devices. The focus here is on that local data and inferencing, not on training a large model in a centralized public cloud.

1. Faster decision making

One of the most significant advantages of deploying AI inferencing at the edge is the ability to act fast. By having compute power right next to the data source or end user, you can reduce the latency that comes with sending data over a network to a centralized server or cloud. This means that decisions can be made in near real-time, enabling quick response times and improving efficiency. For instance, in manufacturing, AI at the edge can help detect anomalies in machinery and trigger maintenance alerts before a catastrophic failure occurs.
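To make the manufacturing example concrete, here is a minimal sketch of a check that runs entirely on the edge device, so no reading has to cross the network before an alert fires. It is an illustration only: a rolling z-score stands in for a trained model, and the class name, window size, threshold, and sensor values are all assumptions rather than anything from a Red Hat product.

```python
from collections import deque
from statistics import mean, stdev


class RollingAnomalyDetector:
    """Flags readings that sit far outside the recent local history."""

    def __init__(self, window: int = 20, threshold: float = 3.0, min_samples: int = 5):
        self.history = deque(maxlen=window)  # only recent readings are kept on the device
        self.threshold = threshold           # allowed distance from the mean, in standard deviations
        self.min_samples = min_samples       # readings needed before the detector starts judging

    def is_anomaly(self, reading: float) -> bool:
        anomalous = False
        if len(self.history) >= self.min_samples:
            mu = mean(self.history)
            sigma = stdev(self.history)
            # A perfectly flat history (sigma == 0) is never flagged in this sketch.
            if sigma > 0 and abs(reading - mu) / sigma > self.threshold:
                anomalous = True
        if not anomalous:
            # Keep outliers out of the baseline so one spike does not mask the next.
            self.history.append(reading)
        return anomalous


def maintenance_alerts(readings):
    """Return the positions of readings that would trigger a local alert."""
    detector = RollingAnomalyDetector()
    return [i for i, value in enumerate(readings) if detector.is_anomaly(value)]


vibration = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.0, 5.0, 1.02]  # made-up sensor values
print(maintenance_alerts(vibration))
```

With these made-up values, only the 5.0 spike is flagged. A real deployment would likely swap the z-score for a trained model, but the shape stays the same: the decision is made next to the machine.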

2. Reduction of data transfer

Another advantage of using AI at the edge is the reduction of data transfer required when processing locally. When data is processed at the edge through inferencing, it reduces the amount of data that needs to be sent over the network, freeing up bandwidth for other critical applications. This is particularly important for industries such as transportation and logistics, where large amounts of data are generated from sensors and cameras.

By processing data at the edge, you can reduce the burden on your network, improve reliability, and decrease costs. Imagine an assembly line for bottling drinks. Each drink needs a label that is right side up and straight. This requires a camera to inspect each bottle as it passes by (often at high speed). If you constantly streamed a live video feed of the assembly line to help with quality assurance, it would use a tremendous amount of bandwidth. Traditional cloud-based solutions would require high-speed, expensive bandwidth from site to cloud. By hosting the application locally, outbound bandwidth is saved for critical traffic instead of being spent on raw video.
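The bottling example can be sketched in a few lines. The idea is that the frame is inspected on site and only a compact verdict leaves the building. Everything specific here is an assumption for illustration: the camera resolution, the tilt tolerance, and the `inspect_bottle` function, which stands in for a real vision model by taking an already-measured tilt angle.

```python
import json

# Assumed, illustrative values; a real line would use its own camera and tolerance.
FRAME_WIDTH, FRAME_HEIGHT, CHANNELS = 640, 480, 3
MAX_TILT_DEGREES = 2.0


def inspect_bottle(bottle_id: int, label_tilt_degrees: float) -> dict:
    """Stand-in for a local vision model: turn a measurement into a small verdict."""
    return {
        "bottle_id": bottle_id,
        "tilt": round(label_tilt_degrees, 1),
        "ok": abs(label_tilt_degrees) <= MAX_TILT_DEGREES,
    }


def payload_bytes(verdict: dict) -> int:
    """Size of the verdict once serialized for sending upstream."""
    return len(json.dumps(verdict).encode("utf-8"))


# One uncompressed frame at the assumed resolution, one byte per channel.
raw_frame_bytes = FRAME_WIDTH * FRAME_HEIGHT * CHANNELS

verdict = inspect_bottle(42, 0.7)
print(f"raw frame: {raw_frame_bytes} bytes, verdict: {payload_bytes(verdict)} bytes")
```

Real video would be compressed, so the gap is smaller in practice than this raw comparison suggests, but the design choice is the same: send the answer, not the footage.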

3. Ability to function when the network drops

One of the most underrated benefits of using AI at the edge is the ability to function without network connectivity. This means that critical operations can stay online during network outages. For example, in smart facilities like offices, AI at the edge can help manage critical building systems when network connectivity is compromised. Similarly, in logistics, AI at the edge can help track and monitor shipments in remote locations without relying on a network connection.
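One common way to get this behavior is a store-and-forward pattern: the edge application keeps making decisions locally, queues its results while the link is down, and delivers them in order once the link returns. The sketch below assumes a hypothetical `send` callable that returns `True` on success. The class name, buffer size, and fake link are illustrative, not part of any Red Hat API.

```python
from collections import deque
from typing import Callable


class StoreAndForward:
    """Buffers events locally and delivers them in order when the link allows."""

    def __init__(self, send: Callable[[dict], bool], max_buffered: int = 1000):
        self.send = send
        # Bounded buffer: when full, the oldest undelivered event is dropped.
        self.buffer = deque(maxlen=max_buffered)

    def publish(self, event: dict) -> None:
        """Record an event, then try to deliver everything that is pending."""
        self.buffer.append(event)
        self.flush()

    def flush(self) -> int:
        """Send pending events oldest first; stop at the first failure."""
        sent = 0
        while self.buffer:
            if not self.send(self.buffer[0]):
                break
            self.buffer.popleft()
            sent += 1
        return sent


# A fake uplink for demonstration: flip "up" to simulate an outage ending.
uplink = {"up": False}
received = []


def fake_send(event: dict) -> bool:
    if uplink["up"]:
        received.append(event)
        return True
    return False


queue = StoreAndForward(fake_send)
queue.publish({"shipment": "A", "status": "scanned"})    # link down: stays buffered
queue.publish({"shipment": "B", "status": "scanned"})    # link down: stays buffered
uplink["up"] = True
queue.publish({"shipment": "C", "status": "scanned"})    # link back: all three delivered
print(len(received), len(queue.buffer))
```

The local decision never waits on the network. Only the reporting is deferred, which is what lets critical operations stay online during an outage.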

4. Air-gapped environments

Sometimes, apps or data are so sensitive that they can't be connected to the internet, or they're just too remote. Running AI at the edge means gaining speed and insights from local models in disconnected deployments. For example, a remote station looking for new energy sources might need powerful onsite computing but be too remote for a connection to a cloud. Local hardware can quickly run machine learning models to expedite the process.

5. Data sovereignty

Using AI at the edge also contributes to data sovereignty. Because data never has to leave the area where it was first generated, there is less risk of it being intercepted or misused. This is particularly important for industries such as healthcare and finance, where sensitive data is involved. In a public sector example, keeping data inside a local facility keeps it out of unfriendly regions, whereas uploading it to a large public cloud could spread it across regions (of course, encryption and other security practices must still be used).

6. User experience and increasing application capabilities

Having compute resources right at your user’s location enables new use cases and enriches the user experience. Imagine going into a retail store where AI at the edge can analyze customer behavior and provide personalized recommendations, enhancing the shopping experience. AI at the edge not only reduces application response times but also opens up opportunities for near real-time prediction at the same place and moment as the user, from providing an outfit recommendation for a specific event to using past purchases to understand customer styles and consumer spending.

Small footprints and edge apps

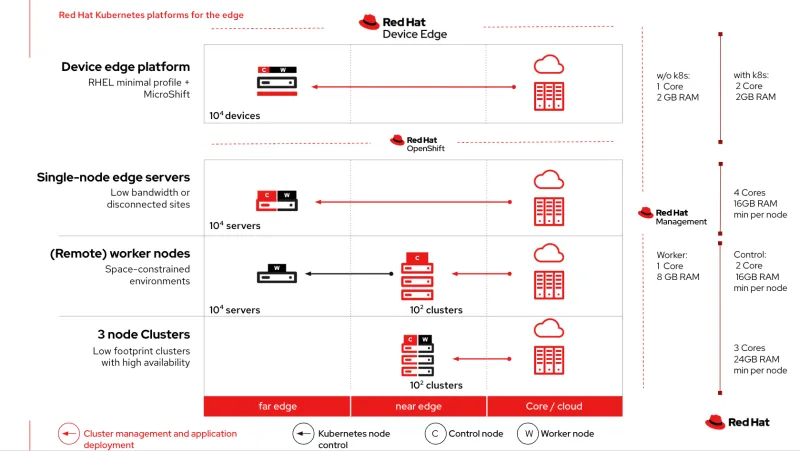

Red Hat offers multiple OpenShift topologies to cover various edge deployments. Three-node clusters bring high availability in small form factors. Remote worker nodes place a single worker node onsite while the high-availability control plane sits further away. Single-node OpenShift is our smallest OpenShift topology, which allows apps to run in the smallest and most remote locations. Red Hat OpenShift AI is extending its model serving capabilities to the edge by allowing machine learning models to be deployed to remote locations using single-node OpenShift. It provides inferencing capabilities in resource-constrained environments with intermittent or air-gapped network access. This technology preview feature provides organizations with a scalable, consistent operational experience from core to cloud to edge and includes out-of-the-box observability.

Need to go even smaller? We got you! Red Hat Device Edge runs on devices as small as 1-2 CPU cores and 2 GB of RAM. Whichever topology you choose, it’s all one platform (and yes, you can mix and match!). We provide a range of topologies so you can fit the right device size to your applications, reducing costs and unnecessary use of resources.

Extending out of the datacenter to include distributed systems has been an option for many years. We increasingly see new, decentralized architectures built to be self-contained and independently deployable, and this paradigm extends to cloud-native and AI applications. The goal is a developer-centric approach to building better applications by bringing new ways of producing and delivering software to the Red Hat edge portfolio, offering a new way to move applications closer to the data source or end user. This allows applications to run locally to improve resiliency and increase speed, while keeping costs down by provisioning only the resources needed at the location where they’ll be used.

The use of AI at the edge represents a significant shift in how we think about computing and data processing. By bringing compute power closer to the data source, we can realize numerous benefits, including faster decision making, reduced network bandwidth use, improved reliability, and enhanced data privacy. As we continue to see the growth of IoT devices and the explosion of data from sensors, the case for AI at the edge becomes even more compelling. In short, you’re going to start hearing even more about AI working in more places.

About the authors

Ben has been at Red Hat since 2019, where he has focused on edge computing with Red Hat OpenShift as well as private clouds based on Red Hat OpenStack Platform. Before this he spent a decade doing a mix of sales and product marketing across telecommunications, enterprise storage, and hyperconverged infrastructure.

Valentina Rodriguez is a Principal Technical Marketing Manager at Red Hat, focusing on the developer journeys in OpenShift and emerging technologies. Before this role, she worked with high-profile customers, helping them adopt new technologies, and worked closely with developers and platform engineers. Her background is in software engineering. She built software for 15 years, working in roles from Developer to Tech Lead and Architect, across retail, healthcare, financial, e-commerce, telco, and many other industries. She loves contributing to the community and the industry and has spoken at conferences such as O'Reilly, KubeCon, Open Source Summit, Red Hat DevNation Day, and others. She's very passionate about technology and has been pursuing several certifications in this space, from frameworks to Kubernetes and project management. She holds a Master's in Computer Science and an MBA.
