In the next few articles we will present an emerging use case for vDPA that we call hyperscale. In this post we give an overview of hyperscale and set the stage for the rest of the series. Let's go!

Hyperscale is a use case where storage, networking and security are offloaded to smartNICs (programmable accelerators) to free up the host server's resources to run workloads such as VMs and containers. To give these workloads the “look & feel” of running as usual on the host server, while in practice their storage, networking and security run on a PCI connected smartNIC, resources must be mapped from the host server to the smartNIC.

For those not familiar with vDPA (virtio data path acceleration), please refer to our previous articles such as Introduction to vDPA kernel framework or a deep dive into vDPA framework. We refer to the vDPA approach as virtio/vDPA since vDPA covers the control plane while virtio covers the data plane.

Resource mapping is where vendor specific closed solutions and, in contrast, virtio/vDPA technologies come into play: they provide the mapping that brings the hyperscale use case to life. When focusing on virtio/vDPA, we talk about associating vDPA instances with workloads.

In the next sections we will discuss existing technologies for creating vDPA instances and new approaches that help address the challenges of scaling the number of virtio/vDPA instances.

This article is a solution overview and provides a high level perspective of this work. Future blogs will provide a technical deep dive and hands on instructions for this solution.

Demand for hyper-scalability and challenges

As mentioned previously, hyperscale use cases involving resource offloading to smartNICs are becoming common as part of the effort to run 10K+ or even 100K+ workloads on a single host server. For workloads to feel they are simply communicating with the host server's Linux operating system, while in practice they are communicating with the smartNIC, we need to map resources. Different vendor specific solutions exist to accomplish this, similar to vendor specific SR-IOV implementations; however, we will only cover the virtio/vDPA approach.

Obviously a basic question is why look at virtio/vDPA solutions for this problem if vendors can provide the exact same capabilities through their own solutions which may also be more optimized. Putting aside the topic of wanting to avoid closed proprietary solutions (virtio/vDPA is open and standard) the key value virtio/vDPA brings in this context is a single data plane / control plane for all workloads.

This means that by using virtio/vDPA for the hyperscale use case all workloads (VMs, containers, Linux processes) can share the exact same control plane / data plane which significantly simplifies maintaining, troubleshooting and controlling resources.

Going back to our use case, virtio/vDPA through traditional technology such as SR-IOV using PFs (physical functions) and VFs (virtual functions) provides facilities to achieve basic scalability. Using VFs virtio/vDPA can reach several hundreds of instances to be consumed by workloads on the host server.

When attempting to scale the virtio/vDPA instances from hundreds to thousands, however, a number of issues surface. Reaching 1K+ virtio/vDPA instances requires a new method which we refer to as hyper-scalability or, in short, hyperscale.

We will start by reviewing the existing virtio/vDPA SR-IOV technology and its limitations. We will then discuss the challenges of scaling to more virtio/vDPA instances and present a number of methods to overcome the SR-IOV limitation for hyperscale use cases.

Short recap of SR-IOV based vDPA

To increase the effective hardware resource utilization, vDPA vendors usually choose to implement vDPA hardware that is built on top of the Single Root I/O Virtualization and Sharing (SR-IOV) specification, an extension to the PCI Express specification. SR-IOV allows a single hardware PCIe device to be partitioned and appear as multiple devices, or virtual functions (VFs). Each VF can then be associated with a VM or a container workload.

The physical function (PF) is used as the management interface to the VFs, which includes for example the action of provisioning a VF. SR-IOV usually requires platform support for an IOMMU (input-output memory management unit) in order to isolate both the DMA (direct memory access) and the interrupts, ensuring a secure environment for each VF.

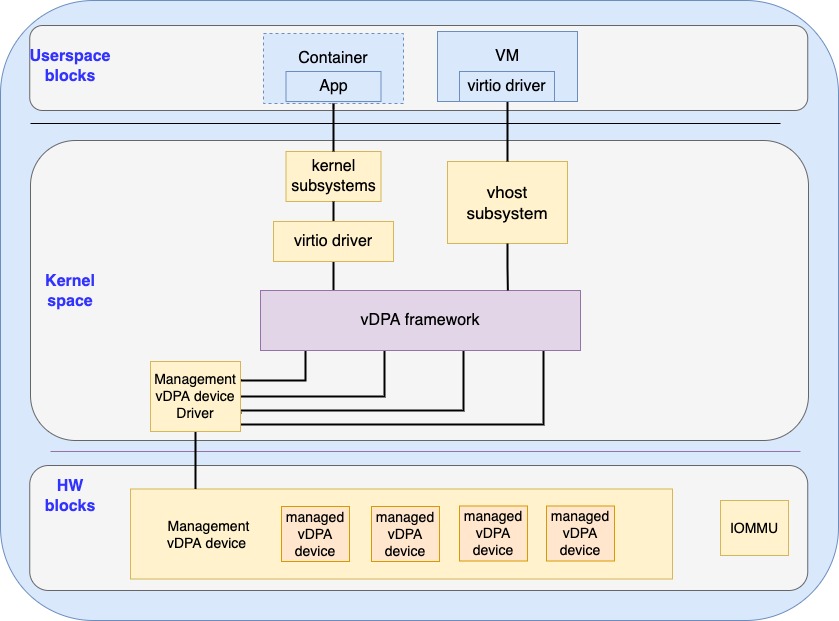

Figure 1 demonstrates the architecture of SR-IOV based vDPA.

The PF presents multiple VFs. The VF driver talks directly to the VF and presents vDPA devices to the vDPA framework. Users may choose to use the kernel virtio driver to serve containerized (or bare metal) applications via the kernel subsystems, or the vhost subsystem to serve VMs.

When looking at vDPA in relation to VFs, this means that the vendor associates a virtio/vDPA instance with each VF, so the total number of vDPA instances is limited by the maximum number of VFs provisioned. This model works well for several hundred virtio/vDPA instances; however, it doesn’t scale well to larger numbers. This is due to a number of reasons:

- Resources limitation: Based on the spec, SR-IOV allows the creation of up to 65535 VFs, which would imply the same number of virtio/vDPA instances. In practice, however, it’s very difficult to reach that limit because each VF must be assigned its own PCIe resources, such as a bus/device/function number, BAR/MMIO areas and a configuration space. These additional resource requirements make things challenging and require complicated bookkeeping in both the hardware and the hypervisor. For example, when the device tries to present more than 256 VFs, some VFs need to use a PCI bus number other than the one the PF uses, and a VF can’t be located on a bus number that is smaller than its PF's. When there are not enough bus numbers or other PCIe resources, the software has to reduce the number of VFs for this PF.

- Static provisioning: VFs can't be created on demand. Instead, the number of VFs that will be needed has to be calculated before the assignment is performed. This means the hypervisor might need to create thousands of VFs even if only a few of them are used in practice.

- Flexibility: The PCIe specification requires all VFs of a PF to have the same device ID (the ID used by the driver to identify a specific type of device), which makes it impossible to have both networking and block vDPA devices (used for storage use cases) connected to the same PF.
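To make the bus number bookkeeping from the first item more concrete, here is a minimal sketch of the routing ID arithmetic. It relies on the SR-IOV rule that the routing ID of the n-th VF is the PF's routing ID plus First VF Offset plus (n - 1) times VF Stride. The PF location (bus 0x3b), the offset and stride of 1, and the count of 1024 VFs are assumptions chosen for illustration, not values from any real device.

```python
# Sketch: SR-IOV routing ID arithmetic. All device values below are made up.

def vf_routing_id(pf_rid: int, first_vf_offset: int, vf_stride: int, n: int) -> int:
    """Routing ID of the n-th VF (n starts at 1)."""
    return pf_rid + first_vf_offset + (n - 1) * vf_stride


def bus_number(rid: int) -> int:
    """The upper 8 bits of a 16 bit routing ID hold the bus number."""
    return (rid >> 8) & 0xFF


# Hypothetical PF on bus 0x3b, function 0, with offset 1 and stride 1.
PF_RID = 0x3B << 8
NUM_VFS = 1024

vf_rids = [vf_routing_id(PF_RID, 1, 1, n) for n in range(1, NUM_VFS + 1)]
buses = sorted({bus_number(rid) for rid in vf_rids})

print(f"VF 255 is on bus {bus_number(vf_rids[254]):#04x}")
print(f"VF 256 is on bus {bus_number(vf_rids[255]):#04x}")
print(f"{NUM_VFS} VFs consume {len(buses)} bus numbers")
```

With these assumed values the VFs spill onto buses above the PF's own bus once the 8 bit device/function space is exhausted, and every additional bus has to be reserved by the platform ahead of time.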

Aside from these limitations, we expect to see additional requirements which SR-IOV cannot satisfy. This includes for example:

- Sophisticated hardware that is hard to partition into VFs, such as GPUs

- Presenting multiple vDPA instances on a single VF, such as in the case of a nested virtualization environment

- Supporting vDPA instances for non PCIe devices, given that virtio supports additional transport layers such as MMIO and channel I/O

Introducing the vDPA hyperscale infrastructure

All of the above calls for a new device virtualization technology for vDPA decoupled from SR-IOV. When creating new vDPA devices we would like to provide the following new capabilities:

- Lightweight vDPA device: the ability to have a vDPA device with minimal (or even no) transport (PCIe) resources

- Secure and scalable DMA context: the ability to scale vDPA instances with an isolated DMA context for a safe datapath assignment. The key point is to maintain the security that the DMA context provides for each of the vDPA devices. For PCIe it means we need a finer grained DMA isolation than the current BDF (bus/device/function number)

- Interrupt scalability: support efficient interrupt delivery when scaling vDPA instances. Each vDPA device should have its own interrupt without any limitation from the transport. Without this capability, interrupt sharing between the vDPA devices will be required, resulting in reduced performance

- Dynamic provisioning: creating and destroying vDPA instances on demand for efficient resource utilization. This replaces the static provisioning currently done with SR-IOV

Let’s now dive into each of these requirements in the following sections.

Lightweight vDPA device

The term lightweight means in this context that the vDPA instance should have as few transport (PCIe) specific resources associated with it as possible. To achieve this, we require a new approach that allows the vDPA devices to be created and configured in a non transport (PCIe) specific manner. The management vDPA device and managed vDPA devices are introduced to solve this problem:

- Managed vDPA device: a lightweight vDPA device with minimal transport specific resources (e.g. the occupation of a bus, device and function number) that can be assigned independently to a container or a VM

- Management vDPA device: the device that provides the facilities to manage the managed vDPA devices and to transport their basic virtio facilities

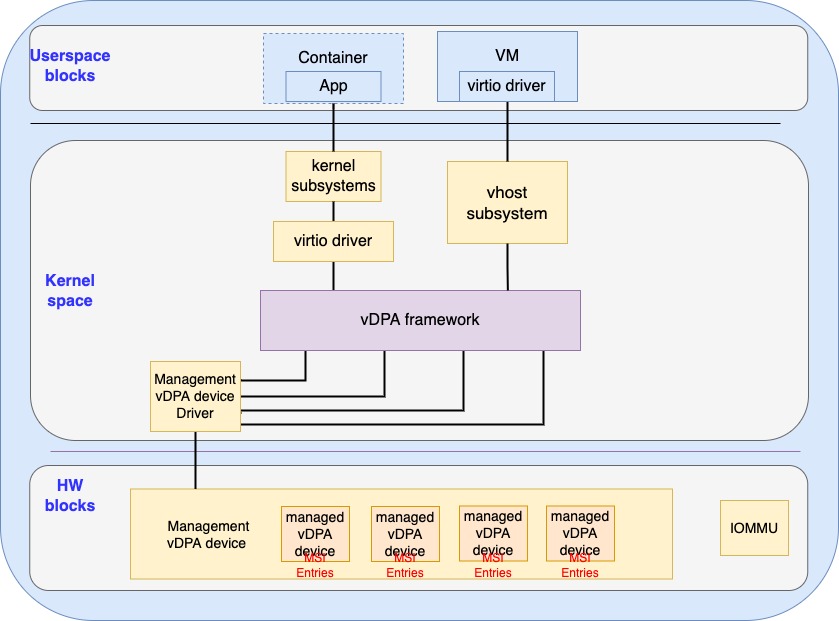

The major difference between this model and SR-IOV is that the presentation of vDPA devices now requires cooperation between the managed vDPA device and the hypervisor, as shown in Figure 2:

Figure 2: Managed vDPA devices


The management vDPA device is probed and configured through a transport layer specific way (for example PCIe). The managed vDPA device is probed and configured through the management vDPA device.

The device or transport specific interface of the management vDPA device is designed to be multiplexed in a scalable way among the managed vDPA devices, which is where the transport specific resources are saved. Such multiplexing could be done with a queue or register based interface, in either a vendor specific way or a virtio standard way (which is being proposed).

Unlike the case of SR-IOV, where the VF driver presents a vDPA device and talks to the individual VF device, at the transport level the only visible device is the management vDPA device. The presentation of the vDPA device to the host is done by the management vDPA device driver, which sends the vDPA bus commands and the managed vDPA device identifier to the management vDPA device (inside the HW).

The management vDPA device will then perform the necessary operations on the designated managed vDPA device in an implementation specific way. At the device level, the managed vDPA device could be associated with minimal or even no transport specific resources, making it much more lightweight than a VF. This saves valuable transport specific resources, which provides improved scalability compared with SR-IOV.
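The multiplexing described above can be sketched as a toy model in which every command carries the identifier of the managed device it targets. The command set (`Cmd`), the class names and the `submit` method are all hypothetical and only illustrate the idea; they do not reflect any vendor interface or the proposed virtio interface.

```python
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import Dict


class Cmd(Enum):
    """Hypothetical subset of vDPA bus commands."""
    GET_FEATURES = auto()
    SET_STATUS = auto()
    GET_STATUS = auto()


@dataclass
class ManagedDevice:
    """Lightweight device: plain state, no bus/device/function of its own."""
    features: int
    status: int = 0


@dataclass
class ManagementDevice:
    """The only device visible on the transport; it multiplexes commands."""
    managed: Dict[int, ManagedDevice] = field(default_factory=dict)

    def submit(self, dev_id: int, cmd: Cmd, value: int = 0) -> int:
        dev = self.managed[dev_id]      # KeyError for an unknown identifier
        if cmd is Cmd.GET_FEATURES:
            return dev.features
        if cmd is Cmd.SET_STATUS:
            dev.status = value
            return dev.status
        if cmd is Cmd.GET_STATUS:
            return dev.status
        raise ValueError(f"unsupported command: {cmd}")


# The driver reaches two managed devices through one transport visible device.
mgmt = ManagementDevice()
mgmt.managed[0] = ManagedDevice(features=0x1)
mgmt.managed[1] = ManagedDevice(features=0x3)

mgmt.submit(1, Cmd.SET_STATUS, 0x0F)
print(mgmt.submit(0, Cmd.GET_STATUS), mgmt.submit(1, Cmd.GET_STATUS))
```

In this sketch, adding a managed device costs one dictionary entry rather than a new PCIe function, which is the property the lightweight model is after.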

Secure and scalable DMA context

The managed vDPA device is invisible at the transport level, so it requires a secure DMA context that can be used at the sub device level. This is different from SR-IOV, where the DMA is isolated for each VF, which is visible in the transport layer with a unique requester ID (made of bus, device and function number).

Fortunately, several new methods can be used to address this. One example is the Process Address Space ID (PASID), a 20 bit identifier introduced in the PCIe spec to further differentiate DMA contexts. A PASID capable platform IOMMU can then provide different DMA contexts identified by requester ID and PASID.

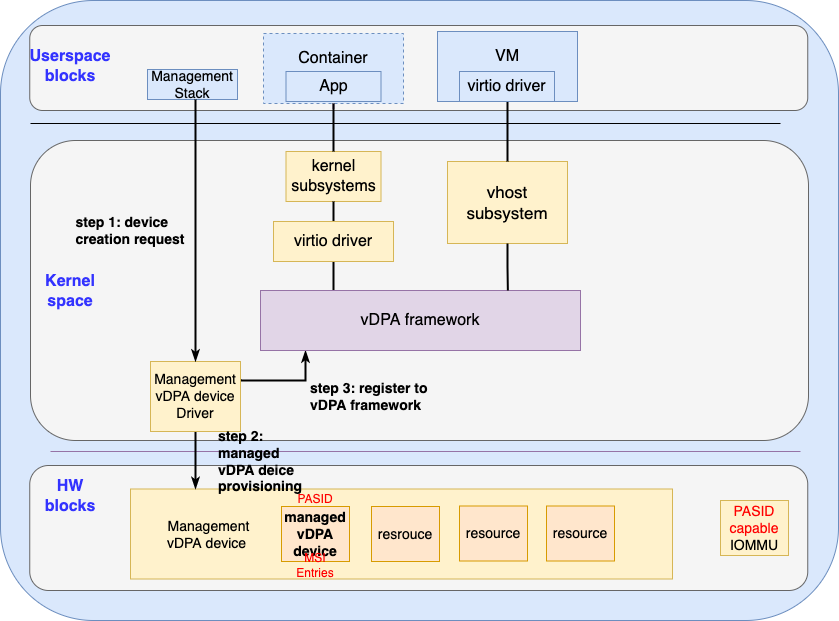

A single managed vDPA device could be identified by one or several unique PASIDs plus the requester ID of the management vDPA device, as shown in the following figure:

Figure 3: Secure DMA context with PASID


As shown in Figure 3, the kernel will allocate a unique address space ID (a PASID for PCI devices) for each managed vDPA device. This PASID will be assigned to the managed vDPA device by the management vDPA device driver during provisioning. The managed vDPA device will use its own PASID together with the requester ID of the management vDPA device in its PCIe transactions for DMA.

The DMA mapping is done with the assistance of the PASID capable platform IOMMU: the management vDPA device driver sends the mapping with the PASID to the IOMMU driver, and the IOMMU driver then sets up the PASID based DMA mappings in the hardware IOMMU. Though the figure only shows a single PASID per managed vDPA device, a single managed vDPA device is allowed to use multiple PASIDs, for example for control path (virtqueue) interception.
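As a rough illustration of why the (requester ID, PASID) pair gives each managed device its own DMA context, the following toy model keeps one private page table per pair. The `PasidIommu` class, the 4 KiB page size and the addresses are assumptions made for this sketch; a real IOMMU driver is far more involved.

```python
PAGE_SHIFT = 12                 # 4 KiB pages, an assumption of this sketch
PASID_BITS = 20
MAX_PASIDS = 1 << PASID_BITS    # number of distinct PASID values


class PasidIommu:
    """Toy IOMMU: one private page table per (requester ID, PASID) pair."""

    def __init__(self) -> None:
        self._tables = {}

    def map(self, rid: int, pasid: int, iova: int, phys: int) -> None:
        if not 0 <= pasid < MAX_PASIDS:
            raise ValueError("PASID does not fit in 20 bits")
        table = self._tables.setdefault((rid, pasid), {})
        table[iova >> PAGE_SHIFT] = phys >> PAGE_SHIFT

    def translate(self, rid: int, pasid: int, iova: int) -> int:
        table = self._tables.get((rid, pasid), {})
        page = table.get(iova >> PAGE_SHIFT)
        if page is None:
            raise PermissionError("DMA fault: no mapping in this context")
        offset = iova & ((1 << PAGE_SHIFT) - 1)
        return (page << PAGE_SHIFT) | offset


MGMT_RID = 0x3B00               # requester ID of the management device (made up)
iommu = PasidIommu()
# Two managed devices share the requester ID but use different PASIDs.
iommu.map(MGMT_RID, pasid=1, iova=0x1000, phys=0xA000)
iommu.map(MGMT_RID, pasid=2, iova=0x1000, phys=0xB000)

print(hex(iommu.translate(MGMT_RID, 1, 0x1010)))
print(hex(iommu.translate(MGMT_RID, 2, 0x1010)))
```

The same I/O virtual address resolves to different physical pages depending on the PASID, and an address mapped in one context faults in another, which is the isolation property the managed devices rely on.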

Alternatively, a vDPA vendor can choose to implement the sub device address space isolation by using an address space identifier (ASID) with a vendor specific device MMU, as shown in the following figure:

Figure 4: Secure DMA context with device MMU


As shown in Figure 4, the vendor device can have its own MMU that is capable of differentiating DMA from different managed vDPA devices based on the vendor specific implementation of ASID. The managed vDPA parent will then send the DMA mapping with the ASID to the management vDPA device driver and let it program the device MMU. With the help of the device MMU, the PASID is not a must: the management vDPA driver can choose not to use the PASID that might be provided by the platform.

Both PASID and a device MMU give us a secure sub device (transport aware) level DMA context that scales better than what SR-IOV can provide.

Interrupt scalability

In order to save transport (for example PCIe) specific resources, the managed vDPA device is usually not associated with full transport specific resources (such as a config space) that the transport and its driver could identify directly. This means that the managed vDPA device can’t have the PCIe message signaled interrupt (MSI) capabilities that a VF can have (it might not even have a config space). Though the management vDPA device is transport (PCIe) aware, PCIe limits a function to 2048 MSI-X entries, a limit that needs to be overcome. A per managed vDPA device interface to store MSI data is therefore required, as shown in the following figure:

Figure 5: MSI storage in managed vDPA


The management vDPA device should offer an interface for configuring MSI entries per managed vDPA device per virtqueue. The interface could be either a vendor specific method or a virtio standard way. This storage is different from the MSI/MSI-X capability offered by the management vDPA device, which serves only the management device itself.

The management vDPA device driver is in charge of allocating the MSI entries per managed vDPA device per virtqueue during provisioning, and the managed vDPA device is expected to store those MSI entries by itself in an implementation specific way. When the vDPA device wants to raise an MSI interrupt, it will use the MSI entry associated with the virtqueue in the PCIe transaction. With the help of the storage in the managed vDPA device, more MSI entries than what can be stored in the PF’s PCIe capability can be provided, which avoids interrupt sharing and improves interrupt scalability.
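A minimal sketch of such per device, per virtqueue MSI storage could look like the following. The `ManagedMsiStore` class, the device and virtqueue counts, and the address/data values are placeholders invented for illustration; real entries would be allocated by the platform's interrupt subsystem.

```python
from typing import Dict, NamedTuple, Tuple

MSIX_MAX_PER_FUNCTION = 2048    # MSI-X table limit of a single PCIe function


class MsiEntry(NamedTuple):
    address: int
    data: int


class ManagedMsiStore:
    """Toy MSI storage keyed by (managed device ID, virtqueue index)."""

    def __init__(self) -> None:
        self._entries: Dict[Tuple[int, int], MsiEntry] = {}

    def set_entry(self, dev_id: int, vq: int, entry: MsiEntry) -> None:
        self._entries[(dev_id, vq)] = entry

    def entry_for(self, dev_id: int, vq: int) -> MsiEntry:
        """Entry the device would use when this virtqueue raises an interrupt."""
        return self._entries[(dev_id, vq)]

    def __len__(self) -> int:
        return len(self._entries)


store = ManagedMsiStore()
NUM_DEVICES, VQS_PER_DEVICE = 1024, 4     # illustrative scale
for dev_id in range(NUM_DEVICES):
    for vq in range(VQS_PER_DEVICE):
        # Placeholder address/data values; real ones come from the platform.
        store.set_entry(dev_id, vq, MsiEntry(0xFEE00000, dev_id * VQS_PER_DEVICE + vq))

print(len(store), len(store) > MSIX_MAX_PER_FUNCTION)
```

Because the entries live with the managed devices rather than in the management device's own MSI-X table, their number is bounded by device storage and not by the 2048 entry limit of a single function.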

Dynamic provisioning

To have better resource utilization in both hardware and software, dynamic vDPA provisioning is required. The SR-IOV style of static provisioning won’t scale when there could be 10K+ vDPA instances, since resources are wasted even when there’s no real user, and it lacks sufficient flexibility when multiple types of devices with different features are required. The management vDPA device is expected to expose a vDPA device provisioning interface to the management vDPA device driver, as shown in Figure 6:

Figure 6: Managed vDPA provisioning


The management stack is in charge of provisioning both the software and hardware resources. As shown in Figure 6, before a VM or container is provisioned:

- The management stack will ask the management vDPA device driver to create a vDPA device with the required features or attributes.

- The management vDPA device driver will then ask the management vDPA device to allocate the necessary hardware resources for the managed vDPA device through the provisioning API. After the hardware resources are ready, the management vDPA device driver will provision the managed vDPA device and the resources (memory, ASID, MSI entries) associated with it.

- When the managed vDPA device and its resources are ready, the management vDPA device driver will register the managed vDPA device into the vDPA framework.

Similarly, after the management stack destroys a VM or container, it will first unregister the vDPA device and reclaim the software resources that were allocated for the VM and the vDPA device. Then it will request the management vDPA device driver to free the hardware resources associated with it. Such a dynamic provisioning model allows better resource utilization and better flexibility than what SR-IOV can provide.
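The create and destroy ordering described above can be sketched as follows. Every class and method name here is hypothetical, the `registered` dictionary merely stands in for the vDPA framework, and the ASID and MSI values are placeholders; the point is only the order of operations.

```python
from dataclasses import dataclass
from itertools import count
from typing import Dict, List, Set


@dataclass
class ManagedVdpa:
    dev_id: int
    features: int
    asid: int
    msi_entries: List[int]


class ManagementDevice:
    """Hypothetical hardware side: hands out and reclaims device slots."""

    def __init__(self) -> None:
        self._ids = count()
        self.in_use: Set[int] = set()

    def allocate(self) -> int:
        dev_id = next(self._ids)
        self.in_use.add(dev_id)
        return dev_id

    def free(self, dev_id: int) -> None:
        self.in_use.remove(dev_id)


class ManagementDriver:
    """Hypothetical driver side: provisions and registers managed devices."""

    def __init__(self, hw: ManagementDevice) -> None:
        self.hw = hw
        self._msi = count()                            # placeholder MSI allocator
        self.registered: Dict[str, ManagedVdpa] = {}   # stands in for the vDPA framework

    def create(self, name: str, features: int, num_vqs: int) -> ManagedVdpa:
        if name in self.registered:
            raise ValueError(f"{name} already exists")
        dev_id = self.hw.allocate()                    # hardware resources first
        dev = ManagedVdpa(dev_id, features, asid=dev_id,
                          msi_entries=[next(self._msi) for _ in range(num_vqs)])
        self.registered[name] = dev                    # register once everything is ready
        return dev

    def destroy(self, name: str) -> None:
        dev = self.registered.pop(name)                # unregister first
        self.hw.free(dev.dev_id)                       # then free hardware resources


hw = ManagementDevice()
driver = ManagementDriver(hw)
driver.create("vdpa0", features=0x1, num_vqs=2)
driver.create("vdpa1", features=0x3, num_vqs=4)
driver.destroy("vdpa0")
print(sorted(driver.registered), sorted(hw.in_use))
```

Nothing is reserved in this model until `create` is called, and everything is returned on `destroy`, in contrast with the static SR-IOV approach where VFs have to be created up front.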

Summary

In this blog we presented the model of the managed vDPA device and the management vDPA device for providing an efficient and secure hyperscale virtio/vDPA. This will enable the creation of 10K+ vDPA devices for a physical device and enable the offloading of networking and storage resources to smartNICs to scale the workloads running on servers.

Our goal is to provide improved scalability and flexibility compared to what vDPA with SR-IOV can currently provide. We covered the different components of this solution including lightweight managed vDPA devices, using PASID or similar secure DMA contexts, interrupt scalability and dynamic provisioning.

These approaches will be supported in the virtio/vDPA framework, and some parts will also be added to the virtio spec.

Future articles will dive deeper into the technical implementations of the virtio/vDPA hyperscale use case.

About the authors

Experienced Senior Software Engineer working for Red Hat with a demonstrated history of working in the computer software industry. Maintainer of qemu networking subsystem. Co-maintainer of Linux virtio, vhost and vdpa driver.
