Starting with Red Hat Ceph Storage 3.0, Red Hat added support for Containerized Storage Daemons (CSD), which allow the software-defined storage components (Ceph MON, OSD, MGR, RGW, etc.) to run within containers. CSD avoids the need for dedicated nodes for storage services, reducing both CAPEX and OPEX by co-locating containerized storage daemons.

Ceph-Ansible provides the mechanism needed to apply resource fencing to each storage container, which is useful when running multiple storage daemon containers on one physical node. In this blog post, we cover strategies for deploying RGW containers and guidance on sizing their resources. Before we dive into performance, let's look at the different ways to deploy RGW.
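Ceph-Ansible applies these limits for you. To make "resource fencing" concrete at the container level, the sketch below builds Docker `--cpus` and `--memory` options for each daemon container. It assumes, rather than confirms, that the fencing is expressed through Docker's CPU and memory limits; the helper, the container names, the image placeholder, and the 4g memory cap are all hypothetical.

```python
from dataclasses import dataclass

# Placeholder only; substitute the container image your deployment actually uses.
IMAGE = "<ceph-container-image>"


@dataclass
class ContainerLimits:
    """Hypothetical resource budget for one containerized Ceph daemon."""
    name: str
    cpus: float   # Docker --cpus: max CPU time, in units of whole cores
    memory: str   # Docker --memory: hard memory cap, e.g. "4g"


def docker_limit_args(limits: ContainerLimits) -> list[str]:
    """Return `docker run` options that fence one daemon's CPU and memory."""
    return [
        "--name", limits.name,
        f"--cpus={limits.cpus:g}",
        f"--memory={limits.memory}",
    ]


if __name__ == "__main__":
    # Two RGW containers on the same storage node, each capped independently
    # so neither can consume the CPU left for the other containers on the host.
    for idx in (0, 1):
        rgw = ContainerLimits(name=f"ceph-rgw-{idx}", cpus=4, memory="4g")
        print(" ".join(["docker", "run", "-d", *docker_limit_args(rgw), IMAGE]))
```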

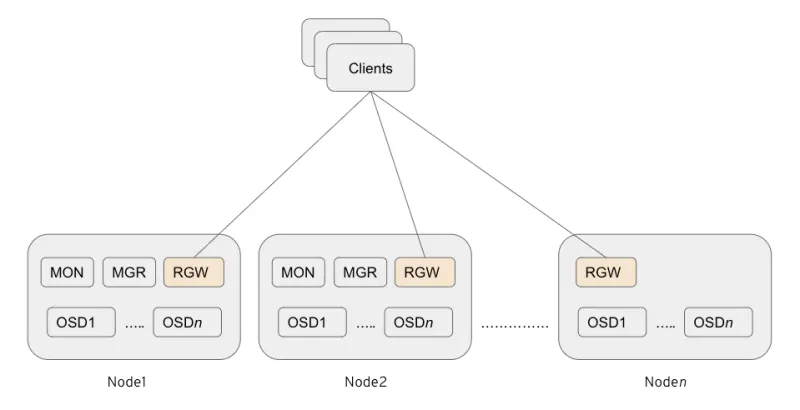

Co-located RGW

- Does not require dedicated nodes for RGWs (can reduce CAPEX and OPEX).
- A single Ceph RGW container instance is placed on storage nodes, co-resident with other storage containers.
- Starting with Red Hat Ceph Storage 3.0, this is the preferred way to deploy RGWs.

Figure 1: Co-resident RGW deployment strategy

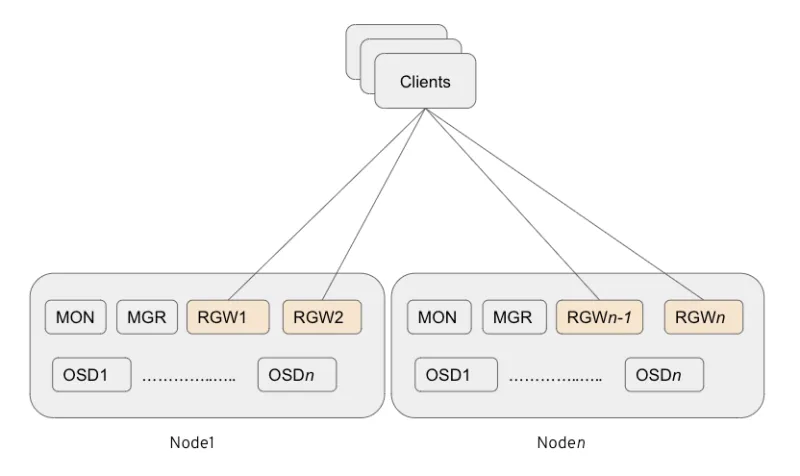

Multi Co-located RGW

- Does not require dedicated nodes for RGWs (can help reduce CAPEX and OPEX).
- Multiple Ceph RGW instances (2x instances per storage node in our tests) run co-resident with other storage containers.
- Our testing showed this option delivers the highest performance without incurring additional cost.

Figure 2: Multiple co-resident (2 x RGW Instance) deployment strategy

Standalone RGW

- Requires dedicated nodes for RGWs.
- The Ceph RGW component is deployed on a dedicated physical or virtual node.
- Starting with Red Hat Ceph Storage 3.0, this is no longer the preferred way to deploy RGWs.

Figure 3: Standalone RGW deployment strategy

Performance Summary

(I) RGW deployment and sizing guidelines

In the last section, we looked at different ways to deploy Ceph RGW. Here we compare the performance of each method. To gauge performance, we ran multiple tests varying the RGW deployment strategy and the number of CPU cores per RGW instance, across small- and large-object, write and read workloads. The results are as follows.

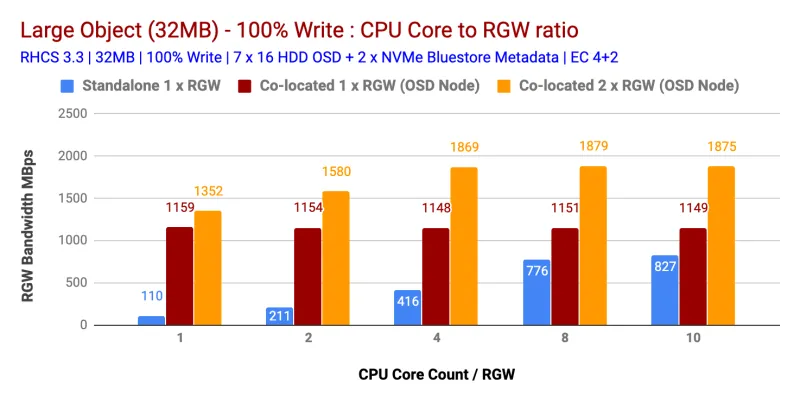

100% Write Workload

As shown in Chart 1 and Chart 2:

- The co-located (1x) RGW instance outperformed the standalone RGW deployment for both small and large object sizes.
- Similarly, multiple co-resident (2x) RGW instances outperformed the single co-resident (1x) RGW instance, delivering 2328 Ops and 1879 MBps for small and large object sizes, respectively.
- Across multiple tests, 4 CPU cores per RGW instance was the optimal ratio of CPU resources to RGW instances. Allocating more CPU cores to an RGW instance did not deliver higher performance.

Chart 1: Small Object 100% Write test

Chart 2: Large Object 100% Write test
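To apply the same "more cores stopped helping" reasoning to your own benchmark runs, a small helper like the one below picks the point where adding cores per RGW instance stops paying off. It is a hypothetical sketch, not part of our test tooling; the 5% default tolerance is an arbitrary assumption, and the throughput figures are whatever you measure yourself.

```python
def smallest_sufficient_cores(results: dict[int, float],
                              tolerance: float = 0.05) -> int:
    """Return the fewest cores per RGW instance whose throughput is within
    `tolerance` (a fraction, e.g. 0.05 = 5%) of the best result observed.

    `results` maps cores-per-instance -> measured throughput (ops/s or MB/s)
    from your own benchmark runs.
    """
    if not results:
        raise ValueError("need at least one measurement")
    if not 0.0 <= tolerance < 1.0:
        raise ValueError("tolerance must be in [0, 1)")
    threshold = max(results.values()) * (1.0 - tolerance)
    # Scan core counts in ascending order; the first one that already reaches
    # (almost) peak throughput is where extra cores stop paying off.
    for cores in sorted(results):
        if results[cores] >= threshold:
            return cores
    raise AssertionError("unreachable: the best run always meets the threshold")
```

With `tolerance=0.0` it returns the smallest core count that achieved the single best result; a small positive tolerance keeps run-to-run noise from pushing the answer upward.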

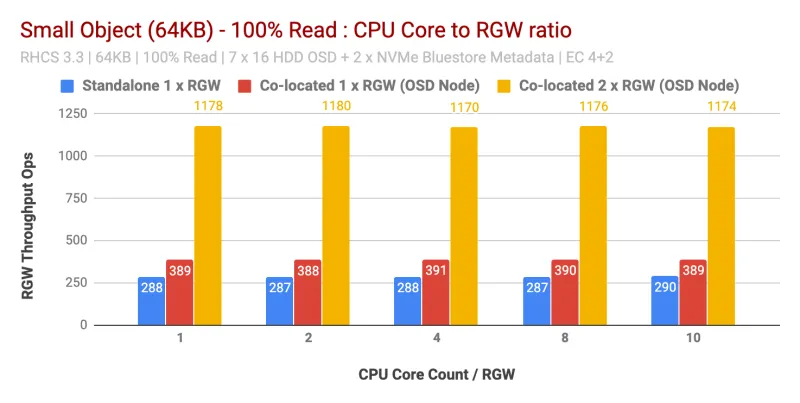

100% Read Workload Performance

Interestingly, for the read workload, increasing the number of CPU cores per RGW instance did not improve performance for either small or large objects. Results with 1 CPU core per RGW instance were almost identical to results with 10 CPU cores per RGW instance.

This is consistent with our previous testing, in which read workloads did not consume a significant amount of CPU, perhaps because Ceph uses systematic erasure coding and does not need to decode chunks during reads. So if the RGW workload is read-intensive, over-allocating CPU does not help.
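In a systematic code, the original data chunks are stored unchanged alongside the parity, so a healthy read is a plain concatenation and decoding math is only needed when a chunk is missing. That is the property the explanation above relies on. The sketch below illustrates it with single XOR parity (one parity chunk, tolerating one lost chunk), a deliberately simplified stand-in: Ceph's erasure-code plugins use different, stronger codes, so treat this as an illustration of the property, not of Ceph's implementation.

```python
from functools import reduce
from typing import Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> list[bytes]:
    """Systematic encode: k data chunks stored verbatim, plus one XOR parity chunk."""
    chunk_len = -(-len(data) // k)                 # ceiling division
    padded = data.ljust(chunk_len * k, b"\0")      # pad so chunks are equal length
    chunks = [padded[i * chunk_len:(i + 1) * chunk_len] for i in range(k)]
    return chunks + [reduce(xor_bytes, chunks)]


def read(stored: list[Optional[bytes]], k: int, size: int) -> bytes:
    """Return the original `size` bytes from k data chunks + 1 parity chunk.

    Healthy read: the data chunks are concatenated as-is (no decoding math).
    Degraded read: a single missing data chunk is rebuilt by XOR-ing the rest.
    """
    if sum(c is None for c in stored) > 1:
        raise ValueError("single XOR parity can only recover one lost chunk")
    data_chunks = list(stored[:k])
    if None in data_chunks:
        missing = data_chunks.index(None)
        survivors = [c for c in stored if c is not None]
        data_chunks[missing] = reduce(xor_bytes, survivors)
    return b"".join(data_chunks)[:size]
```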

Results from the standalone RGW and co-located (1x) RGW tests were very similar. However, just by adding one more co-located RGW instance (2x), performance improved by ~200% for small objects and ~90% for large objects.
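Assuming these percentages follow the standard percent-change definition, i.e. relative gain over the co-located (1x) result with $T$ the measured read throughput, they convert to throughput multiples as follows:

$$
\text{gain} = \frac{T_{2\times} - T_{1\times}}{T_{1\times}} \times 100\%
\quad\Longleftrightarrow\quad
\frac{T_{2\times}}{T_{1\times}} = 1 + \frac{\text{gain}}{100\%}
$$

Under that reading, a ~200% gain corresponds to roughly $3\times$ the 1x throughput for small objects, and a ~90% gain to roughly $1.9\times$ for large objects.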

As such, if the workload is read-intensive, running multiple co-located (2x) RGW instances could boost overall read performance significantly.

Chart 3: Small Object 100% Read test

Chart 4: Large Object 100% Read test

(II) RGW thread pool sizing guidelines

One RGW tuning parameter that is very relevant when deciding CPU core allocation per RGW instance is rgw_thread_pool_size, which controls the number of threads Beast spawns to service HTTP requests. This effectively limits the number of concurrent connections the Beast front end can service.
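For reference, the sketch below renders a per-instance ceph.conf section carrying this setting. Section names of the form `client.rgw.<name>` follow the usual Ceph convention, but the instance names, the ports, and the helper itself are hypothetical; in a Ceph-Ansible deployment you would normally set such overrides through the playbooks rather than by editing the file by hand.

```python
import configparser
import io


def rgw_conf_section(instance: str, thread_pool_size: int, port: int) -> str:
    """Render a ceph.conf-style section for one RGW instance (hypothetical helper)."""
    conf = configparser.ConfigParser()
    conf.optionxform = str  # preserve option-name case exactly as written
    conf[f"client.rgw.{instance}"] = {
        # Each co-located instance needs its own listening port.
        "rgw_frontends": f"beast port={port}",
        # Number of threads this instance spawns to service HTTP requests.
        "rgw_thread_pool_size": str(thread_pool_size),
    }
    buf = io.StringIO()
    conf.write(buf)
    return buf.getvalue()


if __name__ == "__main__":
    # Two co-located instances on a hypothetical host named "node1".
    for idx, port in enumerate((8080, 8081)):
        print(rgw_conf_section(f"node1.rgw{idx}", thread_pool_size=512, port=port))
```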

To identify the most appropriate value for this tunable, we ran tests varying rgw_thread_pool_size together with the CPU core count per RGW instance. As shown in Chart 5 and Chart 6, setting rgw_thread_pool_size to 512 delivered maximum performance at 4 CPU cores per RGW instance. Further increasing the CPU core count and rgw_thread_pool_size did not improve performance.

We acknowledge that this test would have been more complete with a few more rounds at rgw_thread_pool_size values lower than 512. Our hypothesis is that because Beast is an asynchronous, c10k-style web server, it does not need a thread per connection and should therefore perform just as well with fewer threads. Unfortunately, we were not able to test this, but we plan to in the future.
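The idea behind that hypothesis can be illustrated with Python's asyncio (not Beast's own C++ code): the sketch below serves many concurrent connections from a single event-loop thread, because each connection is a coroutine rather than a thread. The echo protocol, the connection count, and the use of an OS-assigned port are arbitrary choices for the demo.

```python
import asyncio
import threading


async def client(port: int, i: int) -> bytes:
    """Open one connection, send one line, and return the echoed reply."""
    reader, writer = await asyncio.open_connection("127.0.0.1", port)
    writer.write(f"req {i}\n".encode())
    await writer.drain()
    reply = await reader.readline()
    writer.close()
    await writer.wait_closed()
    return reply


async def main(n_clients: int = 100) -> tuple[int, int]:
    """Serve n_clients concurrent connections; return (correct replies, threads used)."""
    handler_threads: set[int] = set()

    async def handle(reader: asyncio.StreamReader,
                     writer: asyncio.StreamWriter) -> None:
        # Each connection is a coroutine on the event loop, not a new thread.
        handler_threads.add(threading.get_ident())
        writer.write(await reader.readline())
        await writer.drain()
        writer.close()
        await writer.wait_closed()

    server = await asyncio.start_server(handle, "127.0.0.1", 0, backlog=n_clients)
    port = server.sockets[0].getsockname()[1]  # port 0 = let the OS pick one
    async with server:
        replies = await asyncio.gather(*(client(port, i) for i in range(n_clients)))
    correct = sum(r == f"req {i}\n".encode() for i, r in enumerate(replies))
    return correct, len(handler_threads)


if __name__ == "__main__":
    served, threads = asyncio.run(main())
    print(f"{served} connections served by {threads} handler thread(s)")
```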

Overall, the multi-co-located (2x) RGW deployment, with 4 CPU cores per RGW instance and rgw_thread_pool_size set to 512, delivered the maximum performance.
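Translated into a per-node CPU budget, this recommendation reserves 2 × 4 = 8 cores for RGW containers on each storage node. The sketch below does that bookkeeping; the helper is hypothetical, the node core count is an input you supply, and whether the remaining cores suffice for your OSDs is outside what these tests measured.

```python
def cores_left_after_rgw(node_cores: int, rgw_instances: int = 2,
                         cores_per_rgw: int = 4) -> int:
    """Return the CPU cores left for the other co-located daemons (OSD, MON,
    MGR) once the RGW containers have been given their cores."""
    if min(node_cores, rgw_instances, cores_per_rgw) < 0:
        raise ValueError("counts must be non-negative")
    reserved = rgw_instances * cores_per_rgw
    if reserved > node_cores:
        raise ValueError(
            f"{rgw_instances} RGW x {cores_per_rgw} cores needs {reserved} "
            f"cores, but the node has only {node_cores}")
    return node_cores - reserved


if __name__ == "__main__":
    # The node size here is a hypothetical input, not a tested configuration.
    print(cores_left_after_rgw(node_cores=40))  # 40 - 2*4 = 32
```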

Chart 5: Small Object 100% Write test

Chart 6: Large Object 100% Write test

Summary and up next

In this post, we learned that a multi-co-located (2x) RGW deployment with 4 CPU cores per RGW instance and rgw_thread_pool_size set to 512 delivers maximum performance without increasing overall hardware cost. In the next post, we will look at how to achieve maximum object storage performance from a fixed-size cluster.
