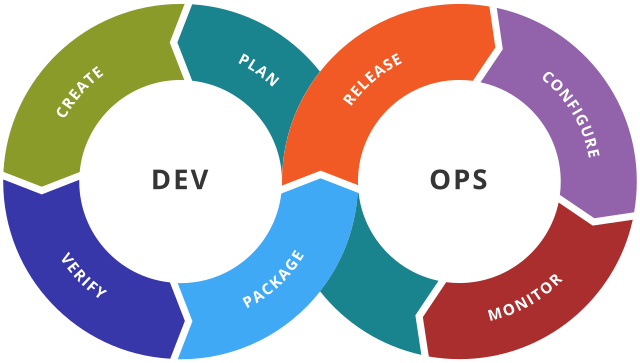

DevOps is a philosophy, a culture shift, and a way to break down silos between teams. With it, teams that have historically been divided by responsibilities can collaborate toward a common goal: delivering software faster than is possible with agile practices alone. This article describes how I implement DevSecOps, which adds security to the usual DevOps practices.

The journey

Security issues change across the different stages of the journey—the more complex the environment, the more dynamic the challenges. The following outlines my typical milestones along the DevSecOps route.

1. Individual developer and project

In this early stage of the journey, individuals on my team are getting their hands dirty and gaining experience with the task at hand, such as building container images. At this stage, I apply three principles: secure coding, a shift-left security mindset, and software supply chain security.

Secure coding

Secure coding is the practice of developing software in a way that guards against accidentally introducing security vulnerabilities. Most commonly exploited software vulnerabilities stem from defects, bugs, and logic flaws.

I train developers on the importance of secure coding principles and how to apply them during development. Developers help enforce high-quality code with regular compliance checks and security testing. The Open Web Application Security Project (OWASP) Secure Coding Practices checklist offers guidelines about what to look for, including input validation, authentication and password management, access control, and data protection.
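
Input validation, the first item on that checklist, is easiest to see in code. The following is a minimal Python sketch of allowlist validation, assuming a hypothetical username field whose rules (3 to 32 letters, digits, underscores, or hyphens) stand in for your own specification:

```python
import re

# Hypothetical allowlist for a "username" field: 3-32 characters drawn
# only from letters, digits, underscore, and hyphen.
USERNAME_PATTERN = re.compile(r"[A-Za-z0-9_-]{3,32}")


def validate_username(raw: str) -> str:
    """Return the username if it matches the allowlist, else raise ValueError.

    Run this on the server side, before the value reaches any query,
    file path, or shell command.
    """
    if not isinstance(raw, str):
        raise ValueError("username must be a string")
    # fullmatch() anchors the pattern to the whole string, so trailing
    # payloads such as "alice; DROP TABLE users" are rejected.
    if USERNAME_PATTERN.fullmatch(raw) is None:
        raise ValueError("username has disallowed characters or invalid length")
    return raw
```

Accepting only input that matches a known-good pattern (an allowlist) is generally safer than trying to enumerate bad input (a denylist), because an attacker needs only one pattern the denylist missed.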

Shift-left security

With traditional security controls, the security team doesn't become involved until the "operate" and "monitor" stages at the end of the software development lifecycle (SDLC). This creates security debt, increases the risk of exposure, and makes fixing code in later development stages, or even in production, more costly and difficult.

Shift-left security's objective is to identify vulnerable code, libraries, and dependencies and to fix them before deployment. When the software is ready to be pushed to operations, I run it through an automated build that incorporates security checks. I also have my developers implement a code check, or "gate," before they make a commit. If the code doesn't meet specific requirements, it isn't permitted to pass through the gate, and the developer reviews it to correct any issues.
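
A pre-commit gate can be as simple as a script that refuses the commit when it finds something it should not. The following Python sketch is one hypothetical example: it scans the files passed to it as arguments for a few illustrative secret patterns and exits with a nonzero status if any match, which aborts the commit when the script is wired into a Git pre-commit hook that passes it the staged file paths. The rule set and function names are assumptions for illustration; dedicated secret scanners and linters cover far more cases.

```python
import re
import sys
from pathlib import Path

# Illustrative rules only; real secret scanners ship much larger rule sets.
RULES = {
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private key block": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    "hardcoded password": re.compile(r"(?i)\bpassword\s*=\s*['\"][^'\"]+['\"]"),
}


def scan_text(name: str, text: str) -> list[str]:
    """Return one finding per rule match, formatted as file:line: rule."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in RULES.items():
            if pattern.search(line):
                findings.append(f"{name}:{lineno}: {rule}")
    return findings


def main(paths: list[str]) -> int:
    """Scan each path; return 1 (block the commit) if anything was found."""
    findings = []
    for path in paths:
        text = Path(path).read_text(encoding="utf-8", errors="ignore")
        findings.extend(scan_text(path, text))
    for finding in findings:
        print(finding, file=sys.stderr)
    return 1 if findings else 0


if __name__ == "__main__":
    sys.exit(main(sys.argv[1:]))
```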

Once the software reaches runtime, I scan it again for vulnerabilities and runtime issues. The idea is to make remediation easy, rather than to cause arguments between security and development, when quality-check guardrails uncover security defects.

Secure software supply chain

Developers often use third-party software components (open source or commercial) to increase productivity instead of reinventing the wheel, which lets them focus their efforts on innovation and development.

Because it is important to ensure that those third-party components have not been tampered with, Red Hat conceived the sigstore project, which is overseen by the Linux Foundation. Sigstore provides tooling to digitally sign software artifacts and verify their origin, protecting users against tampering in the software supply chain.
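
Sigstore's signing flow (short-lived certificates plus a public transparency log) is more than a short example can show. As a simpler stand-in for the underlying question, "is this the artifact I expected?", the following Python sketch checks a downloaded file against a pinned SHA-256 digest. This is plain digest pinning, not sigstore, and the function names are hypothetical:

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 65536) -> str:
    """Hash the file in chunks so large artifacts need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, pinned_sha256: str) -> bool:
    """Return True only if the file's digest matches the pinned value.

    The pinned digest should come from a trusted source (for example, a
    lockfile reviewed in version control), not from the server that
    delivered the artifact.
    """
    # compare_digest avoids leaking where the strings first differ.
    return hmac.compare_digest(sha256_of(path), pinned_sha256.lower())
```

A matching digest proves the bytes are unchanged, but not who produced them; that provenance is what signatures, such as those sigstore provides, add on top.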

2. Official project

In this second stage, several developers contribute their code and ideas to the official project. I want proper governance and policy in place so that everyone adheres to the same standard.

I look at several areas:

- Code quality: Beyond checking that the code meets the software specification and is useful, I focus on code quality. This practice is essential to keeping the codebase maintainable, easy to understand, secure, and reliable. I use tools such as SonarQube as the quality gate in my SDLC pipeline.

- Vulnerability management: It is essential to respond quickly to vulnerabilities, especially Common Vulnerabilities and Exposures (CVEs) with a high Common Vulnerability Scoring System (CVSS) score, to minimize the attack surface. StackRox is one tool I use to enforce policies and block activity that does not meet specified security criteria.

- Security configuration hygiene: Both platform and application configurations need to be kept in a hygienic state. The Center for Internet Security (CIS) Benchmarks are one standard I use to implement security hygiene. Red Hat OpenShift Container Platform (RHOCP) provides the Compliance Operator, and StackRox can help me remediate issues by suggesting fixes based on security benchmarks.
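
The vulnerability-management item above boils down to a policy decision per image. The Python sketch below shows that decision logic under assumed inputs: the `Finding` structure, the 7.0 threshold (where the CVSS v3 "High" rating begins), and the rule that only fixable findings block deployment are all illustrative choices, not StackRox's policy format:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """Hypothetical scan result for one vulnerability in an image."""
    cve_id: str
    cvss: float    # CVSS base score, 0.0 to 10.0
    fixable: bool  # True if a fixed package version is available


def violations(findings: list[Finding], threshold: float = 7.0) -> list[Finding]:
    """Return the findings that should block deployment.

    Assumed policy: block fixable CVEs at or above the threshold and
    leave unfixable ones to a separate risk-acceptance review.
    """
    return [f for f in findings if f.fixable and f.cvss >= threshold]


def admit(findings: list[Finding], threshold: float = 7.0) -> bool:
    """Return True if the image may be deployed under this policy."""
    return not violations(findings, threshold)
```

For example, an image with a fixable 9.8 finding is rejected, while one whose only high-scoring finding has no fix yet is admitted and flagged for review elsewhere. The CVE IDs in any test data should be placeholders, not real advisories.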

3. Production

There's a lot to look at during the production stage. From the operational aspect, this includes:

- Kubernetes components: Vulnerabilities in the Kubernetes components themselves, such as the API server, kubelet, and etcd, must also be considered within the security lifecycle.

- Pod and runtime: A pod needs certain privileges to run. For example, RHOCP's SecurityContextConstraints (SCCs) control which privileges a pod can have. Assigning privileges wrongly is dangerous: if the pod is compromised, an attacker can use those privileges to attack the host. Runtime security helps mitigate such attacks by detecting and preventing malicious activity in the environment.

- Compliance: When there are specific requirements for my workload, I often look at the associated compliance benchmark and framework. These include the Payment Card Industry Data Security Standard (PCI DSS), Federal Risk and Authorization Management Program (FedRAMP), CIS Benchmarks, and the Health Insurance Portability and Accountability Act (HIPAA).

- Segmentation: Because Kubernetes is a multitenant platform, isolation is important to restrict traffic to and from pods in case a namespace is compromised. Implementing a zero-trust network and microsegmentation helps maintain that segmentation. I find that extending applications to use service mesh functionality (like Istio) further strengthens isolation.
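
In OpenShift, SCCs enforce pod privileges at admission time. To illustrate what a restrictive policy looks for, here is a Python sketch that audits a Pod manifest (already parsed into a dictionary) for a few risky settings. The field names (`hostNetwork`, `hostPID`, `hostIPC`, `privileged`, `allowPrivilegeEscalation`, `runAsUser`, `runAsNonRoot`) come from the Kubernetes Pod API; which settings to flag, and the `audit_pod` function itself, are assumptions:

```python
def audit_pod(pod: dict) -> list[str]:
    """Flag risky privilege settings in a Pod manifest parsed into a dict.

    The checks loosely mirror what a restrictive SCC would deny; they are
    an illustrative subset, not a complete policy.
    """
    spec = pod.get("spec", {})
    issues = []
    # Sharing host namespaces lets a compromised pod see or reach the node.
    for field in ("hostNetwork", "hostPID", "hostIPC"):
        if spec.get(field):
            issues.append(f"pod: {field} is enabled")
    pod_ctx = spec.get("securityContext", {})
    for container in spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        ctx = container.get("securityContext", {})
        if ctx.get("privileged"):
            issues.append(f"{name}: privileged container")
        # Treat unset as true: Kubernetes only sets no_new_privs when
        # allowPrivilegeEscalation is explicitly false.
        if ctx.get("allowPrivilegeEscalation", True):
            issues.append(f"{name}: allowPrivilegeEscalation is not false")
        # Container-level values override pod-level ones.
        run_as_user = ctx.get("runAsUser", pod_ctx.get("runAsUser"))
        run_as_non_root = ctx.get("runAsNonRoot", pod_ctx.get("runAsNonRoot"))
        if run_as_user == 0 or not run_as_non_root:
            issues.append(f"{name}: may run as root")
    return issues
```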

4. Expansion

At this stage, I might expand my Kubernetes environment and scale up. As the size of the environment increases, the complexity and risk also increase.

To reduce complexity and risk, I implement standardization and start to automate most tasks:

- Automation: With a decent-sized team running and maintaining the project, I use automation to keep up with the environment's expansion and its dynamic parts. I rely on declarative approaches such as GitOps, and I manage multicluster Kubernetes from a single pane of glass with tools like Red Hat Advanced Cluster Management for Kubernetes.

- Standardization: Maintaining the same standard across my workflow is important, whether I'm provisioning a new cluster or responding to an incident. This way, I can recover faster while significantly reducing operational and security debt.
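
Standardization becomes checkable once the standard is written down. This Python sketch compares each cluster's settings against a baseline and reports only the clusters that deviate. The setting names in `BASELINE` and the shape of the per-cluster configuration are hypothetical placeholders; a multicluster manager such as Red Hat Advanced Cluster Management expresses this through its own governance policies rather than code like this:

```python
# Hypothetical baseline; keys and values are placeholders for whatever
# your organization actually standardizes on.
BASELINE = {
    "audit_logging": True,
    "network_policy_default_deny": True,
    "compliance_profile": "cis",
}


def drift_from_baseline(cluster_config: dict, baseline: dict = BASELINE) -> dict:
    """Return {setting: (expected, actual)} for each unmet baseline setting."""
    return {
        key: (expected, cluster_config.get(key))
        for key, expected in baseline.items()
        if cluster_config.get(key) != expected
    }


def fleet_report(clusters: dict[str, dict]) -> dict[str, dict]:
    """Map each non-compliant cluster to its drift; omit compliant ones."""
    report = {}
    for name, config in clusters.items():
        drift = drift_from_baseline(config)
        if drift:
            report[name] = drift
    return report
```

Because the same baseline applies to a freshly provisioned cluster and to one under incident response, the report doubles as a checklist for both.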

5. Organizational standard

This is the stage when almost all applications are built based on cloud-native principles and deployed on top of Kubernetes.

Organizations might adopt new technologies, such as service mesh, that provide advanced traffic management, observability, and service security. A service mesh secures service-to-service communication with authentication capabilities. It also offers deep observability based on instrumentation in my code through the OpenTracing library.
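
A mesh such as Istio handles service-to-service authentication in its sidecar proxies with mesh-issued certificates, so application code usually does not change. To show what that authentication means at the connection level, here is a Python sketch of a server-side mutual TLS (mTLS) context built with the standard `ssl` module. The function name and the certificate, key, and CA file paths are hypothetical inputs:

```python
import ssl
from typing import Optional


def mtls_server_context(cert_file: Optional[str] = None,
                        key_file: Optional[str] = None,
                        client_ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Build a server-side TLS context that rejects clients without a valid certificate."""
    # PROTOCOL_TLS_SERVER starts with no trusted CAs, so only the CA
    # loaded below can vouch for client identities.
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # The difference from one-way TLS: the client must present a certificate too.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file and key_file:
        # The server's own identity, presented to connecting clients.
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if client_ca_file:
        # Only client certificates issued by this CA are accepted.
        ctx.load_verify_locations(cafile=client_ca_file)
    return ctx
```

The key line is `verify_mode = ssl.CERT_REQUIRED`: without it, a server context does not request a client certificate at all, so the connection is encrypted but not mutually authenticated.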

Software delivery and packaging may also have evolved from a simple continuous integration/continuous delivery (CI/CD) pipeline to an operator's custom resource definition (CRD), or to Helm combined with GitOps. An application deployed with an operator comes with a set of configurations that the operator controls. Its watchdog logic reconciles any manual intervention or configuration drift to maintain the desired state.
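
The operator's watchdog behavior is a reconcile loop: read the observed state, compare it with the desired state, and correct any drift. Operators are typically written in Go against the Kubernetes API (for example, with the Operator SDK); the Python sketch below strips that down to dictionaries and injected read and write functions, all hypothetical, to show only the reconcile idea:

```python
import time
from typing import Callable


def reconcile(desired: dict, observed: dict) -> tuple[dict, dict]:
    """One reconcile pass: return (new_state, corrections_applied).

    Keys present only in the observed state are left alone here; a
    stricter operator might prune them.
    """
    corrections = {k: v for k, v in desired.items() if observed.get(k) != v}
    # Build a new dict so the caller's observed state is not mutated.
    new_state = {**observed, **corrections}
    return new_state, corrections


def watch(desired: dict,
          read: Callable[[], dict],
          write: Callable[[dict], None],
          interval_s: float = 30.0,
          passes: int = 3) -> int:
    """Run a bounded number of reconcile passes; return total corrections.

    A real operator is event-driven and runs indefinitely; `passes` only
    keeps this sketch finite.
    """
    total = 0
    for _ in range(passes):
        new_state, corrections = reconcile(desired, read())
        if corrections:
            write(new_state)
            total += len(corrections)
        time.sleep(interval_s)
    return total
```

Because each pass compares against the full desired state, a manual `replicas` change made between passes is simply undone on the next one, which is exactly the drift correction described above.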

Protect your organization

Whether you have a legacy or a cloud-native workload, security is essential to protect your data and organization. What changes with modern containerized workloads built on cloud-native principles is that legacy security approaches are no longer effective. Each DevSecOps stage has its own security context and approach, but the fundamental idea is the same: protect the organization during build, deployment, and runtime.

About the author

Muhammad has spent almost 15 years in the IT industry at organizations including a PCI-DSS secure hosting company, a system integrator, a managed services organization, and a principal vendor. He has a deep interest in emerging technology, especially containers and the security domain. Currently, he is an Associate Principal Consultant in the Red Hat Global Professional Services (GPS) organization, where he helps organizations adopt container technology and DevSecOps practices.