Why not every GPU can live in the datacenter

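Traditionally, a centralized IT architecture has been the preferred way to handle scale, management, and environmental concerns. This approach typically looks like this:

- A datacenter that houses large amounts of hardware

- There is almost always room to add nodes or hardware, and all of it can be managed locally (sometimes even on the same subnet)

- Power, cooling, and connectivity are continuous and redundant

Why fix what isn't broken? Nothing is wrong with this solution, but a single solution rarely fits every situation. Consider quality control in manufacturing as an example.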

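Manufacturing quality control

A factory or assembly line can have hundreds, sometimes thousands, of stations where a specific task is performed. Under the traditional model, each digital step or physical tool not only performs its task but also has to send the result to a central application in a distant cloud. That raises questions such as:

- Speed: How long does it take to capture a photo, upload it to the cloud, analyze it in the central application, send a response, and act on it? Do you run slowly and lose revenue? Do you run fast and accept the risk of more errors or accidents? Are decisions made in real time, or only near real time?

- Volume: How much network bandwidth is needed for every single sensor to continuously upload or download raw data streams? Is that feasible? Is it prohibitively expensive?

- Reliability: What happens when the network connection drops? Does the entire factory stop?

- Scale: Assuming business is good, can the central datacenter scale to process all raw data from every device at every location? If so, what does it cost?

- Security: Is any of the raw data sensitive? Is it permissible to move that raw data to another region or to store it anywhere? Does it need to be encrypted before it is streamed or analyzed?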
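The speed and volume questions above can be roughed out with simple arithmetic. The following is a minimal sketch rather than a sizing tool: the function names and every number in it (500 cameras, 2 MB images, the latency figures) are hypothetical assumptions chosen for illustration, not measurements from a real plant.

```python
def raw_uplink_mbps(sensors: int, image_bytes: int, images_per_second: float) -> float:
    """Sustained uplink (megabits/s) if every sensor streams raw images to the cloud."""
    bits_per_second = sensors * image_bytes * 8 * images_per_second
    return bits_per_second / 1_000_000


def round_trip_ms(upload_ms: float, analysis_ms: float, response_ms: float) -> float:
    """Time from capturing a photo to receiving a decision, ignoring queueing."""
    return upload_ms + analysis_ms + response_ms


# Hypothetical plant: 500 cameras, 2 MB per image, 1 image per second each.
print(raw_uplink_mbps(500, 2_000_000, 1.0))  # 8000.0 Mbit/s, i.e. 8 Gbit/s

# Hypothetical cloud round trip versus on-site inspection (all values assumed).
print(round_trip_ms(upload_ms=40.0, analysis_ms=15.0, response_ms=40.0))  # 95.0
print(round_trip_ms(upload_ms=1.0, analysis_ms=15.0, response_ms=1.0))    # 17.0
```

Plugging in your own sensor counts and payload sizes gives a quick sense of whether streaming everything to a central site is feasible at all.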

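Moving to the edge

If answering any of the questions above gives you pause, edge computing is worth considering. Put simply, edge computing moves smaller, latency-sensitive, or private application functions out of the datacenter and places them next to where the actual work is done. Edge computing is already commonplace: it exists in the cars we drive and in the phones in our pockets. It turns the scale problem into an advantage.

Return to the manufacturing example. A small cluster near each assembly line can mitigate every concern above.

- Speed: Photos of completed tasks are reviewed on site, using local hardware that can operate with lower latency.

- Volume: Bandwidth to external sites drops significantly, which reduces recurring costs.

- Reliability: Even if the wide area network connection drops, work continues locally and can sync back to the central cloud once the connection is reestablished.

- Scale: Whether you operate 2 factories or 200, the required resources sit at each location, which reduces the need to overbuild the central datacenter just to handle peak times.

- Security: Raw data stays on-premises, which reduces the potential attack surface.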
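The reliability point above describes a store-and-forward pattern: keep working locally, queue results, and upload them when the link returns. Here is a minimal sketch of that idea. `EdgeBuffer`, `FakeUplink`, and the record fields are hypothetical names invented for this illustration, and the assumption that a failed upload raises `ConnectionError` is ours; a real deployment would more likely rely on a messaging layer than on an in-memory queue.

```python
from collections import deque
from typing import Callable, Deque, Dict, List


class EdgeBuffer:
    """Queue inspection results locally and forward them when the WAN link is up."""

    def __init__(self, send: Callable[[Dict], None], max_items: int = 10_000) -> None:
        self._send = send
        # Bounded queue: when full, the oldest entry is dropped on append.
        self._pending: Deque[Dict] = deque(maxlen=max_items)

    def record(self, result: Dict) -> None:
        """Local work never blocks on the network; results are only queued."""
        self._pending.append(result)

    def flush(self) -> int:
        """Upload pending results in order; stop at the first failure."""
        sent = 0
        while self._pending:
            try:
                self._send(self._pending[0])
            except ConnectionError:
                break  # link is down; keep the item for the next attempt
            self._pending.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        return len(self._pending)


class FakeUplink:
    """Stand-in for the central cloud endpoint (assumption for the demo)."""

    def __init__(self) -> None:
        self.up = False
        self.received: List[Dict] = []

    def send(self, item: Dict) -> None:
        if not self.up:
            raise ConnectionError("WAN link down")
        self.received.append(item)


link = FakeUplink()
buf = EdgeBuffer(link.send)
buf.record({"part": 1, "defect": False})
buf.record({"part": 2, "defect": True})
print(buf.flush(), buf.pending())  # nothing sent while the link is down
link.up = True
print(buf.flush(), buf.pending())  # queued results are synced after reconnect
```

The bounded queue is a deliberate trade-off in this sketch: during a very long outage it drops the oldest results instead of exhausting memory on a small edge node.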

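What does edge + public cloud look like?

Red Hat OpenShift is a leading enterprise Kubernetes platform that provides a flexible environment for placing applications and infrastructure where they are needed most. In this case, the same application can run in a centralized public cloud and can also move out to the assembly line itself, where it can quickly collect data, compute, and act. This is machine learning (ML) at the edge. Let's look at three examples of combining edge computing with private and public clouds. The first combines edge computing with the public cloud.

- Step 1: Data collection happens on site, where the raw data is gathered. Sensors and Internet of Things (IoT) devices that take measurements or perform work can connect to localized edge servers using Red Hat AMQ streams or AMQ broker components. Depending on application requirements, these edge servers can range from small single nodes to large high-availability clusters. Best of all, you can mix and match: small nodes in remote locations and larger clusters where there is more room.

- Step 2: The real work happens during data preparation, modeling, and application development and delivery. Data is ingested, stored, and analyzed. In the assembly line example, widget images are analyzed for patterns, such as defects in materials or processes. This is where the actual learning takes place. The resulting insights are then integrated back into the cloud-native applications that reside at the edge. Note that not all of this work is done at the edge: running intensive central processing unit and graphics processing unit (CPU/GPU) workloads on dense, centralized clusters can speed up the process by days or even weeks compared with running them on lightweight edge devices.

- Step 3: Developers use Red Hat OpenShift Pipelines and GitOps to continuously improve applications through continuous integration/continuous delivery (CI/CD), accelerating the process as much as possible. The sooner new knowledge is put to use, the more time and resources can be focused on generating revenue. That brings us back to the edge, where the new (or newly updated) AI-powered application observes and collects data, then compares it against the latest model using the new knowledge. The cycle repeats as part of continuous improvement.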

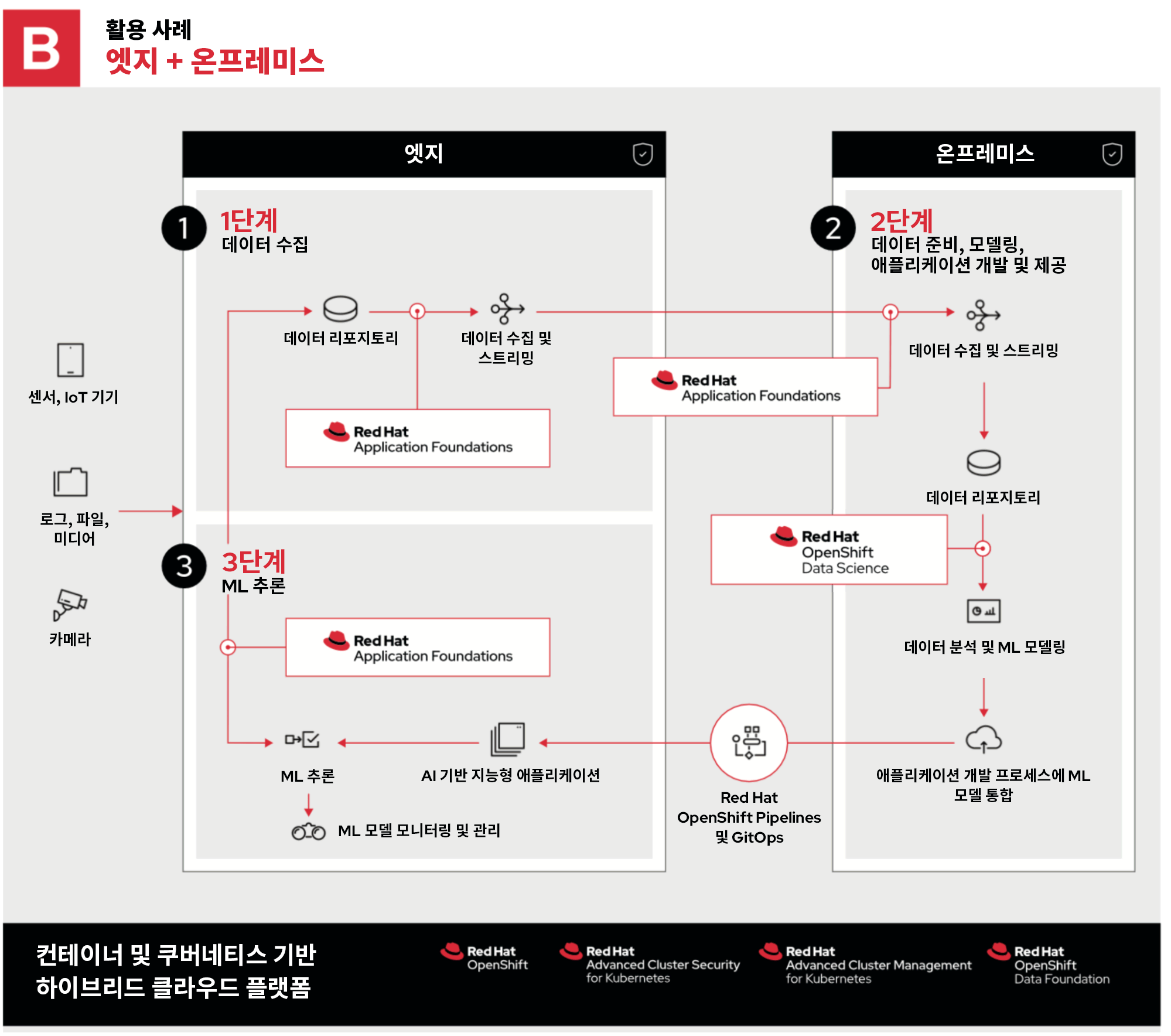
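The three-step cycle above (collect at the edge, learn centrally, deliver the update back to the edge) can be sketched in a few lines. This is a toy illustration under stated assumptions, not how OpenShift or OpenShift Pipelines implement it: `Model`, `train_centrally`, and `EdgeNode` are hypothetical names, and a simple statistical threshold stands in for real ML training on images.

```python
from dataclasses import dataclass
from statistics import mean, pstdev
from typing import List


@dataclass(frozen=True)
class Model:
    version: int
    threshold: float  # measurements above this are flagged as defects


def train_centrally(good_samples: List[float], version: int, k: float = 3.0) -> Model:
    """Step 2 (central cluster): fit a toy threshold from known-good measurements."""
    return Model(version=version, threshold=mean(good_samples) + k * pstdev(good_samples))


class EdgeNode:
    """Steps 1 and 3 (edge): collect data and score it with the deployed model."""

    def __init__(self, model: Model) -> None:
        self.model = model
        self.collected: List[float] = []  # raw data kept on site for later training

    def inspect(self, measurement: float) -> bool:
        """Score locally, with no round trip to the cloud. True means defect."""
        self.collected.append(measurement)
        return measurement > self.model.threshold

    def deploy(self, model: Model) -> None:
        """Accept a delivered update only if it is newer than the current model."""
        if model.version > self.model.version:
            self.model = model


good = [9.0, 11.0]                        # assumed known-good measurements
edge = EdgeNode(train_centrally(good, version=1))
print(edge.inspect(12.0))                 # scored against model version 1
edge.deploy(train_centrally(good, version=2, k=1.0))  # tighter model delivered
print(edge.inspect(12.0))                 # same input, scored against version 2
```

The version check in `deploy` reflects one design choice worth keeping even in a real pipeline: an edge node that reconnects late should never roll back to an older model by accident.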

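What does edge + private cloud look like?

In the second example, the entire process is the same except for step 2, which now resides on-premises in a private cloud.

Organizations may choose a private cloud for reasons such as these:

- They already own the hardware and can make use of existing capital.

- They must comply with strict regulations on data locality and security, so sensitive data cannot be stored in or pass through a public cloud.

- They need custom hardware, such as field-programmable gate arrays (FPGAs), GPUs, or configurations that cannot be rented from a public cloud provider.

- They have specific workloads that cost more to run in a public cloud than on local hardware.

The list above is only a few examples of the flexibility OpenShift provides. It can be as simple as running OpenShift on a Red Hat OpenStack Platform private cloud.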

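What does edge + hybrid cloud look like?

The final example uses a hybrid cloud made up of a public cloud and a private cloud.

Here, steps 1 and 3 are the same. Step 2 is also the same process, but it is split so that each part runs in the environment that suits it best.

- Step 2a ingests, stores, and analyzes the data coming from the edge. It uses the public cloud's resource scale, geographic diversity, and connectivity to collect and interpret the data.

- Step 2b handles application development on-premises, which makes it possible to accelerate, secure, or customize specific development workflows before updates are sent back to the edge.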

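The Red Hat advantage

With so many variables and considerations, a successful edge computing environment requires flexibility. Red Hat provides the tools needed to build flexible solutions tailored so that customers can run the right application in the right place, whatever the situation: slow, unreliable, or nonexistent network connectivity, strict compliance requirements, or extreme performance demands.

Wherever insights arise, whether at the edge, in a well-known public cloud, on-premises in a private cloud, or in all of these places at once, developers can use familiar tools to continuously innovate on cloud-native applications running on OpenShift and run them in the best way, in the best place.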

About the author

Ben has been at Red Hat since 2019, where he has focused on edge computing with Red Hat OpenShift as well as private clouds based on Red Hat OpenStack Platform. Before this he spent a decade doing a mix of sales and product marketing across telecommunications, enterprise storage and hyperconverged infrastructure.
